4 Layers of Agentic AI Systems

...explained visually.

Deploy AI apps by adding a Python decorator [open-source]

Beam is an open-source alternative to Modal that makes deploying serverless AI workloads effortless with zero infrastructure overhead.

Steps:

Install the client: uv add beam-client

Build your AI workflow.

Wrap the invocation in a method.

Decorate with the @endpoint decorator and specify server config.

Key features:

Lightning-fast container launches < 1s

Distributed volume storage support

Auto-scales from 0 to 100s of containers

GPU support (4090s, H100s, or bring your own)

Deploy inference endpoints with simple decorators

Spin up isolated sandboxes for LLM-generated code

Completely open-source!

Beam GitHub repo → (don’t forget to star)

4 Layers of Agentic AI

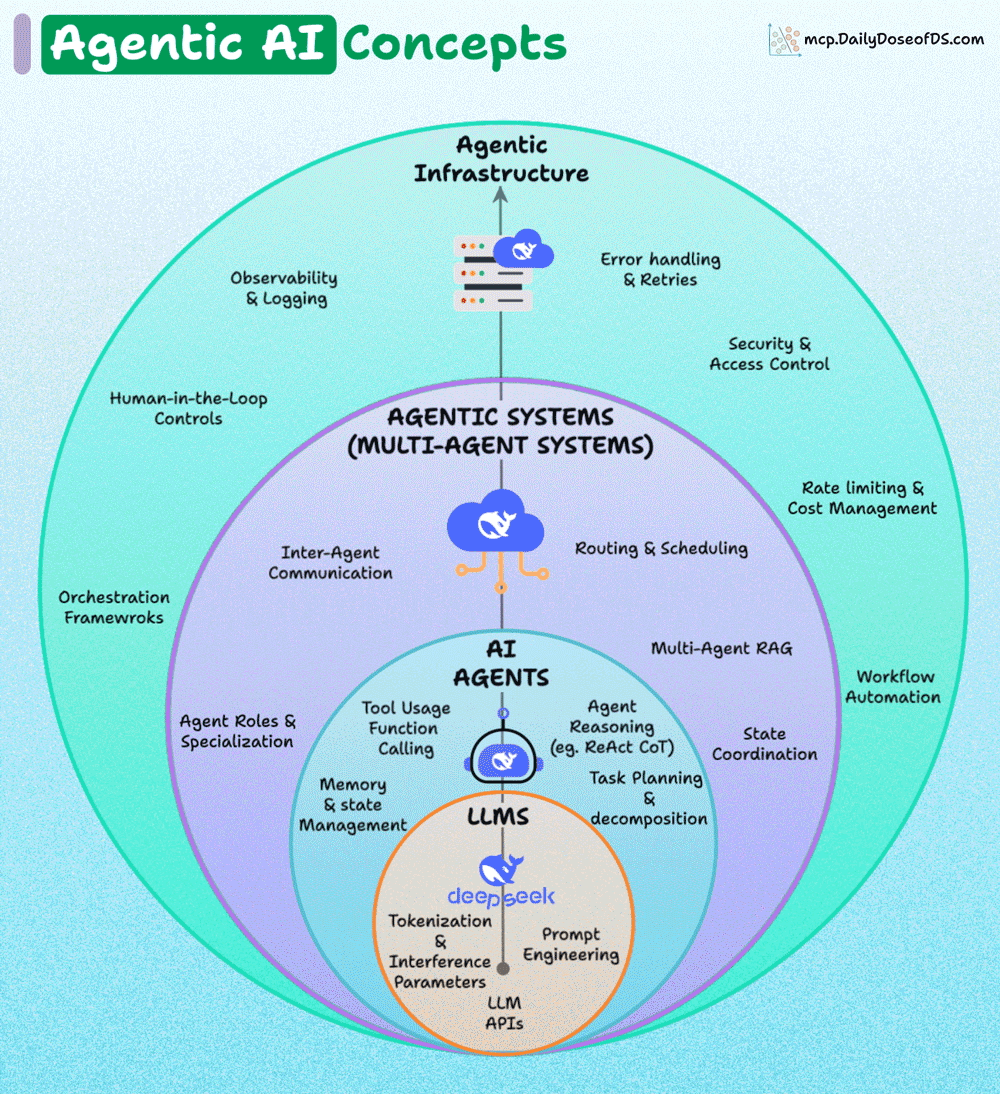

This graphic gives a layered overview of Agentic AI concepts, showing how the ecosystem is structured from the ground up (LLMs) to higher-level orchestration (Agentic Infrastructure).

Let’s break it down layer by layer:

1) LLMs (foundation layer)

At the core, you have LLMs like GPT, DeepSeek, etc.

Core concepts here:

Tokenization & inference parameters: how text is broken into tokens, and how settings such as temperature shape the output the model generates.

Prompt engineering: designing inputs to get better outputs.

LLM APIs: programmatic interfaces to interact with the model.

This is the engine that powers everything else.
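To make the first concept concrete, here is a minimal sketch of tokenization and one inference parameter (temperature). It is a toy: the whitespace tokenizer and the made-up logits are assumptions for illustration, while real LLMs use subword tokenizers and produce logits over a full vocabulary.

```python
import math


def toy_tokenize(text: str) -> list[str]:
    """Toy whitespace tokenizer; real LLMs use subword schemes such as BPE."""
    return text.lower().split()


def softmax_with_temperature(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


tokens = toy_tokenize("Agents wrap around LLMs")

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
sharp = softmax_with_temperature(logits, temperature=0.5)  # more deterministic
flat = softmax_with_temperature(logits, temperature=2.0)   # more varied

print(tokens)
print([round(p, 3) for p in sharp])
print([round(p, 3) for p in flat])
```

A low temperature concentrates probability on the top-scoring token, while a high temperature spreads it out, which is why the same prompt can produce more varied outputs at higher settings.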

2) AI Agents (built on LLMs)

Agents wrap around LLMs to give them the ability to act autonomously.

Key responsibilities:

Tool usage & function calling: connecting the LLM to external APIs/tools.

Agent reasoning: reasoning methods like ReAct (reasoning + acting) or Chain-of-Thought.

Task planning & decomposition: breaking a big task into smaller ones.

Memory management: keeping track of history, context, and long-term info.

Agents are the brains that make LLMs useful in real-world workflows.
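The responsibilities above can be sketched as a small agent loop. This is an illustrative sketch, not any framework's API: `scripted_llm` is a hypothetical stand-in for a real model call, and the `add` tool, the message format, and `run_agent` are assumed names.

```python
from typing import Callable


def add(a: float, b: float) -> float:
    """A trivial tool the agent is allowed to call."""
    return a + b


# Tool registry: maps the name the model emits to a Python callable.
TOOLS: dict[str, Callable[..., float]] = {"add": add}


def scripted_llm(history: list[dict]) -> dict:
    """Stand-in for a real model: requests a tool first, then gives a final answer."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "name": "add", "args": {"a": 2, "b": 3}}
    return {"type": "final", "content": f"The sum is {tool_results[-1]['content']}"}


def run_agent(task: str, llm=scripted_llm, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": task}]  # short-term memory
    for _ in range(max_steps):
        decision = llm(history)  # reason: decide the next step
        if decision["type"] == "final":
            return decision["content"]
        tool = TOOLS[decision["name"]]  # act: call the requested tool
        result = tool(**decision["args"])
        history.append({"role": "tool", "content": result})  # observe the result
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("What is 2 + 3?")
print(answer)
```

The loop alternates between deciding and acting, which is the core idea behind ReAct-style agents. The `max_steps` cap is a design choice to keep a confused model from looping forever.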

3) Agentic systems (multi-agent systems)

When you combine multiple agents, you get agentic systems.

Features:

Inter-agent communication: agents talking to each other, using protocols like ACP or A2A where needed.

Routing & scheduling: deciding which agent handles what, and when.

State coordination: ensuring consistency when multiple agents collaborate.

Multi-Agent RAG: using retrieval-augmented generation across agents.

Agent roles & specialization: agents with distinct purposes.

Orchestration frameworks: tools like CrewAI for building workflows.

This layer is about collaboration and coordination among agents.
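Here is a minimal sketch of routing, role specialization, and state coordination in plain Python. Everything in it is hypothetical (the agent functions, the keyword router, and `SharedState`). An orchestration framework would replace most of this with its own abstractions.

```python
from dataclasses import dataclass, field


@dataclass
class SharedState:
    """State every agent can read and append to, so their work stays consistent."""
    notes: list[str] = field(default_factory=list)


def research_agent(task: str, state: SharedState) -> str:
    state.notes.append(f"researcher handled: {task}")
    return f"[research] findings for '{task}'"


def writer_agent(task: str, state: SharedState) -> str:
    state.notes.append(f"writer handled: {task}")
    return f"[writer] draft for '{task}'"


# Specialized agents, keyed by role.
AGENTS = {"research": research_agent, "write": writer_agent}


def route(task: str) -> str:
    """Keyword-based router; a real system might ask an LLM to choose the agent."""
    return "research" if "find" in task.lower() else "write"


def run_pipeline(tasks: list[str]) -> tuple[list[str], SharedState]:
    state = SharedState()
    outputs = [AGENTS[route(t)](t, state) for t in tasks]  # dispatch in order
    return outputs, state


outputs, state = run_pipeline(["Find recent papers on RAG", "Summarize the results"])
print(outputs)
print(state.notes)
```

Passing one `SharedState` object through every call is the simplest form of state coordination. With concurrent agents, you would need locking or a proper store instead.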

4) Agentic Infrastructure

The top layer ensures these systems are robust, scalable, and safe.

This includes:

Observability & logging: tracking performance and outputs (using frameworks like DeepEval).

Error handling & retries: resilience against failures.

Security & access control: ensuring agents don’t overstep.

Rate limiting & cost management: controlling resource usage.

Workflow automation: integrating agents into broader pipelines.

Human-in-the-loop controls: allowing human oversight and intervention.

This layer ensures trust, safety, and scalability for enterprise/production environments.
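A few of these controls can be sketched with the standard library alone. The snippet below is an assumption-laden illustration: `call_with_retries`, `requires_approval`, and the simulated flaky step are hypothetical names, and production systems typically rely on dedicated observability and policy tooling.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent-infra")


def call_with_retries(fn, max_attempts: int = 3, base_delay: float = 0.01):
    """Retry fn with exponential backoff, logging each failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except Exception as exc:
            log.warning("attempt %d/%d failed: %s", attempt, max_attempts, exc)
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the caller
            time.sleep(base_delay * 2 ** (attempt - 1))


def requires_approval(action: str, approve) -> bool:
    """Human-in-the-loop gate: `approve` is any callable returning True or False."""
    return bool(approve(action))


attempts = {"count": 0}


def flaky_agent_step() -> str:
    """Simulated agent step that fails twice before succeeding."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("simulated transient failure")
    return "done"


result = call_with_retries(flaky_agent_step)

# Stand-in for a human reviewer who rejects destructive actions.
approved = requires_approval("delete_records", approve=lambda action: action != "delete_records")
print(result, approved)
```

In practice, the `approve` callable would block on a real review step (a ticket, a chat prompt, a dashboard button) rather than a lambda.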

Overall, Agentic AI is a stacked architecture, where each outer layer adds reliability, coordination, and governance over the inner layers.

Thanks for reading!

P.S. For those wanting to develop “Industry ML” expertise:

At the end of the day, all businesses care about impact. That’s it!

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have covered several topics (with implementations) that align with these goals.

Here are some of them:

Learn everything about MCPs in this crash course with 9 parts.

Learn how to build Agentic systems in a crash course with 14 parts.

Learn how to build real-world RAG apps and evaluate and scale them in this crash course.

Learn sophisticated graph architectures and how to train them on graph data.

So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

Learn how to run large models on small devices using Quantization techniques.

Learn how to generate prediction intervals or sets with strong statistical guarantees, and so increase trust in your models, using Conformal Prediction.

Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

Learn how to scale and implement ML model training in this practical guide.

Learn techniques to reliably test new models in production.

Learn how to build privacy-first ML systems using Federated Learning.

Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate the key skills that businesses care about the most.