6 Components of Context Engineering

...explained visually.

The Real Bottleneck in AI Isn't What You Think

The bottleneck in AI has quietly shifted. It’s not the models, frameworks, or even data. It’s getting infrastructure that actually works.

Traditional cloud providers are built for enterprises: committed workloads, minimum spend requirements, and quota approvals that take days. By the time you’re training, hours have passed.

RunPod is the closest solution to an infra that just disappears. Pay by the second, stop when done. Start prototyping on a cheap A40, scale to H100s when validated.

Good infra gets out of your way. Available when you need it, invisible when you don’t.

Get started with RunPod here →

Thanks to RunPod for partnering today!

6 Components of Context Engineering

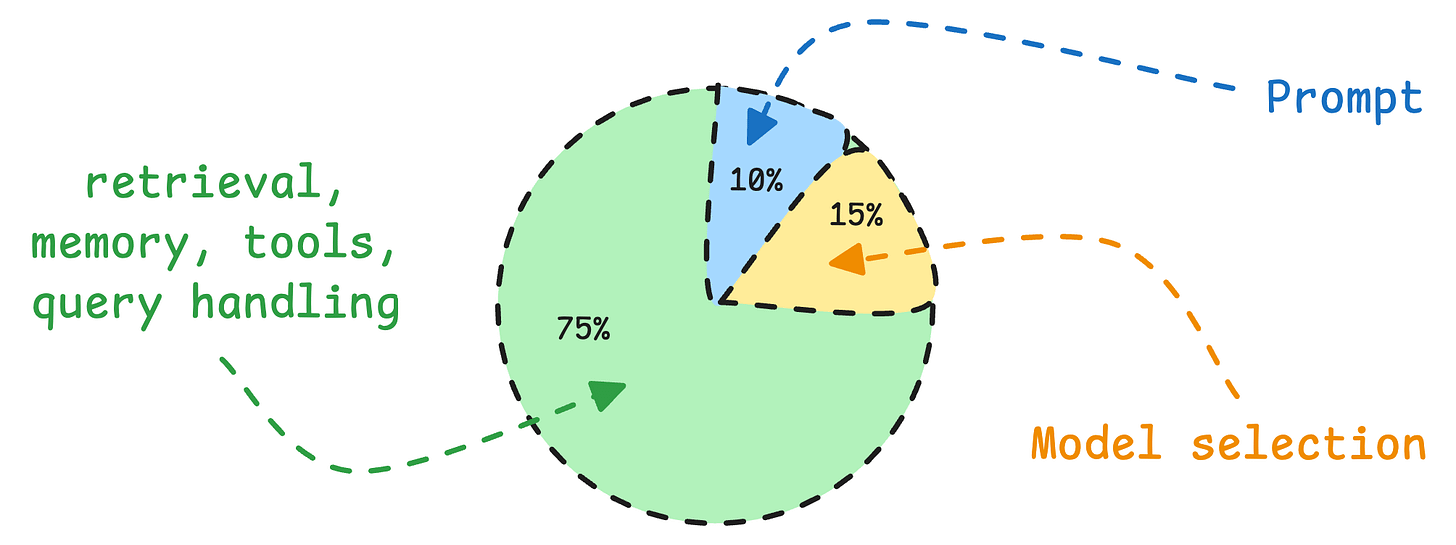

Here’s rough math on what determines your AI app’s output quality:

Model selection: 15%

Prompt: 10%

Everything else (retrieval, memory, tools, query handling): 75%

We’ve seen teams obsessing over the wrong 25% when the actual problem lies elsewhere.

And this is exactly why “context engineering” has quietly become the most important skill in AI engineering today.

It’s the art of getting the right information to the model at the right time in the right format.

And it has 6 core components, as depicted in the visual below:

Prompting Techniques

This is where most people stop. But even here, there’s more depth than people realize.

Classic prompting is about pattern recognition. You give the model examples, and it learns the format, style, and logic you want. Few-shot prompting still works remarkably well for structured tasks.

But advanced prompting is where things get interesting.

Techniques like Chain-of-thought prompting give the model thinking room. Instead of jumping straight to an answer, you ask it to reason step-by-step. This simple change can dramatically improve accuracy on complex problems.

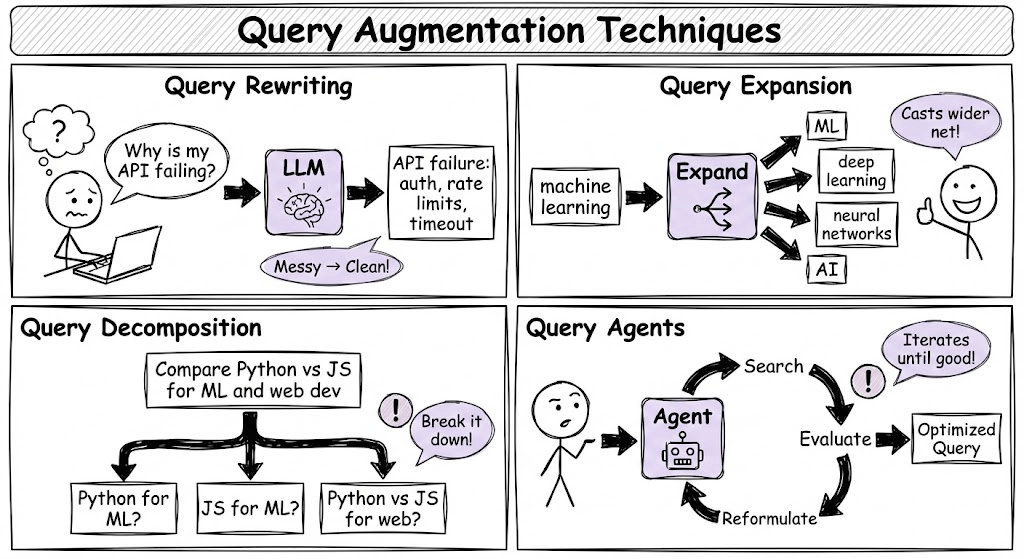

Query Augmentation

Users are lazy in writing queries.

When someone types “How do I make this work when my API call keeps failing?”, that’s almost useless to a retrieval system.

Query augmentation fixes this through several techniques:

Query Rewriting: An LLM takes that vague question and transforms it.

Query Expansion: Adding related terms and synonyms to cast a wider net.

Query Decomposition: Breaking a complex question into sub-questions that can be answered independently.

Query Agents: Using an agent to dynamically decide how to reformulate the query based on initial results.

Long-Term Memory

Say an agent has a great conversation with a user. The user shared preferences, context, and history. But as the session ends, it’s all gone.

Long-term memory fixes this with external storage:

Vector Databases: Store embeddings of past interactions for semantic search.

Graph Databases: Store conversations as relationships and entities.

The type of memory matters too:

Episodic memory signifies specific events

Semantic memory maintains general facts about the user, and

Procedural memory handles how the user likes things done.

Open-source tools like Cognee make this accessible, and you don’t need to build from scratch.

Short-Term Memory

Short-term memory is simply the conversation history. This one seems obvious, but it’s often mismanaged.

And here’s where teams mess up:

Stuffing too much into the context window (noise drowns out signal)

Not including enough (model lacks critical information)

Poor ordering (important context buried at the end)

No summarization strategy for long conversations

Knowledge base Retrieval

Most teams think about this as RAG, but that’s too narrow. RAG is one pattern, not the whole picture.

The real question is: How do you connect your AI to your organization’s data?

That knowledge lives everywhere, like: docs, wikis, databases, SaaS tools like Notion and Google Drive, APIs, and code repositories.

The retrieval pipeline has three layers:

Pre-Retrieval: How do you chunk docs? What metadata do you preserve? How do you handle tables and structured data? How do you keep everything in sync?

Retrieval: Which embedding model? Which retrieval strategy do you use: Vector search or hybrid with BM25? How do you re-rank?

Augmentation: How do you format retrieved context, include citations, handle contradictions, etc?

Open-source tooling like Airweave solves this end-to-end. Instead of building custom connectors for every data source, you can sync your knowledge bases and get unified access to Notion, Google Drive, databases, and more.

You can get 10x improvements in retrieval quality without changing the model, but by just fixing the chunking strategy or properly syncing knowledge sources.

Tools and Agents

A tool extends what the model can do because, without it, the model is stuck with just what’s in its weights and context window.

Moreover, an agent decides when and how to use those tools.

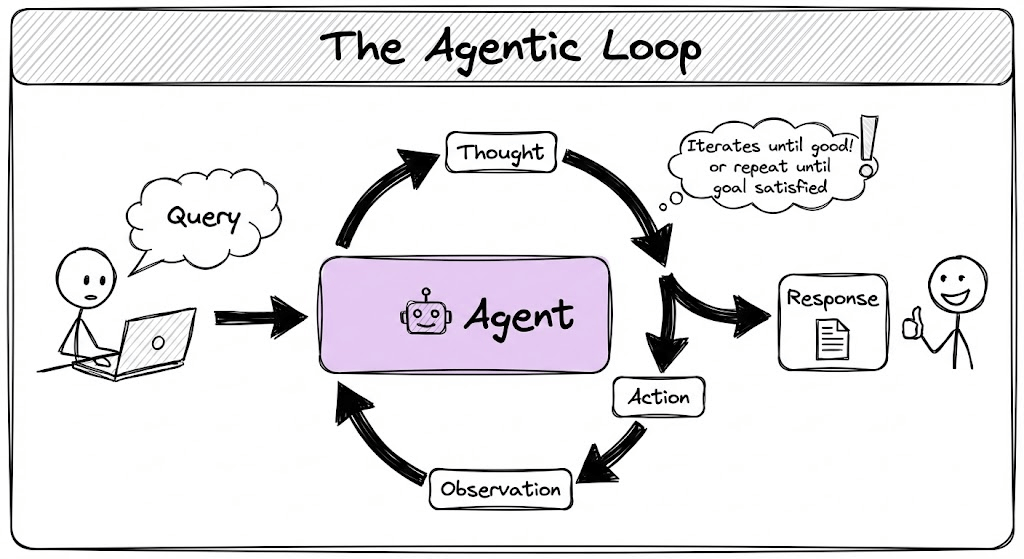

The basic loop looks like this: Query → Thought → Action → Observation → (repeat until goal satisfied) → Response

Single-agent architecture works for straightforward tasks. Most chatbots and copilots fall into this category.

A multi-agent architecture is better for complex workflows. You have specialized agents that collaborate. One does research, another writes, another critiques. They hand off work to each other.

MCPs take this to the next step!

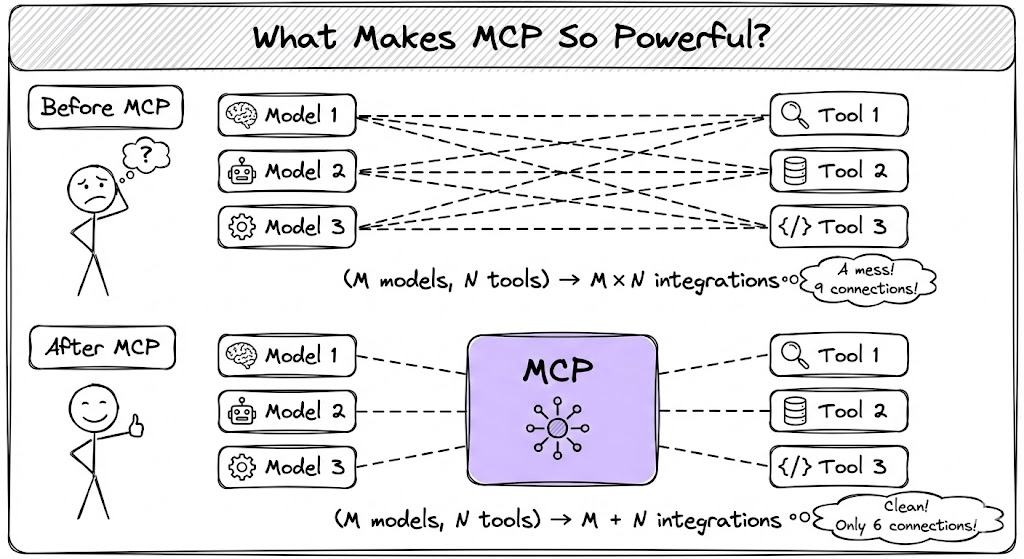

Traditional tool integration requires N×M connections. If you have 3 models and 4 tools, you need 12 integration points.

MCP changes this to N+M. Models and tools both connect to a standard protocol layer.

Some time back, prompt engineering made it sound like the magic was in crafting the perfect instruction.

Context engineering recognized that the real magic is in the entire info pipeline instead:

What context do you provide?

Where does that context come from?

How is it retrieved, filtered, and formatted?

What can the model do with tools?

What does it remember across sessions?

The visual we created breaks down all 6 components we discussed today:

If you’re building AI applications in 2025, this is the mental model you need.

Thanks for reading!