6 Must-know MCP Primitives for AI Engineers

...explained with usage.

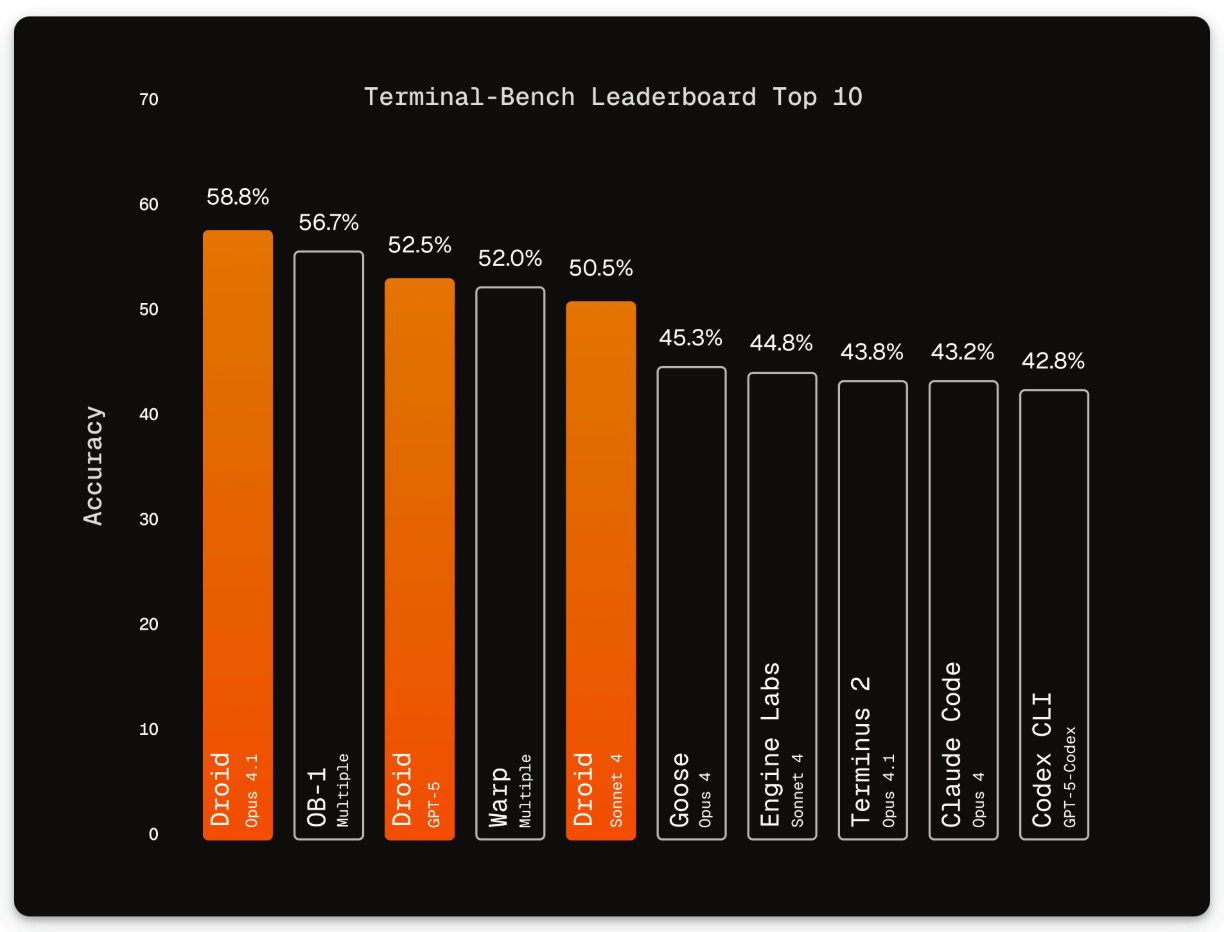

Droid: A new SOTA on Terminal-Bench

Droid by Factory has reached #1 on Terminal-Bench, the most challenging general software development benchmark, outperforming popular tools like Claude Code and Codex CLI.

For context, Terminal-Bench measures AI agents’ ability to complete complex end-to-end tasks spanning coding, build/test and dependency management, data and ML workflows, systems and networking, security, and core CLI workflows.

Droids work with any model and in any interface: CLI, IDE, Slack, Linear, and the browser.

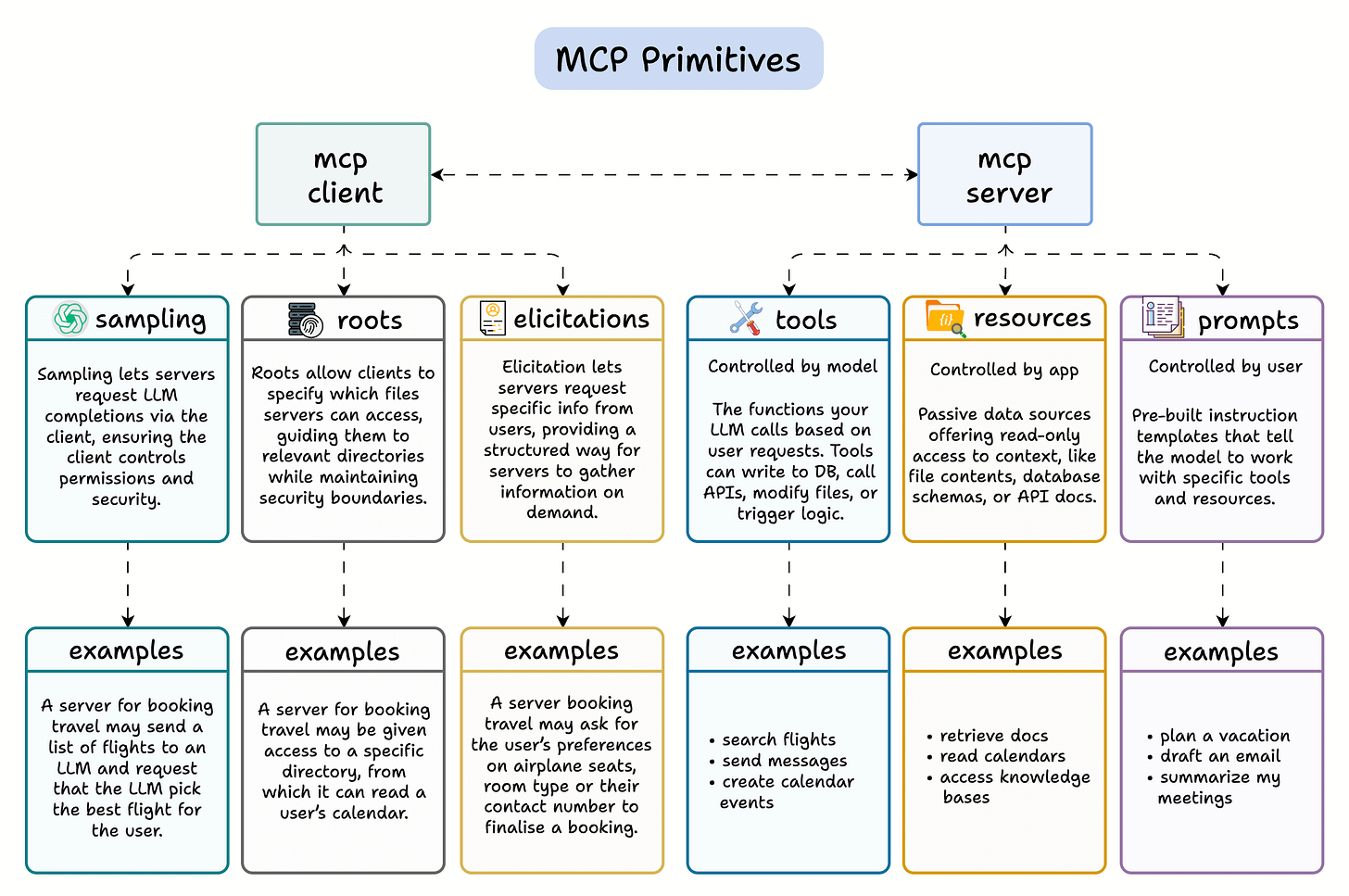

6 Core MCP Primitives

Many developers see MCP as just another tool-calling standard, but that only scratches the surface.

Unlike simple tool calling, MCP creates two-way communication between your AI apps and servers.

Here’s a breakdown of the 6 core MCP primitives that make MCP powerful (explained with examples):

We covered all these details (with implementations) in the MCP crash course.

Part 1 covered MCP fundamentals, the architecture, context management, etc. →

Part 2 covered core capabilities, JSON-RPC communication, etc. →

Part 4 built a full-fledged MCP workflow using tools, resources, and prompts →

Part 5 taught how to integrate Sampling into MCP workflows →

Part 6 covered testing, security, and sandboxing in MCP Workflows →

Part 7 covered testing, security, and sandboxing in MCP Workflows →

Part 8 integrated MCPs with the most widely used agentic frameworks: LangGraph, LlamaIndex, CrewAI, and PydanticAI →

Part 9 covered using LangGraph MCP workflows to build a comprehensive real-world use case →

Let’s start with the client, the component that facilitates communication between the LLM app and the server. It offers 3 key capabilities:

1️⃣ Sampling

The client lives inside an LLM application, so the client side always has access to an LLM.

Thus, if needed, the server can ask the client’s LLM to generate a completion, while the client still controls permissions and safety.

For example, an MCP server with travel tools can ask the LLM to pick the optimal flight from a list.
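Since MCP messages are JSON-RPC 2.0, a sampling exchange can be sketched as plain dictionaries. The method name `sampling/createMessage` and the `messages`, `maxTokens`, `role`, `content`, `model`, and `stopReason` fields follow my reading of the MCP specification; the flight data, the helper functions, the model name, and the rejection error code are hypothetical, and a real server would send this through an MCP SDK rather than building dicts by hand.

```python
import json


def pick_flight_request(request_id, flights):
    """Build a server-to-client sampling request (a JSON-RPC 2.0 message)."""
    listing = "\n".join(
        f"{f['id']}: {f['price']} USD, {f['stops']} stop(s)" for f in flights
    )
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "sampling/createMessage",
        "params": {
            "messages": [
                {
                    "role": "user",
                    "content": {
                        "type": "text",
                        "text": f"Pick the best flight and reply with its ID only:\n{listing}",
                    },
                }
            ],
            "maxTokens": 20,
        },
    }


def fake_client(request, user_approves):
    """Hypothetical stand-in for the MCP client: it may refuse, otherwise it returns a completion."""
    if not user_approves:
        # The client stays in control and can reject the server's request.
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -1, "message": "User rejected sampling request"},
        }
    # A real client would forward params["messages"] to its LLM; here the reply is hardcoded.
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "role": "assistant",
            "content": {"type": "text", "text": "FL2"},
            "model": "example-model",
            "stopReason": "endTurn",
        },
    }


flights = [
    {"id": "FL1", "price": 420, "stops": 2},
    {"id": "FL2", "price": 450, "stops": 0},
]
request = pick_flight_request(1, flights)
print(json.dumps(request, indent=2))
reply = fake_client(request, user_approves=True)
print(reply["result"]["content"]["text"])  # FL2
```

The key design point is the direction of the request: the server asks, but the client decides whether to forward it to the LLM at all, which is where permission and safety checks live.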

2️⃣ Roots

This allows the client to define which files and directories the server can operate within, keeping interactions secure, sandboxed, and scoped.

For example, a server for booking travel may be given access to a specific directory, from which it can read a user’s calendar.
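Below is a minimal sketch of how roots might flow, assuming the `roots/list` request and the `uri`/`name` fields as I understand them from the MCP specification. The client reports its roots as `file://` URIs, and a well-behaved server checks paths against them before touching anything. The directory, the `is_within_roots` helper, and the response ID are hypothetical.

```python
import posixpath
from pathlib import PurePosixPath
from urllib.parse import urlparse

# Client's reply to a server's "roots/list" request (JSON-RPC 2.0).
# The directory below is hypothetical.
roots_response = {
    "jsonrpc": "2.0",
    "id": 2,
    "result": {
        "roots": [{"uri": "file:///home/alice/travel", "name": "Travel documents"}]
    },
}


def is_within_roots(path, response):
    """Hypothetical server-side check: allow a path only if it sits under one of the client's roots."""
    # Normalize first so "../" segments cannot escape the root.
    target = PurePosixPath(posixpath.normpath(path))
    for root in response["result"]["roots"]:
        root_path = PurePosixPath(urlparse(root["uri"]).path)
        if target == root_path or root_path in target.parents:
            return True
    return False


print(is_within_roots("/home/alice/travel/calendar.ics", roots_response))  # True
print(is_within_roots("/home/alice/travel/../.ssh/id_rsa", roots_response))  # False
```

This check only normalizes the path string; a production server would likely also resolve symlinks before comparing, since a link inside the root could point outside it.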

3️⃣ Elicitations

This allows servers to request user input mid-task, in a structured way.

For example, a server booking travel may ask for the user’s preferences on airplane seats, room type, or their contact number to finalize a booking.
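To make "structured" concrete, here is a sketch of an elicitation round trip, assuming the `elicitation/create` method, the `message`/`requestedSchema` parameters, and the `accept`/`decline`/`cancel` actions as described in recent versions of the MCP specification. The schema fields, phone number, and `handle_elicit_response` helper are hypothetical.

```python
from typing import Optional

# Server-to-client request: ask the user for booking details mid-task.
elicit_request = {
    "jsonrpc": "2.0",
    "id": 3,
    "method": "elicitation/create",
    "params": {
        "message": "To finalize the booking, please share your seat preference and a contact number.",
        "requestedSchema": {
            "type": "object",
            "properties": {
                "seat": {"type": "string", "enum": ["window", "aisle", "middle"]},
                "phone": {"type": "string"},
            },
            "required": ["seat", "phone"],
        },
    },
}


def handle_elicit_response(response: dict) -> Optional[dict]:
    """Hypothetical server logic: continue only if the user accepted and supplied valid fields."""
    result = response["result"]
    if result["action"] != "accept":
        return None  # "decline" or "cancel": do not book
    content = result.get("content", {})
    schema = elicit_request["params"]["requestedSchema"]
    missing = [key for key in schema["required"] if key not in content]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    if content["seat"] not in schema["properties"]["seat"]["enum"]:
        raise ValueError(f"invalid seat: {content['seat']}")
    return content


accepted = {"jsonrpc": "2.0", "id": 3,
            "result": {"action": "accept", "content": {"seat": "aisle", "phone": "+1-555-0100"}}}
declined = {"jsonrpc": "2.0", "id": 3, "result": {"action": "decline"}}
print(handle_elicit_response(accepted))  # {'seat': 'aisle', 'phone': '+1-555-0100'}
print(handle_elicit_response(declined))  # None
```

Because the server sends a JSON Schema rather than free text, the client can render a proper form, and the server can validate the answer before acting on it.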

Moving on, let’s talk about the MCP server.

The server also exposes 3 capabilities: tools, resources, and prompts.

4️⃣ Tools

Controlled by the model, tools are functions that perform actions: writing to databases, triggering logic, sending emails, etc.

For example:

search flights

send messages

create calendar events
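A stripped-down server loop shows what "model-controlled" means in practice: the server only advertises tools (`tools/list`) and runs them on request (`tools/call`); the LLM decides when to call. The method names and the `name`/`description`/`inputSchema`/`content`/`isError` fields follow my reading of the MCP specification, while the flight data and the `search_flights` tool are hypothetical. Real servers would typically use an MCP SDK instead of a hand-written dispatcher.

```python
import json

# Hypothetical flight data for illustration.
FLIGHTS = [
    {"id": "FL1", "from": "SFO", "to": "JFK", "price": 420},
    {"id": "FL2", "from": "SFO", "to": "JFK", "price": 450},
    {"id": "FL3", "from": "SFO", "to": "LAX", "price": 120},
]


def search_flights(origin, destination):
    """The tool's actual logic: filter flights by route."""
    return [f for f in FLIGHTS if f["from"] == origin and f["to"] == destination]


# Registry: what the server advertises in tools/list, plus the function to run.
TOOLS = {
    "search_flights": {
        "fn": search_flights,
        "description": "Search flights between two airports",
        "inputSchema": {
            "type": "object",
            "properties": {
                "origin": {"type": "string"},
                "destination": {"type": "string"},
            },
            "required": ["origin", "destination"],
        },
    }
}


def handle(request):
    """Answer tools/list and tools/call requests with JSON-RPC 2.0 responses."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        if params.get("name") not in TOOLS:
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}}
        output = TOOLS[params["name"]]["fn"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": json.dumps(output)}], "isError": False}
    else:
        # -32601 is the standard JSON-RPC "Method not found" code.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


call = {"jsonrpc": "2.0", "id": 4, "method": "tools/call",
        "params": {"name": "search_flights",
                   "arguments": {"origin": "SFO", "destination": "JFK"}}}
print(handle(call)["result"]["content"][0]["text"])
```

The `inputSchema` is what lets the LLM construct valid `arguments` on its own; the server never needs to know why the model chose to call the tool.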

5️⃣ Resources

Controlled by the app, resources are passive, read-only data such as files, calendars, and knowledge bases.

Examples:

retrieve docs

read calendars

access knowledge bases
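Resources are addressed by URI and only ever read. The sketch below assumes the `resources/list` and `resources/read` methods and the `uri`/`name`/`mimeType`/`contents` fields as I understand them from the MCP specification; the `calendar://` URI scheme, the calendar entries, and the not-found error code are assumptions for illustration. The host app, not the model, decides which of these resources to pull into the LLM's context.

```python
# Hypothetical read-only resources, keyed by URI. The "calendar://" scheme is made up.
RESOURCES = {
    "calendar://alice/2025-07": {
        "name": "Alice's July calendar",
        "mimeType": "text/plain",
        "text": "2025-07-14 Team offsite\n2025-07-21 Flight to NYC",
    }
}


def handle(request):
    """Answer resources/list and resources/read; there is deliberately no write method."""
    method, params = request["method"], request.get("params", {})
    if method == "resources/list":
        result = {"resources": [
            {"uri": uri, "name": r["name"], "mimeType": r["mimeType"]}
            for uri, r in RESOURCES.items()
        ]}
    elif method == "resources/read":
        uri = params.get("uri")
        if uri not in RESOURCES:
            # Resource-not-found error (code assumed for illustration).
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32002, "message": "Resource not found"}}
        r = RESOURCES[uri]
        result = {"contents": [{"uri": uri, "mimeType": r["mimeType"], "text": r["text"]}]}
    else:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


read = {"jsonrpc": "2.0", "id": 7, "method": "resources/read",
        "params": {"uri": "calendar://alice/2025-07"}}
print(handle(read)["result"]["contents"][0]["text"])
```

Keeping resources read-only means the app can attach them as context freely, while anything with side effects stays behind a tool.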

6️⃣ Prompts

Controlled by the user, prompts are pre-built instruction templates that guide how the LLM uses tools/resources.

Examples:

plan a vacation

draft an email

summarize my meetings
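A prompt is essentially a named template with declared arguments that the server fills in and returns as ready-to-send messages. This sketch assumes the `prompts/list` and `prompts/get` result shapes (`name`, `description`, `arguments`, `messages`) as I read them in the MCP specification; the `plan_vacation` template, its wording, and the default trip length are hypothetical.

```python
# Hypothetical prompt template exposed by a travel server.
PROMPTS = {
    "plan_vacation": {
        "description": "Plan a vacation using the travel tools",
        "arguments": [
            {"name": "destination", "description": "Where to go", "required": True},
            {"name": "days", "description": "Trip length in days", "required": False},
        ],
        "template": ("Plan a {days}-day vacation to {destination}. "
                     "Use search_flights for flights and check my calendar for conflicts."),
    }
}


def list_prompts():
    """Result of prompts/list: names, descriptions, and arguments (no template text)."""
    return {"prompts": [
        {"name": name, "description": p["description"], "arguments": p["arguments"]}
        for name, p in PROMPTS.items()
    ]}


def get_prompt(name, arguments):
    """Result of prompts/get: the template filled in as ready-to-send messages."""
    prompt = PROMPTS[name]
    missing = [a["name"] for a in prompt["arguments"]
               if a["required"] and a["name"] not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    values = {"days": "5", **arguments}  # hypothetical default for the optional argument
    text = prompt["template"].format(**values)
    return {
        "description": prompt["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }


result = get_prompt("plan_vacation", {"destination": "Lisbon"})
print(result["messages"][0]["content"]["text"])
```

The user picks the prompt (for example, from a slash-command menu in the host app), which is why prompts are described as user-controlled, in contrast to model-controlled tools.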

Those were the 6 core MCP primitives that make MCP powerful.

They also show that MCP is not just another tool-calling standard. Instead, it creates two-way communication between your AI apps and servers, which is what enables powerful AI workflows.

Thanks for reading!