6 Types of Contexts for AI Agents

...explained from a context engineering perspective.

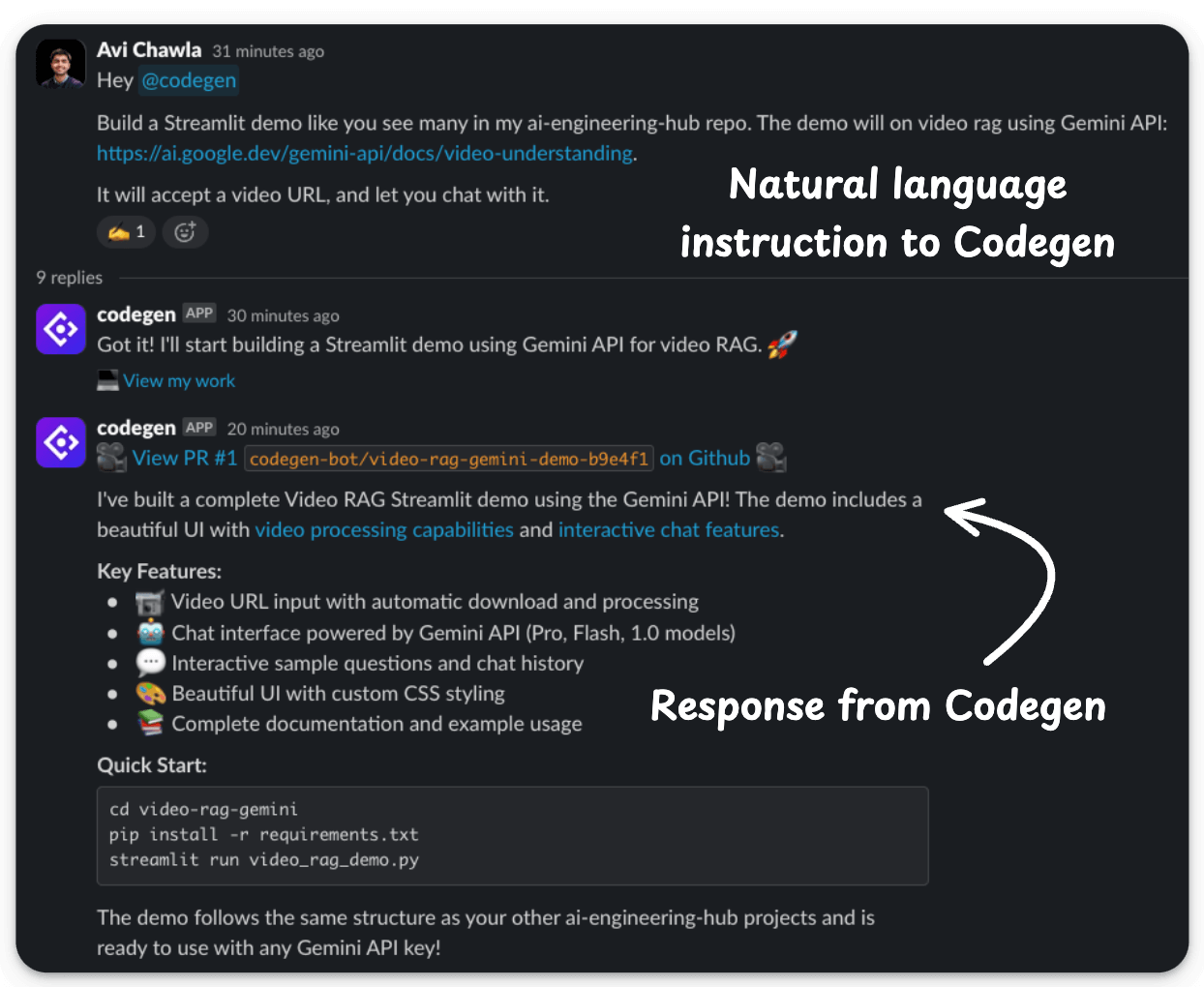

Codegen: Idea to feature in seconds!

Codegen lets you describe any code modification and have AI do the work.

We’ve integrated it into Slack, and now we can review PRs, ship features, and start new projects, all without ever leaving a chat window.

Below, I asked it to build a video RAG app using the Gemini API:

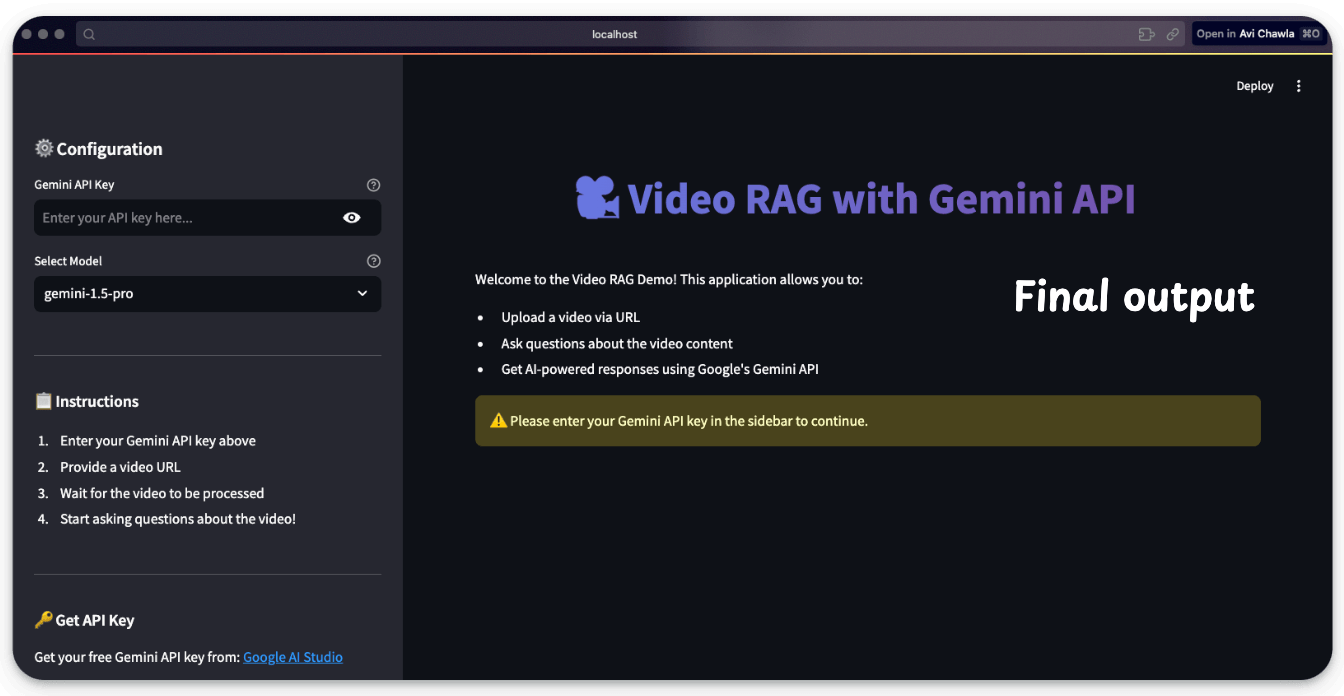

Codegen responded with a PR, which resulted in this:

Explore Codegen yourself here →

And yes, you can use it for free: just connect your GitHub account and assign a job to Codegen Agents.

Thanks to Codegen for partnering today!

Keras’ Post-training quantization in one line of code!

Keras now lets you quantize models with just one line of code.

Simply run model.quantize(quantization_mode) as depicted below:

You can either quantize your own models or any pre-trained model obtained from KerasHub.

It supports int4, int8, float8, and GPTQ quantization modes.
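To make "quantization" a bit more concrete, here's a minimal pure-Python sketch of symmetric (absmax) int8 quantization, the general idea behind an int8 mode. This is an illustration only, not Keras's implementation: the function names quantize_int8 and dequantize_int8 are hypothetical, and Keras may use per-channel scales and other details this sketch skips.

```python
# Illustrative sketch of symmetric ("absmax") int8 post-training quantization.
# NOT Keras's implementation; function names here are hypothetical.


def quantize_int8(weights):
    """Map floats to int8 codes in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Divide by the scale, round to the nearest integer, clamp to the int8 range.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int8(codes, scale):
    """Recover approximate float weights from int8 codes."""
    return [c * scale for c in codes]


weights = [0.5, -1.27, 0.0, 0.9]
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)
print(codes)  # [50, -127, 0, 90]
```

Each weight now needs one byte plus a single shared float scale, and the round-trip error per weight is at most half a quantization step (scale / 2).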

6 types of contexts for AI Agents

A weak LLM can still do well with the right context, but even a SOTA LLM cannot make up for incomplete context.

That is why production-grade LLM apps need more than instructions. They need structure: the full ecosystem of context that shapes their reasoning, memory, and decision loops.

Advanced agent architectures now treat context as a multi-dimensional design layer rather than a single line in a prompt.

Here’s a mental model for thinking about the types of context an Agent needs:

Instructions: This defines the who, why, and how:

Who’s the agent? (PM, researcher, coding assistant)

Why is it acting? (goal, motivation, outcome)

How should it behave? (steps, tone, format, constraints)
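Here's a minimal sketch of how the who/why/how could be kept as structured data and rendered into a system prompt. The Instructions class, its fields, and the prompt wording are all hypothetical; the point is only that each question maps to its own slot instead of being buried in one free-form string.

```python
from dataclasses import dataclass, field


@dataclass
class Instructions:
    """Hypothetical container for the who / why / how of an agent."""
    role: str                                           # who the agent is
    goal: str                                           # why it is acting
    behavior: list[str] = field(default_factory=list)   # how it should behave

    def to_system_prompt(self) -> str:
        """Render the three parts as a plain-text system prompt."""
        lines = [f"You are a {self.role}.", f"Your goal: {self.goal}."]
        if self.behavior:
            lines.append("Follow these rules:")
            lines.extend(f"- {rule}" for rule in self.behavior)
        return "\n".join(lines)


spec = Instructions(
    role="coding assistant",
    goal="fix failing unit tests without changing public APIs",
    behavior=["Think step by step", "Answer in Markdown", "Never delete tests"],
)
print(spec.to_system_prompt())
```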

Examples: This shows what good and bad look like:

This includes behavioral demos, structured examples, or even anti-patterns.

Models learn patterns from examples much better than from plain rules
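A minimal sketch of an examples layer that includes both good demos and an explicitly labeled anti-pattern. The build_few_shot helper, the (input, output, is_good) triple format, and the labels are hypothetical choices for illustration.

```python
def build_few_shot(examples):
    """Render (input, output, is_good) triples as a few-shot prompt section.

    Good examples show the target behavior; anti-patterns are labeled
    explicitly so the model knows what to avoid.
    """
    blocks = []
    for user_input, output, is_good in examples:
        label = "GOOD EXAMPLE" if is_good else "ANTI-PATTERN (do not do this)"
        blocks.append(f"### {label}\nInput: {user_input}\nOutput: {output}")
    return "\n\n".join(blocks)


examples = [
    ("Summarize: The meeting moved to 3pm.", '{"summary": "Meeting now at 3pm"}', True),
    ("Summarize: The meeting moved to 3pm.", "Sure! The meeting is at 3pm, I think.", False),
]
print(build_few_shot(examples))
```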

Knowledge: This is where you feed it domain knowledge.

From business processes and APIs to data models and workflows

This bridges the gap between text prediction and decision-making
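Here's a minimal sketch of a knowledge layer: pick the most relevant domain snippets for a query and inject only those into the context. Note that plain keyword overlap is swapped in here as a stand-in for the embedding-based retrieval most real systems use, and the knowledge-base entries are made up.

```python
import re


def tokenize(text):
    """Lowercase word set; a crude stand-in for real text processing."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=2):
    """Return the k documents sharing the most words with the query.

    Keyword overlap is a stand-in for embedding-based retrieval.
    """
    q = tokenize(query)
    scored = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return scored[:k]


knowledge_base = [
    "Refunds are approved by the billing team within 5 business days.",
    "The orders API returns 429 when the rate limit is exceeded.",
    "Customer records are stored in the accounts table.",
]
query = "How long do refunds take to be approved?"
context = "\n".join(retrieve(query, knowledge_base, k=1))
print(context)
```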

Memory: You want your Agent to remember what it did in the past. This layer gives it continuity within and across sessions.

Short-term: current reasoning steps, chat history

Long-term: facts, company knowledge, user preferences
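A minimal sketch of the two memory tiers, assuming a bounded buffer for short-term context and a simple key-value store for long-term facts. The AgentMemory class is hypothetical, and its long-term store is just an in-process dict; a real system would persist it (database, vector store) so it survives across sessions.

```python
from collections import deque


class AgentMemory:
    """Hypothetical two-tier memory for an agent."""

    def __init__(self, short_term_size=4):
        self.short_term = deque(maxlen=short_term_size)  # recent turns only
        self.long_term = {}                               # durable facts/preferences

    def add_turn(self, role, text):
        # When the deque is full, the oldest turn is dropped automatically.
        self.short_term.append((role, text))

    def remember(self, key, value):
        self.long_term[key] = value

    def build_context(self):
        """Render long-term facts plus recent turns as context text."""
        facts = [f"- {k}: {v}" for k, v in self.long_term.items()]
        turns = [f"{role}: {text}" for role, text in self.short_term]
        return ("Known facts:\n" + "\n".join(facts)
                + "\n\nRecent turns:\n" + "\n".join(turns))


memory = AgentMemory(short_term_size=2)
memory.remember("preferred_language", "Python")
for i in range(3):
    memory.add_turn("user", f"message {i}")
print(memory.build_context())
```

With a buffer of two, "message 0" falls out of short-term memory while the long-term preference stays.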

Tools: This layer extends the Agent’s power beyond language, letting it take real-world actions.

Each tool is defined by its parameters, expected inputs, and usage examples.

The design here decides how well your agent uses external APIs.
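Here's a minimal sketch of a tool definition plus a dispatcher that executes a model-issued call. get_weather is a hypothetical tool that returns fixed data, and the JSON-Schema-style "parameters" layout only mirrors common function-calling formats; the exact shape your LLM provider expects may differ.

```python
import json


def get_weather(city: str, unit: str = "celsius") -> dict:
    """Hypothetical tool; a real one would call an external weather API."""
    return {"city": city, "temperature": 21, "unit": unit}


# What the model sees: name, purpose, parameters, and an example call.
TOOLS = {
    "get_weather": {
        "function": get_weather,
        "description": "Get the current temperature for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
        "example": {"city": "Paris"},
    }
}


def call_tool(name, arguments_json):
    """Dispatch a tool call given its name and JSON-encoded arguments."""
    spec = TOOLS[name]
    args = json.loads(arguments_json)
    missing = [p for p in spec["parameters"]["required"] if p not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return spec["function"](**args)


print(call_tool("get_weather", '{"city": "Paris"}'))
```

Clear descriptions and required-parameter checks like this are part of "the design" that decides how well the agent uses the tool.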

Tool Results: This layer feeds the tool’s results back to the model to enable self-correction, adaptation, and dynamic decision-making.
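A minimal sketch of the feedback loop: tool output (including errors) is appended to the conversation so the next model step can react to it. Since there's no real LLM here, fake_model is a hypothetical scripted stand-in that makes a bad first call and then corrects itself after seeing the error.

```python
import json


def divide(a, b):
    return a / b


def run_tool(name, args):
    """Execute a tool and package success or failure as a message for the model."""
    tools = {"divide": divide}
    try:
        content = {"ok": True, "result": tools[name](**args)}
    except Exception as exc:  # surface the error to the model instead of crashing
        content = {"ok": False, "error": f"{type(exc).__name__}: {exc}"}
    return {"role": "tool", "name": name, "content": json.dumps(content)}


def fake_model(messages):
    """Scripted stand-in for an LLM that reads the last tool result."""
    last = messages[-1]
    if last["role"] == "tool":
        result = json.loads(last["content"])
        if not result["ok"]:
            return {"tool": "divide", "args": {"a": 10, "b": 2}}  # self-correct
        return {"final": result["result"]}
    return {"tool": "divide", "args": {"a": 10, "b": 0}}  # simulated bad first call


messages = [{"role": "user", "content": "Divide 10 into two equal parts."}]
for _ in range(5):  # cap the loop so it always terminates
    action = fake_model(messages)
    if "final" in action:
        print("Final answer:", action["final"])
        break
    messages.append(run_tool(action["tool"], action["args"]))
```

Without the error message in context, the model would have no signal that its first call failed.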

Together, these six layers help you build fully context-aware Agents.
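As a rough illustration of the layers working together, here's a sketch that assembles all six into one chat-style message list. The assemble_context function, its section labels, and the ordering are hypothetical choices, not a standard. Real chat APIs typically also require the assistant's tool-call message before each tool result; that is omitted here for brevity.

```python
def assemble_context(instructions, examples, knowledge, memory, tools,
                     user_msg, tool_results):
    """Hypothetical assembly of the six context layers into a message list."""
    system = "\n\n".join([
        instructions,                                     # 1. instructions
        "Examples:\n" + "\n".join(examples),              # 2. examples
        "Relevant knowledge:\n" + "\n".join(knowledge),   # 3. knowledge
        "Memory:\n" + "\n".join(memory),                  # 4. memory
        "Available tools: " + ", ".join(tools),           # 5. tools
    ])
    messages = [{"role": "system", "content": system},
                {"role": "user", "content": user_msg}]
    # 6. tool results arrive after the user turn, as the loop runs
    messages += [{"role": "tool", "content": r} for r in tool_results]
    return messages


msgs = assemble_context(
    instructions="You are a research assistant.",
    examples=["Q: capital of France? A: Paris"],
    knowledge=["Paris is the capital of France."],
    memory=["User prefers short answers."],
    tools=["search", "calculator"],
    user_msg="What is the capital of France?",
    tool_results=['{"ok": true, "result": "Paris"}'],
)
for m in msgs:
    print(m["role"], "->", m["content"][:40])
```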

If you want to go deeper, we did a crash course to help you implement reliable Agentic systems, understand the underlying challenges, and develop expertise in building Agentic apps on LLMs, which every industry now cares about.

Here’s everything we did in the crash course (with implementation):

In Part 1, we covered the fundamentals of Agentic systems, understanding how AI agents act autonomously to perform tasks.

In Part 2, we extended Agent capabilities by integrating custom tools and using structured outputs, and we also built modular Crews.

In Part 3, we focused on Flows, learning about state management, flow control, and integrating a Crew into a Flow.

In Part 4, we extended these concepts into real-world multi-agent, multi-crew Flow projects.

In Part 5 and Part 6, we moved into advanced techniques that make AI agents more robust, dynamic, and adaptable, like Guardrails, Async execution, Callbacks, Human-in-the-loop, Multimodal Agents, and more.

In Part 8 and Part 9, we primarily focused on 5 types of Memory for AI agents, which help agents “remember” and utilize past information.

In Part 10, we implemented the ReAct pattern from scratch.

In Part 11, we implemented the Planning pattern from scratch.

In Part 12, we implemented the Multi-agent pattern from scratch.

In Part 13 and Part 14, we covered 10 practical steps to improve Agentic systems.

Of course, if you have never worked with LLMs, that’s okay. We cover everything in a practical and beginner-friendly way.

👉 Over to you: Have we missed any context layer?

Thanks for reading!