7 Categorical Data Encoding Techniques

...summarized in a single frame.

Here are 7 ways to encode categorical features:

One-hot encoding:

Each category is represented by a binary vector of 0s and 1s.

Each category gets its own binary feature, and only one of them is “hot” (set to 1) at a time, indicating the presence of that category.

Number of features = Number of unique categorical labels
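The description above can be sketched in a few lines of dependency-free Python. The function name `one_hot_encode` and the sample `colors` list are illustrative assumptions, not part of the original post. In practice you would likely reach for a library routine such as `pandas.get_dummies`.

```python
def one_hot_encode(values):
    """Return (categories, rows) with one 0/1 column per unique category."""
    categories = sorted(set(values))  # fixed column order (alphabetical, by assumption)
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows


colors = ["red", "green", "blue", "green"]  # hypothetical sample data
categories, encoded = one_hot_encode(colors)

print(categories)  # ['blue', 'green', 'red']
print(encoded)     # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]
```

Every row contains exactly one 1, and the number of columns equals the number of unique labels (3 here).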

Dummy encoding:

Same as one-hot encoding but with one additional step.

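After one-hot encoding, we drop one of the features. Any one works; by convention, it is usually the first.

This is done to avoid the dummy variable trap: the one-hot columns always sum to 1, so in a linear model they are perfectly collinear with the intercept term. We covered it here along with 8 more lesser-known pitfalls and cautionary measures that you will likely run into in your DS projects: 8 Fatal (Yet Non-obvious) Pitfalls and Cautionary Measures in Data Science.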

Number of features = Number of unique categorical labels - 1.
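Here is a minimal sketch of dummy encoding in plain Python. The function name `dummy_encode` and the choice to drop the alphabetically first category are assumptions made for illustration. The dropped category becomes the "reference" and is represented by a row of all zeros.

```python
def dummy_encode(values):
    """One-hot encode, then drop the first category's column."""
    categories = sorted(set(values))
    reference, kept = categories[0], categories[1:]  # dropped vs. retained columns
    rows = [[1 if v == c else 0 for c in kept] for v in values]
    return reference, kept, rows


colors = ["red", "green", "blue", "green"]  # hypothetical sample data
reference, kept, encoded = dummy_encode(colors)

print(reference)  # blue
print(kept)       # ['green', 'red']
print(encoded)    # [[0, 1], [1, 0], [0, 0], [1, 0]]
```

With 3 unique labels, only 2 columns remain, and "blue" is recoverable as the row where every column is 0.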

Effect encoding:

Similar to dummy encoding but with one additional step.

Set every all-zero row (i.e., every row that belongs to the dropped reference category) to -1 in all columns.

As a result, in a linear model, each coefficient measures how a category deviates from the mean across all categories, rather than from the reference category as in dummy encoding.

Number of features = Number of unique categorical labels - 1.
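The extra step can be sketched as follows. As before, `effect_encode` is a hypothetical helper name, and dropping the alphabetically first category is an assumption made for illustration.

```python
def effect_encode(values):
    """Dummy encode, then mark the reference category with -1 in every column."""
    categories = sorted(set(values))
    reference, kept = categories[0], categories[1:]
    rows = []
    for v in values:
        if v == reference:
            rows.append([-1] * len(kept))  # would be all zeros under dummy encoding
        else:
            rows.append([1 if v == c else 0 for c in kept])
    return reference, kept, rows


colors = ["red", "green", "blue", "green"]  # hypothetical sample data
reference, kept, encoded = effect_encode(colors)

print(kept)     # ['green', 'red']
print(encoded)  # [[0, 1], [1, 0], [-1, -1], [1, 0]]
```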

Label encoding:

Assign each category a unique integer label.

Label encoding introduces an implicit ordering between categories (e.g., 0 < 1 < 2), which may not exist in the data.

Number of features = 1.
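A minimal sketch of label encoding, assuming labels are assigned in alphabetical order (libraries may assign them differently). The name `label_encode` and the sample `cities` list are illustrative.

```python
def label_encode(values):
    """Map each unique category to an arbitrary integer label."""
    mapping = {c: i for i, c in enumerate(sorted(set(values)))}
    return mapping, [mapping[v] for v in values]


cities = ["Paris", "Tokyo", "Lima", "Tokyo"]  # hypothetical sample data
mapping, encoded = label_encode(cities)

print(mapping)  # {'Lima': 0, 'Paris': 1, 'Tokyo': 2}
print(encoded)  # [1, 2, 0, 2]
```

Note that the result implies Lima < Paris < Tokyo, an ordering that has no real meaning for city names.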

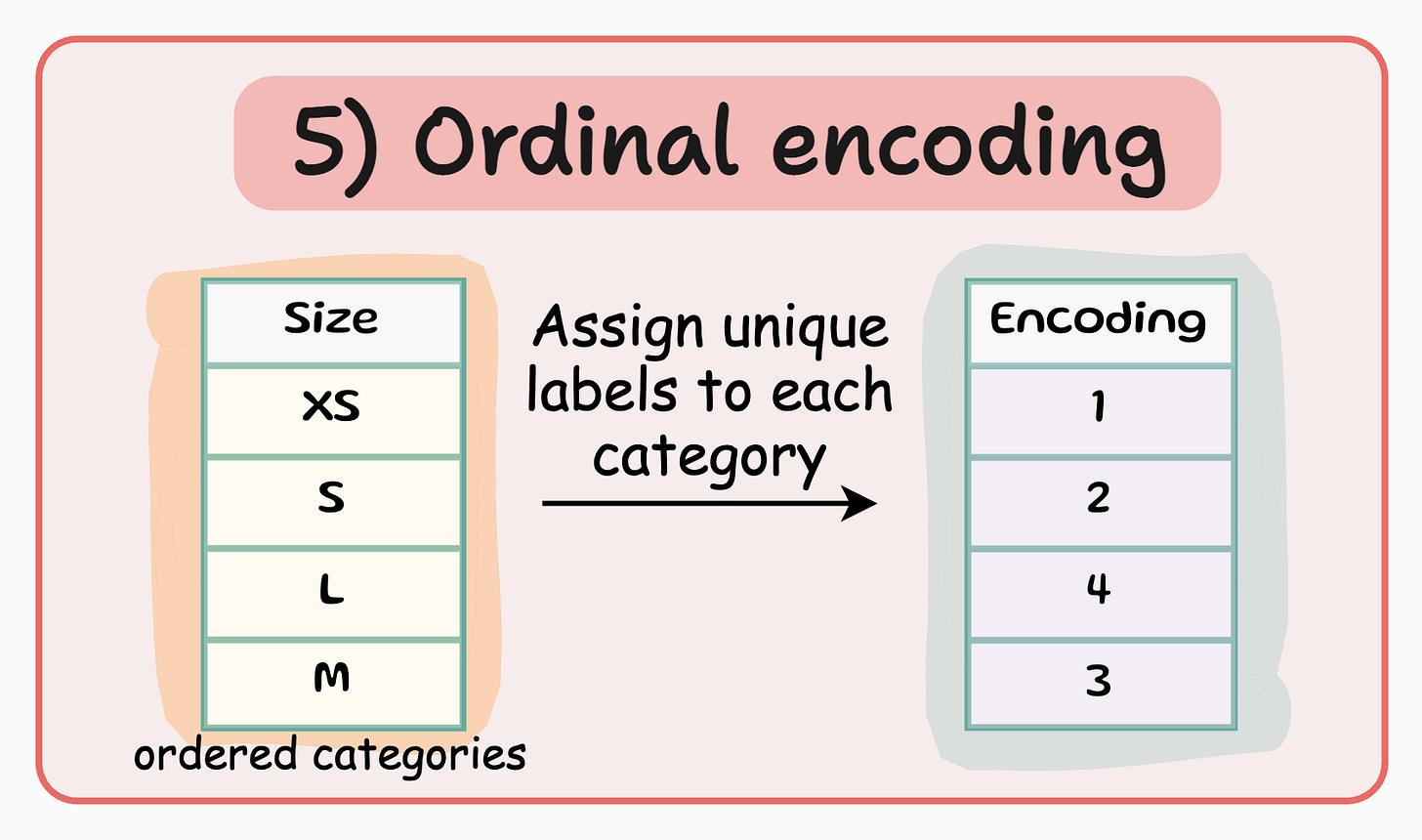

Ordinal encoding:

Similar to label encoding: assign a unique integer value to each category, but in a way that follows the categories' natural order.

The assigned values have an inherent order, meaning that one category is considered greater or smaller than another.

Number of features = 1.
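The difference from label encoding is that the order is supplied deliberately. In the sketch below, the `order` list is an assumption that the caller provides based on domain knowledge; `ordinal_encode` is a hypothetical helper name.

```python
def ordinal_encode(values, order):
    """Map each category to its position in a caller-supplied ordering."""
    rank = {c: i for i, c in enumerate(order)}
    return [rank[v] for v in values]  # raises KeyError for categories not in `order`


sizes = ["medium", "small", "large", "small"]  # hypothetical sample data
order = ["small", "medium", "large"]           # meaningful order: small < medium < large
encoded = ordinal_encode(sizes, order)

print(encoded)  # [1, 0, 2, 0]
```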

Count encoding:

Also known as frequency encoding.

Encodes categorical features based on the frequency of each category.

Thus, instead of replacing the categories with arbitrary numerical values or binary representations, count encoding replaces each category with the number of times it appears in the data.

Number of features = 1.
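A short sketch of count encoding using only the standard library. The helper name `count_encode` and the sample data are illustrative assumptions.

```python
from collections import Counter


def count_encode(values):
    """Replace each category with how often it occurs in `values`."""
    counts = Counter(values)
    return [counts[v] for v in values]


colors = ["red", "green", "blue", "green"]  # hypothetical sample data
encoded = count_encode(colors)

print(encoded)  # [1, 2, 1, 2]
```

One caveat is visible in the output: "red" and "blue" both appear once, so they receive the same value and can no longer be told apart.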

Binary encoding:

Combination of one-hot encoding and ordinal encoding.

It represents categories as binary code.

Each category is first assigned an ordinal value, and then that value is converted to binary code.

The binary code is then split into separate binary features.

Useful when dealing with high-cardinality categorical features (or a high number of features) as it reduces the dimensionality compared to one-hot encoding.

Number of features = ceil(log2(n)), where n is the number of unique categorical labels.
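The steps above can be sketched as follows. This is a simplified illustration, assuming ordinal values start at 0 and are assigned alphabetically; `binary_encode` is a hypothetical helper name, and library implementations may number categories differently and so produce a slightly different column count.

```python
def binary_encode(values):
    """Assign each category an integer, then split its binary digits into columns."""
    categories = sorted(set(values))
    index = {c: i for i, c in enumerate(categories)}  # step 1: ordinal value
    # Bits needed to represent 0 .. n-1, which equals ceil(log2(n)) for n >= 2.
    width = max(1, (len(categories) - 1).bit_length())
    # Steps 2 and 3: convert to binary and emit one column per bit (most significant first).
    rows = [[(index[v] >> bit) & 1 for bit in reversed(range(width))] for v in values]
    return width, rows


letters = ["a", "b", "c", "d", "e"]  # hypothetical sample data with 5 categories
width, encoded = binary_encode(letters)

print(width)    # 3
print(encoded)  # [[0, 0, 0], [0, 0, 1], [0, 1, 0], [0, 1, 1], [1, 0, 0]]
```

Five categories need only 3 columns here, versus 5 with one-hot encoding, and the gap widens quickly as cardinality grows.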

While these are some of the most popular techniques, they are not the only ones for encoding categorical data.

You can try plenty of other techniques with the category-encoders library (imported in Python as category_encoders).

👉 Over to you: What other common categorical data encoding techniques have I missed?

Thanks for reading!
