7 Patterns in Multi-Agent Systems

...explained visually.

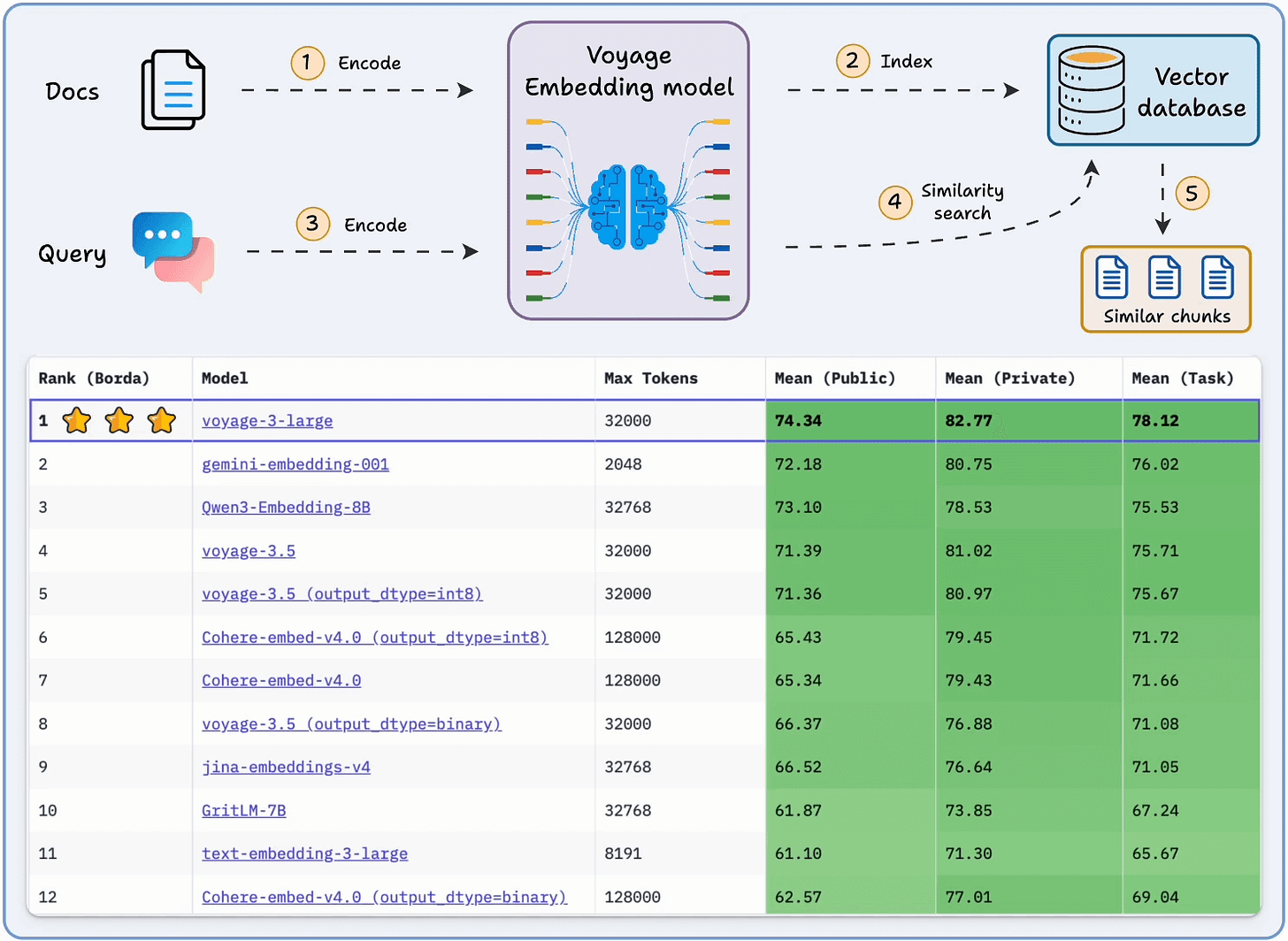

The voyage-3-large embedding model just topped the RTEB leaderboard!

It’s a big deal because it:

ranks first across 33 eval datasets

outperforms OpenAI and Cohere models

supports quantization to reduce storage costs

Here’s another reason that makes this model truly superior.

Most retrieval benchmarks test models on academic datasets that don’t reflect real-world data.

RTEB, on the other hand, is a newly released leaderboard on HuggingFace that evaluates retrieval models across enterprise domains like finance, law, and healthcare using a mix of open and held-out datasets to discourage overfitting.

This makes it a fair, transparent, and application-focused evaluation.

One reason voyage-3-large performs exceptionally well from a deployability standpoint is its use of quantization-aware training (QAT).

Most embedding models today are trained in full precision and then compressed afterward.

This makes them faster and cheaper to serve, but post-training compression is lossy: it discards some of the fine-grained relationships between activations that the model learned in full precision.

QAT simulates quantization during training, teaching the model to stay accurate and perform reliably even at lower precision (like int8 or binary).
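To make "simulating quantization" concrete, here is a minimal sketch of the fake-quantization step that QAT-style training typically applies in the forward pass. It is a generic illustration, not Voyage's training procedure; the function name `fake_quantize_int8` and the sample embedding are assumptions made for this example.

```python
def fake_quantize_int8(values):
    """Round floats onto a symmetric int8 grid, then map them back to floats.

    During QAT-style training, the forward pass sees these rounded values,
    so the model learns weights that tolerate the rounding error.
    """
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0                       # one int8 step in float units
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    simulated = [c * scale for c in codes]        # what the model "sees"
    return simulated, codes, scale


# Hypothetical 4-dimensional embedding, for illustration only.
embedding = [0.12, -0.5, 0.33, 0.07]
simulated, int8_codes, scale = fake_quantize_int8(embedding)
max_error = max(abs(a - b) for a, b in zip(embedding, simulated))
print(int8_codes, max_error)
```

The rounding error per value is bounded by half a quantization step. Real QAT implementations also need a way to pass gradients through the rounding operation (commonly a straight-through estimator), which this sketch leaves out.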

voyage-3-large uses QAT to offer faster inference and lower vector DB costs with minimal drop in accuracy, as its benchmark results confirm.

You can find the leaderboard here →

Thank you to Hugging Face for hosting this leaderboard and to MongoDB for partnering today!

7 Patterns in Multi-Agent Systems

Monolithic agents (single LLMs stuffed with system prompts) didn’t last long.

We soon realized that to build effective systems, we need multiple specialized agents that can collaborate and self-organize.

To achieve this, several architectural patterns have emerged for AI agents.

This visual explains the 7 core patterns of multi-agent orchestration, each suited for specific workflows:

Parallel:

Each agent tackles a different subtask, like data extraction, web retrieval, and summarization, and their outputs merge into a single result.

Perfect for reducing latency in high-throughput pipelines like document parsing or API orchestration.
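A minimal sketch of the parallel pattern, assuming each agent is a plain Python function standing in for an LLM-backed agent. The agent names and the `run_parallel` helper are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


# Stub agents: each handles a different subtask on the same document.
def extract_agent(doc):
    return {"entities": [w for w in doc.split() if w.istitle()]}


def summarize_agent(doc):
    return {"summary": " ".join(doc.split()[:3])}


def count_agent(doc):
    return {"word_count": len(doc.split())}


def run_parallel(doc, agents):
    """Run every agent concurrently and merge their outputs into one dict."""
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        futures = [pool.submit(agent, doc) for agent in agents]
        merged = {}
        for future in futures:
            merged.update(future.result())
    return merged


result = run_parallel(
    "Acme Corp reported strong revenue in Berlin",
    [extract_agent, summarize_agent, count_agent],
)
print(result)
```

Threads suit this sketch because real agents spend most of their time waiting on network calls; the latency of the whole step is then roughly that of the slowest agent rather than the sum of all of them.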

Sequential:

Each agent adds value step by step: for example, one generates code, another reviews it, and a third deploys it.

You’ll see this in workflow automation, ETL chains, and multi-step reasoning pipelines.
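As a rough sketch, the sequential pattern is a pipeline in which each agent receives the previous agent's state and returns an extended one. The three stub agents below mirror the generate, review, deploy example; their names and logic are placeholders, not a real deployment flow.

```python
def generate_agent(state):
    # Stand-in for an LLM that writes code for the task.
    return {**state, "code": "print('hello')"}


def review_agent(state):
    # Stand-in for a reviewer; here it only checks the code is non-empty.
    return {**state, "approved": bool(state["code"].strip())}


def deploy_agent(state):
    # Only "deploys" what the reviewer approved.
    return {**state, "deployed": state["approved"]}


def run_sequential(initial_state, agents):
    """Pass the state through each agent in order."""
    state = initial_state
    for agent in agents:
        state = agent(state)
    return state


final_state = run_sequential(
    {"task": "say hello"},
    [generate_agent, review_agent, deploy_agent],
)
print(final_state)
```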

Loop:

Agents continuously refine their own outputs until a desired quality is reached.

Great for proofreading, report generation, or creative iteration, where the system thinks again before finalizing results.
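The loop pattern can be sketched as refine-then-check with a hard cap on iterations. Here the "agent" is a toy function that removes one filler word per pass; in practice both the refiner and the quality check would likely be LLM calls. All names are hypothetical.

```python
FILLERS = {"really", "very", "just"}


def refine_agent(text):
    """Toy refiner: drop the first filler word it finds."""
    words = text.split()
    for i, word in enumerate(words):
        if word in FILLERS:
            del words[i]
            break
    return " ".join(words)


def is_good_enough(text):
    """Toy quality check: no filler words remain."""
    return not any(word in FILLERS for word in text.split())


def run_loop(text, refine, good_enough, max_rounds=10):
    """Refine until the quality check passes or the round budget runs out."""
    rounds = 0
    while not good_enough(text) and rounds < max_rounds:
        text = refine(text)
        rounds += 1
    return text, rounds


polished, rounds = run_loop(
    "this report is really very good", refine_agent, is_good_enough
)
print(polished, rounds)
```

The `max_rounds` cap matters: without it, a refiner that never satisfies the check would loop forever and keep spending tokens.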

Router:

Here, a controller agent routes tasks to the right specialist. For instance, user queries about finance go to a FinAgent, legal queries to a LawAgent.

It’s the foundation of context-aware agent routing, as seen in emerging MCP/A2A-style frameworks.
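A minimal router sketch, assuming keyword matching as a stand-in for the controller agent (a real controller would more likely use an LLM or a classifier to pick the specialist). `fin_agent` and `law_agent` echo the FinAgent and LawAgent named above; the keyword sets and the fallback agent are assumptions.

```python
import re


def fin_agent(query):
    return f"FinAgent handling: {query}"


def law_agent(query):
    return f"LawAgent handling: {query}"


def general_agent(query):
    return f"GeneralAgent handling: {query}"


# Each route pairs a specialist with the keywords that trigger it.
ROUTES = [
    (fin_agent, {"stock", "invoice", "budget"}),
    (law_agent, {"contract", "lawsuit", "liability"}),
]


def route(query):
    """Send the query to the first specialist whose keywords match."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    for agent, keywords in ROUTES:
        if words & keywords:
            return agent(query)
    return general_agent(query)  # fallback when no specialist matches


print(route("What is our budget?"))
print(route("Review this contract clause"))
print(route("Tell me a joke"))
```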

Aggregator:

Many agents produce partial results that the main agent combines into one final output. So each agent forms an opinion, and a central one aggregates them into a consensus.

Common in RAG retrieval fusion, voting systems, etc.
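One simple way to aggregate is majority voting, sketched below. The three reviewer agents are toy rules standing in for LLM-backed agents, and `aggregate_by_vote` is a hypothetical helper; other aggregation strategies (weighted votes, rank fusion) would slot into the same place.

```python
from collections import Counter


# Toy reviewers: each forms an independent opinion about the same answer.
def length_reviewer(answer):
    return "approve" if len(answer.split()) >= 3 else "reject"


def citation_reviewer(answer):
    return "approve" if "[1]" in answer else "reject"


def tone_reviewer(answer):
    return "approve" if "!" not in answer else "reject"


def aggregate_by_vote(opinions):
    """Return the majority opinion and the share of agents that agree."""
    winner, votes = Counter(opinions).most_common(1)[0]
    return winner, votes / len(opinions)


answer = "Revenue grew 12% in Q3"
opinions = [
    reviewer(answer)
    for reviewer in (length_reviewer, citation_reviewer, tone_reviewer)
]
verdict, agreement = aggregate_by_vote(opinions)
print(verdict, agreement)
```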

Network:

There’s no clear hierarchy here, and agents just talk to each other freely, sharing context dynamically.

Used in simulations, multi-agent games, and collective reasoning systems where free-form behavior is desired.
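A rough sketch of the network pattern, assuming agents are peers that push what they know to their neighbors with no central coordinator. `PeerAgent` and the facts it holds are invented for illustration.

```python
class PeerAgent:
    """An agent that shares its context directly with connected peers."""

    def __init__(self, name, facts):
        self.name = name
        self.facts = set(facts)
        self.peers = []

    def connect(self, other):
        # Connections are bidirectional; there is no hierarchy.
        self.peers.append(other)
        other.peers.append(self)

    def share(self):
        # Push everything this agent knows to each neighbor.
        for peer in self.peers:
            peer.facts |= self.facts


a = PeerAgent("a", {"price rose"})
b = PeerAgent("b", {"demand fell"})
c = PeerAgent("c", {"supply is flat"})
a.connect(b)
b.connect(c)  # a and c are not directly connected

# Two rounds are enough here for context to spread across the chain a-b-c.
for _ in range(2):
    for agent in (a, b, c):
        agent.share()

print(a.facts == b.facts == c.facts)
```

Even in this toy version, context reaches agents that never talk directly, which is both the appeal of the pattern and the reason its behavior is harder to predict than the others.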

Hierarchical:

A top-level planner agent delegates subtasks to workers, tracks their progress, and makes final calls. This is exactly like a manager and their team.
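A minimal sketch of the hierarchical pattern: a planner splits a goal into subtasks, delegates each to a worker, tracks progress, and makes the final call. The class name, the fixed two-step plan, and the stub workers are assumptions; a real planner would typically generate the plan with an LLM.

```python
def research_worker(subtask):
    return f"notes on {subtask}"


def writing_worker(subtask):
    return f"draft of {subtask}"


class PlannerAgent:
    """Top-level agent that delegates subtasks and tracks their status."""

    def __init__(self, workers):
        self.workers = workers
        self.progress = {}

    def plan(self, goal):
        # Fixed plan for illustration: research first, then write.
        return [("research", goal), ("write", goal)]

    def run(self, goal):
        results = []
        for role, subtask in self.plan(goal):
            self.progress[role] = "running"
            results.append(self.workers[role](subtask))
            self.progress[role] = "done"
        # Final call: approve only if every delegated subtask finished.
        approved = all(status == "done" for status in self.progress.values())
        return {"approved": approved, "results": results}


planner = PlannerAgent({"research": research_worker, "write": writing_worker})
outcome = planner.run("a market report")
print(outcome)
```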

When picking a pattern for a multi-agent system (assuming the problem needs an agent at all, and needs more than one), the question we keep coming back to is not which pattern looks coolest, but which one minimizes friction between agents.

It’s easy to spin up 10 agents and call it a team. What’s hard is designing the communication flow so that:

No two agents duplicate work.

Every agent knows when to act and when to wait.

The system collectively feels smarter than any individual part.

👉 Over to you: Which patterns do you use the most?

Implement ReAct Agentic Pattern from Scratch

The above discussion focused on how multiple agents collaborate.

But it’s equally important to understand what happens inside each agent.

Because no matter how sophisticated your orchestration is, if each agent can’t reason, reflect, and act intelligently, the entire system collapses into chaos.

ReAct is one of the most popular agentic patterns.

To understand this, consider the output of a multi-agent system below:

As shown above, the individual Agents are going through a series of thought activities before producing a response.

This is the ReAct pattern in action!

More specifically, under the hood, many such frameworks use the ReAct (Reasoning and Acting) pattern to let an LLM think through problems and use tools to act on the world.

This enhances an LLM agent’s ability to handle complex tasks and decisions by combining chain-of-thought reasoning with external tool use.

We have also seen this being asked in several LLM interview questions.

Thus, in this article, we explain the entire process of building a ReAct agent from scratch using only Python and an LLM.

Not only that, it also covers:

The entire ReAct loop pattern (Thought → Action → Observation → Answer), which powers intelligent decision-making in many agentic systems.

How to structure a system prompt that teaches the LLM to think step-by-step and call tools deterministically.

How to implement a lightweight agent class that keeps track of conversations and interfaces with the LLM.

A fully manual ReAct loop for transparency and debugging.

A fully automated agent_loop() controller that parses the agent’s reasoning and executes tools behind the scenes.
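To give a feel for the Thought → Action → Observation → Answer cycle, here is a minimal sketch of such a controller. It is not the linked article's implementation: only the name `agent_loop` comes from the text above, while the message format, the `calculator` tool, and `scripted_llm` (a canned stand-in for a real LLM call) are assumptions made for this example.

```python
import re


def calculator(expression):
    """Toy tool: multiplies two integers written as 'a * b'."""
    left, right = expression.split("*")
    return str(int(left) * int(right))


TOOLS = {"calculator": calculator}
ACTION_RE = re.compile(r"^Action: (\w+): (.*)$", re.MULTILINE)


def scripted_llm(messages):
    """Stand-in for an LLM: asks for a tool first, then answers."""
    last = messages[-1]
    if last.startswith("Observation:"):
        value = last.split(": ", 1)[1]
        return f"Thought: I have the result.\nAnswer: {value}"
    return "Thought: I should multiply.\nAction: calculator: 6 * 7"


def agent_loop(question, llm, max_turns=5):
    """Run the ReAct cycle until the model answers or turns run out."""
    messages = [f"Question: {question}"]
    for _ in range(max_turns):
        reply = llm(messages)
        messages.append(reply)
        if "Answer:" in reply:
            return reply.split("Answer:", 1)[1].strip()
        match = ACTION_RE.search(reply)
        if match is None:
            return None  # no answer and no parsable action: give up
        tool_name, tool_input = match.groups()
        observation = TOOLS[tool_name](tool_input)
        messages.append(f"Observation: {observation}")
    return None


answer = agent_loop("What is 6 times 7?", scripted_llm)
print(answer)
```

Swapping `scripted_llm` for a function that calls a real model is the only change needed to turn this into a working agent, provided the system prompt teaches the model to emit the same `Action: tool: input` format.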

Read here: Implement ReAct Pattern from scratch →

Thanks for reading!
