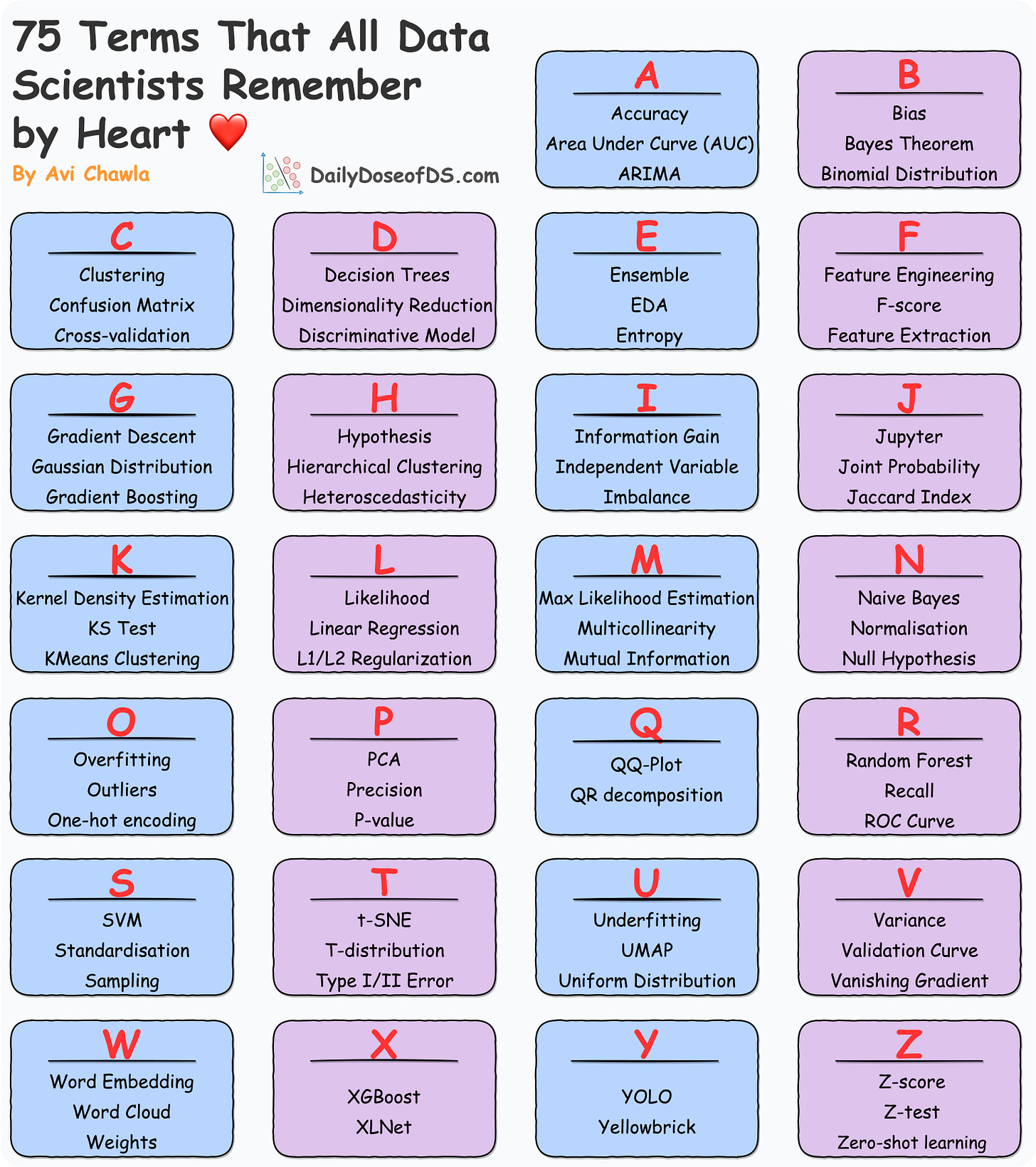

75 Key Terms That All Data Scientists Remember By Heart

Must-know concepts/terms in data science.

Data science has a diverse glossary. The sheet lists the 75 most common and important terms that data scientists use almost every day.

Thus, being aware of them is extremely crucial.

A:

Accuracy: Measure of the correct predictions divided by the total predictions.

Area Under Curve: Metric representing the area under the Receiver Operating Characteristic (ROC) curve, used to evaluate classification models.

ARIMA: Autoregressive Integrated Moving Average, a time series forecasting method.

B:

Bias: The difference between the true value and the predicted value in a statistical model.

Bayes Theorem: Probability formula that calculates the likelihood of an event based on prior knowledge.

Binomial Distribution: Probability distribution that models the number of successes in a fixed number of independent Bernoulli trials.

C:

Clustering: Grouping data points based on similarities.

Confusion Matrix: Table used to evaluate the performance of a classification model.

Cross-validation: Technique to assess model performance by dividing data into subsets for training and testing.

D:

Decision Trees: Tree-like model used for classification and regression tasks.

Dimensionality Reduction: Process of reducing the number of features in a dataset while preserving important information.

Discriminative Models: Models that learn the boundary between different classes.

E:

Ensemble Learning: Technique that combines multiple models to improve predictive performance.

EDA (Exploratory Data Analysis): Process of analyzing and visualizing data to understand its patterns and properties.

Entropy: Measure of uncertainty or randomness in information.

F:

Feature Engineering: Process of creating new features from existing data to improve model performance.

F-score: Metric that balances precision and recall for binary classification.

Feature Extraction: Process of automatically extracting meaningful features from data.

G:

Gradient Descent: Optimization algorithm used to minimize a function by adjusting parameters iteratively.

Gaussian Distribution: Normal distribution with a bell-shaped probability density function.

Gradient Boosting: Ensemble learning method that builds multiple weak learners sequentially.

H:

Hypothesis: Testable statement or assumption in statistical inference.

Hierarchical Clustering: Clustering method that organizes data into a tree-like structure.

Heteroscedasticity: Unequal variance of errors in a regression model.

I:

Information Gain: Measure used in decision trees to determine the importance of a feature.

Independent Variable: Variable that is manipulated in an experiment to observe its effect on the dependent variable.

Imbalance: Situation where the distribution of classes in a dataset is not equal.

J:

Jupyter: Interactive computing environment used for data analysis and machine learning.

Joint Probability: Probability of two or more events occurring together.

Jaccard Index: Measure of similarity between two sets.

K:

Kernel Density Estimation: Non-parametric method to estimate the probability density function of a continuous random variable.

KS Test (Kolmogorov-Smirnov Test): Non-parametric test to compare two probability distributions.

KMeans Clustering: Partitioning data into K clusters based on similarity.

L:

Likelihood: Chance of observing the data given a specific model.

Linear Regression: Statistical method for modeling the relationship between dependent and independent variables.

L1/L2 Regularization: Techniques to prevent overfitting by adding penalty terms to the model's loss function.

M:

Maximum Likelihood Estimation: Method to estimate the parameters of a statistical model.

Multicollinearity: A situation where two or more independent variables are highly correlated in a regression model.

Mutual Information: Measure of the amount of information shared between two variables.

N:

Naive Bayes: Probabilistic classifier based on Bayes Theorem with the assumption of feature independence.

Normalization: Scaling data to have a mean of 0 and standard deviation of 1.

Null Hypothesis: Hypothesis of no significant difference or effect in statistical testing.

O:

Overfitting: When a model performs well on training data but poorly on new, unseen data.

Outliers: Data points that significantly differ from other data points in a dataset.

One-hot encoding: Process of converting categorical variables into binary vectors.

P:

PCA (Principal Component Analysis): Dimensionality reduction technique to transform data into orthogonal components.

Precision: Proportion of true positive predictions among all positive predictions in a classification model.

p-value: Probability of observing a result at least as extreme as the one obtained if the null hypothesis is true.

Q:

QQ-plot (Quantile-Quantile Plot): Graphical tool to compare the distribution of two datasets.

QR decomposition: Factorization of a matrix into an orthogonal and an upper triangular matrix.

R:

Random Forest: Ensemble learning method using multiple decision trees to make predictions.

Recall: Proportion of true positive predictions among all actual positive instances in a classification model.

ROC Curve (Receiver Operating Characteristic Curve): Graph showing the performance of a binary classifier at different thresholds.

S:

SVM (Support Vector Machine): Supervised machine learning algorithm used for classification and regression.

Standardisation: Scaling data to have a mean of 0 and a standard deviation of 1.

Sampling: Process of selecting a subset of data points from a larger dataset.

T:

t-SNE (t-Distributed Stochastic Neighbor Embedding): Dimensionality reduction technique for visualizing high-dimensional data in lower dimensions.

t-distribution: Probability distribution used in hypothesis testing when the sample size is small.

Type I/II Error: Type I error is a false positive, and Type II error is a false negative in hypothesis testing.

U:

Underfitting: When a model is too simple to capture the underlying patterns in the data.

UMAP (Uniform Manifold Approximation and Projection): Dimensionality reduction technique for visualizing high-dimensional data.

Uniform Distribution: Probability distribution where all outcomes are equally likely.

V:

Variance: Measure of the spread of data points around the mean.

Validation Curve: Graph showing how model performance changes with different hyperparameter values.

Vanishing Gradient: Issue in deep neural networks when gradients become very small during training.

W:

Word embedding: Representation of words as dense vectors in natural language processing.

Word cloud: Visualization of text data where word frequency is represented through the size of the word.

Weights: Parameters that are learned by a machine learning model during training.

X:

XGBoost: Extreme Gradient Boosting, a popular gradient boosting library.

XLNet: Generalized Autoregressive Pretraining of Transformers, a language model.

Y:

YOLO (You Only Look Once): Real-time object detection system.

Yellowbrick: Python library for machine learning visualization and diagnostic tools.

Z:

Z-score: Standardized value representing how many standard deviations a data point is from the mean.

Z-test: Statistical test used to compare a sample mean to a known population mean.

Zero-shot learning: Machine learning method where a model can recognize new classes without seeing explicit examples during training.

👉 Over to you: Of course, a lot has been left out here. As an exercise, can you add more terms to this?

👉 If you liked this post, don’t forget to leave a like ❤️. It helps more people discover this newsletter on Substack and tells me that you appreciate reading these daily insights. The button is located towards the bottom of this email.

👉 Tell the world what makes this newsletter special for you by leaving a review here :)

👉 If you love reading this newsletter, feel free to share it with friends!

👉 Sponsor the Daily Dose of Data Science Newsletter. More info here: Sponsorship details.

Find the code for my tips here: GitHub.

I like to explore, experiment and write about data science concepts and tools. You can read my articles on Medium. Also, you can connect with me on LinkedIn and Twitter.