A Common Misconception About Log Transformation

...and here's what it does.

Log transform is commonly used to eliminate skewness in data.

Yet, it is not always the ideal solution for eliminating skewness.

It is important to note that log transform:

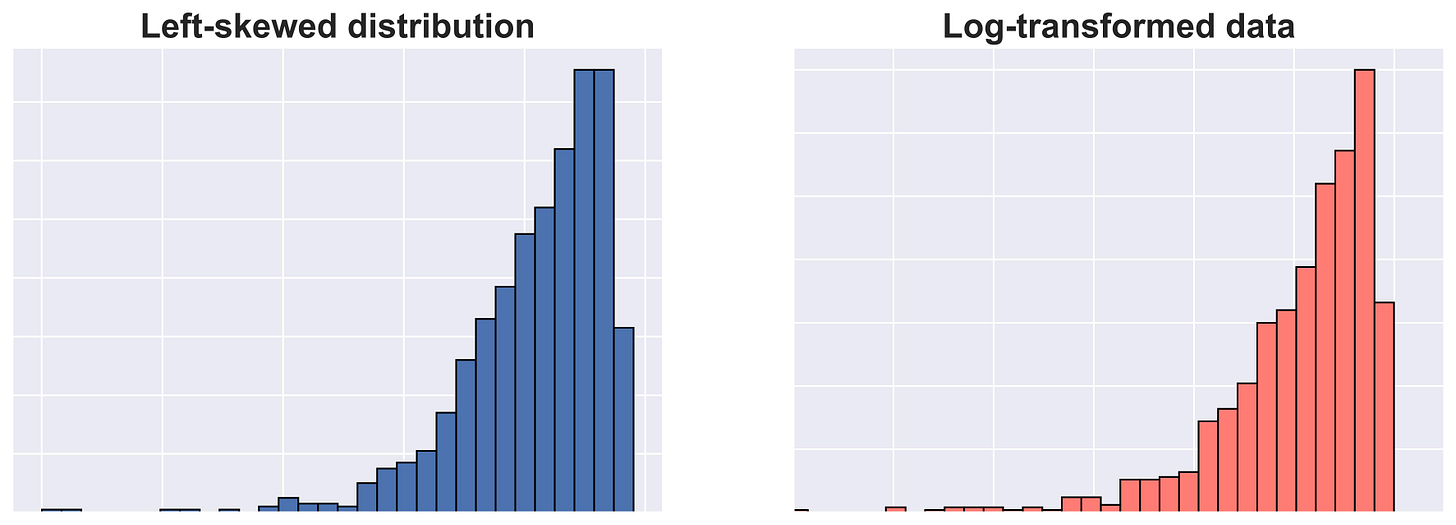

Does not eliminate left-skewness.

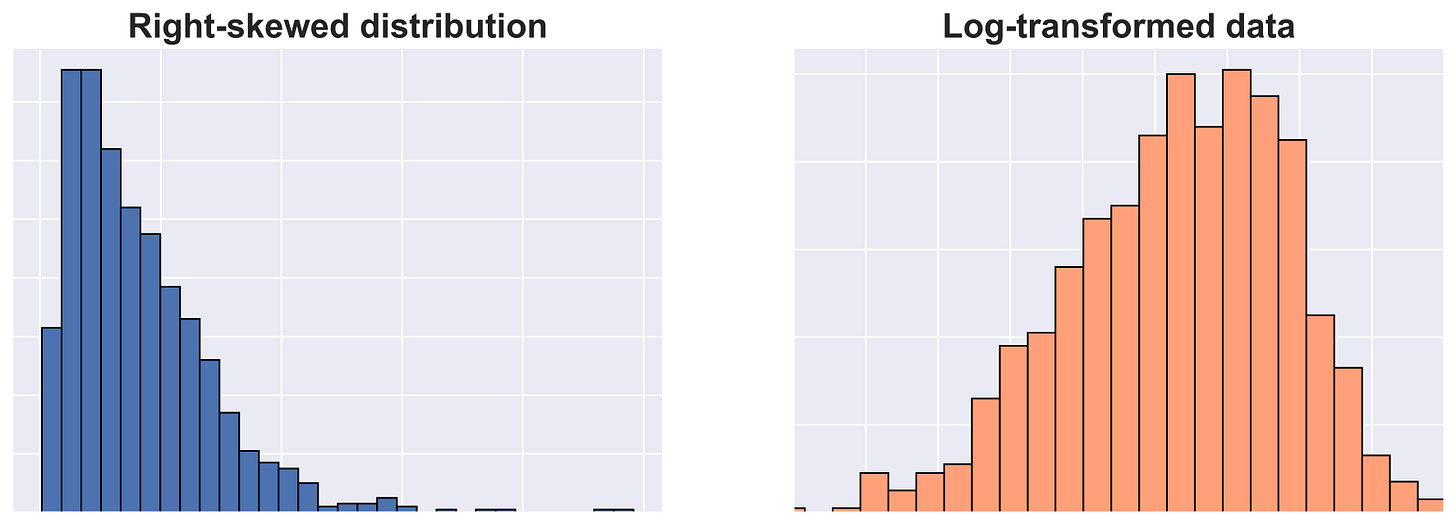

Works only for right-skewness, and even then mainly when the values are small and positive.

This is also evident from the image above.

This is because the log function grows faster for lower values (its slope, 1/x, shrinks as x grows). Thus, it stretches out the lower values more than the higher values.
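A quick way to see this stretching is to compare two gaps of the same width, one among small values and one among large values. The snippet below is only an illustrative sketch; the specific numbers (1, 2, 1000, 1001) are arbitrary choices, not from the original post.

```python
import numpy as np

# Two gaps of equal width (1 unit) on the original scale:
# one among small values, one among large values.
small_gap = np.log(2.0) - np.log(1.0)
large_gap = np.log(1001.0) - np.log(1000.0)

print(f"log(2) - log(1)       = {small_gap:.4f}")
print(f"log(1001) - log(1000) = {large_gap:.4f}")
```

After the transform, the gap near 1 is roughly 0.69 wide, while the equally wide gap near 1000 shrinks to roughly 0.001.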

As a result:

In case of left-skewness:

The tail lies to the left, so it gets stretched out more than the values to the right.

Thus, the left skew persists and can even get worse.

In case of right-skewness:

The majority of values and the peak lie to the left, so they get stretched out more.

The tail lies to the right, where the values are large and the log function grows slowly. Thus, the tail is stretched far less than the bulk, which pulls it in and reduces the skew.
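To check this reasoning on data, here is a minimal sketch using synthetic samples. The distributions are assumptions chosen purely for illustration (a log-normal sample as the right-skewed case, a Beta(5, 1) sample as the left-skewed case), and skewness is measured with `scipy.stats.skew`.

```python
import numpy as np
from scipy.stats import skew

rng = np.random.default_rng(0)

# Assumed synthetic data, both strictly positive:
right_skewed = rng.lognormal(mean=0.0, sigma=1.0, size=10_000)  # long right tail
left_skewed = rng.beta(5.0, 1.0, size=10_000)                   # long left tail

# Sample skewness before and after the log transform.
skew_right_before = skew(right_skewed)
skew_right_after = skew(np.log(right_skewed))
skew_left_before = skew(left_skewed)
skew_left_after = skew(np.log(left_skewed))

print(f"right-skewed: {skew_right_before:.2f} -> {skew_right_after:.2f}")
print(f"left-skewed:  {skew_left_before:.2f} -> {skew_left_after:.2f}")
```

On samples like these, the right-skewed data should end up with skewness close to zero, while the left-skewed data should stay negatively skewed (here it becomes more so).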

There are a few things you can do:

See if the transformation can be avoided, as it inhibits interpretability.

If not, try the Box-Cox transform. It is often quite effective for both left-skewed and right-skewed data, provided the values are strictly positive. You can apply it with SciPy's implementation (scipy.stats.boxcox): SciPy docs.
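A minimal sketch of Box-Cox with `scipy.stats.boxcox` follows. The synthetic samples are assumptions for illustration only (log-normal for right skew, Beta(5, 1) for left skew). When called without a `lmbda` argument, the function returns both the transformed data and the fitted lambda.

```python
import numpy as np
from scipy.stats import boxcox, skew

rng = np.random.default_rng(0)

# Assumed synthetic data; Box-Cox requires strictly positive values.
right_skewed = rng.lognormal(mean=0.0, sigma=1.0, size=10_000)
left_skewed = rng.beta(5.0, 1.0, size=10_000)

# With lmbda left unspecified, SciPy estimates it by maximum likelihood.
right_bc, right_lambda = boxcox(right_skewed)
left_bc, left_lambda = boxcox(left_skewed)

print(f"right-skewed: lambda={right_lambda:.2f}, "
      f"skew {skew(right_skewed):.2f} -> {skew(right_bc):.2f}")
print(f"left-skewed:  lambda={left_lambda:.2f}, "
      f"skew {skew(left_skewed):.2f} -> {skew(left_bc):.2f}")
```

Because lambda is fitted per dataset, the same call can compress a right tail (lambda near 0 behaves like a log) or a left tail (lambda above 1), which is why it handles both directions of skew.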

👉 Over to you: What are some other ways to eliminate skewness?


It's true, when you log transform, interpretability goes out the window. Would it be possible to have a post on how to interpret model coefficients after the target variable was transformed using np.log10?

Can I convert it back?