A Hands-on Guide to Agent Communication Protocol

...explained with step-by-step code.

Today, let’s learn how you can use the Agent Communication Protocol (ACP) to allow two agents (built in different frameworks) to work together.

For starters, ACP is a standardized, RESTful interface for Agents to discover and coordinate with other Agents, regardless of their framework.

In short, like A2A, it lets Agents communicate with other Agents.
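To make "RESTful" concrete, here is a minimal sketch of what the JSON body of a run request might look like. The `/runs` path, the field names (`agent_name`, `input`, `role`, `parts`, `content`, `content_type`, `mode`), and port 8000 reflect our reading of the ACP REST interface and should be treated as assumptions; verify them against the version of the spec you install. The helper `build_run_request`, the agent name, and the topic are hypothetical.

```python
import json


def build_run_request(agent_name: str, text: str) -> dict:
    """Build the JSON body for a synchronous run of one agent (assumed shape)."""
    return {
        "agent_name": agent_name,  # the name the agent is discoverable under
        "input": [
            {
                "role": "user",
                "parts": [{"content": text, "content_type": "text/plain"}],
            }
        ],
        "mode": "sync",  # assumption: wait for the full result in one response
    }


if __name__ == "__main__":
    body = build_run_request("research_drafter", "Marine biodiversity")
    # This body would be POSTed to something like http://localhost:8000/runs
    # (path and port are assumptions, not taken from the original code).
    print(json.dumps(body, indent=2))
```

Because the request is plain JSON over HTTP, any framework that can serve or call a REST endpoint can take part, which is what makes the protocol framework-agnostic.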

We’ll create a research summary generator, where:

Agent 1 (built using CrewAI) drafts a general topic summary.

Agent 2 (built using Smolagents) fact-checks and enhances it using web search.

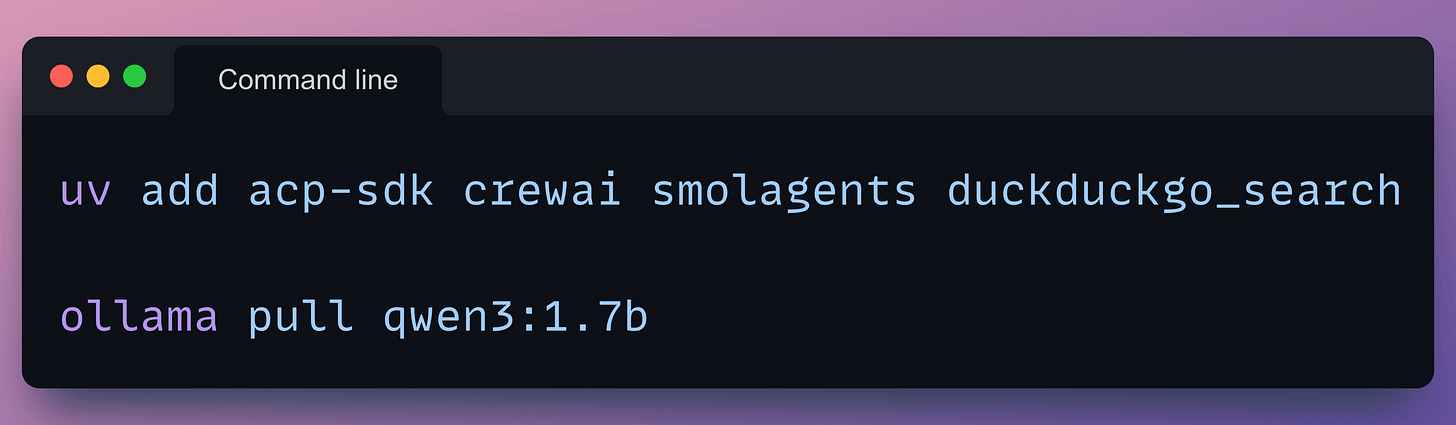

Start by installing some dependencies and a local LLM using Ollama:

In our case, we’ll have two servers, and each server will host one Agent.

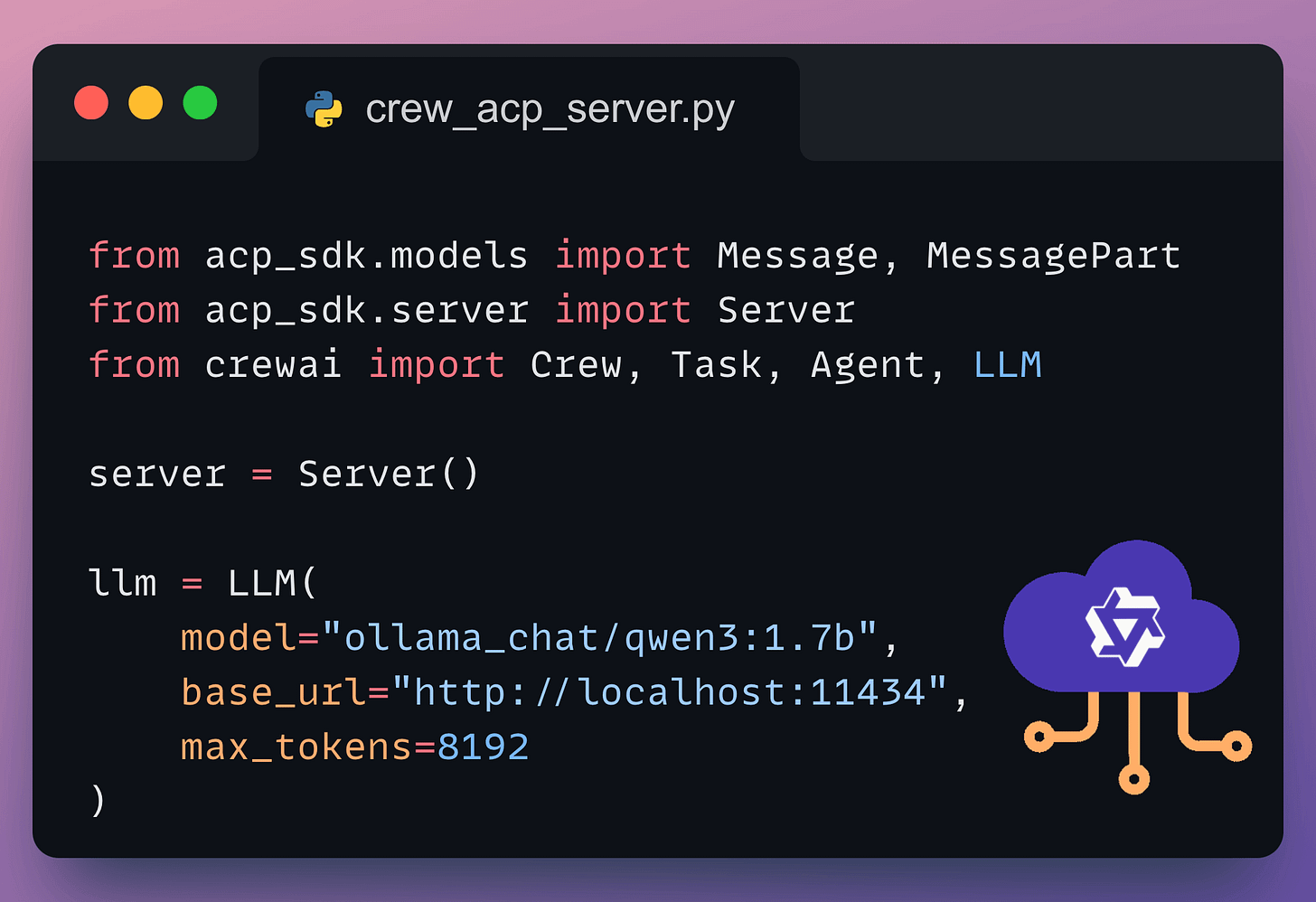

Let’s define the server that will host the CrewAI Agent and its LLM:

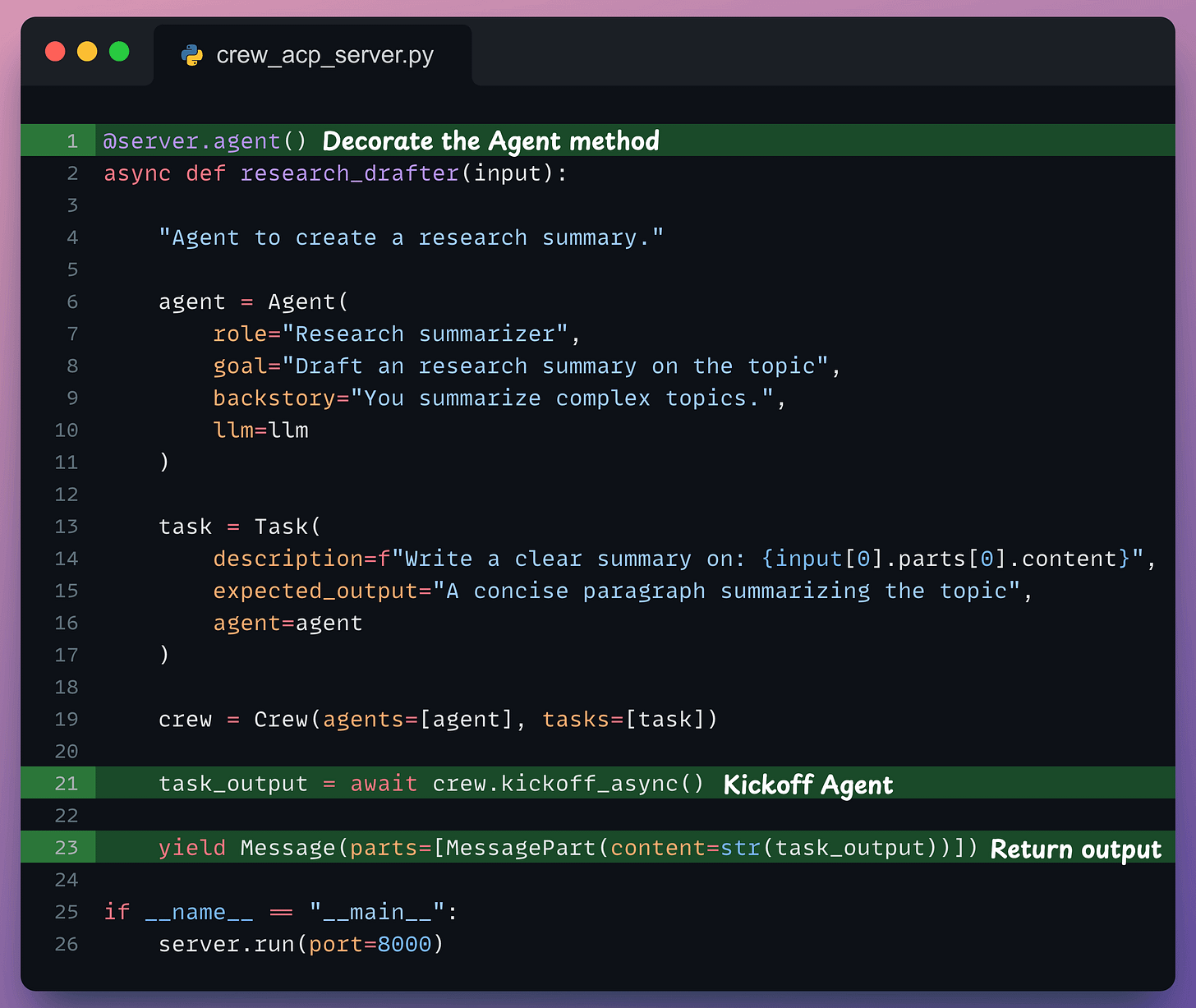

In ACP, your Agent should be enclosed within a method decorated with @server.agent().

We do this below:

Line 1 → Decorate the method.

Line 2 → Declare the method with an input parameter. Note that the method's name is used as the agent name for discovery via ACP.

Lines 6-21 → Build the Agent and kick off the Crew.

Line 23 → Return the output in the expected ACP format.

Line 26 → Serve on a REST-based ACP server running locally.

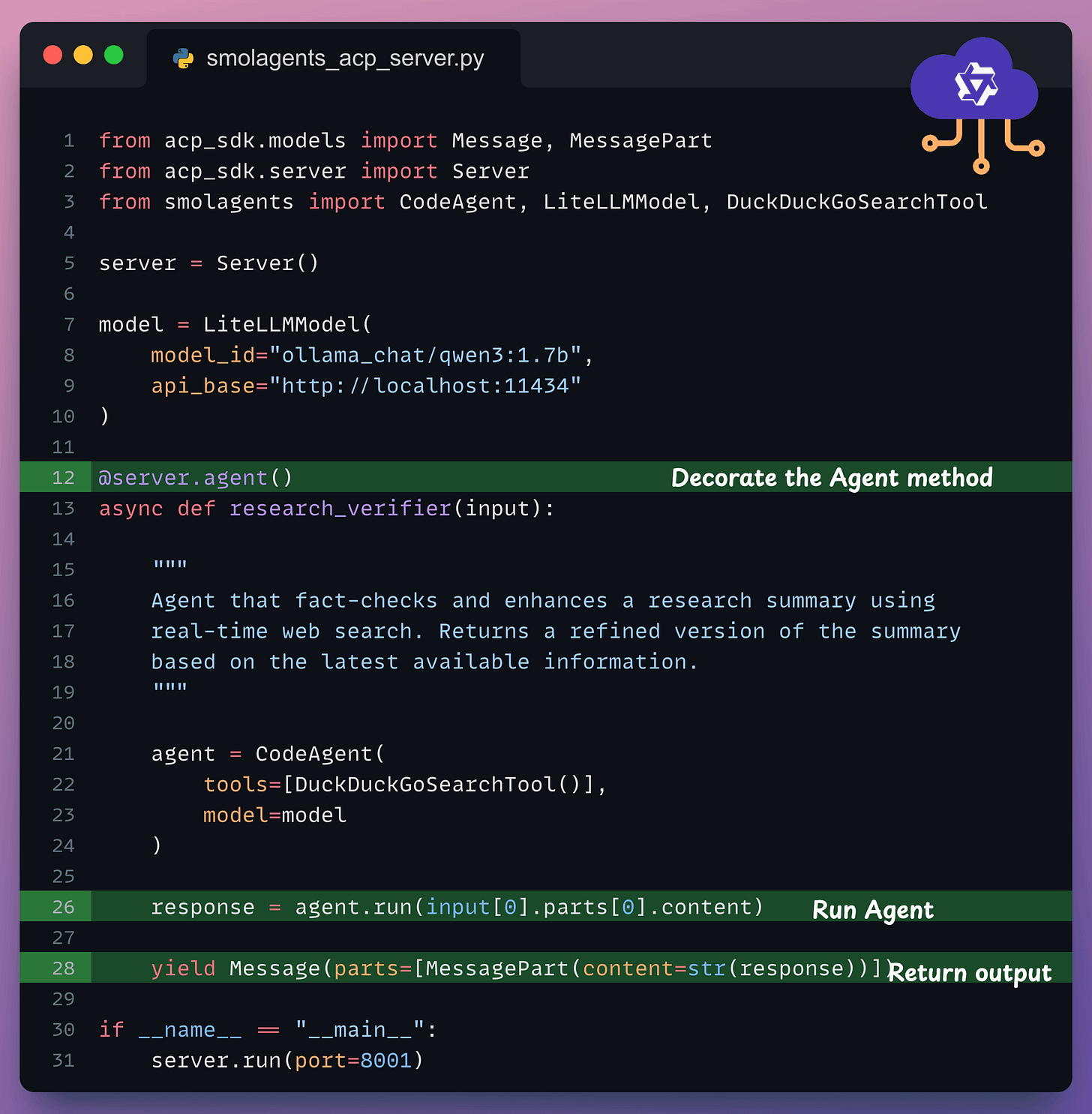
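The point made for Line 2, that the method name doubles as the agent name, is easier to see in a stripped-down form. The sketch below is not the ACP SDK: `ToyServer`, `list_agents`, `invoke`, and `research_drafter` are hypothetical stand-ins written with only the standard library, to show how a decorator can register a function under its own name so that a client can later discover and call it.

```python
from typing import Callable, Dict, List

AgentFn = Callable[[str], str]


class ToyServer:
    """Hypothetical stand-in for an agent server; NOT the real ACP SDK."""

    def __init__(self) -> None:
        self._agents: Dict[str, AgentFn] = {}

    def agent(self) -> Callable[[AgentFn], AgentFn]:
        """Return a decorator that registers a function under its own name."""
        def register(fn: AgentFn) -> AgentFn:
            self._agents[fn.__name__] = fn
            return fn
        return register

    def list_agents(self) -> List[str]:
        """Discovery: the names a client could look up."""
        return sorted(self._agents)

    def invoke(self, name: str, text: str) -> str:
        """Dispatch a request to the agent registered under `name`."""
        return self._agents[name](text)


server = ToyServer()


@server.agent()
def research_drafter(topic: str) -> str:
    # A real agent would call an LLM here; this stub just formats a string.
    return f"Draft summary about {topic}"


if __name__ == "__main__":
    print(server.list_agents())
    print(server.invoke("research_drafter", "ACP"))
```

Renaming the function changes the name clients must use, so it is worth choosing a descriptive one up front.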

Next, we repeat similar steps for the second server that will host the Smolagents Agent and its LLM:

Lines 1-10 → Some imports, defining the Server and the LLM.

Line 12 → Decorate the method.

Lines 21-28 → Build the Agent with a web search tool, run the Agent, and return the output.

Line 31 → Serve on a REST-based ACP server running locally.

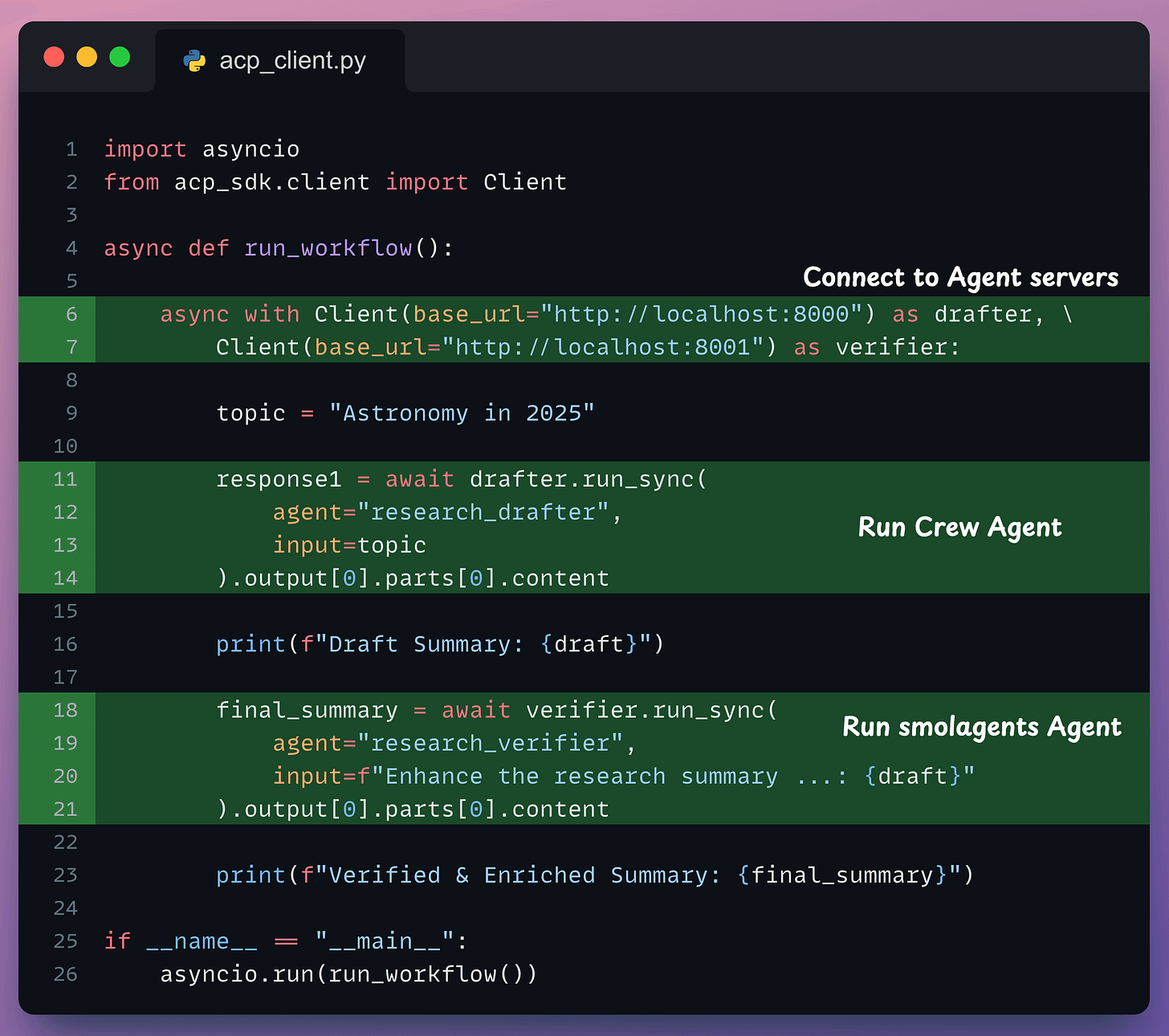

Finally, we use an ACP client to connect both agents in a simple workflow.

Lines 6-7 → Connect the client to both servers.

Lines 11-14 → The client invokes the first agent and receives an output.

Lines 18-21 → The output is passed to the second agent for enhancement.

Done!

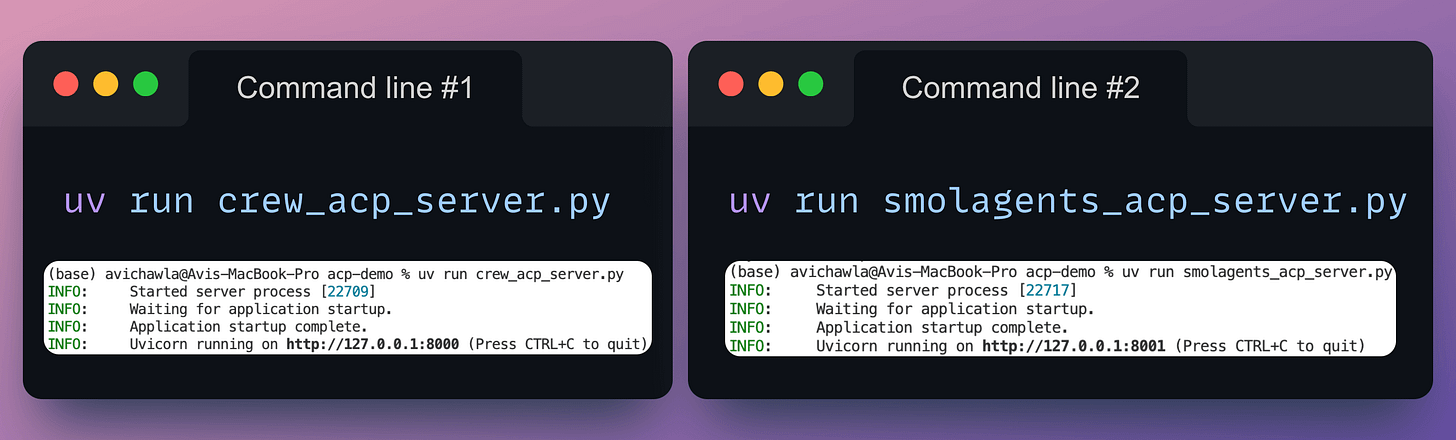
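The client workflow boils down to three moves: call the first agent, take the text it returns, and send that text to the second agent. Below is a minimal sketch of that hand-off using a stub coroutine in place of real network calls. `run_workflow`, `fake_call_agent`, the agent names, and ports 8000 and 8001 are assumptions made for illustration, not values taken from the original code.

```python
import asyncio
from typing import Awaitable, Callable

# A function that sends `text` to `agent` at `base_url` and returns the reply text.
CallAgent = Callable[[str, str, str], Awaitable[str]]


async def run_workflow(call_agent: CallAgent, topic: str) -> str:
    """Draft with the first agent, then pass the draft to the second agent."""
    draft = await call_agent("http://localhost:8000", "research_drafter", topic)
    enhanced = await call_agent("http://localhost:8001", "research_verifier", draft)
    return enhanced


async def fake_call_agent(base_url: str, agent: str, text: str) -> str:
    """Stub transport so the sketch runs without any servers."""
    return f"[{agent}] {text}"


if __name__ == "__main__":
    print(asyncio.run(run_workflow(fake_call_agent, "ACP vs A2A")))
```

Swapping `fake_call_agent` for a function that uses a real ACP client leaves `run_workflow` unchanged, since the workflow only depends on "send text, get text back".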

Run your servers as follows:

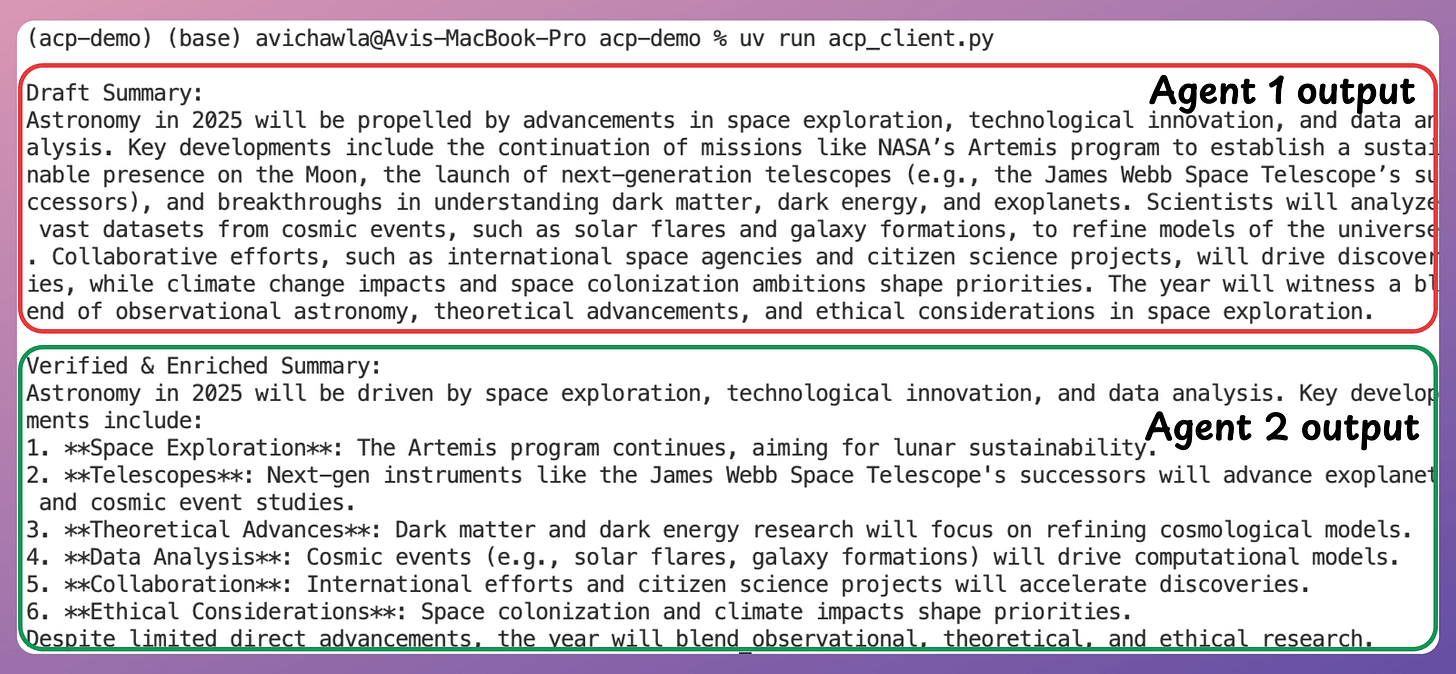

Then run the client to get an output from a system that’s powered by ACP.

This demo showcases how you can use ACP to enable Agents to communicate via a standardized protocol, even if they are built using different frameworks.

Here’s how ACP is different from A2A:

ACP is built for local-first, low-latency communication; A2A is optimized for web-native, cross-vendor interoperability.

ACP uses structured RESTful interfaces; A2A supports more flexible, natural interactions.

ACP excels in controlled, edge, or team-specific setups; A2A shines in broader cloud-based collaboration.

The code for this issue is available in this GitHub repo →

👉 Over to you: Would you like us to cover more content on ACP in future issues?

Thanks for reading!
