A Simple and Intuitive Guide to Understanding Precision and Recall

Using the mindset technique.

I have seen many folks struggling to intuitively understand Precision and Recall.

These fairly straightforward metrics often intimidate people.

Yet, adopting the Mindset Technique can be incredibly helpful.

Let me walk you through it today.

For simplicity, we’ll call our class of interest the “Positive class.”

Precision

Formally, Precision answers the following question:

“What proportion of positive predictions were actually positive?”
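In terms of counts, Precision = TP / (TP + FP). True positives (TP) are positive predictions that are actually positive, and false positives (FP) are positive predictions that are actually negative. Here is a minimal Python sketch of that formula, assuming labels are encoded as 1 for the positive class and 0 otherwise. The function name `precision` and the toy labels are illustrative; in practice, scikit-learn’s `precision_score` computes the same quantity for binary labels.

```python
def precision(y_true, y_pred):
    """Fraction of positive predictions (1) that are actually positive."""
    # True positives: predicted 1 and actually 1
    tp = sum(1 for t, p in zip(y_true, y_pred) if p == 1 and t == 1)
    # False positives: predicted 1 but actually 0
    fp = sum(1 for t, p in zip(y_true, y_pred) if p == 1 and t == 0)
    # Undefined when there are no positive predictions; return 0.0 by convention here
    return tp / (tp + fp) if (tp + fp) > 0 else 0.0


y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]
# 2 of the 3 positive predictions are correct, so this prints 0.6666666666666666
print(precision(y_true, y_pred))
```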

Let’s understand that from a mindset perspective.

When you are in a Precision Mindset, you don’t care about getting every positive sample correctly classified.

But every positive prediction you make should actually be positive.

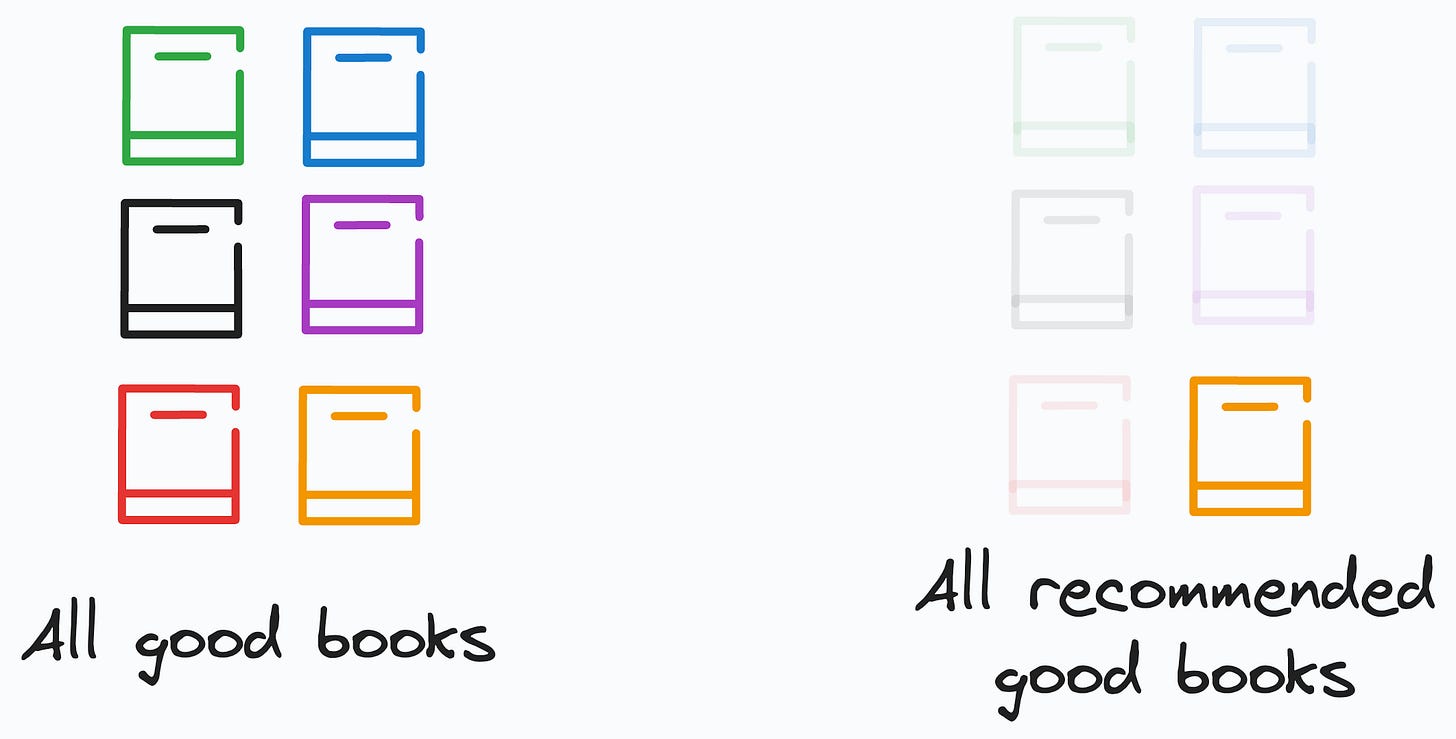

The illustration above is an example of high Precision. All positive predictions are indeed positive, even though some positives have been left out.

For instance, consider a book recommendation system. Say a positive prediction means the model believes you’d like the recommended book.

In a Precision Mindset, you are okay if the model does not recommend all good books in the world.

But what it recommends should be good.

So even if the system recommended only one book and you liked it, its Precision would be 100%.

This is because what it classified as “Positive” was indeed “Positive.”
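Here is the book example as arithmetic, using a hypothetical catalog of ten books, four of which you would like. The system recommends just one book, and you like it. The catalog and variable names are made up for illustration.

```python
# Hypothetical catalog of 10 books: 1 = you'd like it, 0 = you wouldn't
liked = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
# The system recommends only the first book (1 = recommended)
recommended = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

# Recommended and liked
tp = sum(1 for l, r in zip(liked, recommended) if r == 1 and l == 1)
# Recommended but not liked
fp = sum(1 for l, r in zip(liked, recommended) if r == 1 and l == 0)

book_precision = tp / (tp + fp)  # 1 / (1 + 0)
print(f"Precision: {book_precision:.0%}")  # Precision: 100%
```

Notice that the three liked books the system never recommended do not appear anywhere in the Precision formula. That is exactly why a Precision Mindset tolerates left-out positives.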

To summarize, in a high Precision Mindset, all positive predictions should actually be positive.

Recall

Recall is a bit different. It answers the following question:

“What proportion of actual positives was identified correctly by the model?”
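In terms of counts, Recall = TP / (TP + FN), where false negatives (FN) are actual positives that the model predicted as negative. The only change from Precision is the denominator: it counts actual positives instead of positive predictions. A minimal sketch, with the same assumed 1/0 label encoding and an illustrative function name (scikit-learn’s `recall_score` covers this in practice):

```python
def recall(y_true, y_pred):
    """Fraction of actual positives (1) that the model predicted as positive."""
    # True positives: actually 1 and predicted 1
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    # False negatives: actually 1 but predicted 0 (missed positives)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    # Undefined when there are no actual positives; return 0.0 by convention here
    return tp / (tp + fn) if (tp + fn) > 0 else 0.0


y_true = [1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0]
# 3 of the 4 actual positives were found, so this prints 0.75
print(recall(y_true, y_pred))
```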

When you are in a Recall Mindset, you care about getting each and every positive sample correctly classified.

It’s okay if some positive predictions were not actually positive.

But all positive samples should get classified as positive.

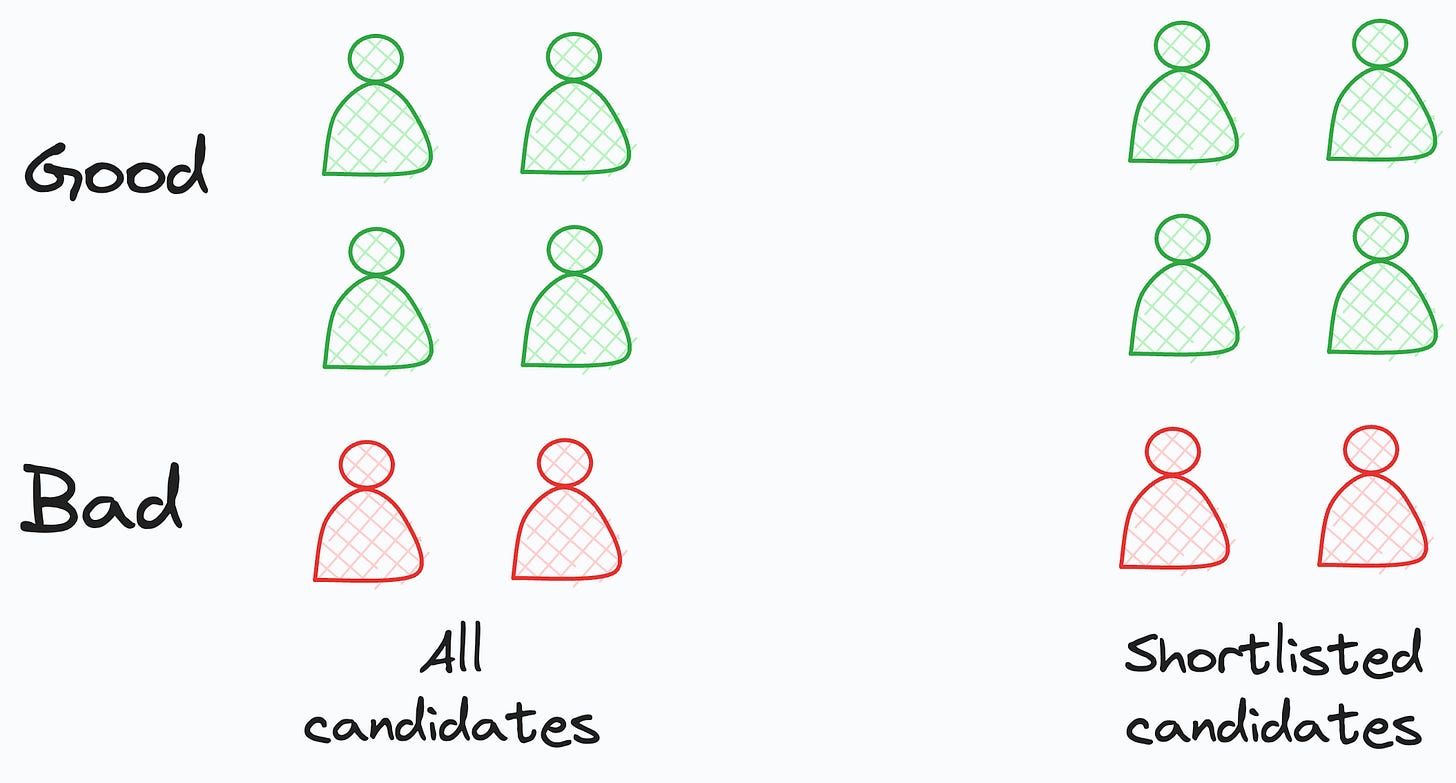

The illustration above is an example of high Recall. All actual positives were classified as positive, even though some negatives were also classified as positive.

For instance, consider an interview shortlisting system that screens candidates by their resumes. A positive prediction means that the candidate should be invited for an interview.

In a Recall Mindset, you are okay if the model selects some incompetent candidates.

But it should not miss out on inviting any skilled candidate.

So even if the system deems every candidate (good or bad) fit for an interview, its Recall is 100%.

This is because it didn’t miss out on any of the positive samples.
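The invite-everyone system can be checked the same way. The applicant pool below is hypothetical (three skilled candidates out of eight), chosen only to show the arithmetic:

```python
# Hypothetical pool of 8 applicants: 1 = skilled, 0 = not skilled
skilled = [1, 0, 1, 0, 0, 1, 0, 0]
# A system that invites everyone, regardless of the resume
invited = [1] * len(skilled)

tp = sum(1 for s, i in zip(skilled, invited) if i == 1 and s == 1)  # skilled and invited
fp = sum(1 for s, i in zip(skilled, invited) if i == 1 and s == 0)  # invited but not skilled
fn = sum(1 for s, i in zip(skilled, invited) if i == 0 and s == 1)  # skilled but not invited

interview_recall = tp / (tp + fn)     # 3 / (3 + 0)
interview_precision = tp / (tp + fp)  # 3 / (3 + 5)
print(f"Recall: {interview_recall:.0%}")        # Recall: 100%
print(f"Precision: {interview_precision:.1%}")  # Precision: 37.5%
```

Recall is perfect because nothing was missed, yet fewer than half of the invitations went to skilled candidates. That low Precision is the price a Recall Mindset accepts.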

Which metric to optimize depends entirely on what’s important to the problem at hand:

Optimize Precision if:

You care about getting ONLY high-quality positive predictions.

You are okay if some quality (or positive) samples are left out.

Optimize Recall if:

You care about capturing ALL quality (or positive) samples.

You are okay if some non-quality (or negative) samples also come along.
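To see the two mindsets side by side, here is a sketch comparing two hypothetical models on the same made-up labels: a cautious one that flags only samples it is sure about, and a liberal one that flags generously. The models, data, and the helper name `precision_recall` are illustrative, not from a real system.

```python
def precision_recall(y_true, y_pred):
    """Return (precision, recall) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    # Fall back to 0.0 when a denominator is zero
    prec = tp / (tp + fp) if tp + fp else 0.0
    rec = tp / (tp + fn) if tp + fn else 0.0
    return prec, rec


# Hypothetical ground truth: 4 positives, 6 negatives
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
# Cautious model: flags only two samples, both truly positive
cautious = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
# Liberal model: flags every positive plus three negatives
liberal = [1, 1, 1, 1, 1, 1, 1, 0, 0, 0]

for name, y_pred in [("cautious", cautious), ("liberal", liberal)]:
    prec, rec = precision_recall(y_true, y_pred)
    print(f"{name}: precision={prec:.2f}, recall={rec:.2f}")
# cautious: precision=1.00, recall=0.50
# liberal: precision=0.57, recall=1.00
```

The cautious model suits a Precision Mindset; the liberal one suits a Recall Mindset.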

I hope that was helpful :)

👉 Over to you: What analogy did you first use to understand Precision and Recall?

👉 If you liked this post, don’t forget to leave a like ❤️. It helps more people discover this newsletter on Substack and tells me that you appreciate reading these daily insights.


Thanks for reading!


To receive all full articles and support the Daily Dose of Data Science, consider subscribing.
