A Technique to Remember Precision and Recall

A simple and intuitive guide to precision and recall.

Precision and Recall are fairly straightforward metrics, yet many people find them intimidating.

If you are one of them, let me walk you through a technique I call the “mindset technique.”

Precision

Formally, Precision answers the following question:

“What proportion of positive predictions were actually positive?”
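In confusion-matrix terms, this is TP / (TP + FP): the true positives divided by everything the model predicted as positive. Below is a minimal plain-Python sketch, assuming binary labels encoded as 1 (positive) and 0 (negative). The function name `precision` and the toy labels are our own illustration, not taken from any library.

```python
def precision(y_true, y_pred):
    """Fraction of positive predictions that are actually positive: TP / (TP + FP)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)  # false positives
    predicted_positive = tp + fp
    if predicted_positive == 0:
        # No positive predictions at all: precision is undefined; this sketch returns 0.0.
        return 0.0
    return tp / predicted_positive


# Toy labels: 3 positive predictions, 2 of which are correct.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 1, 1, 0, 0, 0]
print(precision(y_true, y_pred))  # 2 / 3
```

In practice, scikit-learn's `sklearn.metrics.precision_score` computes the same quantity for binary labels.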

Let’s understand that from a mindset perspective.

When we are in a Precision Mindset, we don’t care about getting every positive sample classified correctly.

But every positive prediction we make should actually be positive.

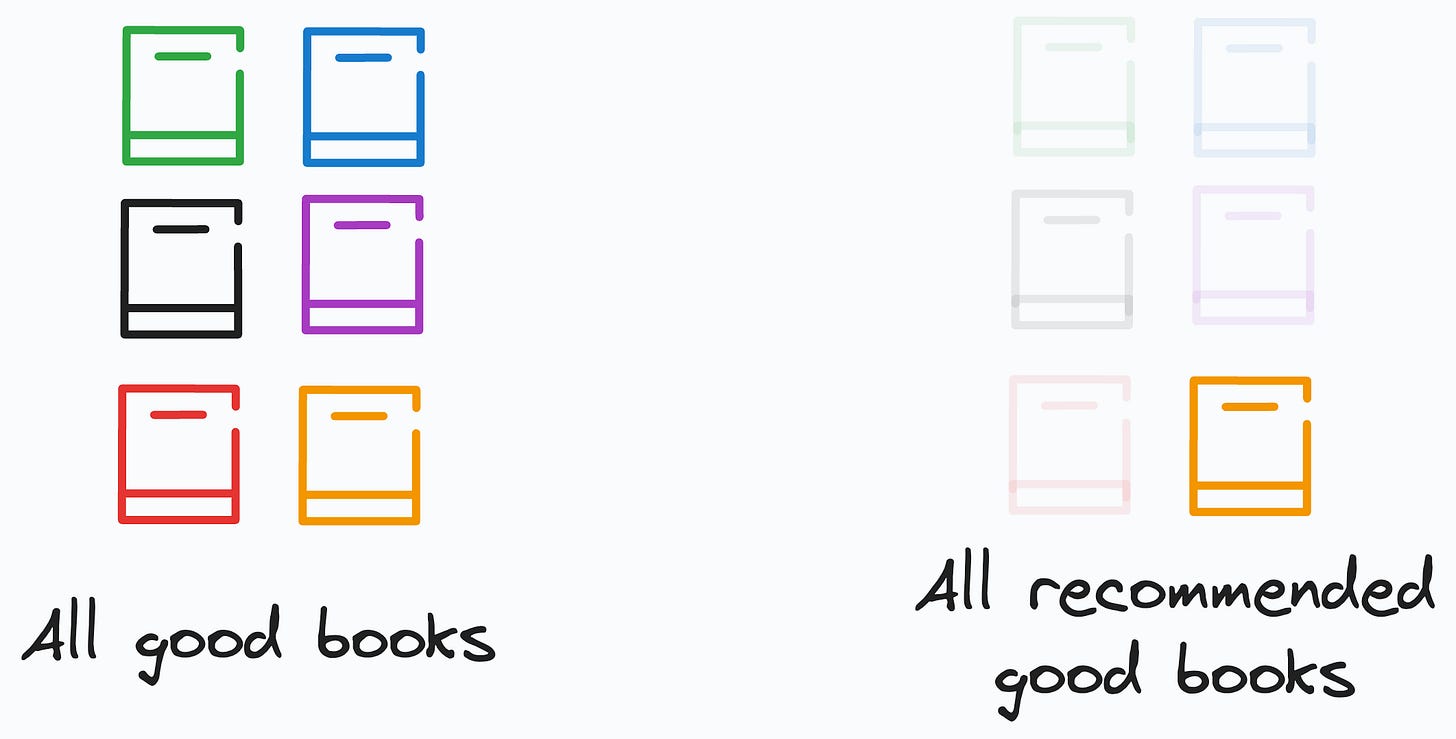

For instance, consider a book recommendation system where a positive prediction means we like the recommended book.

In a Precision Mindset, we are okay if the model does not recommend all good books in the world.

But what it recommends should be good.

So even if this system recommended only one book and we liked it, it would still achieve a Precision of 100%.

This is because what it classified as “Positive” was indeed “Positive.”
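To make the book example concrete, here is a toy sketch with made-up data: ten books, five of which we would like, and a recommender that recommends only one of them. The data is hypothetical and purely illustrative.

```python
# Hypothetical ground truth: 1 = we like the book, 0 = we don't.
liked = [1, 1, 0, 1, 0, 0, 1, 0, 1, 0]
# The recommender recommends only the first book (which we happen to like).
recommended = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

tp = sum(1 for t, p in zip(liked, recommended) if t == 1 and p == 1)
fp = sum(1 for t, p in zip(liked, recommended) if t == 0 and p == 1)
# Liked books that were never recommended (false negatives).
fn = sum(1 for t, p in zip(liked, recommended) if t == 1 and p == 0)

book_precision = tp / (tp + fp)  # 1 / 1
print(f"precision = {book_precision:.0%}, liked books never recommended = {fn}")
```

The four missed books do not lower Precision at all, because Precision only looks at what was recommended.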

To summarize, in a high-precision mindset, all positive predictions should actually be positive.

Recall

Recall is a bit different. It answers the following question:

“What proportion of all actual positives was identified correctly by the model?”
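In confusion-matrix terms, this is TP / (TP + FN): the true positives divided by all samples that are actually positive. A minimal plain-Python sketch, again assuming labels encoded as 1 (positive) and 0 (negative); the function name `recall` and the toy labels are illustrative.

```python
def recall(y_true, y_pred):
    """Fraction of actual positives that the model predicted as positive: TP / (TP + FN)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)  # true positives
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)  # missed positives
    actual_positive = tp + fn
    if actual_positive == 0:
        # No actual positives in the data: recall is undefined; this sketch returns 0.0.
        return 0.0
    return tp / actual_positive


# Toy labels: 4 actual positives, 2 of which the model found.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 1, 1, 0, 0, 0]
print(recall(y_true, y_pred))  # 2 / 4
```

scikit-learn's `sklearn.metrics.recall_score` computes the same quantity for binary labels.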

When we are in a Recall Mindset, we care about getting each and every positive sample correctly classified.

It’s okay if some positive predictions were not actually positive.

However, all positive samples should be classified as positive.

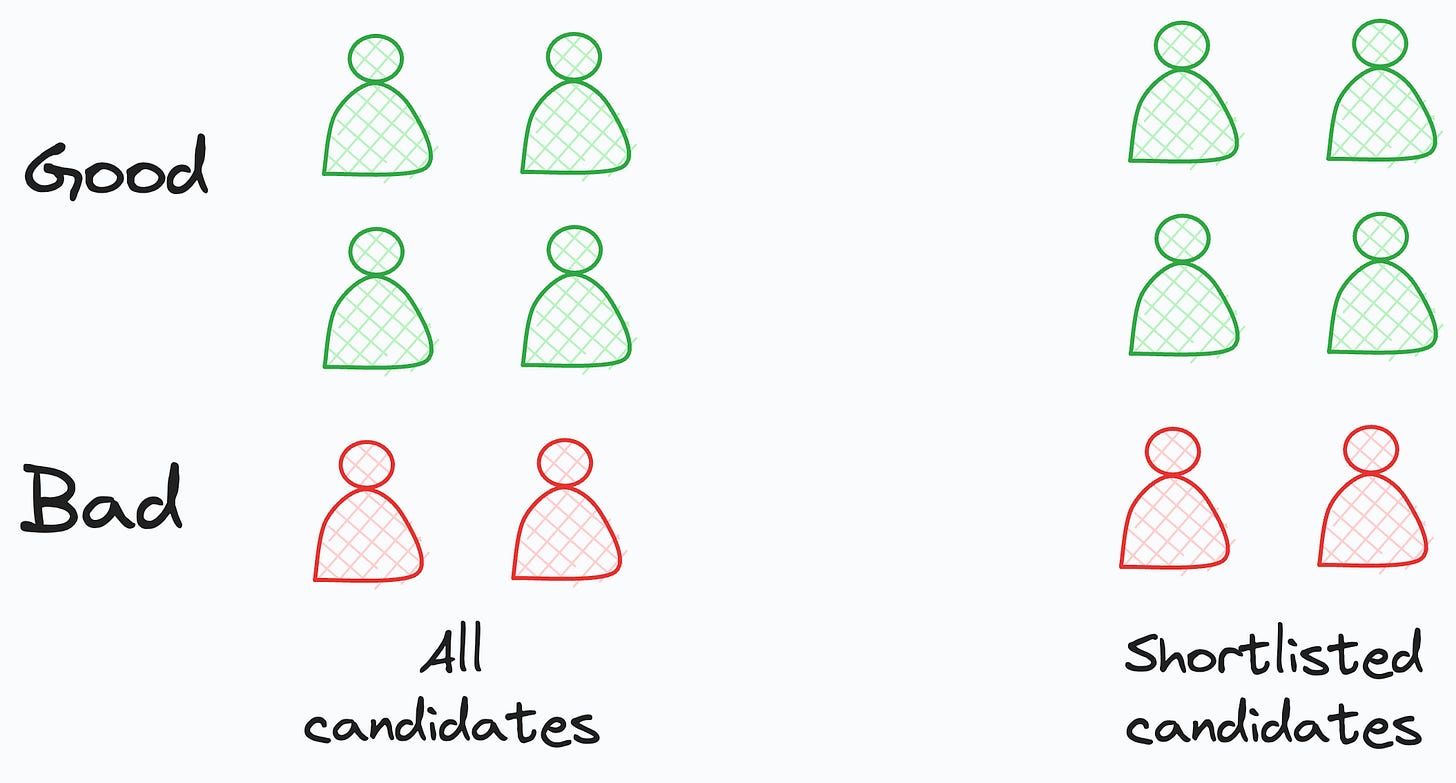

For instance, consider a system that shortlists candidates for interviews based on their resumes. A positive prediction means that the candidate should be invited for an interview.

In a Recall Mindset, we are okay if the model also selects some incompetent candidates.

But it should not miss out on inviting any skilled candidate.

So even if this system says that all candidates (good or bad) are fit for an interview, it gives us a Recall of 100%.

This is because it didn’t miss out on any of the positive samples.
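A toy sketch of the "invite everyone" shortlister, using hypothetical candidate labels, shows why Recall hits 100%: with no candidate rejected, there can be no missed skilled candidates.

```python
# Hypothetical candidates: 1 = skilled, 0 = not skilled.
skilled = [1, 0, 0, 1, 0, 1, 0, 0]
# A shortlister that invites every single candidate.
invited = [1] * len(skilled)

tp = sum(1 for t, p in zip(skilled, invited) if t == 1 and p == 1)
# Skilled candidates who were not invited (false negatives): none here.
fn = sum(1 for t, p in zip(skilled, invited) if t == 1 and p == 0)

interview_recall = tp / (tp + fn)
print(f"recall = {interview_recall:.0%}")
```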

Which metric to choose entirely depends on what’s important to the problem at hand:

Optimize Precision if:

We care about getting ONLY high-quality positive predictions.

We are okay if some quality (or positive) samples are left out.

Optimize Recall if:

We care about getting ALL quality (or positive) samples correct.

We are okay if some non-quality (or negative) samples also come along.

But practically speaking, optimizing for high Recall alone is not that useful.

Even a basic function that always predicts “positive” achieves 100% Recall, because it never misses a positive instance.
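Here is that naive baseline as a quick sketch on a made-up, imbalanced dataset (2 positives out of 20 samples). The helper names `always_positive` and `precision_and_recall` are hypothetical. Recall comes out perfect, while Precision collapses to the fraction of positives in the data.

```python
def always_positive(n):
    """Naive 'classifier' that predicts positive for every one of n samples."""
    return [1] * n


def precision_and_recall(y_true, y_pred):
    """Return (precision, recall) for binary labels encoded as 1/0."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical imbalanced dataset: 2 positives, 18 negatives.
y_true = [1, 1] + [0] * 18
p, r = precision_and_recall(y_true, always_positive(len(y_true)))
print(f"precision = {p:.0%}, recall = {r:.0%}")
```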

However, optimizing for high Precision does require some engineering effort.

I hope that was helpful :)

👉 Over to you: What analogy did you first use to understand Precision and Recall?
