A Visual Guide to AdaBoost

A step-by-step intuitive explanation.

The following visual summarizes how Boosting models work:

As depicted above:

Boosting is an iterative training process.

Each subsequent model focuses more on the samples that the previous model misclassified.

The final prediction is a weighted combination of all predictions.

However, many find it difficult to understand exactly how this model is trained and how instances are reweighed for subsequent models.

AdaBoost is a common Boosting model, so let’s understand how it works.

The core idea behind AdaBoost is to train many weak learners and combine them into a more powerful model. Combining models this way is called ensembling.

In AdaBoost specifically, each weak classifier learns from the previous model’s mistakes, so the classifiers form a powerful model when considered as a whole.

These weak learners are usually decision trees.

Let me make it clearer by implementing AdaBoost using the DecisionTreeClassifier class from sklearn.

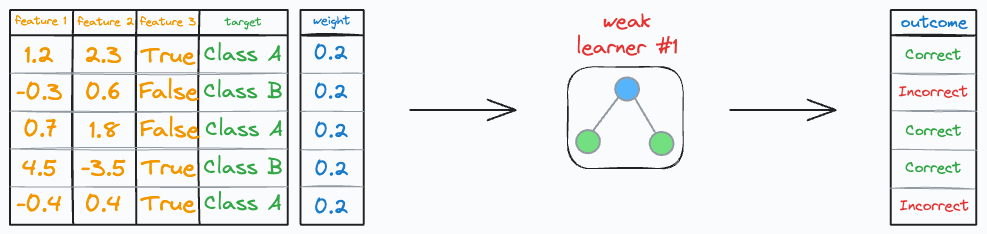

Suppose we have the following classification dataset:

To begin, every row has an equal weight, and it is equal to (1/n), where n is the number of training instances.
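As a minimal sketch, the initial weights can be built with NumPy. The value `n = 10` is a hypothetical dataset size chosen for illustration, not a value taken from the dataset above.

```python
import numpy as np

n = 10  # hypothetical number of training instances (an assumption)
weights = np.full(n, 1 / n)  # every row starts with the same weight, 1/n

print(weights)
print(weights.sum())  # the weights form a distribution over the rows
```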

Step 1: Train a weak learner

In AdaBoost, each decision tree typically has a depth of one. Such trees are called stumps.

Thus, we define DecisionTreeClassifier with a max_depth of 1, and train it on the above dataset.

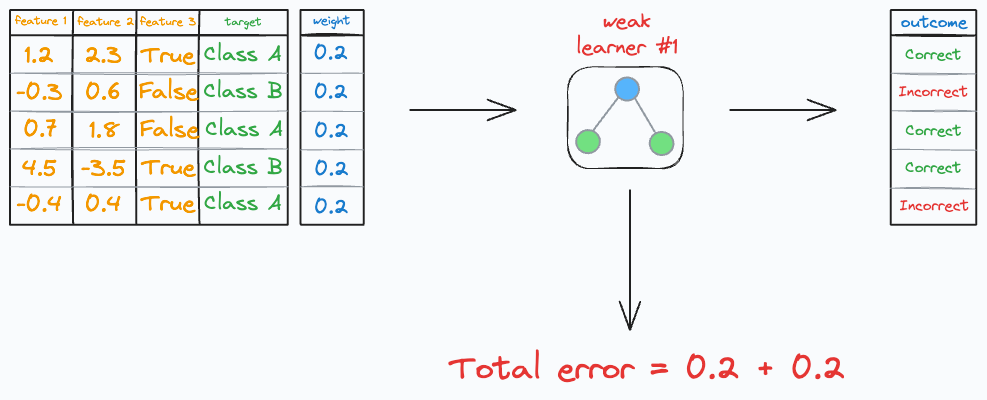
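A minimal sketch of this step follows. The toy arrays `X` and `y` are hypothetical stand-ins for the dataset above, with labels encoded as -1 and +1 (an assumption made so that later steps can combine predictions by sign). `sample_weight` is an existing parameter of `DecisionTreeClassifier.fit`.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier

# Hypothetical toy dataset: one feature, labels in {-1, +1}
X = np.arange(1, 11, dtype=float).reshape(-1, 1)
y = np.array([1, 1, 1, -1, -1, -1, 1, 1, -1, -1])

n = len(y)
weights = np.full(n, 1 / n)  # equal initial weights

# A stump is a decision tree with a single split
stump = DecisionTreeClassifier(max_depth=1, random_state=0)
stump.fit(X, y, sample_weight=weights)

predictions = stump.predict(X)
print(predictions)
```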

Step 2: Calculate the learner’s cost

Of course, there will be some correct and incorrect predictions.

The total cost (or error/loss) of this specific weak learner is the sum of the weights of the incorrect predictions.

In our case, we have two errors, each with weight 1/n, so the total error is 2/n.
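The computation can be sketched in a few lines. The `y` and `predictions` arrays are hypothetical, constructed so that exactly two of ten rows are misclassified.

```python
import numpy as np

# Hypothetical labels and stump predictions (two mistakes out of ten rows)
y = np.array([1, 1, 1, -1, -1, -1, 1, 1, -1, -1])
predictions = np.array([1, 1, 1, -1, -1, -1, -1, -1, -1, -1])

weights = np.full(len(y), 1 / len(y))  # equal initial weights

incorrect = predictions != y           # boolean mask of misclassified rows
error = weights[incorrect].sum()       # sum of the weights of the mistakes

print(error)
```

With n = 10 and equal weights, the two mistakes contribute 0.1 each, so the error is 0.2.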

Now, as discussed above, the idea is to let each weak learner progressively learn from the previous learner’s mistakes.

So, going ahead, we want the subsequent model to focus more on the incorrect predictions produced earlier.

Here’s how we do this:

Step 3: Calculate the learner’s importance

First, we determine the importance of the weak learner.

Quite intuitively, we want the importance to be inversely related to the above error.

If the weak learner has a high error, it must have a lower importance.

If the weak learner has a low error, it must have a higher importance.

One choice is the following function:
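Assuming the standard AdaBoost formulation, the importance is computed as shown here. The symbols are notation introduced for this sketch: $\epsilon$ is the weighted error from step 2 and $\alpha$ is the learner's importance.

```latex
\alpha = \frac{1}{2} \ln\left( \frac{1 - \epsilon}{\epsilon} \right)
```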

This function is defined only for errors strictly between 0 and 1; it is undefined at exactly 0 and 1.

When the error is high (~1), there were almost no correct predictions → This gives a negative importance to the weak learner.

When the error is low (~0), there were almost no incorrect predictions → This gives a positive importance to the weak learner.
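To see this behavior numerically, here is a small sketch that assumes the standard AdaBoost importance, half the log of (1 - error) / error. The helper name `learner_importance` and the clipping guard `eps` are illustrative assumptions, added so the function does not blow up at exactly 0 or 1.

```python
import numpy as np

def learner_importance(error, eps=1e-10):
    """Importance of a weak learner, assuming the standard AdaBoost formula."""
    # Clip the error away from 0 and 1 to avoid log(0) and division by zero
    error = np.clip(error, eps, 1 - eps)
    return 0.5 * np.log((1 - error) / error)

for error in (0.1, 0.2, 0.5, 0.9):
    print(error, learner_importance(error))
```

Under this assumed formula, an error of 0.5 gives an importance of zero, errors below 0.5 give positive values, and errors above 0.5 give negative values.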

If you feel there could be a better function to use here, you are free to use that and call it your own Boosting algorithm.

In a similar fashion, we can create our own Bagging algorithm too, which we discussed here.

Now, we have the importance of the learner.

The importance value is used during model inference to weigh the predictions from the weak learners.
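As a sketch of that weighted vote, assume binary labels encoded as -1 and +1, and $T$ weak learners $h_1, \dots, h_T$ with importances $\alpha_1, \dots, \alpha_T$ (all notation introduced here for illustration). The standard AdaBoost prediction can then be written as:

```latex
H(x) = \operatorname{sign}\left( \sum_{t=1}^{T} \alpha_t \, h_t(x) \right)
```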

So the next step is to…

Step 4: Reweigh the training instances

All the correct predictions are weighed down as follows:

And all the incorrect predictions are weighed up as follows:
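Assuming the standard AdaBoost update, with $w_i$ denoting the weight of row $i$ and $\alpha$ the importance from step 3 (notation introduced for this sketch), the two cases can be written together as:

```latex
w_i \leftarrow
\begin{cases}
w_i \, e^{-\alpha} & \text{if row } i \text{ was classified correctly} \\
w_i \, e^{+\alpha} & \text{if row } i \text{ was misclassified}
\end{cases}
```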

Once done, we normalize the new weights to add up to one.
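Here is a minimal sketch of the reweighing step, assuming the standard exponential update (multiply by e^(-importance) for correct rows and e^(+importance) for incorrect rows). The `y` and `predictions` arrays are hypothetical, with two mistakes out of ten rows.

```python
import numpy as np

# Hypothetical labels and stump predictions (two mistakes out of ten rows)
y = np.array([1, 1, 1, -1, -1, -1, 1, 1, -1, -1])
predictions = np.array([1, 1, 1, -1, -1, -1, -1, -1, -1, -1])
weights = np.full(len(y), 1 / len(y))

incorrect = predictions != y
error = weights[incorrect].sum()
alpha = 0.5 * np.log((1 - error) / error)  # learner importance (assumed formula)

# Weigh incorrect rows up and correct rows down
new_weights = np.where(incorrect,
                       weights * np.exp(alpha),
                       weights * np.exp(-alpha))

# Normalize so the new weights add up to one
new_weights = new_weights / new_weights.sum()

print(new_weights)
```

In this toy case, each misclassified row ends up with weight 0.25 and each correctly classified row with 0.0625, so the two mistakes together carry half of the total weight.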

That’s it!

Step 5: Sample from reweighed dataset

From step 4, we have the reweighed dataset.

We sample instances from this dataset (with replacement) in proportion to the new weights to create a new dataset of the same size.
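One way to sketch this sampling uses NumPy's `Generator.choice` with the `p` argument. The arrays below are hypothetical, and sampling with replacement to the original dataset size is an assumption of this sketch.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical dataset and reweighed distribution (rows 6 and 7 were misclassified)
X = np.arange(1, 11, dtype=float).reshape(-1, 1)
y = np.array([1, 1, 1, -1, -1, -1, 1, 1, -1, -1])
new_weights = np.array([0.0625] * 6 + [0.25] * 2 + [0.0625] * 2)

n = len(y)
# Draw n row indices with replacement, in proportion to the new weights
sampled_idx = rng.choice(n, size=n, replace=True, p=new_weights)

X_new, y_new = X[sampled_idx], y[sampled_idx]
print(sampled_idx)
```

Rows with larger weights are more likely to appear several times in the new dataset, which is how the next stump ends up focusing on earlier mistakes.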

Next, go back to step 1 and train the next weak learner on this new dataset.

Repeat the above process for a predefined maximum number of iterations.

That’s how we build the AdaBoost model.
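Putting the five steps together, here is a rough end-to-end sketch rather than a definitive implementation. It assumes labels encoded as -1 and +1, the standard importance and exponential update formulas, and one particular design choice: the weights are tracked over the original rows, and each round resamples from the original dataset. The function names `fit_adaboost` and `predict_adaboost` and the toy data are hypothetical.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier

def fit_adaboost(X, y, n_rounds=10, seed=0):
    """Train stumps on reweighed resamples; y must hold labels -1 and +1."""
    rng = np.random.default_rng(seed)
    n = len(y)
    weights = np.full(n, 1 / n)              # equal initial weights
    X_round, y_round = X, y                  # first round uses the original data
    stumps, alphas = [], []
    for _ in range(n_rounds):
        # Step 1: train a weak learner (a stump)
        stump = DecisionTreeClassifier(max_depth=1, random_state=0)
        stump.fit(X_round, y_round)
        # Step 2: weighted error on the original rows (clipped away from 0 and 1)
        incorrect = stump.predict(X) != y
        error = np.clip(weights[incorrect].sum(), 1e-10, 1 - 1e-10)
        # Step 3: learner importance
        alpha = 0.5 * np.log((1 - error) / error)
        # Step 4: reweigh the rows and normalize
        weights = weights * np.exp(np.where(incorrect, alpha, -alpha))
        weights = weights / weights.sum()
        # Step 5: sample a new dataset in proportion to the weights
        idx = rng.choice(n, size=n, replace=True, p=weights)
        X_round, y_round = X[idx], y[idx]
        stumps.append(stump)
        alphas.append(alpha)
    return stumps, alphas

def predict_adaboost(stumps, alphas, X):
    """Importance-weighted vote of all stumps."""
    scores = sum(a * s.predict(X) for s, a in zip(stumps, alphas))
    return np.where(scores >= 0, 1, -1)

# Usage on a hypothetical toy dataset
X = np.arange(1, 11, dtype=float).reshape(-1, 1)
y = np.array([1, 1, 1, -1, -1, -1, 1, 1, -1, -1])
stumps, alphas = fit_adaboost(X, y, n_rounds=5)
preds = predict_adaboost(stumps, alphas, X)
print(preds)
```

For real projects, scikit-learn's `AdaBoostClassifier` provides a maintained implementation; the sketch above is only meant to mirror the steps in this post.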

See, it wasn’t that difficult to understand AdaBoost, was it?

All we have to do is consider the errors from the previous model, reweigh the training instances for the next model, and repeat.

I hope that helped!

👉 Over to you: Now that you understand the algorithm, go ahead today and try implementing this as an exercise. Only use the DecisionTreeClassifier class from sklearn and follow the steps discussed here. Let me know if you face any difficulties.
