A Visual Guide to Stochastic, Mini-batch, and Batch Gradient Descent

...with advantages and disadvantages.

Gradient descent is a widely used optimization algorithm for training machine learning models.

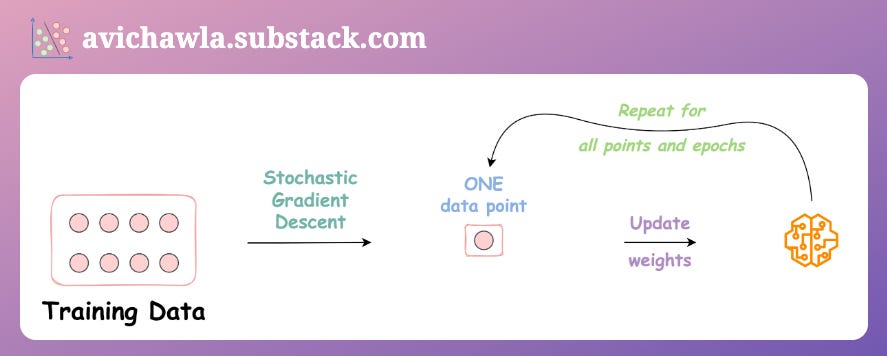

Stochastic, mini-batch, and batch gradient descent are three different variations of gradient descent, and they are distinguished by the number of data points used to update the model weights at each iteration.
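To make that distinction concrete, here is a minimal sketch in NumPy. It is not from the original post: the linear-regression setup, the function name `gradient_descent`, the learning rate, and the synthetic data are all assumptions chosen for illustration. The only thing that changes between the three variants is `batch_size`.

```python
import numpy as np

def gradient_descent(X, y, batch_size, lr=0.05, epochs=50, seed=0):
    """Fit linear-regression weights by minimizing mean squared error.

    batch_size = 1        -> stochastic gradient descent
    1 < batch_size < n    -> mini-batch gradient descent
    batch_size = n        -> batch gradient descent
    (Hypothetical helper, written only for this illustration.)
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    w = np.zeros(d)
    n_updates = 0
    for _ in range(epochs):
        order = rng.permutation(n)  # reshuffle the data every epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            Xb, yb = X[idx], y[idx]
            # Gradient of the MSE loss, averaged over the current batch
            grad = 2.0 * Xb.T @ (Xb @ w - yb) / len(idx)
            w -= lr * grad
            n_updates += 1
    return w, n_updates

# Synthetic data (an assumption for the demo): y = X @ true_w + small noise
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 3))
true_w = np.array([1.5, -2.0, 0.5])
y = X @ true_w + 0.01 * rng.normal(size=200)

w_sgd, u_sgd = gradient_descent(X, y, batch_size=1)
w_mini, u_mini = gradient_descent(X, y, batch_size=32)
w_batch, u_batch = gradient_descent(X, y, batch_size=len(X))

print("updates:", u_sgd, u_mini, u_batch)
```

With 200 data points and 50 epochs, the stochastic variant performs one update per data point, the mini-batch variant one per batch of up to 32 points, and the batch variant one per epoch.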

🔷 Stochastic gradient descent: Update network weights using one data point at a time.

Advantages:

Easier to fit in memory.

Can converge faster on large datasets because the weights are updated after every data point, and the noise in those updates can help the optimizer escape local minima.

Disadvantages:

Noisy steps can slow convergence near a minimum and often require more hyperparameter tuning (for example, of the learning rate).

Computationally expensive per epoch, since the weights are updated once for every data point.

Loses the advantage of vectorized operations.
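The vectorization point can be illustrated with a small sketch. The data, the MSE loss, and the helper names `grad_loop` and `grad_vectorized` are assumptions made for this example. Both functions compute the same average gradient, but the first handles one data point per step in a Python loop, while the second uses a single matrix expression.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 5))
y = rng.normal(size=1000)
w = rng.normal(size=5)

def grad_loop(X, y, w):
    """Average MSE gradient, accumulated one data point at a time."""
    total = np.zeros_like(w)
    for x_i, y_i in zip(X, y):
        total += 2.0 * (x_i @ w - y_i) * x_i
    return total / len(X)

def grad_vectorized(X, y, w):
    """The same gradient, computed with one matrix expression."""
    return 2.0 * X.T @ (X @ w - y) / len(X)

g_loop = grad_loop(X, y, w)
g_vec = grad_vectorized(X, y, w)
print(np.allclose(g_loop, g_vec))
```

Processing one point at a time, as stochastic gradient descent does, cannot hand a whole batch to optimized array routines, which is why it forgoes the vectorization benefit.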

🔷 Mini-batch gradient descent: Update network weights using a few data points at a time.

Advantages:

More computationally efficient than stochastic gradient descent due to vectorization benefits, while needing far less memory per update than batch gradient descent.

Less noisy updates than stochastic gradient descent.

Disadvantages:

Requires tuning of batch size.

May not converge to a good minimum if the batch size is not well-tuned.
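The "less noisy updates" claim can be checked numerically. The sketch below is an illustration under assumed conditions (synthetic regression data, MSE loss, and a hypothetical helper `gradient_noise`). It measures how far a gradient computed on a random batch lands from the full-dataset gradient, on average, for three batch sizes.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(512, 4))
y = X @ np.array([1.0, -1.0, 2.0, 0.5]) + rng.normal(size=512)
w = np.zeros(4)

# Reference: the exact gradient over the entire dataset
full_grad = 2.0 * X.T @ (X @ w - y) / len(X)

def gradient_noise(batch_size, trials=500):
    """Mean distance between a random-batch gradient and the full gradient."""
    dists = []
    for _ in range(trials):
        idx = rng.choice(len(X), size=batch_size, replace=False)
        g = 2.0 * X[idx].T @ (X[idx] @ w - y[idx]) / batch_size
        dists.append(np.linalg.norm(g - full_grad))
    return float(np.mean(dists))

# 1 = stochastic, 32 = mini-batch, 512 = full batch
noise = {b: gradient_noise(b) for b in (1, 32, 512)}
print(noise)
```

The deviation shrinks as the batch grows and vanishes (up to floating-point error) when the batch is the whole dataset, which matches the ordering of noisiness described for the three variants.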

🔷 Batch gradient descent: Update network weights using the entire dataset at once.

Advantages:

Takes less noisy steps towards a minimum, since every update uses the gradient over the full dataset.

Can benefit from vectorization.

Produces a more stable convergence.

Disadvantages:

Runs into memory constraints on large datasets, since the entire dataset must be processed for every update.

Computationally slow per update, as gradients must be computed over every data point before the weights are updated even once.

Over to you: What are some other advantages/disadvantages you can think of? Let me know :)



Hello Avi,

Thanks, this helped. A small suggestion, though: IMO, a simple animation over the iteration loops in the mathematical equations, comparing GD/BSG/SGD, could have helped beginners visualize this better.