An Overlooked Limitation of Traditional kNNs

...and here's how to supercharge them.

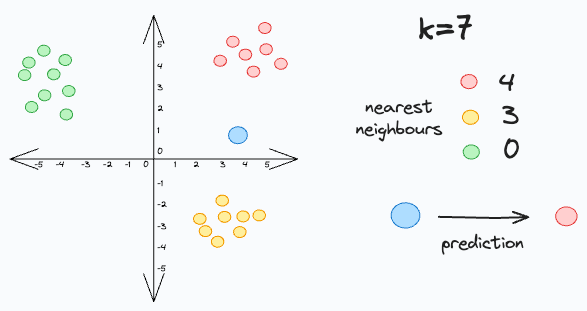

kNNs, by default, classify a new data point as follows:

Find its “k” nearest neighbors and count how many of them belong to each class.

Assign the data point to the class with the highest count.
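The two steps above can be sketched in a few lines of plain Python. This is only a minimal illustration, not how any particular library implements it: the function name, the Euclidean distance, and the toy data (five "blue" points, two "red" points) are all assumptions made for the example.

```python
from collections import Counter
import math

def knn_predict_uniform(train_points, train_labels, query, k=5):
    """Classify `query` by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every training point (assumed metric).
    distances = [math.dist(p, query) for p in train_points]
    # Indices of the k closest training points, nearest first.
    nearest = sorted(range(len(train_points)), key=lambda i: distances[i])[:k]
    # Step 1: count how many of those neighbors belong to each class.
    votes = Counter(train_labels[i] for i in nearest)
    # Step 2: pick the class with the highest count
    # (on a tie, the class encountered first among the neighbors wins).
    return votes.most_common(1)[0][0]

# Hypothetical toy data: five "blue" points and only two "red" points.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (0.5, 0.5), (3, 3), (3.2, 3.1)]
labels = ["blue"] * 5 + ["red"] * 2

# The query sits right next to both red points.
uniform_prediction = knn_predict_uniform(points, labels, query=(3.1, 3.0), k=5)
print(uniform_prediction)
```

In this toy setup the query is practically on top of the two red points, yet the three far-away blue neighbors outvote them.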

As a result, how close each neighbor is to the new data point is entirely ignored during classification: every one of the “k” neighbors gets an equal vote.

Yet, this may be extremely important, especially when you have a class with few samples.

For instance, as shown below, with k=5, a new data point can NEVER be assigned to the red class.

While it is easy to tweak the hyperparameter “k” by eye in a two-dimensional demo like this one, this approach is infeasible for high-dimensional datasets, which cannot be visualized this way.

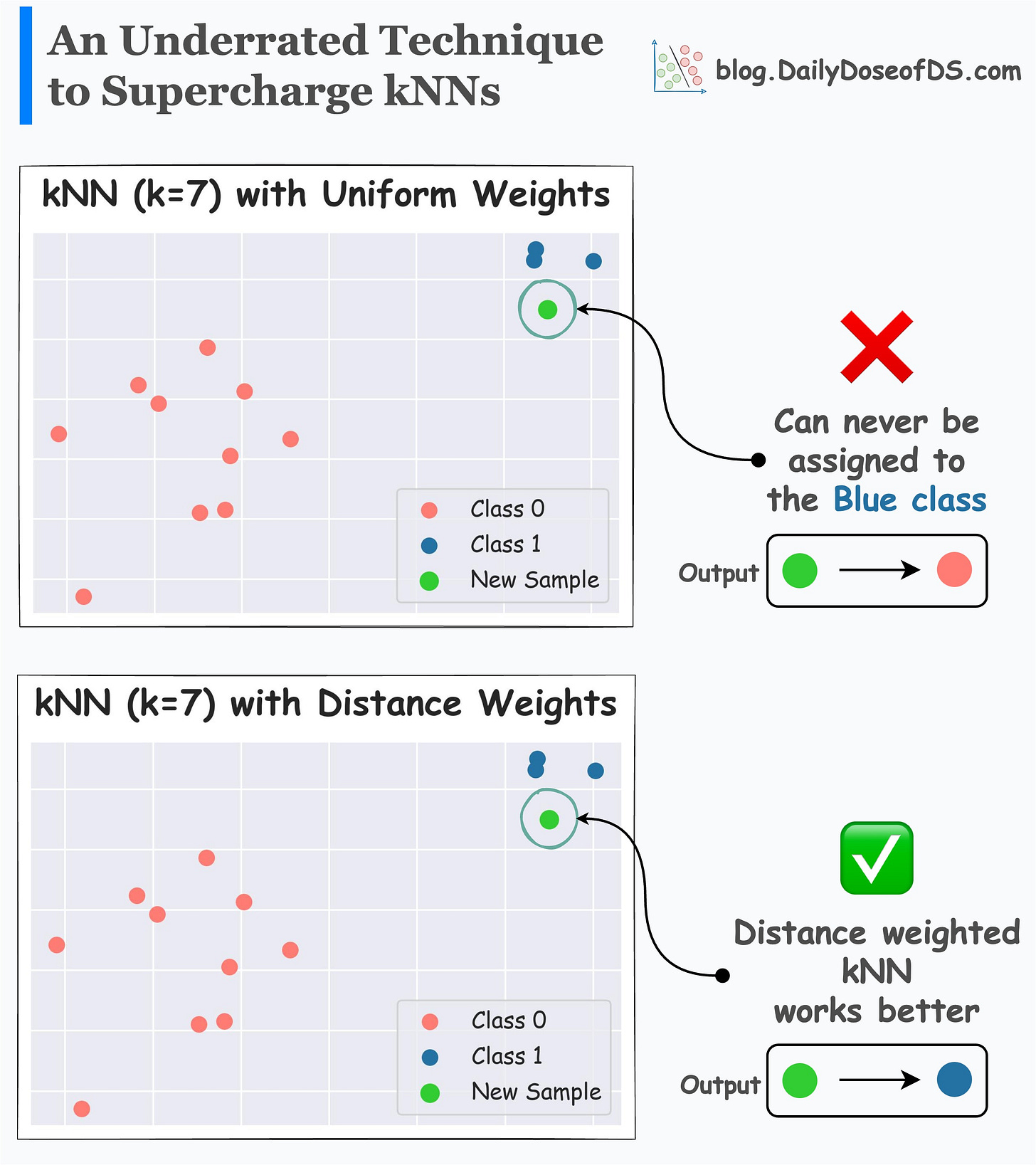

Distance-weighted kNNs are a more robust alternative to traditional kNNs.

As the name suggests, they take the distance to each of the “k” nearest neighbors into account during prediction.

Thus, the closer a specific neighbor is, the greater its impact on the final prediction.
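Here is a minimal sketch of that idea in plain Python. The post does not specify a weighting function, so this example assumes inverse-distance weights (1 / distance), which is one common choice. The function name, the `eps` guard against division by zero, and the toy data are hypothetical.

```python
from collections import defaultdict
import math

def knn_predict_weighted(train_points, train_labels, query, k=5, eps=1e-12):
    """Classify `query` by an inverse-distance-weighted vote of its k nearest points."""
    # Euclidean distance from the query to every training point (assumed metric).
    distances = [math.dist(p, query) for p in train_points]
    # Indices of the k closest training points, nearest first.
    nearest = sorted(range(len(train_points)), key=lambda i: distances[i])[:k]
    # Each neighbor adds 1 / distance to its class score, so closer neighbors count more.
    scores = defaultdict(float)
    for i in nearest:
        scores[train_labels[i]] += 1.0 / (distances[i] + eps)
    # The class with the largest total weight wins.
    return max(scores, key=scores.get)

# Hypothetical toy data: five "blue" points and only two "red" points.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (0.5, 0.5), (3, 3), (3.2, 3.1)]
labels = ["blue"] * 5 + ["red"] * 2

# The query sits right next to both red points.
weighted_prediction = knn_predict_weighted(points, labels, query=(3.1, 3.0), k=5)
print(weighted_prediction)
```

With k=5, three of the five neighbors are still blue, but the two nearby red points carry far more weight than the three distant blue ones, so the red class can now win.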

Its effectiveness is evident from the image below.

Traditional kNN (with k=7) can never predict the blue class.

Distance-weighted kNN is more robust in its prediction.

In my experience, a distance-weighted kNN typically works much better than a traditional kNN. And this makes intuitive sense as well.

Yet, this may go unnoticed because sklearn's kNN implementation (KNeighborsClassifier) uses “uniform” weighting by default. Distance weighting has to be switched on explicitly by setting weights="distance".
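For reference, here is a small sketch of how the two weighting modes can be compared in sklearn. `KNeighborsClassifier` and its `n_neighbors` and `weights` parameters are part of sklearn. The toy data (five "blue" points, two "red" points) and the variable names are made up for illustration.

```python
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical toy data: five "blue" points and only two "red" points.
X = [[0, 0], [0, 1], [1, 0], [1, 1], [0.5, 0.5], [3, 3], [3.2, 3.1]]
y = ["blue"] * 5 + ["red"] * 2

# Default behaviour: weights="uniform", every neighbor gets an equal vote.
uniform_knn = KNeighborsClassifier(n_neighbors=5)
uniform_knn.fit(X, y)

# Opt-in behaviour: neighbors are weighted by the inverse of their distance.
weighted_knn = KNeighborsClassifier(n_neighbors=5, weights="distance")
weighted_knn.fit(X, y)

# A query that sits right next to both red points.
query = [[3.1, 3.0]]
uniform_pred = uniform_knn.predict(query)[0]
weighted_pred = weighted_knn.predict(query)[0]
print("uniform: ", uniform_pred)
print("distance:", weighted_pred)
```

On data like this, the only change needed to let the minority class win near its own samples is the `weights` argument.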

👉 Over to you: What are some other ways to make kNNs more robust when a class has few samples?


Thanks for reading!
