ARQ: A New Structured Reasoning Approach for LLMs

...that actually prevents hallucinations (explained visually).

ARQ: Structured Reasoning Approach for LLMs

Researchers have open-sourced a new reasoning approach that actually prevents hallucinations in LLMs.

It beats popular techniques like Chain-of-Thought and has a SOTA success rate of 90.2%.

It’s implemented in the Parlant open-source framework to build instruction-following Agents (GitHub repo).

Here’s the core problem with current techniques that this new approach solves.

We have enough research to conclude that LLMs often struggle to assess what truly matters in a particular stage of a long, multi-turn conversation.

For instance, when you give Agents a 2,000-word system prompt filled with policies, tone rules, and behavioral dos and don’ts, you expect them to follow it word by word.

But here’s what actually happens:

They start strong initially.

Soon, they drift and start hallucinating.

Shortly after, they forget what was said five turns ago.

And finally, the LLM that was supposed to “never promise a refund” is happily offering one.

This means they can easily ignore crucial rules (stated initially) halfway through the process.

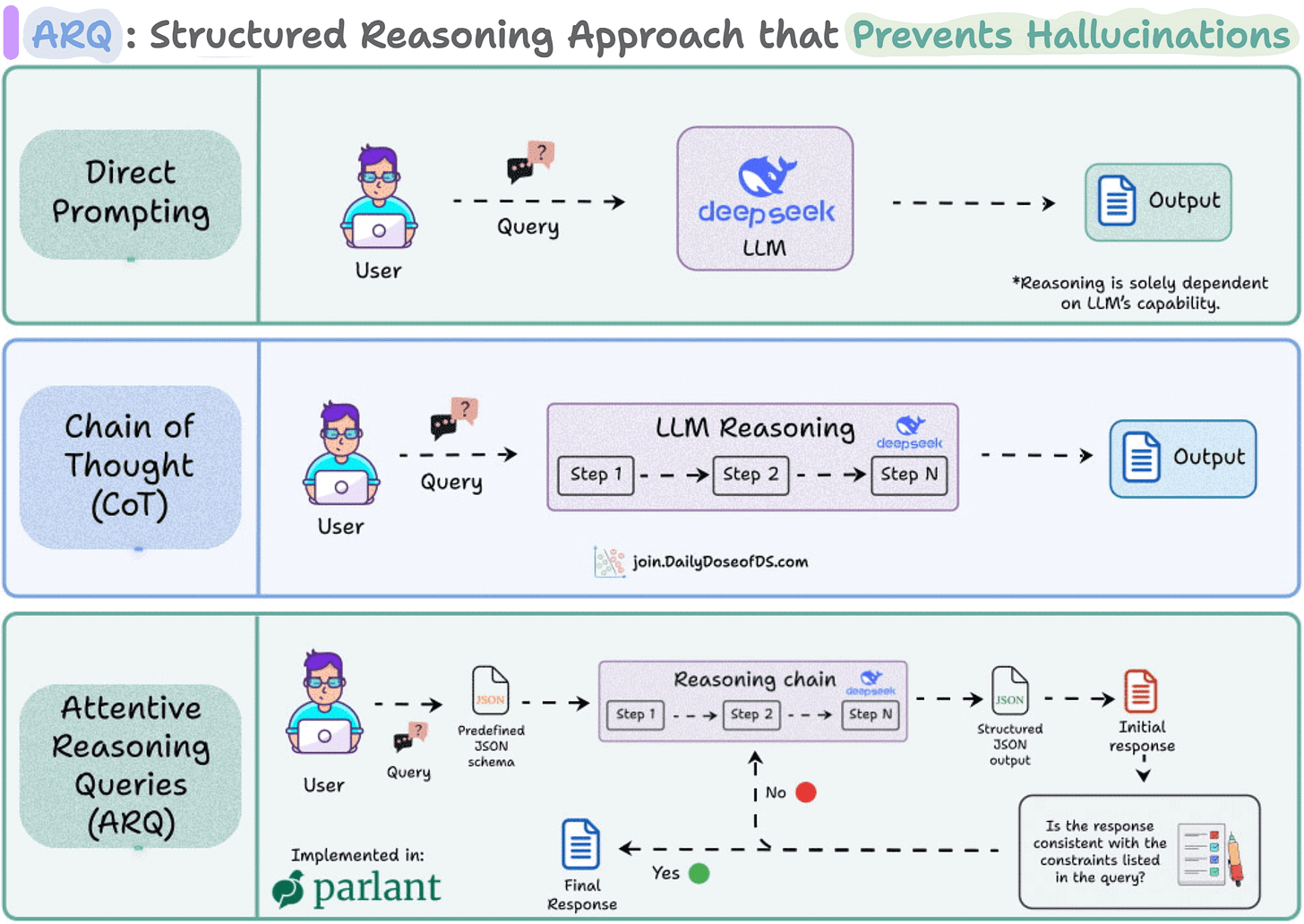

We expect techniques like Chain-of-Thought will help.

But even with methods like CoT, reasoning remains free-form, i.e., the model “thinks aloud” but it has limited domain-specific control.

That’s the exact problem the new technique, called Attentive Reasoning Queries (ARQs), solves.

Instead of letting LLMs reason freely, ARQs guide them through explicit, domain-specific questions.

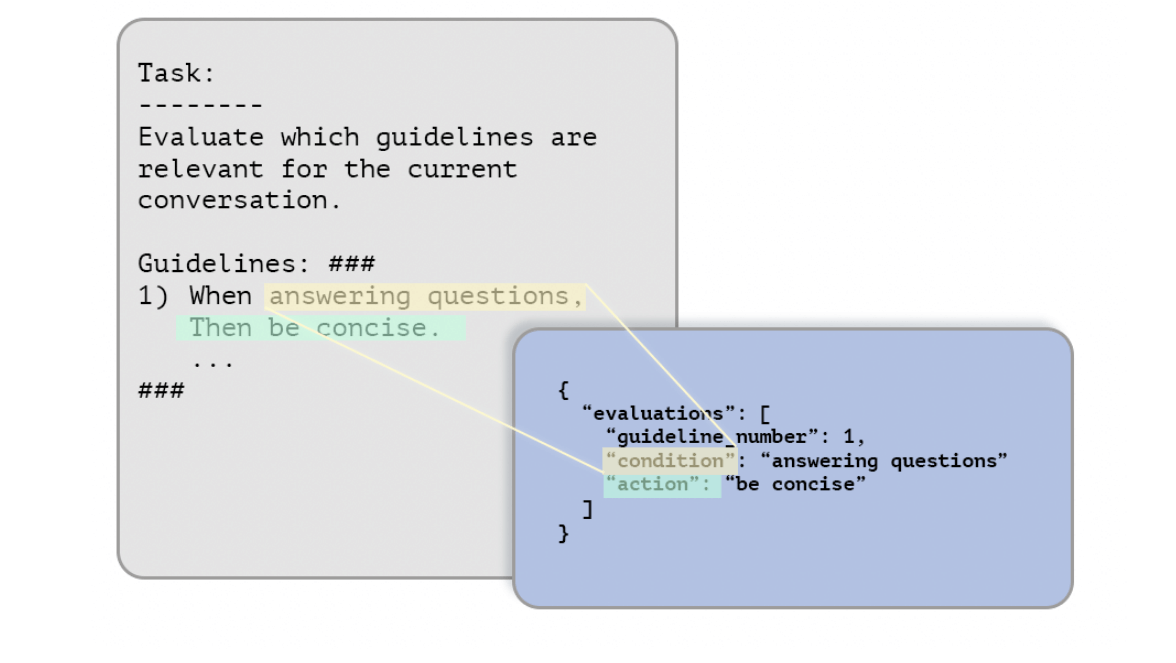

Essentially, each reasoning step is encoded as a targeted query inside a JSON schema.

For example, before making a recommendation or deciding on a tool call, the LLM is prompted to fill structured keys like:

{

“current_context”: “Customer asking about refund eligibility”,

“active_guideline”: “Always verify order before issuing refund”,

“action_taken_before”: false,

“requires_tool”: true,

“next_step”: “Run check_order_status()”

}This type of query does two things:

Reinstate critical instructions by keeping the LLM aligned mid-conversation.

Facilitate intermediate reasoning, so that the decisions are auditable and verifiable.

By the time the LLM generates the final response, it’s already walked through a sequence of *controlled* reasoning steps, which did not involve any free text exploration (unlike techniques like CoT or ToT).

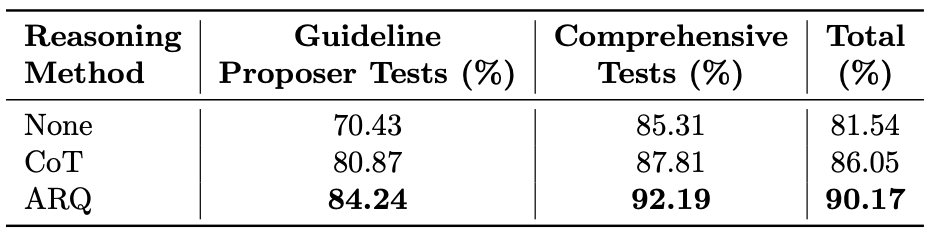

Here’s the success rate across 87 test scenarios:

ARQ → 90.2%

CoT reasoning → 86.1%

Direct response generation → 81.5%

This approach is actually implemented in Parlant, a recently trending open-source framework to build instruction-following Agents (14k stars).

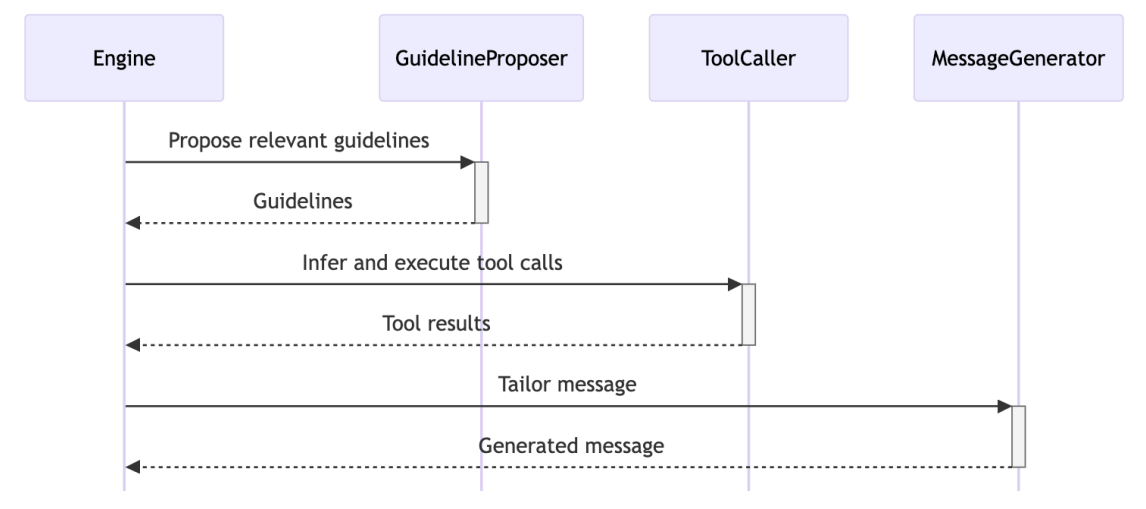

ARQs are integrated into three key modules:

Guideline proposer to decide which behavioral rules apply.

Tool caller to determine what external functions to use.

Message generator, when it produces the final customer-facing reply.

You can see the full implementation and try it yourself.

But the core insight applies regardless of what tools you use:

When you make reasoning explicit, measurable, and domain-aware, LLMs stop improvising and start reasoning with intention. Free-form thinking sounds powerful, but in high-stakes or multi-turn scenarios, structure always wins.

You can find the Parlant GitHub repo here →

Thanks for reading!

While the introduction of Attentive Reasoning Queries (ARQs) and the associated Parlant framework represent a meaningful improvement in structuring LLM reasoning, the claim that this “actually prevents hallucinations” overlooks the deeper, more fundamental challenges of large language models. The empirical results (90.2% success rate across 87 test scenarios) are promising, but they remain within a narrowly defined domain (customer-service conversational agents) using a single underlying model. Such results do not generalise to the full breadth of reasoning tasks or to the general world of open-ended language generation and knowledge retrieval. Moreover, the metrics focus on “success” in constrained scenarios rather than offering a robust demonstration of hallucination elimination across diverse and adversarial contexts.

More importantly, the paper does not address the underlying brittleness of neural nets trained via back-propagation on large corpora — the probabilistic nature of their knowledge, the lack of explicit symbolic reasoning, the difficulty of generalising outside the training distribution, and the absence of guaranteed truth-grounding. While ARQs impose structure and help mitigate certain failure modes (instruction drift, forgetting constraints, tool-use mis-selection), they do not change the fundamentally statistical, pattern-matching architecture of the model itself. In other words, ARQs are a valuable engineering layer on top of an imperfect substrate, but they do not erase the need for critical evaluation of generated outputs or replace the necessity of external verification or human oversight.