Boost Sklearn Model Training and Inference by Doing (Almost) Nothing

...along with a lesser-known advice on vectorized operations.

The best thing about sklearn is that it provides a standard and elegant API across each of its ML model implementations:

Train the model using

model.fit().Predict the output using

model.predict().Compute the accuracy using

model.score().and more.

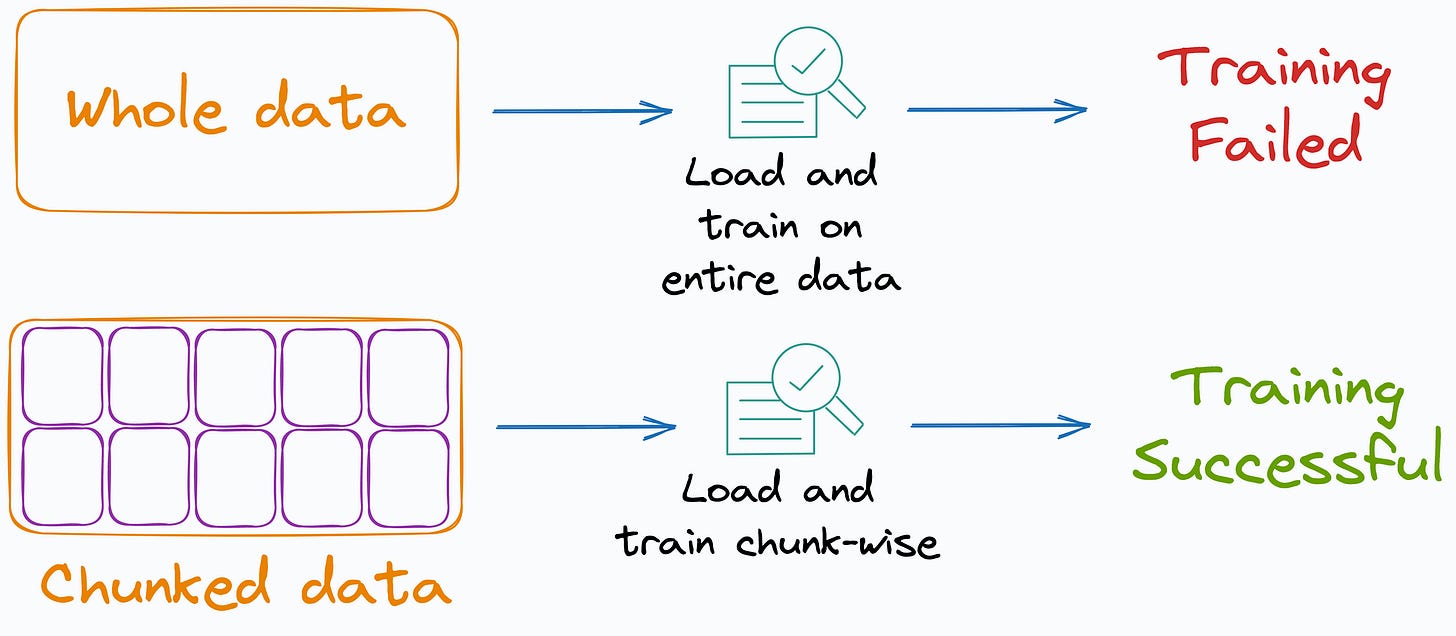

However, the problem with training a model this way is that the entire dataset must be available in memory to train the model.

But what if the dataset itself is large enough to load in memory?

These are called out-of-memory datasets, and training on such datasets can be:

time-consuming, or,

impossible due to memory constraints.

One thing that I don’t find many sklearn users utilizing in such situations is its incremental learning API.

As the name suggests, it lets the model learn incrementally from a mini-batch of instances.

This prevents limited memory constraints as only a few instances are loaded in memory at once.

What’s more, by loading and training on a few instances at a time, we can speed up the training of sklearn models.

Why?

Usually, when we use the model.fit(X, y) method to train a model in sklearn, the training process is vectorized but on the entire dataset.

While vectorization provides magical run-time improvements when we have a bunch of data, the performance often degrades after a certain point.

Thus, by loading fewer training instances at a time into memory and applying vectorization, we can get a better training run-time.

Let’s test it.

Consider we have a classification dataset occupying ~4 GBs of local storage space:

20 Million training instances

5 features

2 classes

We train a SGDClassifier model using sklearn on the entire dataset as follows:

The above training takes about 251 seconds.

Next, let’s train the same model using the partial_fit() API of sklearn. This is implemented below:

We load data from the CSV file

large_dataset.csvin chunks.After loading a specific chunk, we will invoke the

partial_fit()API on theSGDClassifiermodel.

The training time is reduced by ~8 times, which is massive.

This validates what we discussed earlier:

While vectorization provides magical run-time improvements when we have a bunch of data, it is observed that the performance may degrade after a certain point.

Thus, by loading fewer training instances at a time into memory and applying vectorization, we can get a better training run-time.

Here, we must also compare the model coefficients and prediction accuracy of the two models.

The following visual depicts the comparison between model_full and model_chunk:

Both models have similar coefficients and similar performance.

Here, it is also worth noting that not all sklearn estimators implement the partial_fit() API.

Here’s the list of models that do:

Of course, the list is short. Yet, it is surely worth exploring to see if you can benefit from it.

Having said that, once we have trained our sklearn model (either on a small dataset or large), we may want to deploy it.

However, it is important to note that sklearn models are backed by NumPy computations, which can only run on a single CPU core.

Thus, in a deployment scenario, this can lead to suboptimal run-time performance.

Nonetheless, it is possible to compile many sklearn models to tensor operations, which can be loaded on a GPU to gain immense speedups.

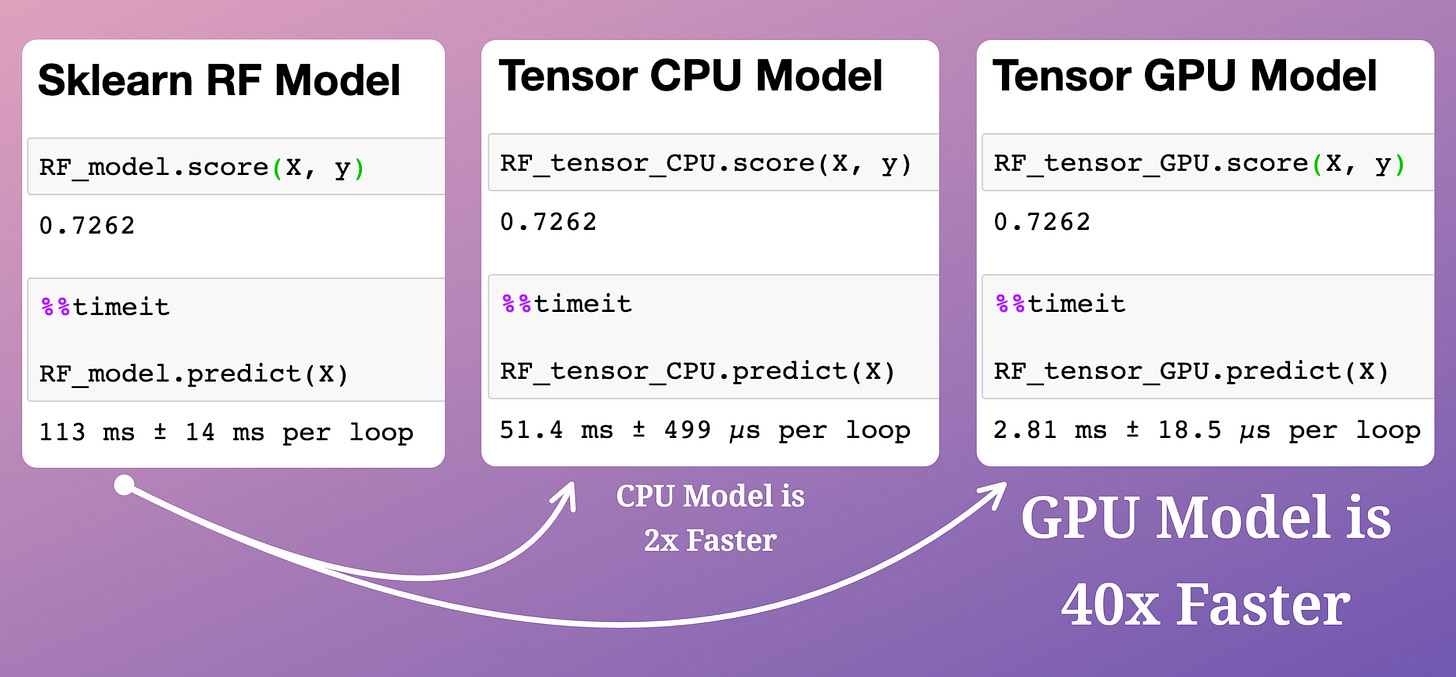

This is evident from the image below:

The compiled model runs:

Over twice as fast on a CPU.

~40 times faster on a GPU, which is huge.

All models have the same accuracy — indicating no loss of information during compilation.

If you want to learn more about this critical model compilation technique, we discussed it in detail here: Sklearn Models are Not Deployment Friendly! Supercharge Them With Tensor Computations.

👉 Over to you: What are some other ways to boost sklearn models?

👉 If you liked this post, don’t forget to leave a like ❤️. It helps more people discover this newsletter on Substack and tells me that you appreciate reading these daily insights.

The button is located towards the bottom of this email.

Thanks for reading!

Latest full articles

If you’re not a full subscriber, here’s what you missed last month:

Federated Learning: A Critical Step Towards Privacy-Preserving Machine Learning

You Cannot Build Large Data Projects Until You Learn Data Version Control!

Why Bagging is So Ridiculously Effective At Variance Reduction?

Sklearn Models are Not Deployment Friendly! Supercharge Them With Tensor Computations.

Deploy, Version Control, and Manage ML Models Right From Your Jupyter Notebook with Modelbit

Gaussian Mixture Models (GMMs): The Flexible Twin of KMeans.

To receive all full articles and support the Daily Dose of Data Science, consider subscribing:

👉 Tell the world what makes this newsletter special for you by leaving a review here :)

👉 If you love reading this newsletter, feel free to share it with friends!

Very well drafted and edited!!! Learnt something new today surely going to use it.

Great post! Small changes leading to huge impacts!!