Build a 3Blue1Brown Video Generator using Strands Agents from AWS

Agent building with no system prompts!

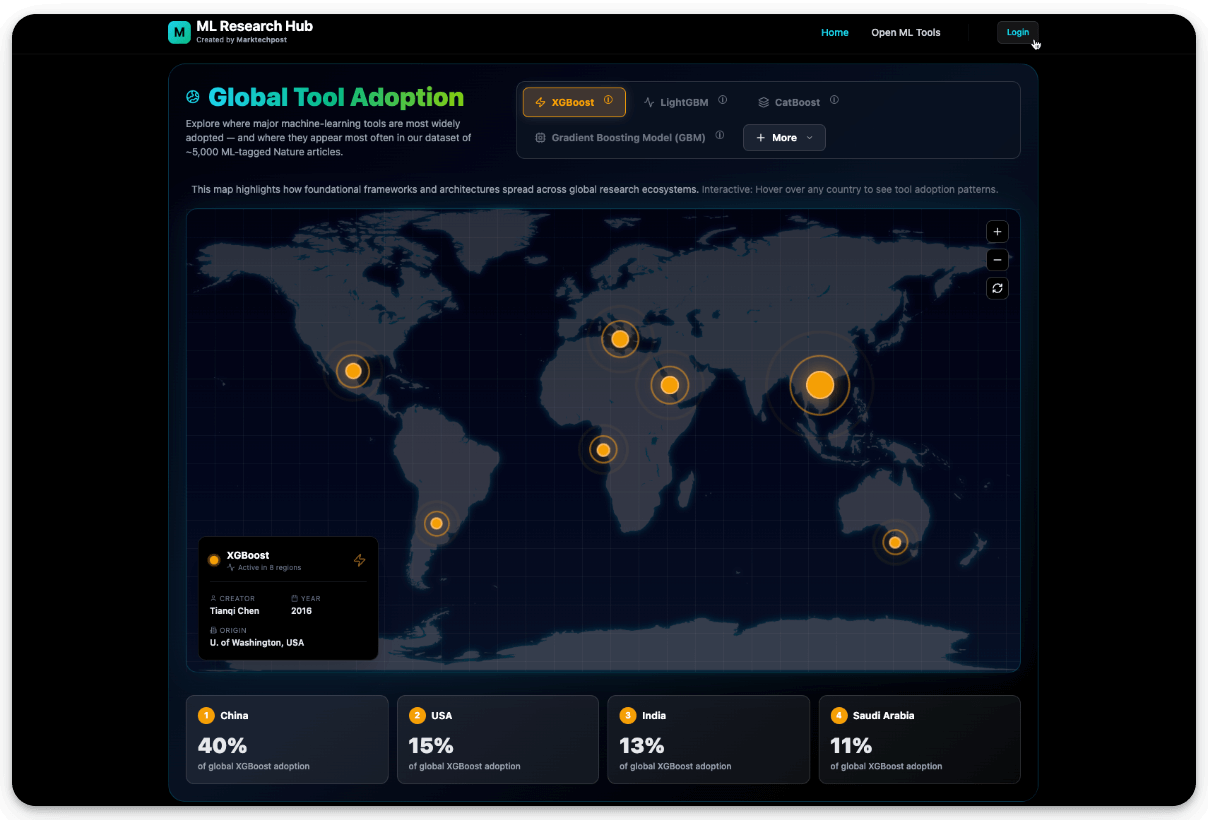

What ML tools are researchers actually using?

Marktechpost released an interactive landscape analyzing 5,000+ research papers from Nature journals in 2025. It reveals which ML tools research scientists are actually using across 125 countries.

Some findings:

The tools powering real science aren’t what you’d expect. While everyone’s talking about GenAI, classical ML (Random Forest, SVMs, Scikit-learn) accounts for 47% of all use cases. Adding ensemble methods (XGBoost, LightGBM, CatBoost) takes this to 77%.

There’s a geographic split. 90% of ML tools originate from the US, but China accounts for 43% of ML-enabled research papers. India has emerged as the third-largest contributor, applying ML to agriculture, climate modeling, and medical diagnostics.

XGBoost, Transformers, ResNet, U-Net, and YOLO emerge among the most-used frameworks, solving real problems in imaging, genomics, and environmental science.

The interactive dashboard breaks down tool adoption by country, field, and research type, and you can use it to explore what’s actually working in production research environments.

Build a 3Blue1Brown Video Generator using Strands Agents from AWS

The challenge with most agent frameworks today is that they still force devs to hardcode workflows, decision trees, and failure paths.

That works until the agent sees something you did not anticipate.

Instead of over-engineering orchestration, newer agent systems lean into the reasoning capabilities of modern models and let them drive the workflow.

You define the goal, provide tools, and allow the agent to decide how to get there.

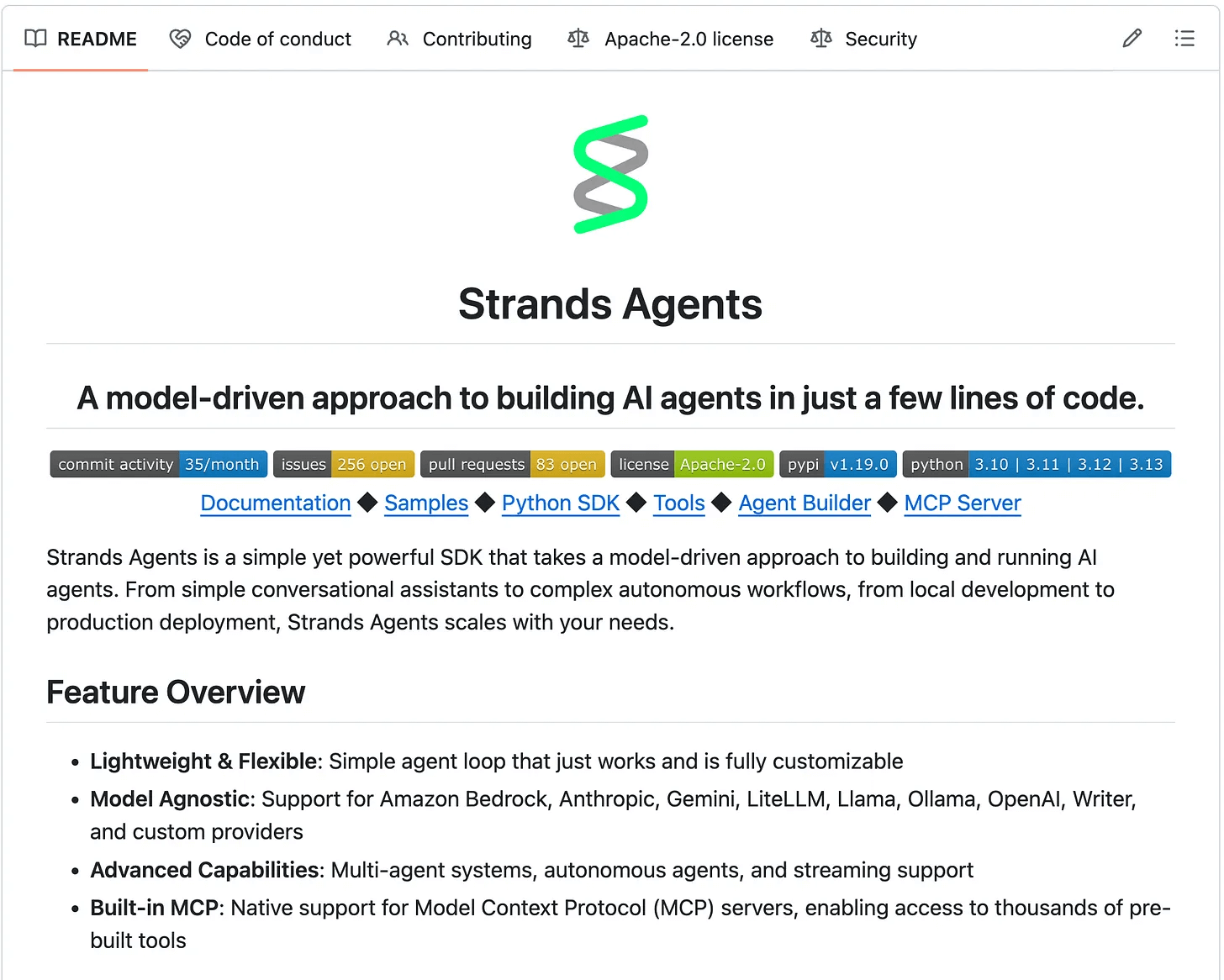

Strands Agents from AWS is a good example of this shift.

It is not about adding more rules or abstractions, but about letting the model plan, act, and recover dynamically.

Today, let’s do a hands-on walkthrough on this to learn how you can build Agents with a model-driven agent loop.

Everything will run 100% locally!

Let’s begin!

Implementation

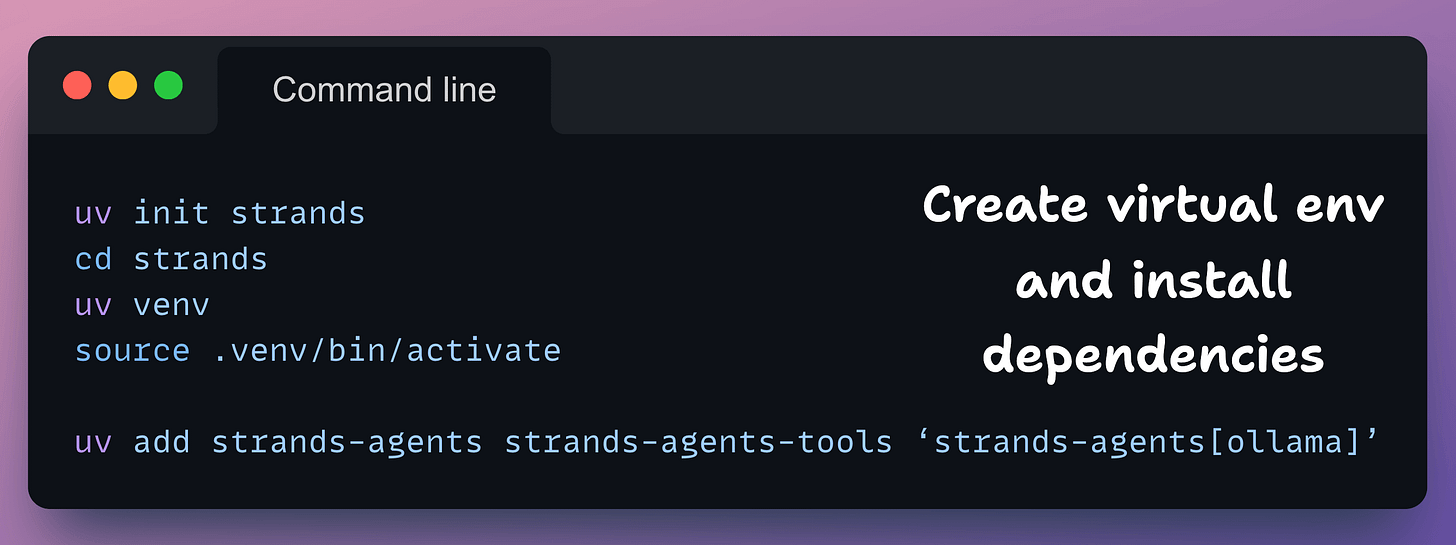

Start by setting up a uv environment and installing the required frameworks:

Make sure you have Ollama installed and its server is running locally.
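If you want to confirm the server is reachable before wiring up an Agent, a quick check like the one below can help. This is a minimal sketch that assumes Ollama is listening on its default address, `http://localhost:11434`; the `ollama_is_running` helper is a hypothetical name used for illustration.

```python
import urllib.error
import urllib.request


def ollama_is_running(host: str = "http://localhost:11434", timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers with status 200 at `host`."""
    try:
        with urllib.request.urlopen(host, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, etc.
        return False
```

Calling `ollama_is_running()` before creating the Agent lets you fail early with a clear message instead of hitting a connection error in the middle of a run.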

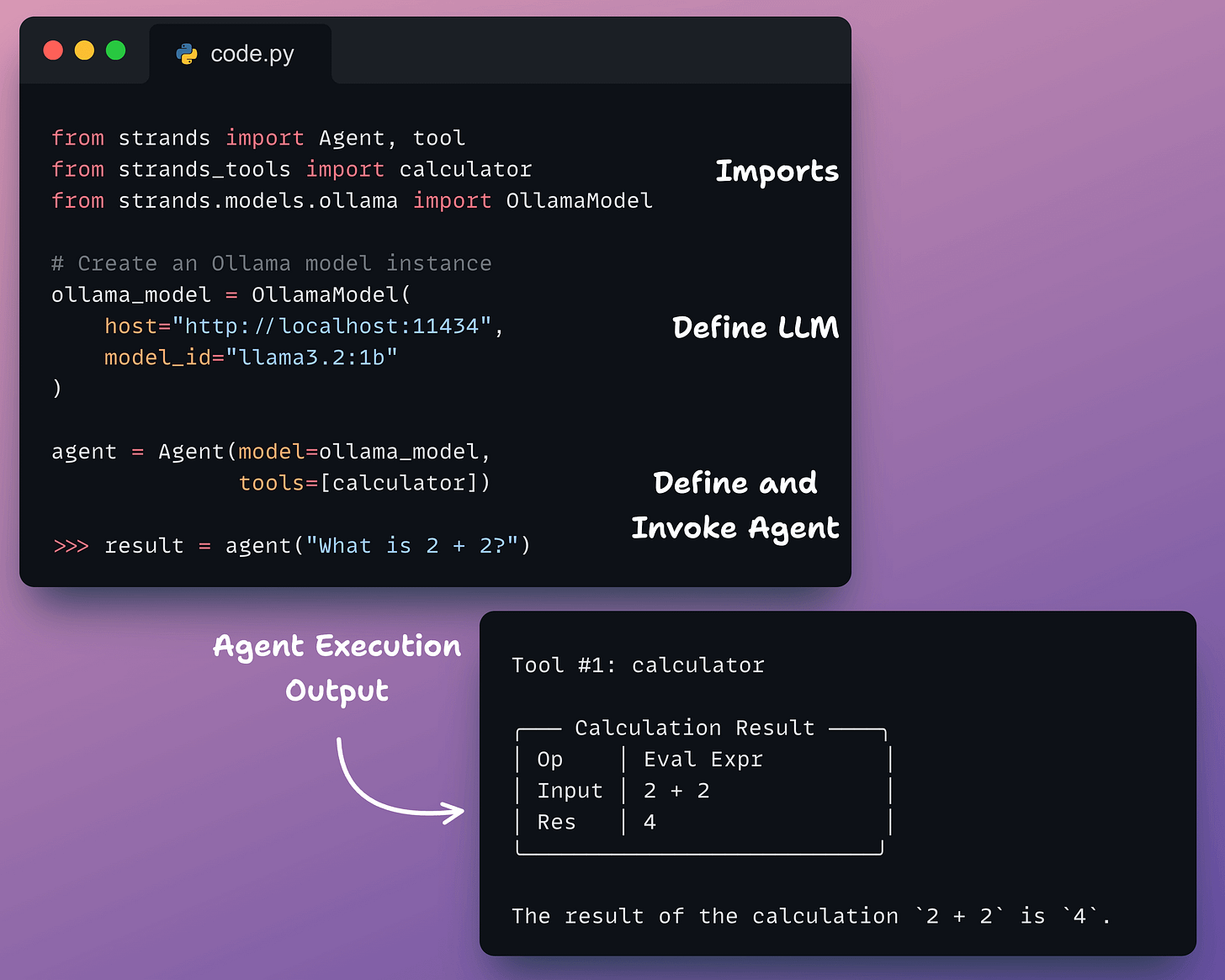

Now building and running an Agent is as simple as these few lines of code:
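The original snippet is not reproduced here, so below is a hedged sketch of what such a minimal Agent could look like. It assumes the `strands-agents` package (with its Ollama extra) and `strands-agents-tools` are installed, and that a tool-capable model is already pulled in Ollama. The `llama3.1` model id and the `build_agent` / `run_task` helper names are assumptions for illustration.

```python
def build_agent(model_id: str = "llama3.1", host: str = "http://localhost:11434"):
    """Create a Strands Agent backed by a local Ollama model (assumed setup)."""
    # Imported lazily so this file can be loaded even without the SDK installed.
    from strands import Agent
    from strands.models.ollama import OllamaModel
    from strands_tools import calculator

    model = OllamaModel(host=host, model_id=model_id)
    # No system prompt and no routing logic: only a model and a list of tools.
    return Agent(model=model, tools=[calculator])


def run_task(task: str):
    """Hand a natural-language task to the agent and return its result."""
    agent = build_agent()
    return agent(task)
```

With Ollama running, something like `run_task("What is 1234 * 5678?")` would let the model decide on its own whether to call the calculator tool.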

You can print metrics, like token usage with `result.totalTokens`, run-time with `result.latencyMs`, or run `result.get_summary()` to get a detailed nested JSON summary with tool calls, traces, etc.
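Here is a hedged sketch of collecting those metrics in one place. It assumes the values are exposed under `result.metrics` (as `accumulated_usage`, `accumulated_metrics`, and `get_summary()`), which is how recent Strands SDK versions appear to structure them, so verify the exact attribute paths against your installed version. The `summarize_run` helper and the stand-in result object are hypothetical and exist only so the snippet runs without a model call.

```python
from types import SimpleNamespace


def summarize_run(result) -> dict:
    """Pull token usage, latency, and the full summary from an agent result."""
    metrics = result.metrics  # assumed location of the metrics object
    return {
        "total_tokens": metrics.accumulated_usage["totalTokens"],
        "latency_ms": metrics.accumulated_metrics["latencyMs"],
        "summary": metrics.get_summary(),
    }


# Stand-in for a real agent result, with made-up numbers for illustration only.
fake_result = SimpleNamespace(
    metrics=SimpleNamespace(
        accumulated_usage={"totalTokens": 128},
        accumulated_metrics={"latencyMs": 950},
        get_summary=lambda: {"total_cycles": 1},
    )
)

report = summarize_run(fake_result)
print(report["total_tokens"], report["latency_ms"])
```

In a real run, you would pass the object returned by the agent call instead of `fake_result`.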

While this is a minimal example, notice that there are no system prompts, no routing logic, no hardcoded steps, and no predefined workflow.

It simply defines three things: the model, the tools to use, and the task.

From there, the agent loop takes over.

At the time of Agent invocation, the model decides whether it needs a tool, which tool to use, how to structure the input, and when to stop.

Everything is model-driven.

So instead of encoding decision trees like “if math then calculator” or “if API fails then retry,” the model handles planning and execution dynamically. The workflow emerges at runtime based on the goal and available tools.
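To make the idea concrete, here is a toy, dependency-free sketch of a model-driven loop. It is not how Strands implements its agent loop. The `scripted_model` function is a hypothetical stand-in for an LLM, and the message format is an assumption chosen for readability. The point is that the loop itself contains no "if math then calculator" branch: every decision comes from the model.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def calculator(expression: str) -> str:
    """Toy tool: evaluates 'a op b' where the three parts are space-separated."""
    left, op, right = expression.split()
    return str(OPS[op](float(left), float(right)))


TOOLS = {"calculator": calculator}


def scripted_model(messages: list) -> dict:
    """Stand-in for an LLM: request the calculator once, then answer."""
    last = messages[-1]
    if last["role"] == "user":
        return {"tool": "calculator", "input": "12 * 7"}
    return {"final": f"The answer is {last['content']}."}


def agent_loop(task: str, model, tools: dict, max_steps: int = 5) -> str:
    """Keep asking the model what to do until it returns a final answer."""
    messages = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        decision = model(messages)
        if "final" in decision:  # the model decided it is done
            return decision["final"]
        tool = tools[decision["tool"]]  # the model picked the tool
        messages.append({"role": "tool", "content": tool(decision["input"])})
    raise RuntimeError("agent did not finish within max_steps")


answer = agent_loop("What is 12 * 7?", scripted_model, TOOLS)
```

Swapping `scripted_model` for a real LLM call changes the behavior without touching `agent_loop`, which is the property the paragraph above describes.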

Strands Agents shifts agent development away from brittle orchestration and toward letting modern LLMs do what they are already good at: reasoning, planning, and adapting step by step.

Let’s look at a more concrete and real-world example.

We’ll build an MCP-powered Agent that can create 3Blue1Brown-style videos for us by just taking a simple prompt.

Yet again, the process stays similar:

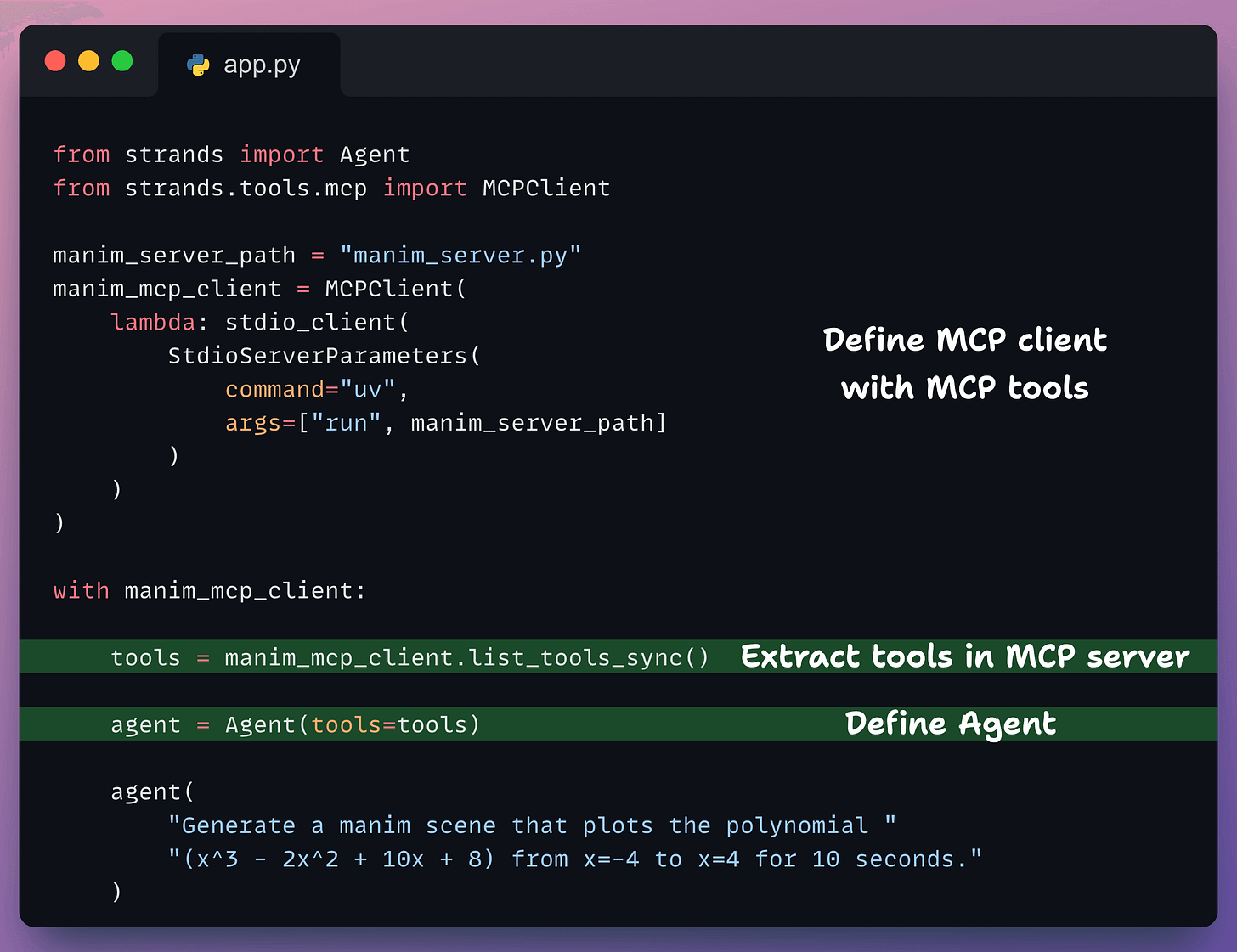

In the above code:

An MCP client connects to an MCP server. This server has a tool that executes the Manim script generated by the model.

We extract the tool via the `list_tools_sync()` method and define the Agent with these tools.

Finally, we execute the Agent!
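Since the code above is only shown as an image, here is a hedged sketch of the same three steps. It assumes the `strands-agents` and `mcp` packages are installed and that a Manim MCP server can be launched over stdio. The `manim_server.py` path, the `python` launch command, and both helper names are hypothetical placeholders.

```python
def make_manim_client(server_script: str = "manim_server.py"):
    """Build an MCP client that launches a (hypothetical) Manim server over stdio."""
    # Imported lazily so this file can be loaded without the SDKs installed.
    from mcp import StdioServerParameters, stdio_client
    from strands.tools.mcp import MCPClient

    params = StdioServerParameters(command="python", args=[server_script])
    return MCPClient(lambda: stdio_client(params))


def generate_video(prompt: str, server_script: str = "manim_server.py", model=None):
    """Connect to the server, hand its tools to an Agent, and run the prompt."""
    from strands import Agent

    client = make_manim_client(server_script)
    with client:  # the MCP session must stay open while the agent runs
        tools = client.list_tools_sync()
        # model=None falls back to the SDK default; pass a local model to stay offline.
        agent = Agent(model=model, tools=tools)
        return agent(prompt)
```

A call such as `generate_video("Animate the Pythagorean theorem in 3Blue1Brown style")` would then leave it to the model to write the Manim scene and invoke the server's tool.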

This produces the following video output:

Notice that we defined the Agent with only tools. No system prompts or workflow rules. The model decides when and how to use Manim based purely on the task.

A single natural language call drives the entire flow. The model plans the steps, generates the Manim scene, and invokes the right tool autonomously.

Lastly, if needed, you can also deploy the Agent over the Amazon Bedrock AgentCore Runtime in a few lines of code!

It is a secure, serverless runtime purpose-built for deploying and scaling dynamic AI agents and tools using any open-source framework, including Strands Agents, LangChain, LangGraph, and CrewAI.

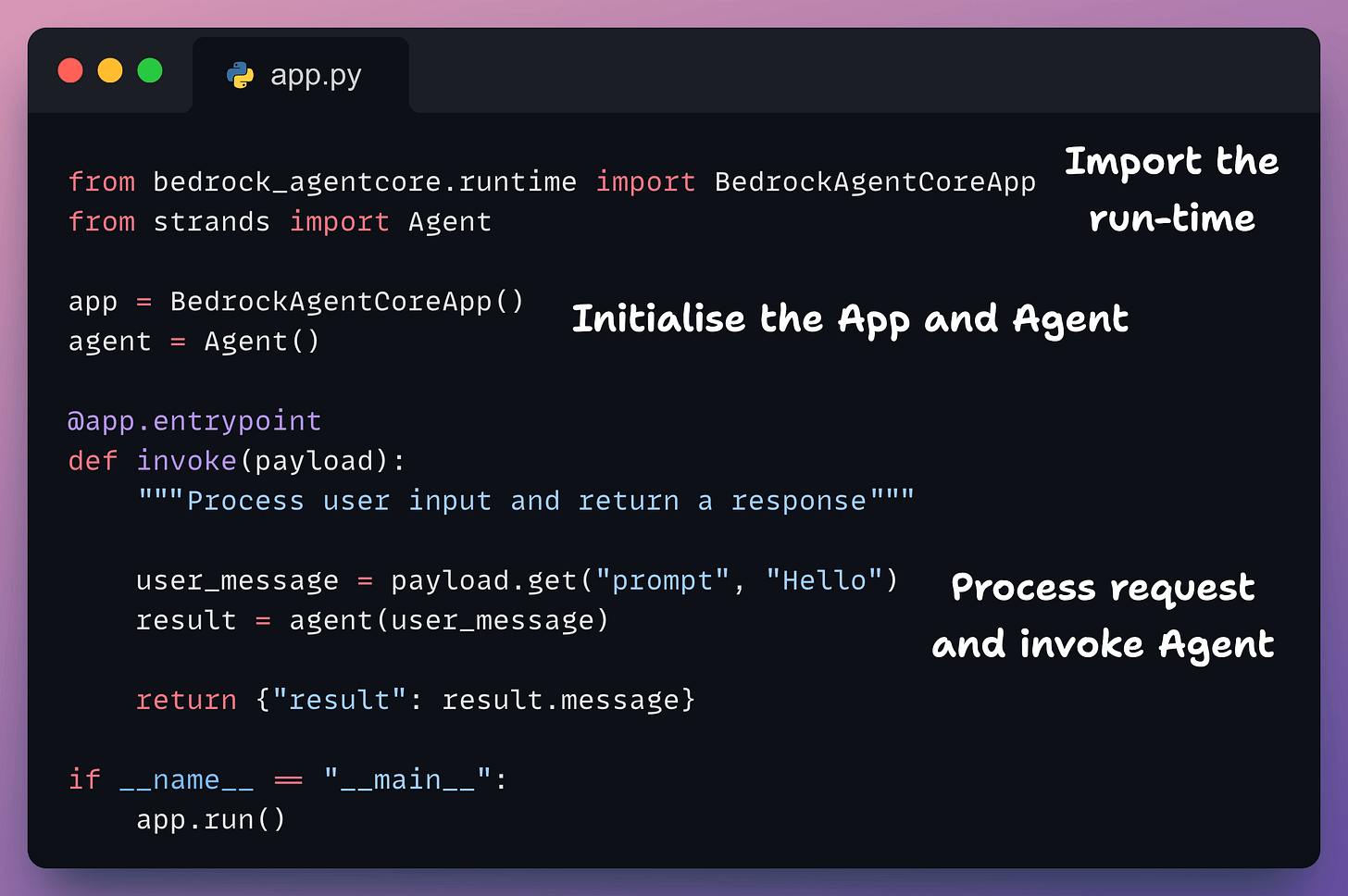

Here’s a simple example:

In the above code, we:

Import the Bedrock AgentCore runtime alongside Strands

Initialize a `BedrockAgentCoreApp` that handles scaling, security, and the request lifecycle.

Define a Strands `Agent` exactly the same way as before.

Register a single entrypoint function that receives user input, forwards it to the agent, and returns the response.
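The steps listed above can be sketched roughly as follows. This is a hedged example that assumes the `bedrock-agentcore` and `strands-agents` packages are installed. The `"prompt"` payload key, the response shape, and the `handle_request` / `create_app` helper names are assumptions for illustration, not a fixed contract.

```python
def handle_request(agent, payload: dict) -> dict:
    """Forward the user's input to the agent and wrap the reply."""
    prompt = payload.get("prompt", "")  # assumed payload key
    result = agent(prompt)
    return {"result": str(result)}


def create_app():
    """Wire a Strands Agent into a Bedrock AgentCore app."""
    # Imported lazily so this file can be loaded without the SDKs installed.
    from bedrock_agentcore.runtime import BedrockAgentCoreApp
    from strands import Agent

    app = BedrockAgentCoreApp()  # handles the request lifecycle
    agent = Agent()              # defined the same way as before

    @app.entrypoint
    def invoke(payload: dict) -> dict:
        return handle_request(agent, payload)

    return app
```

Calling `create_app().run()` starts the app locally. Keeping `handle_request` separate also makes the request logic easy to test with a fake agent.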

You can find more details in the documentation here →

The example above shows what makes Strands Agents genuinely interesting. You are not assembling an agent out of prompts, routers, and special cases.

You are defining capabilities and letting the model reason its way through the task, which lowers the barrier to building useful agents while still leaving plenty of room for control, customization, and experimentation.

A minimal setup can already produce surprisingly capable behavior, and as you add better tools or models, the agent naturally becomes more powerful without rewriting the workflow.

You can find the Strands Agents GitHub repo here →

👉 Over to you: What would you like us to build next with Strands Agents?

Thanks to AWS for building this and working with us on today’s newsletter issue.

P.S. For those wanting to develop “Industry ML” expertise:

At the end of the day, all businesses care about impact. That’s it!

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have discussed several other topics (with implementations) that align with such goals.

Here are some of them:

Learn everything about MCPs in this crash course with 9 parts →

Learn how to build Agentic systems in a crash course with 14 parts.

Learn how to build real-world RAG apps and evaluate and scale them in this crash course.

Learn sophisticated graph architectures and how to train them on graph data.

So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

Learn how to run large models on small devices using Quantization techniques.

Learn how to generate prediction intervals or sets with strong statistical guarantees for increasing trust using Conformal Predictions.

Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

Learn how to scale and implement ML model training in this practical guide.

Learn techniques to reliably test new models in production.

Learn how to build privacy-first ML systems using Federated Learning.

Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate key skills that businesses and companies care about the most.