Build Human-like Memory for Your AI Agents

A must-read research paper on Agents!

Agentic and RAG systems typically struggle with real-time knowledge updates and fast data retrieval.

Today, let’s discuss a recent paper by Zep that introduces an interesting temporally aware knowledge graph architecture to address this.

In short, Zep’s memory system for AI agents outperforms several traditional approaches, improving accuracy by up to 18.5% and reducing latency by 90%.

Let’s dive in!

The challenge

Retrieving static documents is often sufficient in typical LLM systems, such as RAG pipelines.

However, several real-world enterprise applications demand more.

AI agents need:

Real-time updates from live conversations.

A memory architecture capable of linking historical and current contexts.

The ability to resolve conflicts and validate facts as new data arrives.

Zep’s solution

The paper solves this by proposing a continuously learning, hierarchical, and temporally aware knowledge graph.

Think of it as human memory for AI agents.

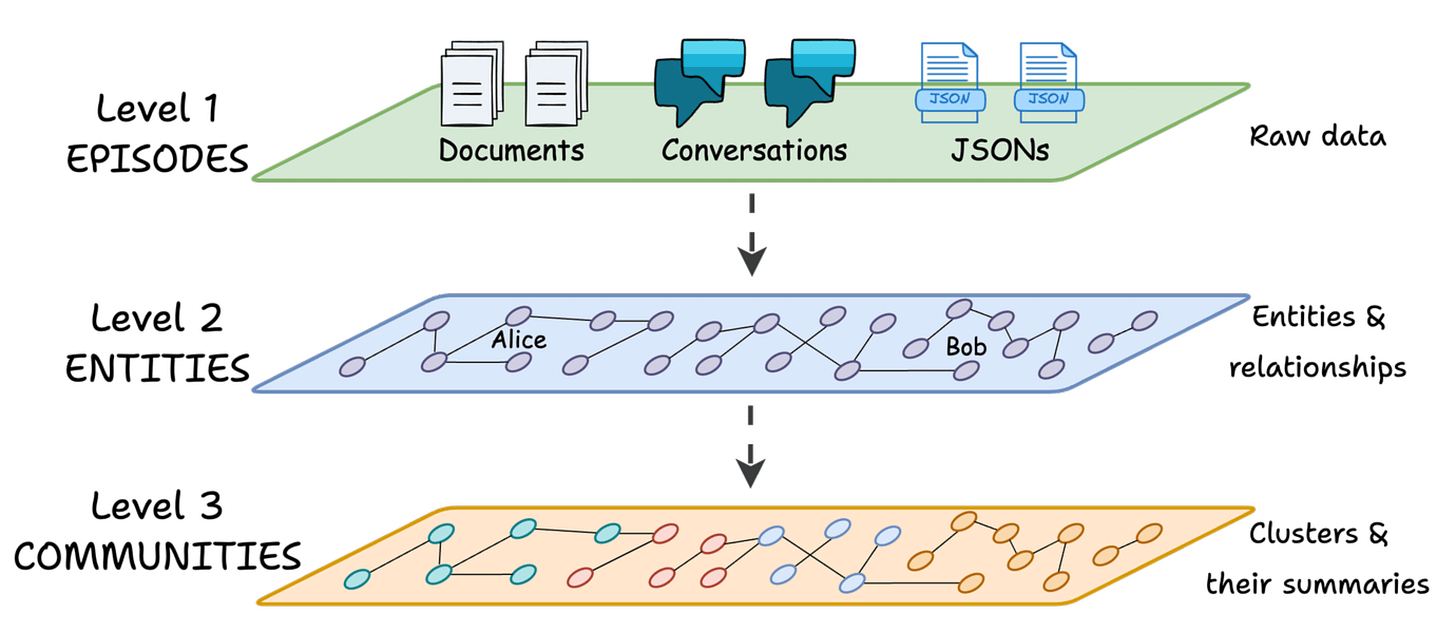

The following visual explains the memory component for Agents:

The memory structure has three key layers:

Episodic memory: Stores raw data like documents, conversations, JSON payloads, etc., ensuring no information is lost.

Semantic memory: Extracts entities and relationships, forming connections like "Alice WORKS_AT Google."

Community memory: Groups related entities into clusters for a high-level overview, such as a community summarizing Alice’s professional network.
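To make the three layers concrete, here is a minimal sketch of how they could be modeled as plain data types. The class and field names (`Episode`, `EntityEdge`, `Community`, `MemoryGraph`, `episode_ids`) are hypothetical and are not Zep's actual schema. The point is only that each semantic fact keeps a pointer back to the raw episode it came from, and communities sit on top of entities.

```python
from dataclasses import dataclass, field

# Hypothetical types illustrating the three memory tiers; not Zep's actual schema.

@dataclass
class Episode:
    """Episodic tier: raw, unmodified input (a message, document, or JSON blob)."""
    episode_id: str
    content: str

@dataclass
class EntityEdge:
    """Semantic tier: a fact linking two entities, e.g. Alice WORKS_AT Google."""
    source: str
    relation: str
    target: str
    episode_ids: list[str] = field(default_factory=list)  # provenance back to raw episodes

@dataclass
class Community:
    """Community tier: a cluster of related entities plus a short summary."""
    members: set[str]
    summary: str

@dataclass
class MemoryGraph:
    episodes: list[Episode] = field(default_factory=list)
    edges: list[EntityEdge] = field(default_factory=list)
    communities: list[Community] = field(default_factory=list)

graph = MemoryGraph()
graph.episodes.append(Episode("ep1", "I started working at Google in 2020."))
graph.edges.append(EntityEdge("Alice", "WORKS_AT", "Google", ["ep1"]))
graph.communities.append(Community({"Alice", "Google"}, "Alice's employer"))
```

Keeping `episode_ids` on every edge is what lets the episodic layer act as a lossless source of truth: any extracted fact can be traced back to the exact input that produced it.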

Here's how this relates to human memory:

Think of how we process a conversation.

Imagine Alice tells you, "I started working at Google in 2020, collaborated with Bob on an AI project last year, and graduated from Stanford."

1) Episodic Memory:

You store the raw conversation, including Alice’s words and the setting where the conversation happened.

2) Semantic Memory:

From Alice’s words, you extract key facts like:

“Alice WORKS_AT Google.”

“Alice COLLABORATED_WITH Bob on an AI project in 2023.”

“Alice GRADUATED_FROM Stanford.”

Similarly, Zep’s approach processes episodic data, extracts entities and relationships, and stores them as "semantic edges" in the knowledge graph.
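Here is a rough sketch of that ingestion step. In Zep the triples come from an LLM; here they are hard-coded, and entities are merged by a simple case-insensitive name match. Both of these are simplifying assumptions, and the names `Fact` and `ingest` are hypothetical. The sketch shows two ideas: repeated mentions of the same entity resolve to one node, and every edge records the episode it was extracted from.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Fact:
    subject: str
    relation: str
    obj: str
    episode_id: str  # which raw episode this fact came from

def ingest(episode_id, triples, nodes, edges):
    """Add extracted triples to the graph, merging entities by case-insensitive name.

    `triples` stands in for LLM extraction output; real entity resolution
    would be far more robust than this lowercase match.
    """
    for subj, rel, obj in triples:
        s = nodes.setdefault(subj.lower(), subj)  # reuse the first-seen canonical name
        o = nodes.setdefault(obj.lower(), obj)
        edges.append(Fact(s, rel, o, episode_id))

nodes, edges = {}, []
ingest("ep1", [
    ("Alice", "WORKS_AT", "Google"),
    ("Alice", "COLLABORATED_WITH", "Bob"),
    ("Alice", "GRADUATED_FROM", "Stanford"),
], nodes, edges)
# A later episode mentions the same entities with different casing
ingest("ep2", [("alice", "WORKS_AT", "google")], nodes, edges)

for f in edges:
    print(f.subject, f.relation, f.obj, f.episode_id)
```

After both calls the graph still has only four entity nodes (Alice, Google, Bob, Stanford), and the second episode's fact attaches to the existing "Alice" and "Google" nodes instead of creating duplicates.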

3) Community Memory:

Over time, you build a broader understanding of Alice’s network—her expertise in AI, connections like Bob, and affiliations with Google and Stanford.

Zep mirrors this by grouping related entities into a community, providing a summarized view of Alice’s professional landscape.
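As a loose illustration, the sketch below groups entities into connected components of the fact graph and attaches a placeholder summary. Connected components are a deliberately naive stand-in for community detection; Zep's actual clustering algorithm and its LLM-written summaries are not reproduced here. The function name `communities` and the extra "Carol" fact are hypothetical.

```python
from collections import defaultdict

def communities(edges):
    """Group entities into connected components of the fact graph.

    A naive stand-in for real community detection.
    """
    adj = defaultdict(set)
    for s, _, o in edges:
        adj[s].add(o)
        adj[o].add(s)
    seen, groups = set(), []
    for start in adj:
        if start in seen:
            continue
        # Iterative depth-first search collects one component
        stack, group = [start], set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            group.add(node)
            stack.extend(adj[node] - seen)
        groups.append(group)
    return groups

edges = [
    ("Alice", "WORKS_AT", "Google"),
    ("Alice", "COLLABORATED_WITH", "Bob"),
    ("Alice", "GRADUATED_FROM", "Stanford"),
    ("Carol", "LIVES_IN", "Paris"),  # hypothetical unrelated fact
]
for group in communities(edges):
    # A template summary in place of an LLM-generated one
    print(sorted(group), "->", f"{len(group)} related entities")
```

Alice, Google, Bob and Stanford end up in one community, while the unrelated Carol/Paris fact forms its own, so a query about Alice's professional network only needs to read one compact summary.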

On top of all this, it also maintains a temporal structure.

For instance, if Alice says, "I moved to Meta in 2023," Zep doesn’t overwrite the previous fact about Google. Instead, it updates the knowledge graph to reflect both states with timestamps:

Alice WORKS_AT Google (Valid: 2020–2023)

Alice WORKS_AT Meta (Valid: 2023–Present)
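Here is a minimal sketch of that "invalidate, don't overwrite" update. It assumes a simple rule that a new fact conflicts with any still-open fact sharing the same subject and relation; Zep decides conflicts differently, so treat the rule and the names `TemporalEdge`, `add_fact` and `facts_at` as illustrative only.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class TemporalEdge:
    subject: str
    relation: str
    obj: str
    valid_from: int
    valid_to: Optional[int] = None  # None means "still true"

def add_fact(edges, new):
    """Close (not delete) open facts that the new one supersedes.

    Assumption: same subject + relation means conflict, which only makes
    sense for single-valued relations like WORKS_AT in this example.
    """
    for e in edges:
        if (e.subject, e.relation) == (new.subject, new.relation) and e.valid_to is None:
            e.valid_to = new.valid_from
    edges.append(new)

def facts_at(edges, year):
    """Return the facts that were true in the given year."""
    return [e for e in edges
            if e.valid_from <= year and (e.valid_to is None or year < e.valid_to)]

edges = []
add_fact(edges, TemporalEdge("Alice", "WORKS_AT", "Google", 2020))
add_fact(edges, TemporalEdge("Alice", "WORKS_AT", "Meta", 2023))

for e in edges:
    end = e.valid_to if e.valid_to is not None else "Present"
    print(f"{e.subject} {e.relation} {e.obj} (Valid: {e.valid_from}-{end})")
print([e.obj for e in facts_at(edges, 2021)], [e.obj for e in facts_at(edges, 2024)])
```

This prints the two validity ranges from the example (2020-2023 and 2023-Present), and a point-in-time lookup returns Google for 2021 and Meta for 2024, so the older fact stays queryable instead of being lost.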

This bi-temporal approach allows Zep to preserve the timeline of events (chronological order) and the order of data ingestion (transactional order).
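To see why two timelines matter, here is a sketch of the classic bi-temporal pattern, assuming integer years for the event timeline and integer ingestion "steps" for the transaction timeline. The field names and the supersede strategy (expire the old record, then store a closed-off copy) are a textbook approach chosen for illustration; Zep's exact bookkeeping may differ.

```python
from dataclasses import dataclass, replace
from typing import Optional

@dataclass(frozen=True)
class Record:
    subject: str
    relation: str
    obj: str
    valid_from: int                   # event timeline: when the fact became true (year)
    valid_to: Optional[int]           # event timeline: when it stopped being true
    recorded_at: int                  # ingestion timeline: when we learned it (step)
    expired_at: Optional[int] = None  # ingestion timeline: when this record was superseded

def supersede(records, new):
    """Bi-temporal update: expire the old record and store a closed-off copy."""
    out = []
    for r in records:
        conflicts = (r.expired_at is None and r.valid_to is None
                     and (r.subject, r.relation) == (new.subject, new.relation))
        if conflicts:
            out.append(replace(r, expired_at=new.recorded_at))  # old belief, kept for audit
            out.append(replace(r, valid_to=new.valid_from,
                               recorded_at=new.recorded_at))     # corrected belief
        else:
            out.append(r)
    out.append(new)
    return out

def query(records, event_year, as_of_step):
    """What did the system believe at `as_of_step` about the world in `event_year`?"""
    return [r.obj for r in records
            if r.recorded_at <= as_of_step
            and (r.expired_at is None or as_of_step < r.expired_at)
            and r.valid_from <= event_year
            and (r.valid_to is None or event_year < r.valid_to)]

records = [Record("Alice", "WORKS_AT", "Google", 2020, None, recorded_at=1)]
records = supersede(records, Record("Alice", "WORKS_AT", "Meta", 2023, None, recorded_at=5))

print(query(records, 2024, as_of_step=3))  # ['Google']: before the move was reported
print(query(records, 2024, as_of_step=6))  # ['Meta']: after the move was reported
print(query(records, 2021, as_of_step=6))  # ['Google']: history is preserved
```

The event timeline answers "where did Alice work in 2021?", while the ingestion timeline answers "what did the agent believe at step 3?". Keeping both lets the agent explain past answers that were correct given what it knew at the time.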

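As a result, Zep’s memory design for AI agents delivers up to 18.5% higher accuracy with 90% lower response latency than baseline implementations on the LongMemEval benchmark, and it also outperforms MemGPT on the Deep Memory Retrieval (DMR) benchmark.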

Interesting, isn’t it?

You can read this paper here: Memory paper by Zep.

We shall do a hands-on demo of this pretty soon.

Big thanks to Zep for giving us early access to this interesting paper and letting us showcase their research.

👉 Over to you: What are your suggestions to further improve this system?

Thanks for reading!
