Build Unified API Endpoints for Agents

...in a minute across any framework (CrewAI, LangGraph, Strands)!

MongoDB.local San Francisco on January 15th

MongoDB.local SF is happening on January 15th, a full-day event packed with technical sessions, product demos, and direct access to MongoDB experts.

Developers, architects, DevOps engineers, and anyone building (or learning to build) with MongoDB can attend.

The speaker lineup is also solid. You’ll hear from CJ Desai (MongoDB’s CEO), Sarah Guo (Conviction), Konstantine Buhler (Sequoia), and Tengyu Ma (MongoDB’s Chief AI Scientist).

Plus sessions from NVIDIA, AWS, Google Cloud, and several AI startups.

The focus is entirely practical: how to build and scale AI-powered applications with MongoDB. Topics include Vector Search, RAG implementations, and agentic systems.

$100 for a full-day pass (use code MDBBuilder for 50% off)

Thanks to MongoDB for partnering today!

Build Unified API Endpoints for Agents

There’s a pattern that keeps repeating in software.

First, everyone focuses on the “building” problem.

Frameworks emerge, mature, and become genuinely good. Then suddenly, the constraint flips to deployment.

We saw this with neural networks.

PyTorch, TensorFlow, and Caffe were all excellent for building models. But deploying them meant dealing with different formats and runtimes.

ONNX allowed devs to build in whatever framework they want, export to a standard format, and deploy anywhere.

We’re watching the same pattern unfold with Agents right now.

Frameworks like LangGraph, CrewAI, Agno, and Strands are mature enough that building an agent is no longer the hardest part.

Instead, the hard part is what happens after that: deployment, streaming, memory management, observability, and auto-scaling.

These aren’t agent problems but rather infra problems. And right now, every AI team we have talked to is solving them from scratch.

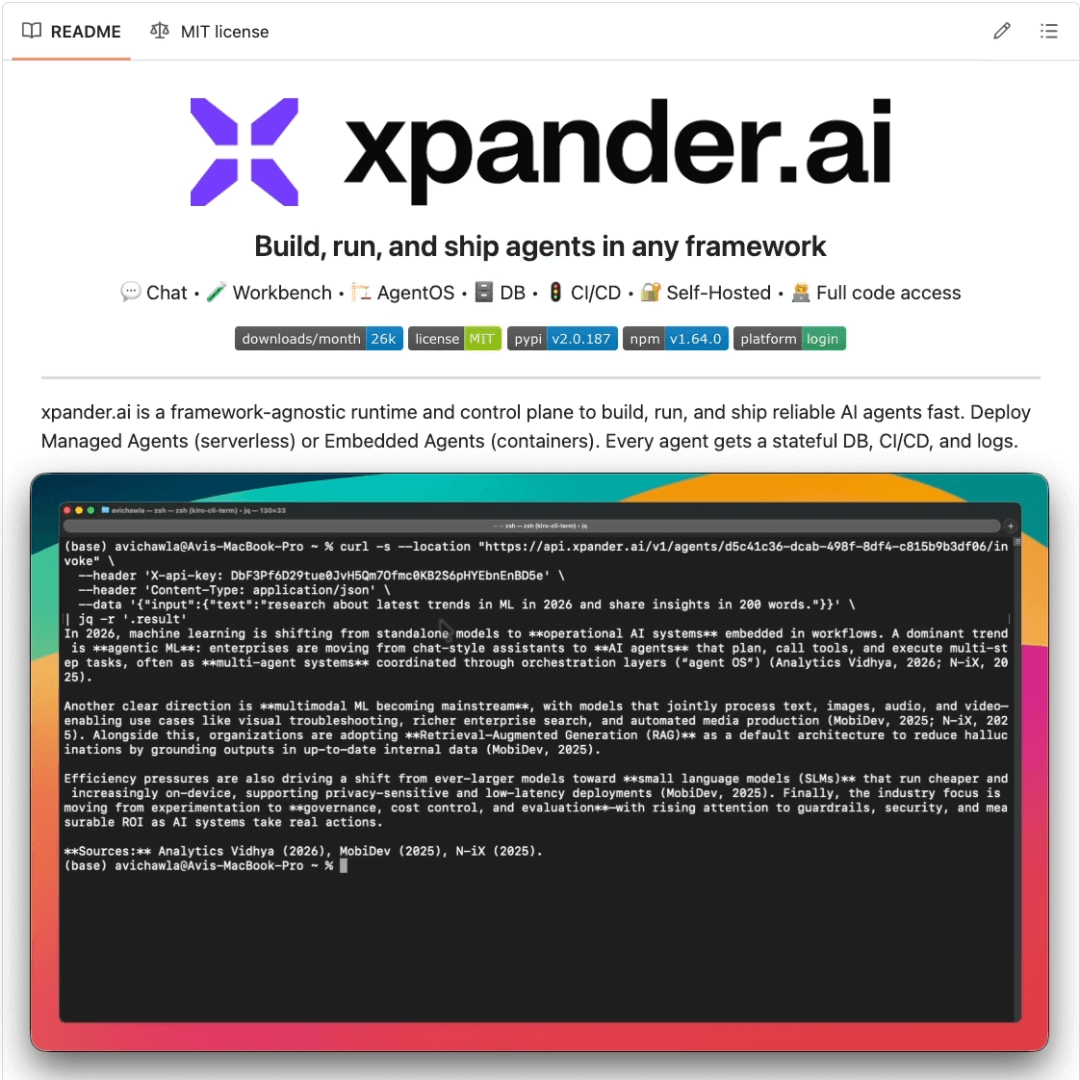

xpander (GitHub repo) is taking the ONNX approach to this problem, and it’s the right mental model.

The core idea is simple: bring your agent (built in any framework), deploy it through xpander, and get all the production infra.

This includes:

Serverless deployment in ~2 minutes

SSE streaming for real-time thinking UX

Memory management at session/user level

2,000+ connectors (Slack, GitHub, and more)

A unified API to invoke any agent, regardless of framework

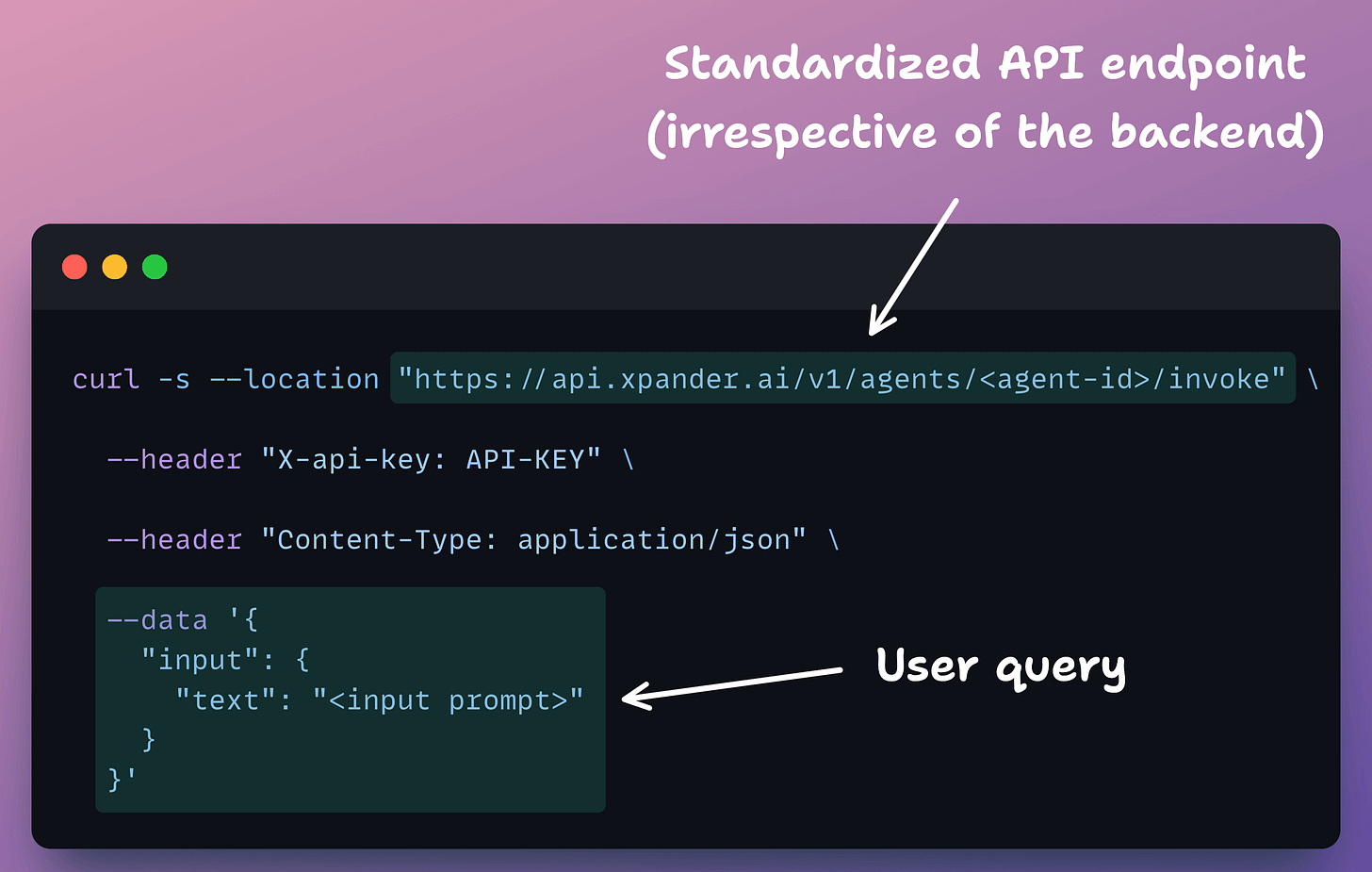

The Unified API is probably the most interesting part of this.

Essentially, any Agent you deploy (regardless of the framework) gets the same invocation endpoint, with the same payload structure, streaming format, and auth pattern.

This means your frontend doesn’t need to know if the agent was built with LangGraph, CrewAI, or something custom. It just hits the endpoint and gets a response.
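To make this concrete, here is a minimal sketch of what a framework-agnostic client could look like. The base URL, route, header names, payload fields, and event shapes below are all hypothetical and are not taken from xpander's actual API. The point is only that one request builder and one SSE parser can serve every agent.

```python
import json
from typing import Iterable, Iterator

# Hypothetical base URL for illustration; not a real xpander endpoint.
BASE_URL = "https://agents.example.com/v1/agents"


def build_request(agent_id: str, message: str, session_id: str, api_key: str) -> dict:
    """Build the same request shape for any agent, whatever framework it uses."""
    return {
        "url": f"{BASE_URL}/{agent_id}/invoke",
        "headers": {
            "Authorization": f"Bearer {api_key}",
            "Accept": "text/event-stream",
            "Content-Type": "application/json",
        },
        # Assumed payload fields: the user input plus a session id for memory.
        "body": json.dumps({"input": message, "session_id": session_id}),
    }


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one decoded JSON payload per SSE event (a blank line ends an event)."""
    data_parts = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line.startswith("data:"):
            value = line[5:]
            if value.startswith(" "):
                value = value[1:]  # SSE drops a single leading space
            data_parts.append(value)
        elif line == "" and data_parts:
            yield json.loads("\n".join(data_parts))
            data_parts = []
        # Comment lines (":...") and other fields are ignored in this sketch.
    if data_parts:  # flush a final event that has no trailing blank line
        yield json.loads("\n".join(data_parts))
```

The frontend would send the dict from `build_request` with whatever HTTP client it already uses and feed the response lines to `parse_sse`. Nothing in either function depends on the framework behind `agent_id`.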

This matters because in many orgs, different teams end up building agents with different frameworks based on their preferences or use cases.

Without a unified layer, they end up maintaining multiple integration patterns and streaming implementations.

xpander, however, provides one API contract over every agent, so the consuming app doesn’t need to know what framework the agent was built in.
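One way to picture "one API contract over every agent" is a thin adapter layer. The sketch below is an illustration of the general pattern, not xpander's implementation: `AgentEvent`, `wrap_sync_agent`, `wrap_streaming_agent`, and `REGISTRY` are hypothetical names, and the two lambdas stand in for agents built in different frameworks.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterator


@dataclass
class AgentEvent:
    """One item in the unified stream, identical for every framework."""
    type: str  # "token" or "done"
    text: str = ""


# An adapter turns a framework-specific agent into: prompt -> stream of AgentEvent.
Adapter = Callable[[str], Iterator[AgentEvent]]


def wrap_sync_agent(run: Callable[[str], str]) -> Adapter:
    """Adapt an agent that returns its whole answer at once."""
    def adapter(prompt: str) -> Iterator[AgentEvent]:
        yield AgentEvent("token", run(prompt))
        yield AgentEvent("done")
    return adapter


def wrap_streaming_agent(stream: Callable[[str], Iterator[str]]) -> Adapter:
    """Adapt an agent that already yields text chunks."""
    def adapter(prompt: str) -> Iterator[AgentEvent]:
        for chunk in stream(prompt):
            yield AgentEvent("token", chunk)
        yield AgentEvent("done")
    return adapter


REGISTRY: Dict[str, Adapter] = {}


def invoke(agent_id: str, prompt: str) -> Iterator[AgentEvent]:
    """Single entry point: callers never see which framework sits behind agent_id."""
    return REGISTRY[agent_id](prompt)


# Stand-ins for real framework agents (hypothetical, for demonstration only).
REGISTRY["summarizer"] = wrap_sync_agent(lambda p: f"summary of {p}")
REGISTRY["chat"] = wrap_streaming_agent(lambda p: iter(p.split()))
```

Calling `invoke("summarizer", ...)` and `invoke("chat", ...)` returns the same event type in the same order (tokens, then `done`), so the consuming app handles both with one code path.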

The video below demonstrates xpander’s unified API in action.

You can extend the same standardized API endpoint format to any Agent, regardless of the framework, and get the same streaming, memory, observability, and scaling benefits without changing a single line of integration code.

The building stays decoupled from the deployment, which is exactly how it should be.

You can find the GitHub repo here →

If you want to learn more about Agent deployment, we also covered this in a hands-on demo recently.

Here’s the video:

You’ll learn how to build and deploy a Coding Agent using xpander that can scrape docs, write production-ready code, solve issues, and raise PRs, directly from Slack.

Thanks for reading/watching!