Conformal Predictions: Build Confidence in Your ML Model's Predictions

A step towards building and using ML models reliably.

Conformal prediction has gained considerable traction in recent years, as Google Trends results show.

The reason is quite apparent.

ML models have become increasingly democratized. However, not every user, such as a doctor or a financial professional, can critically inspect a model's predictions.

Thus, it is the responsibility of the ML team to provide a handy, layperson-friendly way to communicate the risk associated with each prediction.

Conformal predictions solve this problem.

We are discussing it in this week’s deep dive: Conformal Predictions: Build Confidence in Your ML Model’s Predictions.

Why care?

Blindly trusting an ML model’s predictions can be fatal at times.

This is especially true in high-stakes environments where decisions based on these predictions can affect human lives, financial stability, etc.

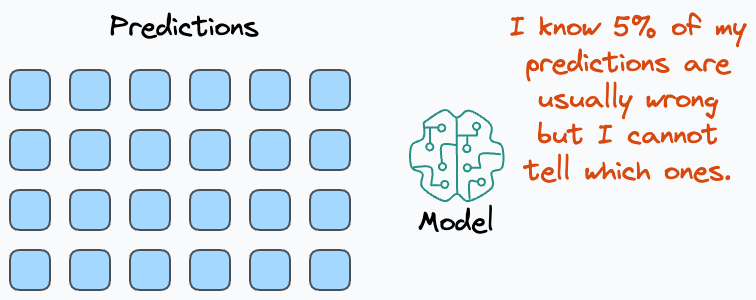

Also, while an accuracy of 95% may look good on paper, the model never tells you which specific 5% of its predictions are incorrect.

To put it another way, although we may know how often the model is correct overall, the model typically says little about any specific prediction.

As a result, in high-risk settings such as medical diagnostics, blindly trusting every prediction can lead to severe health outcomes.

Thus, to mitigate such risks, it is essential not to rely solely on the predictions but also to understand and quantify the associated uncertainty.

If we don’t have any way to understand the model’s confidence, then an accurate prediction and a guess can look the same.

For instance, if you are a doctor looking at this MRI, a model output that simply says the person is normal and needs no treatment is of little use to you.

Instead, what doctors really care about is knowing whether there is, say, a 10% chance that the person has cancer, based on the reports.

Conformal predictions are one elegant, handy, and intuitive way to build confidence in your ML model’s predictions.

To help you put this into practice, we discuss it in this week’s deep dive: Conformal Predictions: Build Confidence in Your ML Model’s Predictions.

A somewhat tricky thing about conformal prediction is that it requires a slight shift in how you make decisions based on model outputs: instead of acting on a single predicted label, you reason over a set of plausible labels.
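To make that shift concrete, here is a minimal sketch of split conformal prediction for classification, which is one common variant; it is not necessarily the exact approach the deep dive takes. It assumes a model that outputs class probabilities, a held-out calibration set that is exchangeable with the test data, and a nonconformity score of 1 minus the probability assigned to the true class. The function names `conformal_threshold` and `prediction_sets` are hypothetical, and the Dirichlet-sampled probabilities are synthetic stand-ins for a real model's outputs. Instead of one label, each input gets a set of labels that, under these assumptions, contains the true label with probability at least 1 - alpha on average over inputs.

```python
import numpy as np

def conformal_threshold(cal_probs, cal_labels, alpha=0.1):
    """Split-conformal threshold q_hat from a held-out calibration set.

    cal_probs:  (n, K) array of predicted class probabilities
    cal_labels: (n,) array of true integer labels
    alpha:      target miscoverage rate (0.1 -> aim for ~90% coverage)
    """
    cal_probs = np.asarray(cal_probs, dtype=float)
    cal_labels = np.asarray(cal_labels)
    n = len(cal_labels)
    # Nonconformity score: 1 minus the probability given to the true class.
    scores = np.sort(1.0 - cal_probs[np.arange(n), cal_labels])
    # Finite-sample corrected rank: the ceil((n + 1)(1 - alpha))-th smallest score.
    k = int(np.ceil((n + 1) * (1 - alpha)))
    if k > n:
        # Too few calibration points for this alpha: every class gets included.
        return np.inf
    return scores[k - 1]

def prediction_sets(test_probs, q_hat):
    """For each test point, list the classes whose score 1 - p is at most q_hat."""
    test_probs = np.asarray(test_probs, dtype=float)
    return [np.flatnonzero(1.0 - p <= q_hat).tolist() for p in test_probs]

# Toy demo on synthetic data (hypothetical stand-in for a real model's softmax outputs).
rng = np.random.default_rng(0)
n_cal, n_test, n_classes = 1000, 2000, 5
probs = rng.dirichlet(np.ones(n_classes), size=n_cal + n_test)
# Labels are drawn from the probabilities themselves, i.e. a perfectly calibrated toy "model".
labels = np.array([rng.choice(n_classes, p=p) for p in probs])

q_hat = conformal_threshold(probs[:n_cal], labels[:n_cal], alpha=0.1)
sets = prediction_sets(probs[n_cal:], q_hat)
coverage = np.mean([y in s for y, s in zip(labels[n_cal:], sets)])
avg_size = np.mean([len(s) for s in sets])
print(f"q_hat={q_hat:.3f}  coverage={coverage:.3f}  avg set size={avg_size:.2f}")
```

Empirical coverage should land close to the 90% target. Inputs the model is unsure about receive larger sets, so the set size itself acts as a per-prediction uncertainty signal. Note that the guarantee holds on average across inputs, not for each individual prediction.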

Nonetheless, this field is definitely something I would recommend keeping an eye on, no matter where you are in your ML career.

Start by cultivating the skill here: Conformal Predictions: Build Confidence in Your ML Model’s Predictions.

Thanks for reading!