Context engineering for Agents

...explained visually.

Connect any LLM to any MCP server!

mcp-use is the open source framework to connect any LLM to any MCP server and build custom agents that have tool access, without using closed source or application clients.

It lets you build 100% local MCP clients.

Find the GitHub repo here → (don’t forget to star)

Context engineering for Agents

Context engineering is becoming increasingly important, but many people still struggle to understand what it actually means.

Today, let’s cover everything you need to know about context engineering in a step-by-step manner!

Let's begin!

Simply put, context engineering is the art and science of delivering the right information, in the right format, at the right time, to your LLM.

Here's a quote by Andrej Karpathy on context engineering...

To understand context engineering, it's essential to first understand the meaning of context.

Agents today have evolved into much more than just chatbots.

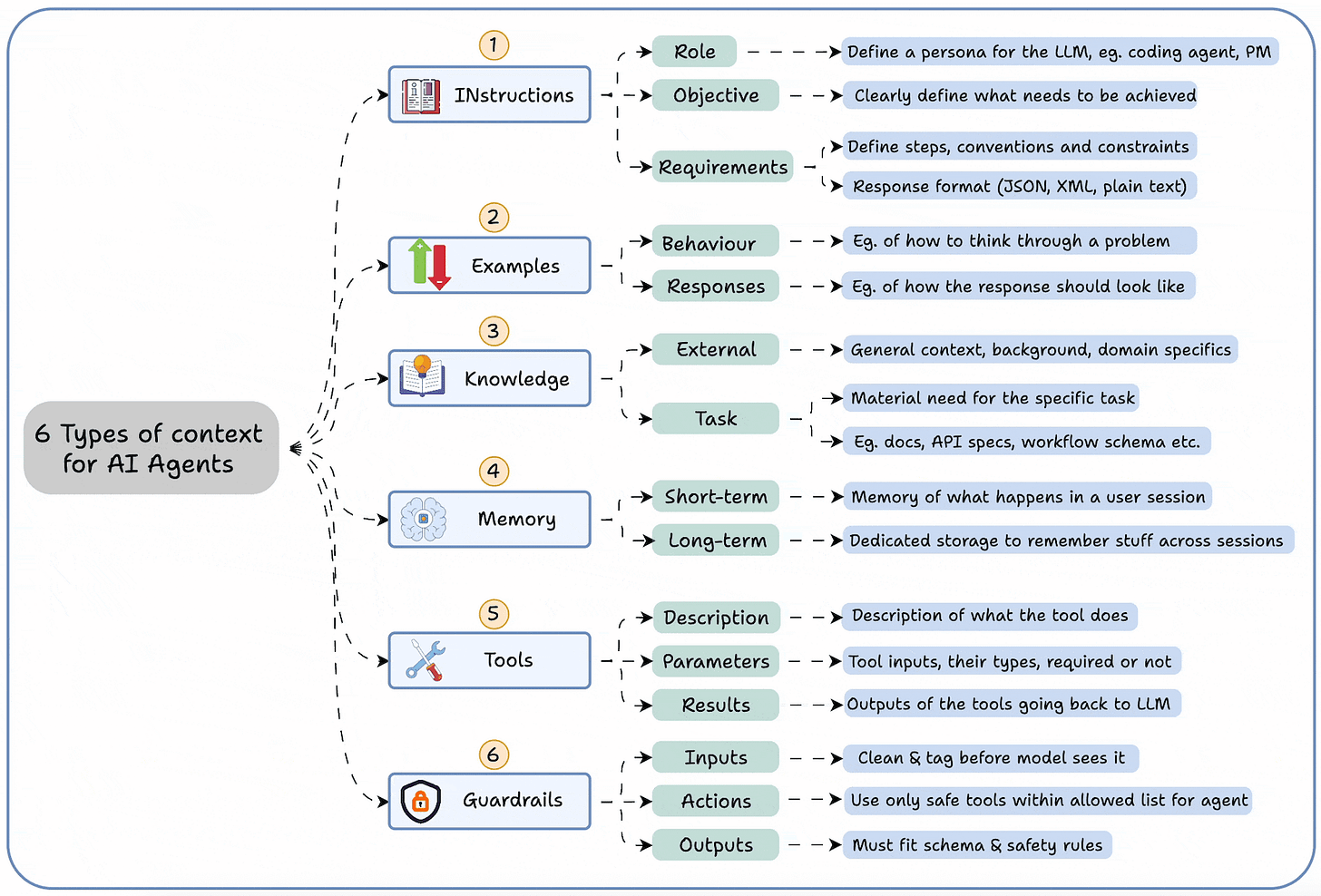

The graphic below summarizes the 6 types of contexts an agent needs to function properly, which are:

Instructions

Examples

Knowledge

Memory

Tools

Guardrails

This tells you that it's not enough to simply "prompt" the agents.

You must engineer the input (context).
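To make this concrete, here is a minimal sketch of what "engineering the input" could look like in code. The `AgentContext` class and its `render` method are hypothetical names chosen for illustration (they do not come from any library), and the billing content is made up. The idea is simply that each of the six context types gets its own slot, and the final prompt is assembled from whichever slots are filled.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentContext:
    """Hypothetical container for the six context types listed above."""
    instructions: str
    examples: List[str] = field(default_factory=list)
    knowledge: List[str] = field(default_factory=list)
    memory: List[str] = field(default_factory=list)
    tools: List[str] = field(default_factory=list)
    guardrails: List[str] = field(default_factory=list)

    def render(self) -> str:
        """Join the non-empty sections into a single prompt string."""
        sections = [
            ("Instructions", [self.instructions]),
            ("Examples", self.examples),
            ("Knowledge", self.knowledge),
            ("Memory", self.memory),
            ("Tools", self.tools),
            ("Guardrails", self.guardrails),
        ]
        parts = []
        for title, items in sections:
            if items:  # skip empty sections so they cost no tokens
                body = "\n".join(f"- {item}" for item in items)
                parts.append(f"## {title}\n{body}")
        return "\n\n".join(parts)


# Illustrative data only; a real agent would fill these from its own sources.
ctx = AgentContext(
    instructions="Answer billing questions concisely.",
    knowledge=["A refund is processed within 5 business days."],
    tools=["lookup_invoice(invoice_id)"],
    guardrails=["Never reveal full card numbers."],
)
prompt = ctx.render()
print(prompt)
```

Keeping the six types separate until the last moment makes it easy to swap, trim, or drop one of them without touching the others.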

Think of it this way:

If the LLM is a CPU, then the context window is the RAM.

You're essentially programming the "RAM" with the perfect instructions for your AI.

How do we do it?

Context engineering can be broken down into 4 fundamental stages:

Writing Context

Selecting Context

Compressing Context

Isolating Context

Let's understand each, one-by-one...

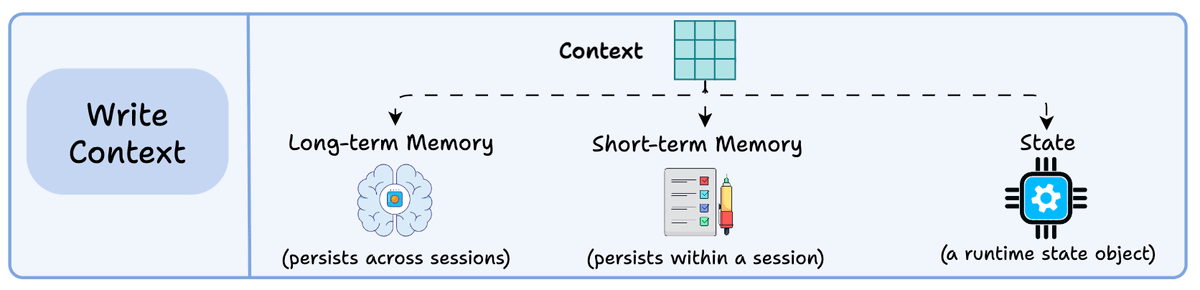

1) Writing context:

Writing context means saving it outside the context window to help an agent perform a task.

You can do so by writing it to:

Long-term memory (persists across sessions)

Short-term memory (persists within a session)

A state object
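Here is a rough sketch of these three write targets. The `Scratchpad` class is a hypothetical helper, not a real library API, and a JSON file stands in for long-term memory (a real system might use a database or a memory service instead).

```python
import json
import tempfile
from pathlib import Path


class Scratchpad:
    """Hypothetical helper that writes context to three places."""

    def __init__(self, long_term_path: Path):
        self.long_term_path = long_term_path  # long-term: persists across sessions
        self.short_term = []                  # short-term: lives within one session
        self.state = {}                       # state object: structured runtime data

    def read_long_term(self) -> dict:
        if self.long_term_path.exists():
            return json.loads(self.long_term_path.read_text())
        return {}

    def write_long_term(self, key: str, value: str) -> None:
        data = self.read_long_term()
        data[key] = value
        self.long_term_path.write_text(json.dumps(data))

    def write_short_term(self, note: str) -> None:
        self.short_term.append(note)

    def write_state(self, key: str, value) -> None:
        self.state[key] = value


memory_file = Path(tempfile.mkdtemp()) / "memory.json"

# Session 1: the agent saves what it learns instead of keeping it in the prompt.
session_1 = Scratchpad(memory_file)
session_1.write_long_term("preferred_language", "Python")
session_1.write_short_term("User asked about retry logic.")
session_1.write_state("current_step", 2)

# Session 2: a fresh object only sees what was written to long-term memory.
session_2 = Scratchpad(memory_file)
print(session_2.read_long_term())
print(session_2.short_term)
```

Nothing written here occupies the context window until something explicitly pulls it back in.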

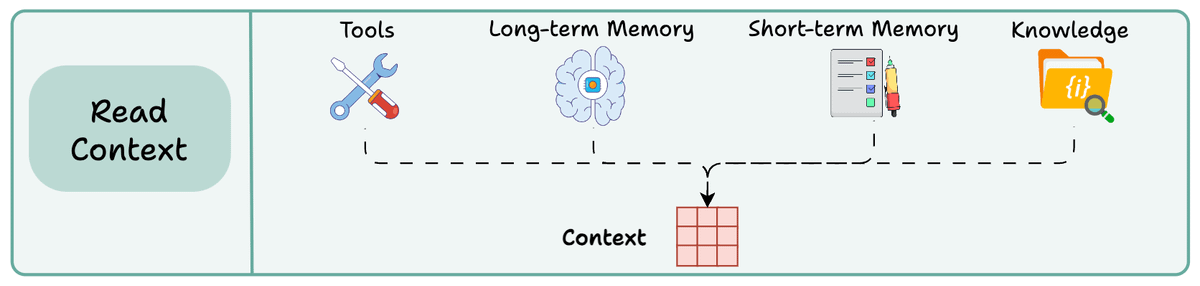

2) Selecting context:

Selecting context means pulling it into the context window to help an agent perform a task.

Now this context can be pulled from:

A tool

Memory

Knowledge base (docs, vector DB)
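Below is a minimal sketch of pulling context from these three sources. It assumes a simple keyword-overlap score as a stand-in for the embedding similarity a vector DB would provide. The `select_context` function, the stopword list, and all the sample strings are made up for illustration.

```python
import re

# Tiny illustrative stopword list; a real system would use a better one.
STOPWORDS = {"the", "a", "is", "are", "for", "on", "of", "when", "will"}


def tokenize(text: str) -> set:
    """Lowercase the text and return its set of non-stopword tokens."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {w for w in words if w not in STOPWORDS}


def select_context(query: str, candidates: list, top_k: int = 3) -> list:
    """Return up to top_k candidates that share the most words with the query."""
    query_words = tokenize(query)
    scored = []
    for text in candidates:
        overlap = len(query_words & tokenize(text))
        if overlap > 0:  # drop candidates with nothing in common
            scored.append((overlap, text))
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable sort keeps input order on ties
    return [text for _, text in scored[:top_k]]


knowledge_base = [
    "A refund is processed within 5 business days.",
    "Invoices are emailed on the first day of each month.",
    "Our office is closed on public holidays.",
]
memory = ["The user previously asked about a refund for invoice 1042."]
tool_output = ["lookup_invoice(1042) returned status: paid"]

selected = select_context(
    "When will the refund for invoice 1042 arrive?",
    knowledge_base + memory + tool_output,
)
for line in selected:
    print(line)
```

Only the selected lines would go into the context window; the unrelated knowledge base entries stay out.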

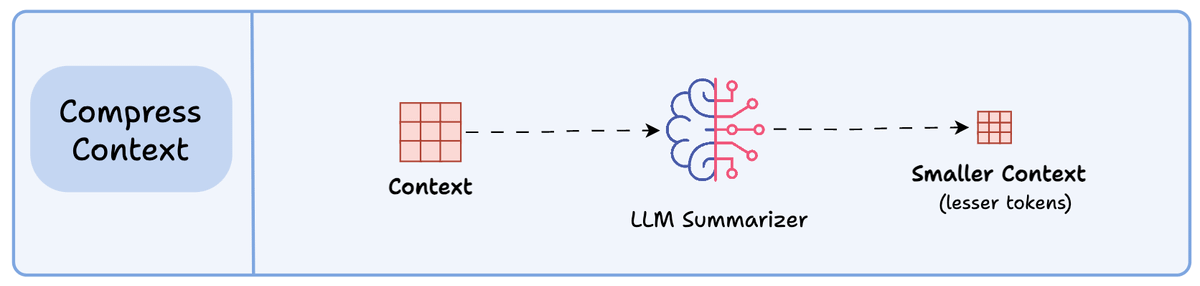

3) Compressing context:

Compressing context means keeping only the tokens needed for a task.

The retrieved context may contain duplicate or redundant information (for example, from multi-turn tool calls), which adds extra tokens and increases cost.

Context summarization helps here.
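Here is a rough sketch of the idea. It makes two loud assumptions: tokens are estimated by counting whitespace-separated words (a real tokenizer would give different numbers), and `naive_summary` is a placeholder for what would normally be an LLM summarization call. All function names are hypothetical, and the sketch does not guarantee the result fits the budget.

```python
def estimate_tokens(text: str) -> int:
    """Rough stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def naive_summary(messages: list) -> str:
    """Placeholder for an LLM summary: keep the first four words of each message."""
    heads = [" ".join(m.split()[:4]) for m in messages]
    return "Summary of earlier turns: " + "; ".join(heads)


def compress_context(messages: list, budget: int, keep_recent: int = 2) -> list:
    """Drop exact duplicates, then summarize older messages if still over budget."""
    seen = set()
    unique = []
    for message in messages:
        if message not in seen:  # keep only the first copy of each message
            seen.add(message)
            unique.append(message)

    if sum(estimate_tokens(m) for m in unique) <= budget:
        return unique

    # Still too long: fold everything except the most recent messages into a summary.
    split = max(len(unique) - keep_recent, 0)
    older, recent = unique[:split], unique[split:]
    if not older:
        return recent
    return [naive_summary(older)] + recent


history = [
    "tool: search_docs returned 3 results about refund policy and timelines",
    "tool: search_docs returned 3 results about refund policy and timelines",
    "assistant: the policy says refunds take 5 business days",
    "user: what about invoice 1042",
    "tool: lookup_invoice returned status paid",
]
compressed = compress_context(history, budget=25)
for line in compressed:
    print(line)
```

The duplicate tool call disappears first, and only then are the older turns summarized, so the most recent messages always stay verbatim.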

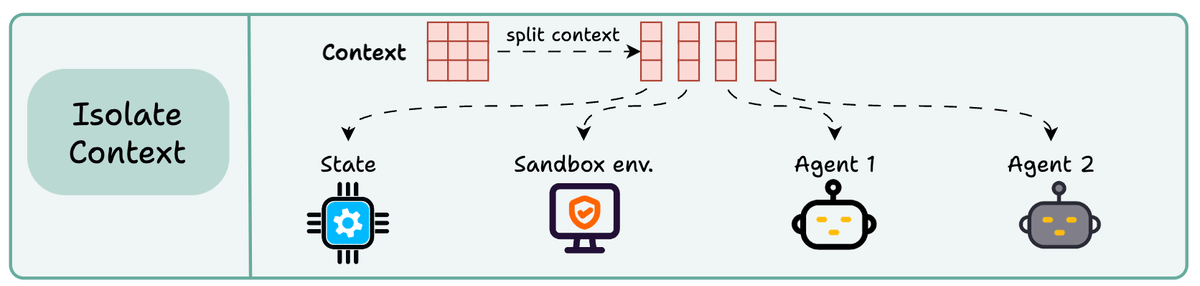

4) Isolating context:

Isolating context involves splitting it up to help an agent perform a task.

Some popular ways to do so are:

Using multiple agents (or sub-agents), each with its own context

Using a sandbox environment for code storage and execution

Using a state object
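Two of these ideas (sub-agents and a state object) can be sketched in a few lines. `SubAgent` and `Orchestrator` are hypothetical classes written for this illustration, and the `run` method returns a canned string where a real agent would call an LLM. Sandboxed code execution is not covered here.

```python
class SubAgent:
    """Hypothetical sub-agent that only ever sees its own context."""

    def __init__(self, name: str, instructions: str):
        self.name = name
        self.context = [instructions]  # private to this sub-agent

    def run(self, task: str) -> str:
        self.context.append(f"task: {task}")
        # Placeholder for an LLM call that would receive self.context only.
        return f"{self.name} finished: {task}"


class Orchestrator:
    """Hypothetical lead agent that keeps a state object with isolated fields."""

    def __init__(self):
        # "results" is shown to the lead agent's LLM; "scratch" never is.
        self.state = {"results": {}, "scratch": {}}
        self.agents = {}

    def add_agent(self, agent: SubAgent) -> None:
        self.agents[agent.name] = agent

    def delegate(self, agent_name: str, task: str) -> str:
        result = self.agents[agent_name].run(task)
        self.state["results"][agent_name] = result
        return result

    def visible_context(self) -> list:
        """Expose only the 'results' field of the state object."""
        return list(self.state["results"].values())


orch = Orchestrator()
orch.add_agent(SubAgent("researcher", "Find relevant sources."))
orch.add_agent(SubAgent("writer", "Draft a summary."))

orch.delegate("researcher", "collect refund policy docs")
orch.delegate("writer", "summarize the refund policy")
orch.state["scratch"]["raw_html"] = "<html>...large page...</html>"  # stays hidden

print(orch.agents["researcher"].context)
print(orch.visible_context())
```

Each sub-agent's context holds only its own instructions and task, and the bulky scratch data never reaches the lead agent's context window.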

So essentially, when you are building a context engineering workflow, you are engineering a “context” pipeline so that the LLM gets to see the right information, in the right format, at the right time.

This is exactly how context engineering works!

Nothing fancy.

👉 Over to you: What are your thoughts on context engineering? Have you built something with it yet?

Thanks for reading!
