CopilotKit CoAgents: Build Human-in-the-loop AI Agents With Ease

4 key features that make it 10x easier to develop AI Agents.

2024 has been the year of AI Agents.

What are they, why are they so popular, and what problems have we been running into while building them?

Let’s understand this today, along with how CoAgents, an entirely open-source development by CopilotKit, is solving those problems.

Before we begin…

Sign up for early access to CoAgents here:

Also, this Thursday (12th September), CopilotKit is organizing a webinar where you will learn how to build human-in-the-loop AI Agents with CoAgents. Register here: CoAgents event.

Let’s begin!

Background

Given the scale and capabilities of modern LLMs, it feels limiting to use them as “standalone generative models” for pretty ordinary tasks like text summarization, text completion, code completion, etc.

Instead, their true potential is only realized when you build systems around these models, where they are allowed to:

access, retrieve, and filter data from relevant sources,

analyze and process this data to make real-time decisions and more.

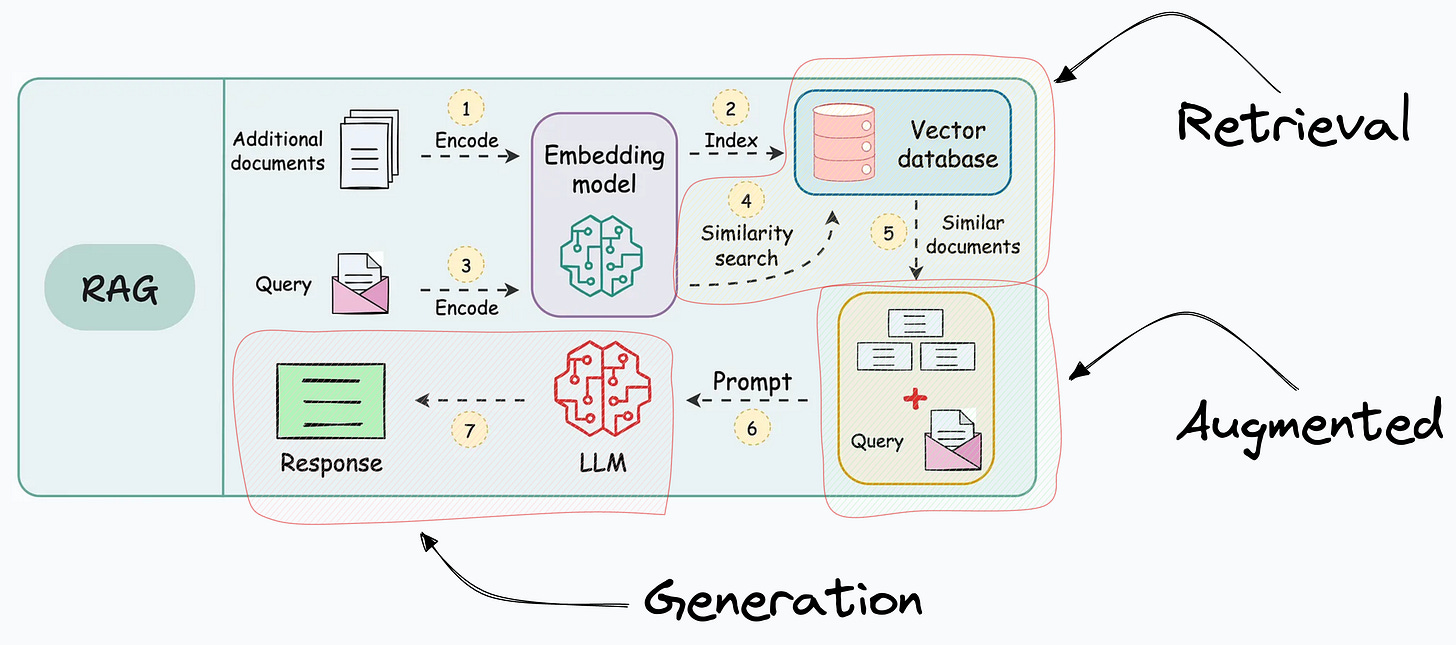

RAG was a pretty successful step towards building such compound AI systems:

But since RAG follows a programmatic flow (you as a programmer define the steps, the database to search for, the context to retrieve, etc.), it does not unlock the full autonomy one may expect these compound AI systems to possess.
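To make "programmatic flow" concrete, here is a minimal sketch of a RAG pipeline. Everything in it is an assumption for illustration: the toy corpus, the word-overlap retriever (a stand-in for vector search), and `fake_llm` (a stub, not a real model call). The point is only that the programmer hard-codes every step and its order.

```python
# Toy corpus; a real system would query a vector database instead.
DOCS = [
    "LangGraph models agents as graphs of nodes and edges.",
    "RAG retrieves context before the model generates an answer.",
    "LoRA fine-tunes a model by training low-rank adapters.",
]


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the query (stand-in for vector search)."""
    query_words = set(query.lower().split())
    ranked = sorted(
        docs,
        key=lambda doc: len(query_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Stuff the retrieved context into a fixed prompt template."""
    joined = "\n".join(context)
    return f"Context:\n{joined}\n\nQuestion: {query}"


def fake_llm(prompt: str) -> str:
    """Hypothetical stub standing in for a real LLM call."""
    return f"[answer generated from a {len(prompt)}-character prompt]"


def rag_answer(query: str) -> str:
    # The flow is fixed by the programmer: retrieve -> build prompt -> generate.
    context = retrieve(query, DOCS)
    prompt = build_prompt(query, context)
    return fake_llm(prompt)
```

Notice that the model never decides what to search, whether to search again, or when to stop. That missing decision-making is exactly the autonomy agents are meant to add.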

That is why the primary focus in 2024 has been on building AI Agents—autonomous systems that can reason, think, plan, figure out the relevant sources and extract information from them when needed, take actions, and even correct themselves if something goes wrong.

While this sounds promising, the issue with agents in their current state is that:

We are far from building fully autonomous AI agents.

More specifically, when an agent needs to perform a complex task with multiple steps, one mistake in the process derails the entire operation.

To avoid this, they need feedback mechanisms, such as human-in-the-loop (HITL), to guide them through the steps (IBM talks about this extensively in this blog).
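A back-of-the-envelope sketch shows why one mistake hurts so much in multi-step tasks. It rests on simplifying assumptions that are mine, not measurements: every step succeeds with the same probability, steps fail independently, and a human reviewer catches a fixed fraction of failed steps, which are then corrected.

```python
def chain_success(per_step: float, steps: int) -> float:
    """Probability that every step succeeds, assuming independent steps."""
    return per_step ** steps


def chain_success_with_review(per_step: float, steps: int, catch_rate: float) -> float:
    """Same chain, but a human catches (and fixes) a share of the failed steps."""
    effective = per_step + (1 - per_step) * catch_rate
    return effective ** steps


# Illustrative numbers only: 95% per-step accuracy over a 10-step task.
unattended = chain_success(0.95, 10)                  # roughly 0.60
reviewed = chain_success_with_review(0.95, 10, 0.9)   # roughly 0.95
```

Under these assumptions, an agent that is right 95% of the time per step finishes a 10-step task correctly only about 60% of the time, while a reviewer who catches 9 out of 10 mistakes brings that to about 95%.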

As the name suggests, human-in-the-loop workflows combine the power of AI Agents with humans.

CoAgents is an entirely open-source development by CopilotKit that provides all the necessary infrastructure to build such workflows.

I have talked about CopilotKit before in this newsletter. It’s an open-source copilot platform that lets us integrate AI into ANY application with very little code and without worrying about the typical integration challenges.

This newsletter is specifically about CoAgents and everything you can do with it.

All About CoAgents

At its core, CoAgents is heavily driven by LangGraph, a framework for defining, coordinating, and executing LLM agents in a structured manner using graphs.

CoAgents takes it a step further by providing the functionality that connects LangGraph with human-in-the-loop workflows, so we can build much more reliable AI Agents than we could with LangGraph alone.

Here are some key features.

For the demo, I will use this Perplexity-style search engine the team built using CopilotKit and LangGraph.

Four key features of CoAgents streamline the process of building AI Agents:

1) Stream intermediate agent state

With CoAgents, one can stream the agent’s intermediate states to the application UI as the agent executes the prompt.

This way, the user can see what the agent is doing on the backend and verify if it is taking the right steps rather than just staring at a loading spinner.

For instance, I asked the search engine this: “Winner of the FIFA World Cup from 1980 to 2024” and look what it outputs:

As shown above, with the help of CoAgents, we can stream the intermediate states of the AI Agent to the user to verify if it is taking the right steps.

Compare this to using, say, ChatGPT (not an agent, but useful for the sake of comparison), where you have no idea how it arrived at a particular response.
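To make the streaming idea concrete, here is a minimal sketch using a plain Python generator. This is not the CoAgents or LangGraph API; `run_search_agent`, the step names, and the placeholder sources are all hypothetical. The agent yields a snapshot of its state after every step, and the UI renders each snapshot as it arrives.

```python
import copy
from typing import Iterator


def run_search_agent(question: str) -> Iterator[dict]:
    """Hypothetical agent that yields a snapshot of its state after each step."""
    state = {"question": question, "step": "planning", "sources": [], "answer": None}
    yield copy.deepcopy(state)

    state["step"] = "searching"
    state["sources"] = ["source-a", "source-b"]  # placeholder search results
    yield copy.deepcopy(state)

    state["step"] = "summarizing"
    yield copy.deepcopy(state)

    state["step"] = "done"
    state["answer"] = f"Summary built from {len(state['sources'])} sources"
    yield copy.deepcopy(state)


def render(snapshot: dict) -> str:
    """Stand-in for the UI: turn a snapshot into a status line."""
    return f"[{snapshot['step']}] sources so far: {len(snapshot['sources'])}"


# The UI consumes snapshots one by one instead of waiting for the final answer.
status_lines = [render(s) for s in run_search_agent("FIFA World Cup winners")]
```

Each snapshot is a deep copy, so whatever the UI does with it cannot accidentally change the agent's working state.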

2) Shared state between the agent & the application

Consider the above feature and think about it for a second.

Streaming the AI Agent’s intermediate states isn’t entirely helpful, is it? I mean, of course, it is beneficial to communicate what’s going on, but humans should be allowed to engage with those states if needed.

Thus, the states must be bi-directionally synced between the application state (visible to the human) and the agent state (the internal state of the agent) to allow an agent and a human to collaborate on a task.

This feature of CoAgents allows us to do that.
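Here is one way to picture bi-directional sync, as a minimal sketch rather than how CoAgents implements it. The `SharedState` class and its method names are hypothetical: both the agent and the human write to the same store, and every write notifies whoever is listening.

```python
from typing import Any, Callable

Listener = Callable[[str, str, Any], None]  # (who, key, value)


class SharedState:
    """Hypothetical single source of truth shared by the agent and the app UI."""

    def __init__(self, **initial: Any) -> None:
        self._data = dict(initial)
        self._listeners: list[Listener] = []

    def subscribe(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def update(self, who: str, key: str, value: Any) -> None:
        # Either side may write; every subscriber hears about every change.
        self._data[key] = value
        for listener in self._listeners:
            listener(who, key, value)

    def get(self, key: str) -> Any:
        return self._data.get(key)


changes = []
state = SharedState(search_queries=[])
state.subscribe(lambda who, key, value: changes.append((who, key)))

# The agent proposes a query; the human then edits it before the search runs.
state.update("agent", "search_queries", ["fifa world cup winners"])
state.update("human", "search_queries", ["fifa world cup winners 1982-2022"])
```

After the human's edit, the agent reads the corrected query from the same store, which is what lets the two collaborate on one task.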

3) Agent Q&A

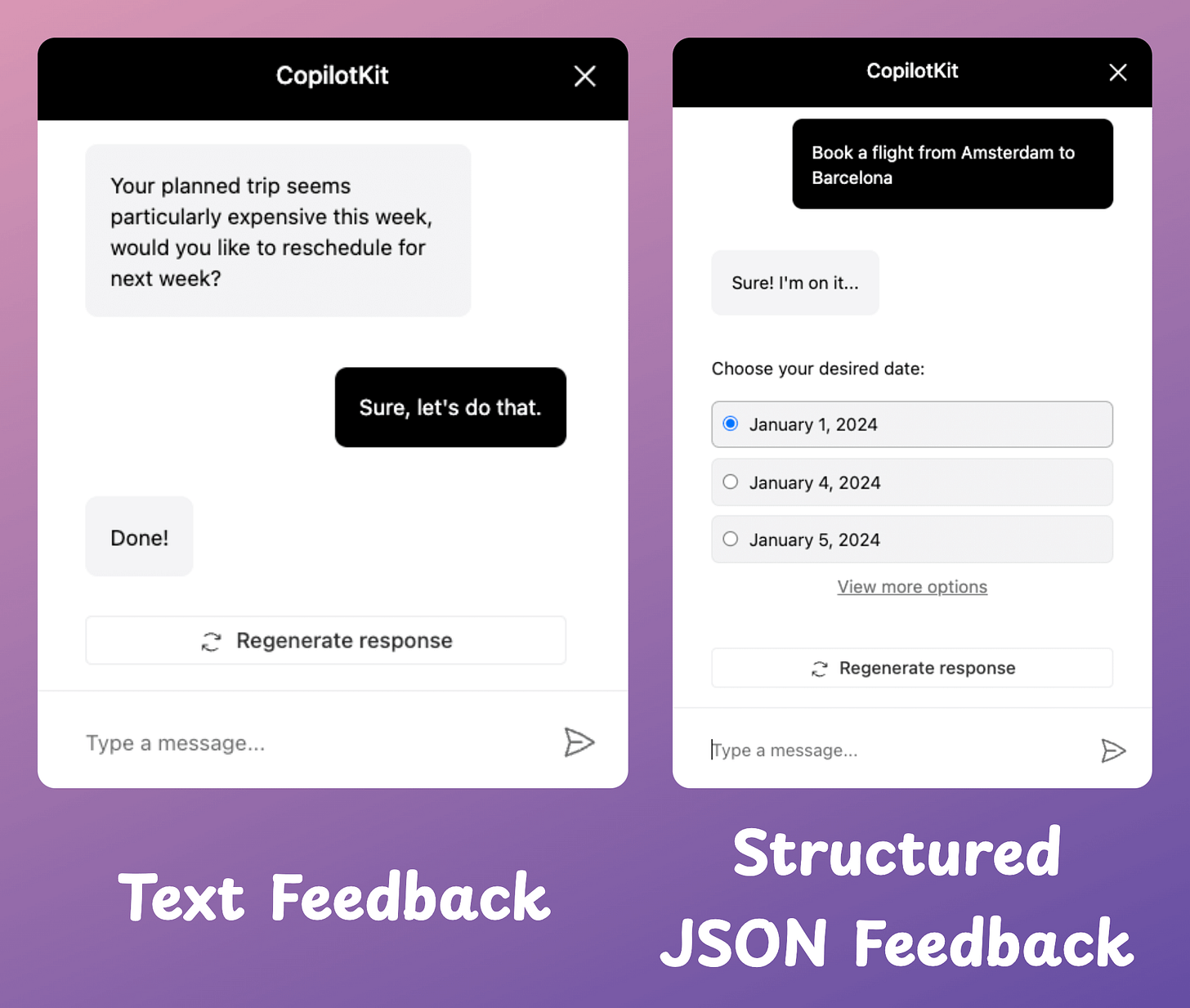

One obvious expectation from any human-in-the-loop-driven agent is that if it is unsure about something or needs some additional details from the user to reach the final state, it should be able to pose questions to the user.

Using this feature, any AI agent can easily ask such questions in two ways, as depicted below:

With text feedback.

With structured (JSON) feedback.
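The two feedback styles can be sketched as follows. This is an illustration under my own assumptions, not the CoAgents API: `Ask` is a hypothetical callback that shows the agent's question to the human and returns the reply as a string.

```python
import json
from typing import Callable

Ask = Callable[[str], str]  # takes the agent's question, returns the human's reply


def ask_text(ask: Ask, question: str) -> str:
    """Free-form feedback: the reply is used as plain text."""
    return ask(question).strip()


def ask_structured(ask: Ask, question: str, required: set[str]) -> dict:
    """Structured feedback: the reply must be a JSON object with the required keys."""
    reply = json.loads(ask(question))
    if not isinstance(reply, dict):
        raise ValueError("expected a JSON object")
    missing = required - reply.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return reply


# Simulated human replies, standing in for a real UI prompt.
scope = ask_text(lambda q: "  men's tournament only ", "Which tournament?")
years = ask_structured(
    lambda q: '{"start": 1982, "end": 2022}',
    "Which year range?",
    required={"start", "end"},
)
```

Text feedback is easier for the human, while structured feedback is easier for the agent to act on because it can validate the reply before continuing.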

4) Agent steering (Coming soon)

Once the agent has produced its final state, we may want to go back to an intermediate state, correct some things, and rerun from that particular checkpoint.

This feature, which will be released soon (join early access to stay updated), will let us do that:
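Since the feature isn't released yet, the following is only a sketch of the general checkpoint-and-rerun idea, not CoAgents code. The three step functions and the `run` helper are hypothetical: the runner saves a checkpoint after every step, so a human can edit an earlier state and rerun just the remaining steps.

```python
import copy


def plan(state: dict) -> dict:
    state["queries"] = [state["question"]]
    return state


def search(state: dict) -> dict:
    state["results"] = [f"result for {q}" for q in state["queries"]]
    return state


def summarize(state: dict) -> dict:
    state["answer"] = f"{len(state['results'])} result(s)"
    return state


STEPS = [plan, search, summarize]


def run(state: dict, start: int = 0) -> tuple[dict, dict]:
    """Run STEPS from index `start`, saving a checkpoint after each step."""
    checkpoints = {}
    for i in range(start, len(STEPS)):
        state = STEPS[i](copy.deepcopy(state))
        checkpoints[i] = copy.deepcopy(state)
    return state, checkpoints


final, checkpoints = run({"question": "world cup winners"})

# The human goes back to the state saved after planning (step 0), corrects it,
# and reruns only the steps that come after it.
edited = copy.deepcopy(checkpoints[0])
edited["queries"].append("world cup winners 1982-2022")
steered, _ = run(edited, start=1)
```

The first run searches with one query, while the steered run picks up the human's extra query without redoing the planning step.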

These are some pretty cool features, aren’t they?

This is a pretty cool demo released by the CopilotKit team if you want to learn more about CoAgents:

A departing note

With today’s technology and techniques, any of those existing GPTs or LLaMAs or Mistrals can be (almost) reliably:

Fine-tuned on additional information using LoRA/QLoRA, etc.

Augmented with additional information using RAG-based techniques.

Moreover, with techniques like Tree-of-thoughts, Reflexion, etc., we have been able to introduce “planning/reasoning hacks” into the model to elicit more agentic behavior.

Then why are we still training larger and larger models? Why not make the existing ones more powerful? Why not work more on such techniques? What is the motivation here?

People typically do not think about this often.

But clearly, the key motivation here isn’t to create or build models that know everything.

Instead, it’s expected (or believed) that larger models may unlock deeper reasoning abilities, making these “standalone generative models” or “next-token predictors” more useful in the complex, compound systems we intend to build around them.

But until we get there, AI Agents driven by human-in-the-loop workflows will continue to lead this space.

Learning these skills will put you on the path to an exceptional career in AI if you intend to build one.

I love CopilotKit’s mission of supporting software engineers, data scientists, and machine learning engineers in building AI copilots and agents easily, while CopilotKit takes care of all core functionalities and challenges.

They are solving a big problem with existing AI agents, and I’m happy to see how they have progressed over the last 6-7 months.


This Thursday (12th September), the CopilotKit team is also organizing a webinar about building human-in-the-loop CoAgents. Register below:

Do star the CopilotKit repo to support their work: CopilotKit GitHub.

🙌 Also, a big thanks to the CopilotKit team, who very kindly partnered with me on today’s newsletter and let me share my thoughts openly.

👉 Over to you: What problems will you use CopilotKit for?

Thanks for reading!