[Demo] Build Production-grade Agentic Workflows in Plain English

...in under a minute.

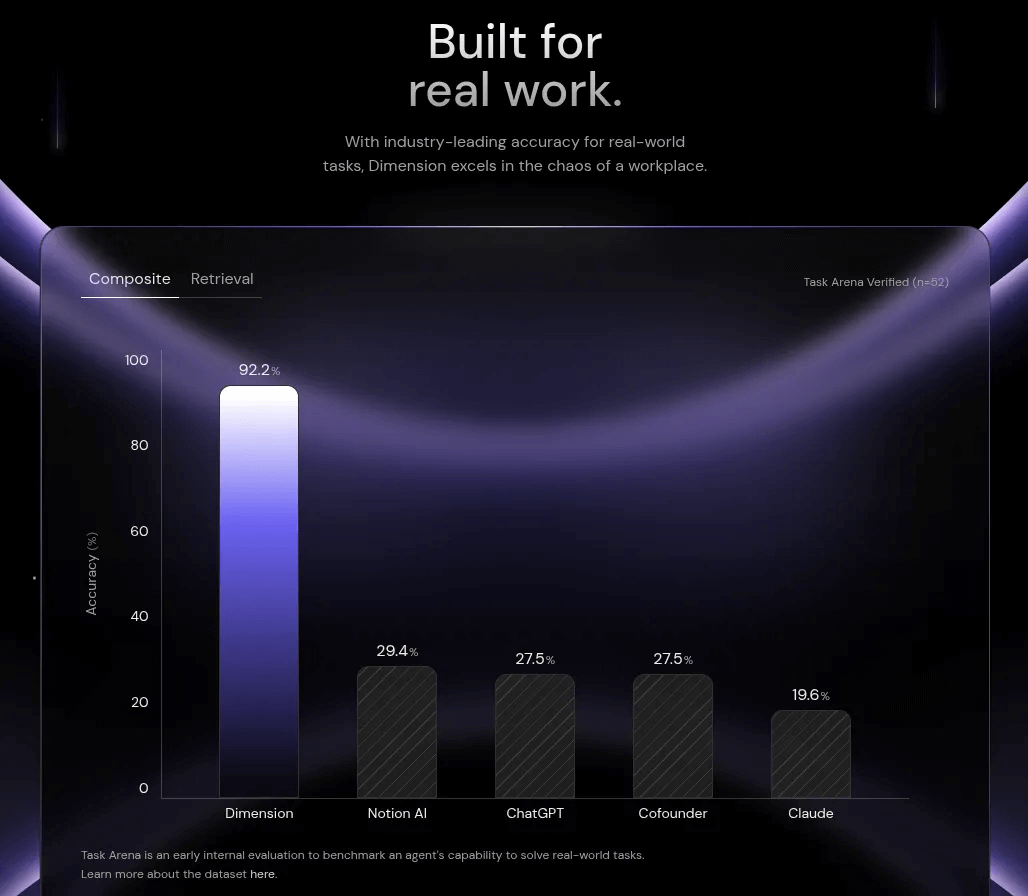

Dimension: The first AI coworker that actually gets things done

Most AI tools still force you to jump between chats, inboxes, docs, and dashboards.

Dimension removes that constant context switching between chat windows and office tools.

It plugs into Gmail, Notion, calendars, Linear, and Vercel, and quietly takes over the repetitive parts of your day: planning, emails, docs, meeting follow-ups, and engineering chores, all handled through dependable multi-step workflows.

It reads inboxes, sorts urgency, drafts replies, creates tickets, updates docs, and produces finished outputs rather than loose suggestions. Any action can be triggered in simple language, and the agent follows through across accounts with clear confirmations.

Its orchestration layer keeps context about teams, tasks, and past work, making it smart enough to route items, pick owners, and fill templates with the right details.

If you want to see it in action, their launch demo is worth a look.

You also get 50k free credits when you sign up to test this out.

Start using your AI coworker here →

Thanks to Dimension for partnering today!

[Demo] Build Agentic workflows in plain English

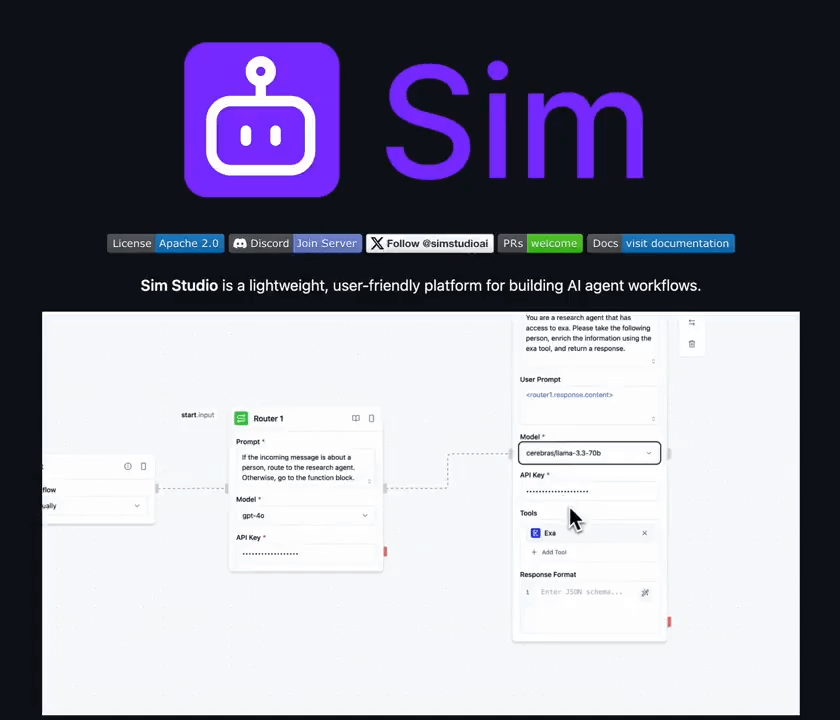

Sim’s Copilot is like Cursor for agentic workflows!

Here’s how it works:

Describe what you want

Watch everything build in real-time

Easy to debug and refine

It requires no complex frameworks, just plain English prompts.

We recorded a live demo to show you how this works:

You can try it for free here →

You should also check Sim’s open-source GitHub repo.

It offers a drag-and-drop, user-friendly platform that makes creating AI agent workflows accessible to everyone.

A powerful, 100% open-source alternative to n8n.

Function calling & MCP for LLMs

Before MCP became as popular as it is right now, most AI workflows relied on traditional Function Calling.

Now, MCP (Model Context Protocol) is introducing a shift in how developers structure tool access and orchestration for Agents.

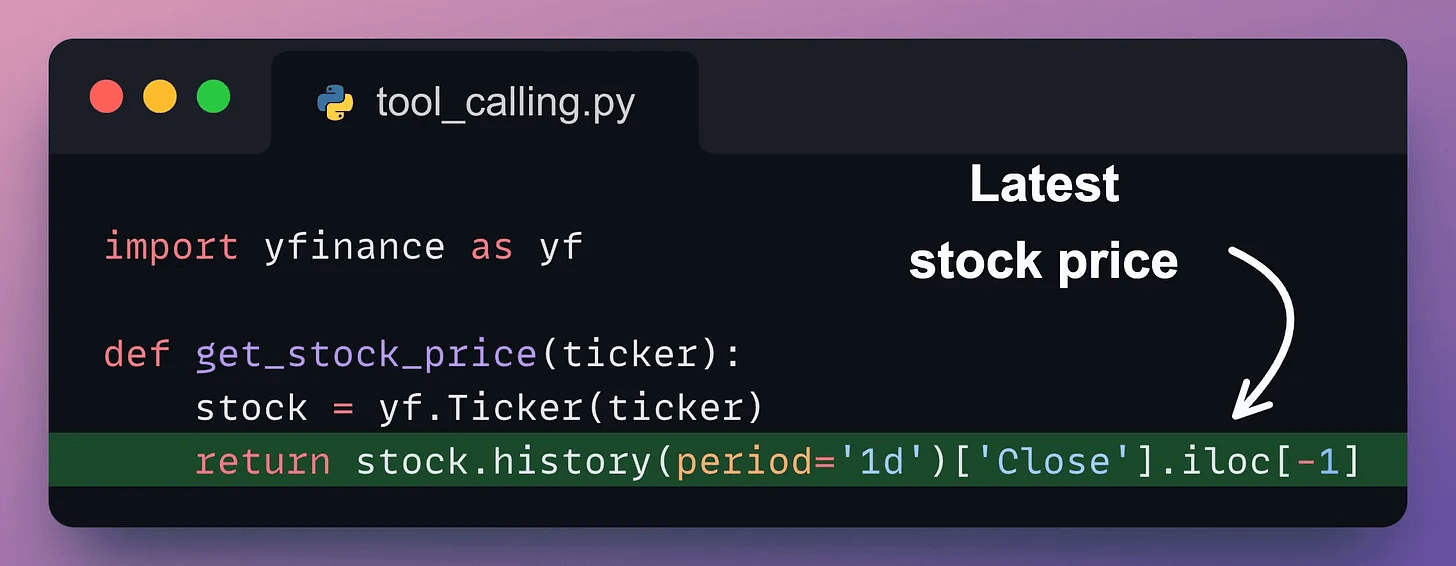

Here’s a visual that explains Function calling & MCP:

Let’s dive in to learn more!

What is Function Calling?

Function Calling is a mechanism that allows an LLM to recognize which tool it needs, and when to invoke it, based on the user’s input.

Here’s how it typically works:

The LLM receives a prompt from the user.

The LLM decides the tool it needs.

The application code (written by the programmer) accepts the tool call request from the LLM and prepares a function call.

The function call (with parameters) is passed to a backend service that would handle the actual execution.

Let’s understand this in action real quick!

First, let’s define a tool function, get_stock_price. It uses the yfinance library to fetch the latest closing price for a specified stock ticker:

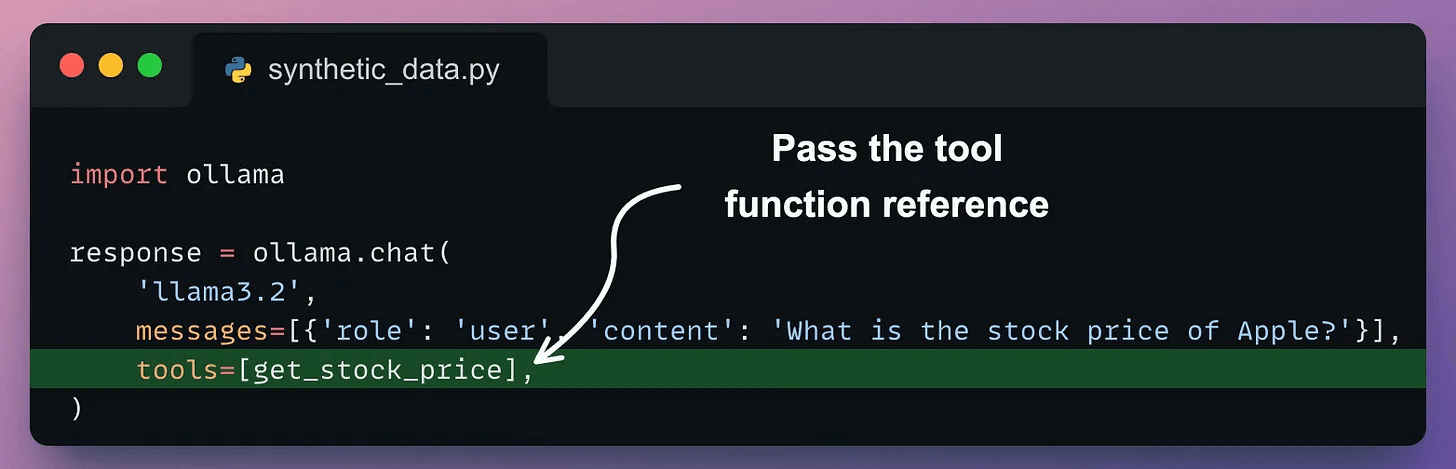
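In text form, a function like the one pictured might look roughly like this. It is a sketch rather than the exact code from the image: the `period="1d"` window and the empty-result check are assumptions, and `yfinance` is imported inside the function only so the snippet loads on machines where the library isn't installed.

```python
def get_stock_price(ticker: str) -> float:
    """Return the latest closing price for a stock ticker (e.g. "AAPL")."""
    # Third-party dependency; calling this function needs network access.
    import yfinance as yf

    # Assumption: a one-day window is enough to get the most recent close.
    history = yf.Ticker(ticker).history(period="1d")
    if history.empty:
        raise ValueError(f"No price data returned for ticker {ticker!r}")

    # The last row of the "Close" column is the latest closing price.
    return float(history["Close"].iloc[-1])
```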

Next, we prompt an LLM (served with Ollama) and pass the tools the model can access for external information (if needed):

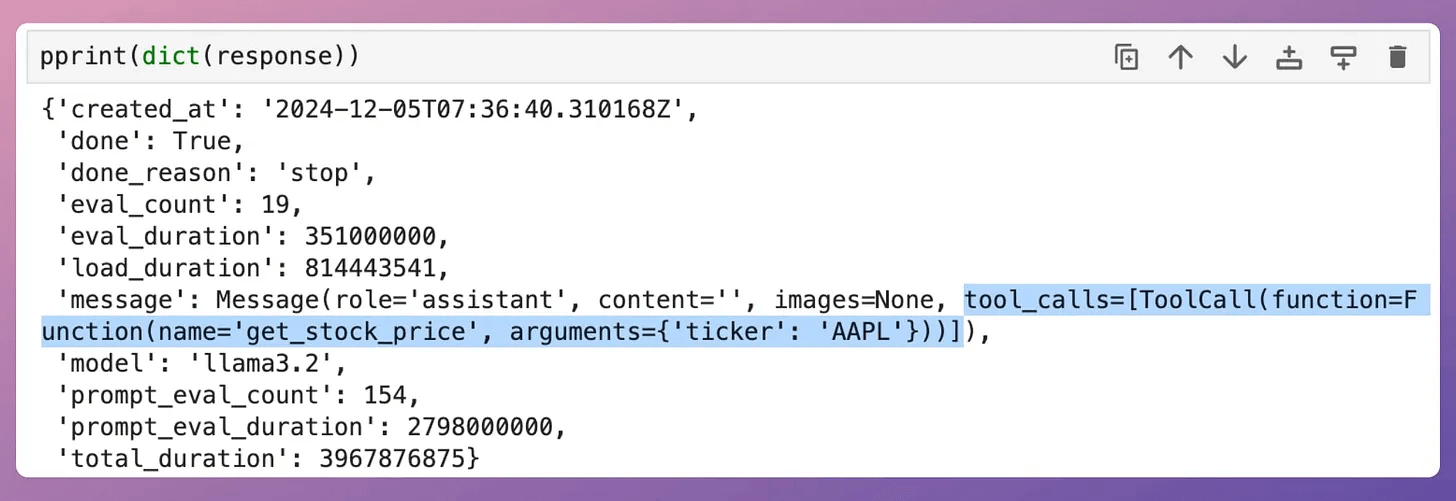
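For readers without the image, here is one hedged way to describe the tool to the model. The schema below follows the JSON-schema style that many chat APIs accept for a `tools` argument; the description strings, the user prompt, and the model name in the comment are illustrative assumptions, not taken from the original code.

```python
# Hypothetical description of the get_stock_price tool. The model never sees
# the Python function itself, only this name, description, and parameter schema.
get_stock_price_tool = {
    "type": "function",
    "function": {
        "name": "get_stock_price",
        "description": "Get the latest closing price for a stock ticker.",
        "parameters": {
            "type": "object",
            "properties": {
                "ticker": {
                    "type": "string",
                    "description": "Stock ticker symbol, e.g. AAPL",
                },
            },
            "required": ["ticker"],
        },
    },
}

# Example conversation to send along with the tool description.
messages = [{"role": "user", "content": "What is the stock price of Apple?"}]

# With a local Ollama server running, the request would look roughly like this
# (the model name is an assumption; use any tool-capable model you have pulled):
#   import ollama
#   response = ollama.chat(model="llama3.2", messages=messages,
#                          tools=[get_stock_price_tool])
```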

Printing the response, we get:

Notice that the message key in the above response object has tool_calls, which includes relevant details, such as:

tool.function.name: The name of the tool to be called.

tool.function.arguments: The arguments required by the tool.

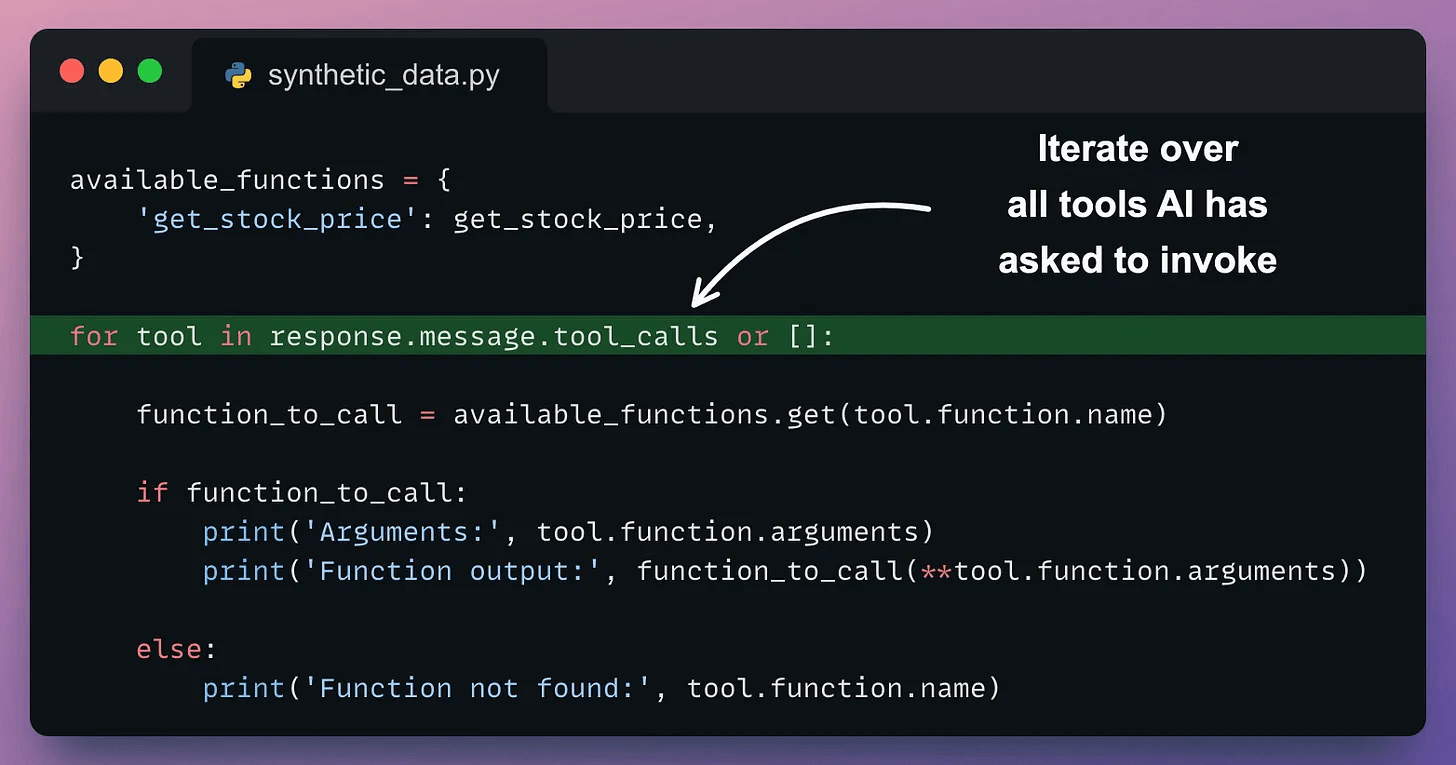

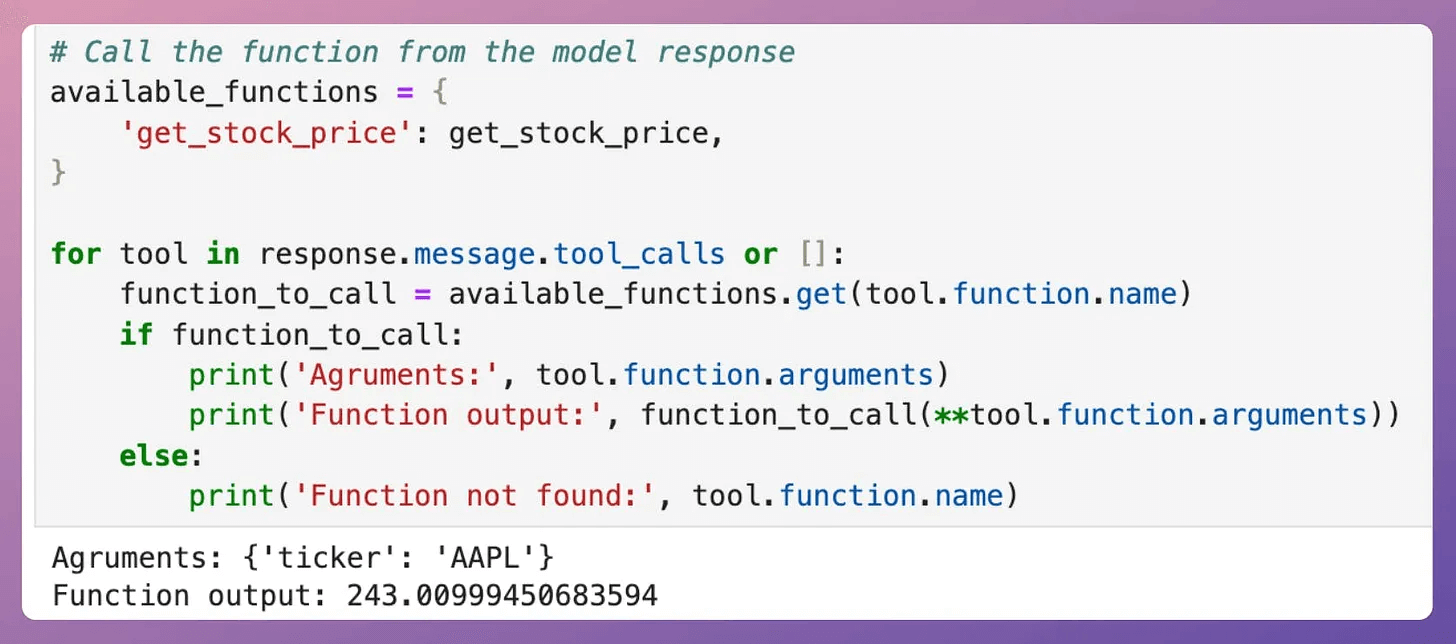

Thus, we can utilize this info to produce a response as follows:
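A minimal, self-contained sketch of that dispatch step is shown here. To keep it runnable without a model or network access, the response is a hand-written dict that mirrors the `tool_calls` shape described above, and `get_stock_price` is a stub that returns a made-up number; `AVAILABLE_TOOLS` and `run_tool_calls` are hypothetical names introduced for illustration.

```python
def get_stock_price(ticker: str) -> float:
    """Stub standing in for the real tool; returns a fixed, made-up price."""
    return 123.45


# Registry mapping tool names (as the model reports them) to Python callables.
AVAILABLE_TOOLS = {"get_stock_price": get_stock_price}

# Hand-written stand-in for the model's response.
response = {
    "message": {
        "role": "assistant",
        "content": "",
        "tool_calls": [
            {"function": {"name": "get_stock_price", "arguments": {"ticker": "AAPL"}}}
        ],
    }
}


def run_tool_calls(response: dict) -> list[str]:
    """Execute every tool call in the response and describe each result."""
    results = []
    for tool in response["message"].get("tool_calls") or []:
        name = tool["function"]["name"]
        arguments = tool["function"]["arguments"]
        function = AVAILABLE_TOOLS.get(name)
        if function is None:
            # The model asked for a tool we never registered.
            results.append(f"Unknown tool: {name}")
            continue
        results.append(f"{name}({arguments}) -> {function(**arguments)}")
    return results


for line in run_tool_calls(response):
    print(line)
```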

This produces the following output:

Notice that the entire process happened within our application context. We were responsible for:

Hosting and maintaining the tools/APIs.

Implementing the logic to determine which tool should be invoked and what its parameters are.

Handling the tool execution and scaling it if needed.

Managing authentication and error handling.

In short, Function Calling is about enabling dynamic tool use within your own stack, but it still requires you to wire everything manually.

What is MCP?

MCP, or Model Context Protocol, attempts to standardize this process.

While Function Calling focuses on what the model wants to do, MCP focuses on how tools are made discoverable and consumable, especially across multiple agents, models, or platforms.

Instead of hard-wiring tools inside every app or agent, MCP:

Standardizes how tools are defined, hosted, and exposed to LLMs.

Makes it easy for an LLM to discover available tools, understand their schemas, and use them.

Provides approval and audit workflows before tools are invoked.

Separates the concern of tool implementation from consumption.
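To make the discovery and consumption points concrete, here is a toy, in-process sketch of the kind of JSON-RPC exchange MCP is built on. It is not a real MCP server: there is no transport, initialization handshake, or approval step, and the `scrape` tool and its handler are stubs invented for illustration. Only the `tools/list` and `tools/call` method names and the general message shapes follow the protocol.

```python
import json

# Tool catalog: a name, a description, and a JSON schema for the input,
# loosely following the fields MCP uses when listing tools.
TOOLS = {
    "scrape": {
        "description": "Fetch a page and return its text (stubbed here).",
        "inputSchema": {
            "type": "object",
            "properties": {"url": {"type": "string"}},
            "required": ["url"],
        },
        "handler": lambda url: f"<contents of {url}>",
    }
}


def handle_request(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request string and return the response string."""
    request = json.loads(raw)
    method, params = request["method"], request.get("params", {})

    if method == "tools/list":
        # Discovery: tell the client which tools exist and what inputs they take.
        result = {"tools": [
            {"name": name, "description": tool["description"],
             "inputSchema": tool["inputSchema"]}
            for name, tool in TOOLS.items()
        ]}
    elif method == "tools/call":
        # Consumption: run the named tool with the supplied arguments.
        tool = TOOLS[params["name"]]
        text = tool["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), "error": error})

    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


# A client first discovers the tools, then calls one by name.
print(handle_request(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
print(handle_request(json.dumps({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "scrape", "arguments": {"url": "https://example.com"}},
})))
```

The tool's implementation lives behind `handle_request`, while the client only needs the catalog and the two method names, which is the separation of implementation from consumption described above.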

Let’s understand this in action real quick by integrating Firecrawl’s MCP server to utilize scraping tools within Cursor IDE.

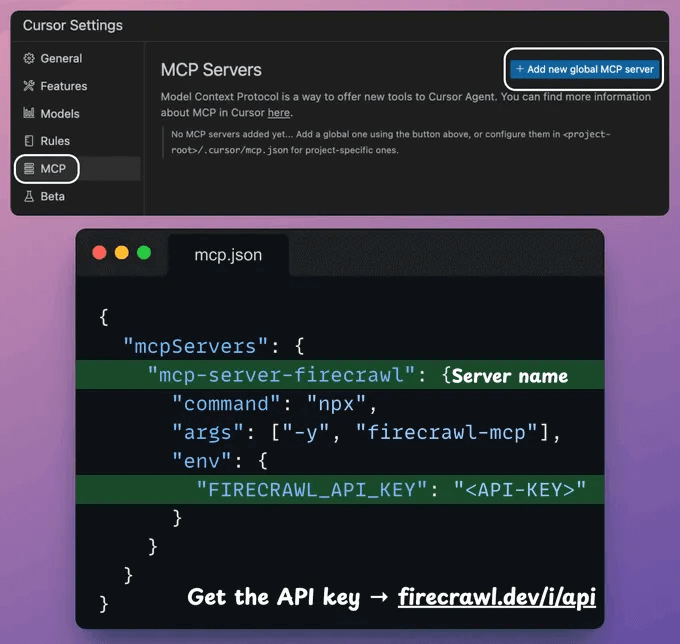

To do this, go to Settings → MCP → Add new global MCP server.

In the JSON file, add what’s shown below👇

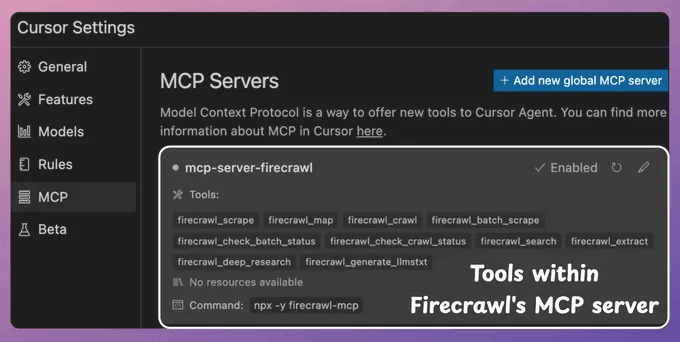

Once done, you will find all the tools exposed by Firecrawl’s MCP server that your Agents can use!

Notice that we didn’t write a line of Python code to integrate Firecrawl’s tools. Instead, we just integrated the MCP server.

Next, let’s interact with this MCP server.

As shown in the video, when asked to list the imports of CrewAI tools listed in my blog:

It identified the MCP tool (scraper).

Prepared the input argument.

Invoked the scraping tool.

Used the tool’s output to generate a response.

Put another way, think of MCP as infrastructure.

It creates a shared ecosystem where tools are treated like standardized services, similar to how REST APIs or gRPC endpoints work in traditional software engineering.

Here’s the key point: MCP and Function Calling are not in conflict. They’re two sides of the same workflow.

Function Calling helps an LLM decide what it wants to do.

MCP ensures that tools are reliably available, discoverable, and executable, without you needing to custom-integrate everything.

For example, an agent might say, “I need to search the web,” using function calling.

That request can be routed through MCP to select from available web search tools, invoke the correct one, and return the result in a standard format.
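As a rough sketch of that hand-off, the snippet below takes a tool call in the function-calling shape and turns it into a `tools/call` request addressed to whichever server advertises the tool. The server names, the `web_search` tool, and the `route_tool_call` helper are all hypothetical; a real client would build the catalogs from each server's tool listing instead of hard-coding them.

```python
import json
from itertools import count

# Hypothetical catalogs, as two MCP servers might report them during discovery.
SERVER_CATALOGS = {
    "search-server": ["web_search"],
    "scraper-server": ["scrape"],
}

# JSON-RPC requests need an id so responses can be matched to them.
_request_ids = count(1)


def route_tool_call(tool_call: dict) -> tuple[str, str]:
    """Pick the server exposing the requested tool and build its request."""
    name = tool_call["function"]["name"]
    for server, tools in SERVER_CATALOGS.items():
        if name in tools:
            request = {
                "jsonrpc": "2.0",
                "id": next(_request_ids),
                "method": "tools/call",
                "params": {
                    "name": name,
                    "arguments": tool_call["function"]["arguments"],
                },
            }
            return server, json.dumps(request)
    raise LookupError(f"No server exposes a tool named {name!r}")


# The model decided it needs a web search (function calling) ...
model_tool_call = {"function": {"name": "web_search", "arguments": {"query": "MCP"}}}

# ... and the client routes that decision to a server (MCP).
server, payload = route_tool_call(model_tool_call)
print(server, payload)
```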

If you don’t know about MCPs, we covered all these details (with implementations) in the MCP crash course.

Part 1 covered MCP fundamentals, the architecture, context management, etc. →

Part 2 covered core capabilities, JSON-RPC communication, etc. →

Part 4 built a full-fledged MCP workflow using tools, resources, and prompts →

Part 5 taught how to integrate Sampling into MCP workflows →

Part 6 covered testing, security, and sandboxing in MCP Workflows →

Part 7 covered testing, security, and sandboxing in MCP Workflows →

Part 8 integrated MCPs with the most widely used agentic frameworks: LangGraph, LlamaIndex, CrewAI, and PydanticAI →

Part 9 covered using LangGraph MCP workflows to build a comprehensive real-world use case →

👉 Over to you: What is your perspective on Function Calling and MCPs?

Thanks for reading!