Deploy ML Models from Your Jupyter Notebook

Deployment has possibly never been so simple.

The core objective of model deployment is to obtain an API endpoint that can be used for inference.

While this sounds simple, deployment is typically quite a tedious and time-consuming process.

One must maintain environment files, configure various settings, ensure all dependencies are correctly installed, and much more.

So, today, I want to help you simplify this process.

More specifically, we shall learn how to deploy any ML model right from a Jupyter Notebook in just three simple steps using the Modelbit API.

Note: This is NOT a sponsored post. I have genuinely found their service to be pretty elegant and simple to use.

Modelbit lets us seamlessly deploy ML models directly from our Python notebooks (or Git) to Snowflake, Redshift, and REST endpoints.

Today’s newsletter is part of the complete deep dive I wrote on Modelbit: Deploy, Version Control, and Manage ML Models Right From Your Jupyter Notebook with Modelbit.

Deployment with Modelbit

Assume we have already trained our model.

For simplicity, let’s assume it is a linear regression model trained with sklearn, though it can be any other model as well.
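The training code itself is not reproduced here. As a minimal sketch, assuming a single-feature regression problem, the model could be trained like this. The synthetic data (y ≈ 3x + 2 plus noise) is hypothetical and only stands in for your own dataset:

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical toy data: one feature, target roughly 3x + 2 plus small noise.
# Replace this with your own training data.
rng = np.random.default_rng(seed=0)
X = rng.uniform(0, 10, size=(100, 1))
y = 3 * X[:, 0] + 2 + rng.normal(0, 0.5, size=100)

# Fit an ordinary least-squares linear regression model.
model = LinearRegression()
model.fit(X, y)

print(model.coef_, model.intercept_)
```

The object named `model` is what the inference function will reference later.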

Let’s see how we can deploy this model with Modelbit!

First, we install the Modelbit package via pip:

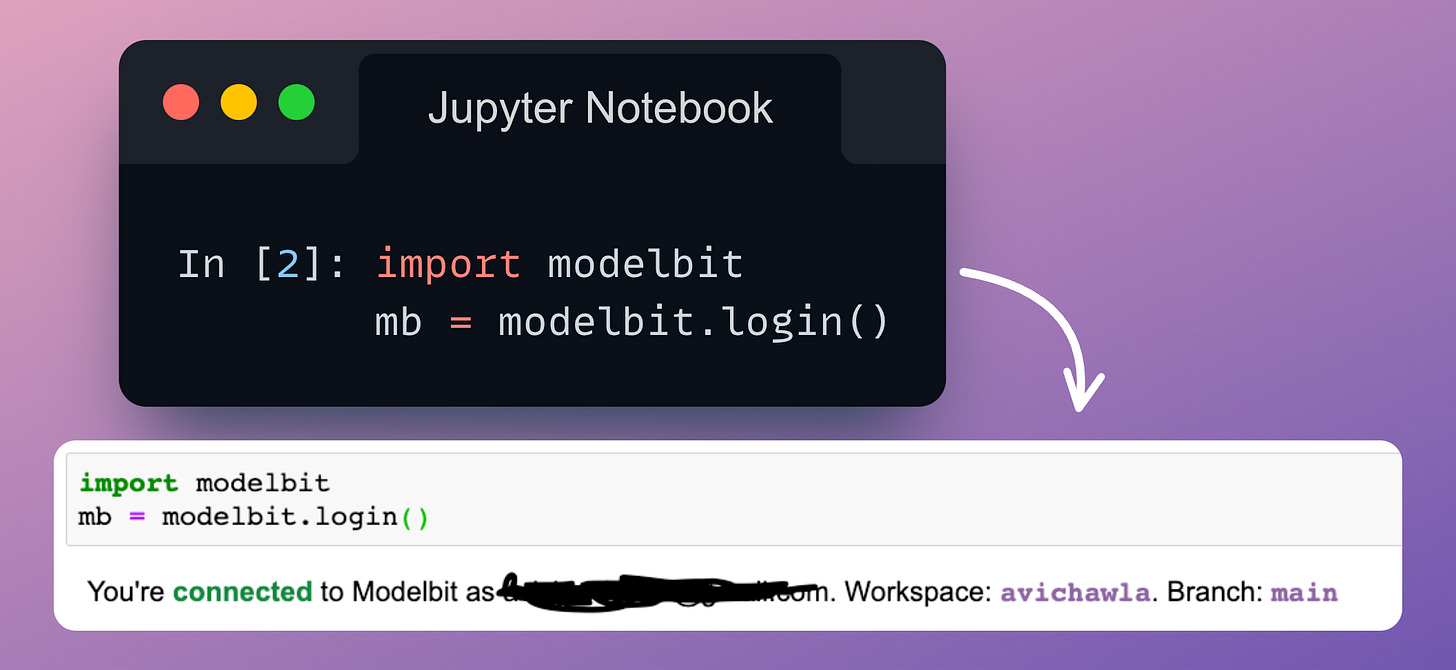

Next, we log in to Modelbit from our Jupyter Notebook (make sure you have created a Modelbit account first).

Finally, we deploy it, but here’s an important point to note:

To deploy a model using Modelbit, we must define an inference function.

Simply put, this function contains the code that is executed at inference time, so it is responsible for returning the prediction.

The inputs required by the model must be declared as the function’s parameters. The function itself can have any name.

For our linear regression case, the inference function can be written as follows:

We define a function, my_lr_deployment().

Next, we specify the model’s input as a parameter of this function.

We check that the input has the expected data type.

Finally, we return the prediction.
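Putting these steps together, the inference function might look like the sketch below. It assumes a single numeric input and returns None for anything else (an assumption about how invalid input is handled). A tiny model trained on hypothetical toy data (y = 3x + 2) stands in for the one trained earlier so the snippet runs on its own:

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Stand-in for the model trained earlier (hypothetical data: y = 3x + 2).
X = np.arange(10, dtype=float).reshape(-1, 1)
y = 3 * X[:, 0] + 2
model = LinearRegression().fit(X, y)


def my_lr_deployment(input_x):
    # Validate the input's data type. bool is a subclass of int in Python,
    # so it is rejected explicitly. Returning None is an assumed convention.
    if isinstance(input_x, bool) or not isinstance(input_x, (int, float)):
        return None

    # sklearn expects a 2-D input: one row with one feature.
    return float(model.predict([[input_x]])[0])


print(my_lr_deployment(4))    # close to 14.0
print(my_lr_deployment("4"))  # None
```

The function refers to the global `model` rather than receiving it as an argument, which is what lets Modelbit capture it as a dependency.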

One good thing about Modelbit is that every dependency of the function (the model object in this case) is pickled and sent to production automatically along with the function. Thus, we can reference any object from our notebook inside this function.

Once we have defined the function, we can deploy it directly from the notebook.
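Here is a minimal sketch of the login and deployment steps, using `modelbit.login()` and `mb.deploy()` from the Modelbit Python package. The wrapper name `deploy_to_modelbit` is hypothetical. It only defers the import so the cell can be defined before the package is installed. In a notebook, you can just as well run the same three lines at the top level:

```python
def deploy_to_modelbit(inference_fn):
    # Imported inside the function so this cell can be defined even before
    # `pip install modelbit` has run. Calling it requires the package.
    import modelbit

    # Authenticate this notebook session with your Modelbit workspace.
    # This may prompt you to approve the login via a link.
    mb = modelbit.login()

    # Deploy the inference function. Modelbit pickles it together with the
    # objects it references (e.g. `model`) and exposes it as an endpoint.
    mb.deploy(inference_fn)
    return mb
```

Usage, in the notebook where `my_lr_deployment` and `model` are defined: `mb = deploy_to_modelbit(my_lr_deployment)`.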

Done!

We have deployed the model in three simple steps, right from the Jupyter Notebook!

Once our model has been successfully deployed, it will appear in our Modelbit dashboard.

Modelbit provides an API endpoint for the deployment, which we can use for inference as follows:

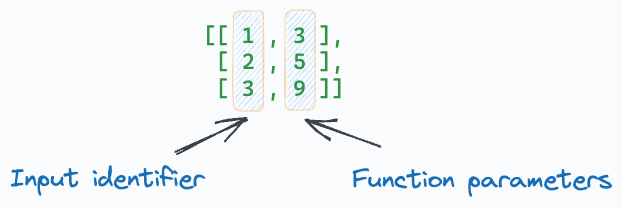

In the request above, the data passed to the endpoint is a list of lists.

In each inner list, the first number is the input ID, and all entries following the ID are the function’s arguments.
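For calling the endpoint from Python, here is a sketch that uses only the standard library to build such a request. The endpoint URL is a hypothetical placeholder (copy the real one from your dashboard), and wrapping the rows under a `"data"` key is an assumption to verify against the request example your dashboard shows:

```python
import json
import urllib.request

# Hypothetical placeholder: replace with the URL from your Modelbit dashboard.
ENDPOINT = "https://<your-workspace>.app.modelbit.com/v1/my_lr_deployment/latest"


def build_request(inputs):
    # Each inner list is [input_id, *function_arguments]; IDs start at 1 here.
    rows = [[i, x] for i, x in enumerate(inputs, start=1)]
    # Assumed envelope: the list of lists sits under a "data" key.
    body = json.dumps({"data": rows}).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_request([3, 5])
print(req.data.decode())  # {"data": [[1, 3], [2, 5]]}

# To actually call the deployment (needs network access and a real URL):
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```

Here the inputs 3 and 5 get the IDs 1 and 2, matching the list-of-lists layout described above.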

Lastly, we can also pin the versions of Python and of the libraries used when deploying our model.
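One way to keep the deployed environment consistent with the notebook is to read the local versions and pass them along at deploy time. The sketch below collects them with the standard library. `pinned_environment` is a hypothetical helper, and the `python_version` and `python_packages` keyword arguments in the commented `mb.deploy` call are assumptions to confirm against the Modelbit documentation:

```python
import sys
from importlib.metadata import version


def pinned_environment(packages):
    # Mirror the notebook's interpreter version, e.g. "3.10".
    python_version = f"{sys.version_info.major}.{sys.version_info.minor}"
    # Pin each package to the version installed in this notebook.
    python_packages = [f"{name}=={version(name)}" for name in packages]
    return python_version, python_packages


py_ver, pkgs = pinned_environment(["scikit-learn"])
print(py_ver, pkgs)

# Assumed Modelbit keyword arguments; confirm them in the Modelbit docs:
# mb.deploy(my_lr_deployment, python_version=py_ver, python_packages=pkgs)
```

Pinning to the notebook's own versions means the pickled model is unpickled by the same sklearn release that created it.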

Isn’t that simpler and more elegant than traditional deployment approaches?

To learn more, read the complete beginner-friendly article here: Deploy, Version Control, and Manage ML Models Right From Your Jupyter Notebook with Modelbit. Some of the topics it covers are:

Challenges during deployment.

The post-deployment considerations and how to address them.

Model logging to identify challenges like:

Performance drift

Concept drift

Covariate shift

Non-stationarity, etc.

Version controlling ML deployments.

What a model registry is, its purpose and advantages, and how to maintain one.

Full implementations using Modelbit even if you have never used it before.

After that, read the article on reliably testing ML models in production here: 5 Must-Know Ways to Test ML Models in Production (Implementation Included). That article also uses Modelbit for its implementations.

👉 Over to you: What are some other ways to simplify deployment?

Thanks for reading!

