Feature Discretization

A linear model can learn non-linear patterns.

During model development, one of the techniques that many don’t experiment with is feature discretization.

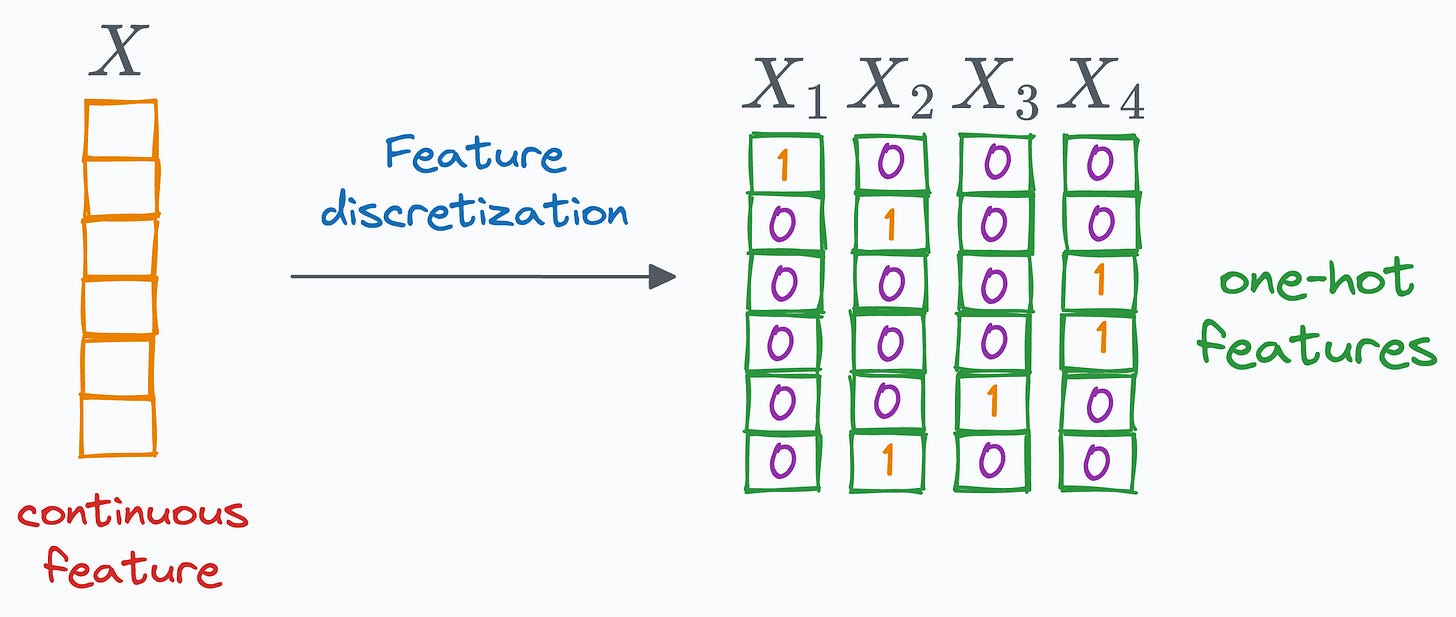

As the name suggests, the idea behind discretization is to transform a continuous feature into discrete features.

Why, when, and how would you do that?

Let’s understand this today.

Motivation for feature discretization

My rationale for using feature discretization has almost always been simple: “It just makes sense to discretize a feature.”

For instance, suppose your dataset has an age feature.

In many use cases, like understanding spending behavior based on transaction history, such continuous variables are better understood when they are discretized into meaningful groups → youngsters, adults, and seniors.
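Here is a minimal sketch of grouping ages into such buckets with NumPy's `np.digitize` and fixed, domain-chosen edges. The cut points (under 25, 25 to 59, 60 and over) and the helper name `age_group` are hypothetical choices for illustration. Pick edges that match your own use case.

```python
import numpy as np

# Hypothetical cut points: under 25 -> youngster, 25 to 59 -> adult, 60 and over -> senior
AGE_EDGES = np.array([25, 60])
AGE_LABELS = np.array(["youngster", "adult", "senior"])

def age_group(ages):
    """Map raw ages to named groups using fixed, domain-chosen edges."""
    # np.digitize returns 0 for age < 25, 1 for 25 <= age < 60, and 2 for age >= 60
    return AGE_LABELS[np.digitize(ages, AGE_EDGES)]

print(age_group(np.array([19, 33, 58, 71])))
```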

Now, say we model this transaction dataset without discretization.

This would give us one coefficient per feature, which tells us how strongly each feature influences the final prediction.

But if you think again, in our goal of understanding spending behavior, are we really interested in learning the correlation between exact age and spending behavior?

It makes very little sense to do that.

Instead, it makes more sense to learn the correlation between different age groups and spending behavior.

As a result, discretizing the age feature can potentially unveil much more valuable insights than using it as a raw feature.
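To make the coefficient argument concrete, here is a sketch on synthetic data. Every number in it is made up for illustration. Spending depends on the age group rather than the exact age. Fitting ordinary least squares on the one-hot encoded group, without an intercept, gives one coefficient per group, and that coefficient equals the group's average spend. A raw-age model can only offer a single slope.

```python
import numpy as np

rng = np.random.default_rng(7)

# Synthetic, made-up data: spend depends on the age group, not on the exact age
ages = rng.integers(18, 80, 300)
group = np.digitize(ages, [25, 60])  # 0 = youngster (<25), 1 = adult (25-59), 2 = senior (60+)
true_group_spend = np.array([200.0, 500.0, 300.0])  # hypothetical average spend per group
spend = true_group_spend[group] + rng.normal(0, 20, ages.size)

# Discretized model: one-hot group columns, no intercept -> one coefficient per group
X_groups = np.eye(3)[group]
coef, *_ = np.linalg.lstsq(X_groups, spend, rcond=None)

# Raw model: intercept plus a single slope for age
X_raw = np.column_stack([np.ones(ages.size), ages])
(intercept, slope), *_ = np.linalg.lstsq(X_raw, spend, rcond=None)

for name, c in zip(["youngster", "adult", "senior"], coef):
    print(f"{name:>9}: {c:.1f}")
print(f"raw-age slope: {slope:.2f} per year")
```

In this toy data, spend rises from youngsters to adults and then falls for seniors. A single age slope cannot express that pattern, but the three group coefficients show it directly.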

2 most common techniques for feature discretization

Now that we understand the rationale, there are 2 techniques that are widely preferred.

One way is to split a feature's range into equal-width bins, so every bin spans an interval of the same size.
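A minimal NumPy sketch of equal-width binning follows. The helper `equal_width_bins` is hypothetical. scikit-learn exposes the same idea through `KBinsDiscretizer(strategy="uniform")`.

```python
import numpy as np

def equal_width_bins(x, n_bins):
    """Assign each value a bin index in 0..n_bins-1 using bins of equal width."""
    x = np.asarray(x, dtype=float)
    # n_bins + 1 evenly spaced edges from the minimum to the maximum
    edges = np.linspace(x.min(), x.max(), n_bins + 1)
    # Digitizing against the interior edges only keeps indices in 0..n_bins-1
    # (the maximum lands in the last bin rather than in an extra one)
    return np.digitize(x, edges[1:-1]), edges

idx, edges = equal_width_bins([1.0, 2.0, 3.0, 4.0, 10.0], 3)
print(idx)  # [0 0 0 1 2]
```

The single large value (10) stretches the range, so three of the five points end up in the first bin. This shows that equal-width bins are sensitive to outliers.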

Another technique is to split a feature into equal-frequency bins, so every bin holds roughly the same number of samples.
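The equal-frequency variant places the edges at quantiles of the data instead. The helper `equal_frequency_bins` below is again hypothetical. In scikit-learn, the counterpart is `KBinsDiscretizer(strategy="quantile")`.

```python
import numpy as np

def equal_frequency_bins(x, n_bins):
    """Assign each value a bin index so that bins hold roughly equal numbers of samples."""
    x = np.asarray(x, dtype=float)
    # Edges at the 0, 1/n, ..., 1 quantiles of the data
    edges = np.quantile(x, np.linspace(0, 1, n_bins + 1))
    return np.digitize(x, edges[1:-1]), edges

values = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 100.0])
idx, edges = equal_frequency_bins(values, 2)
print(np.bincount(idx))  # [5 5]
```

Even with the outlier 100 in the data, each bin receives five samples. Equal-width edges on the same data would put the first nine values into one bin. With heavily repeated values, some quantile edges can coincide, so the bins are only approximately equal in size.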

After that, the discrete values are one-hot encoded.
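One-hot encoding the bin indices can be sketched as an identity-matrix lookup, assuming the indices are integers from 0 to `n_bins - 1`. The helper `one_hot` is hypothetical. scikit-learn's `KBinsDiscretizer` combines binning and this encoding step when `encode="onehot"` is set, and it returns a sparse matrix.

```python
import numpy as np

def one_hot(bin_idx, n_bins):
    """Turn integer bin indices (0..n_bins-1) into a 0/1 matrix with one column per bin."""
    # Row i of the identity matrix has a single 1 in column i, so indexing picks the right row
    return np.eye(n_bins, dtype=int)[np.asarray(bin_idx)]

print(one_hot([0, 2, 1, 2], 3))
```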

One advantage of feature discretization is that it enables non-linear behavior even though the model is linear.

This can potentially lead to better accuracy, which is also evident from the image below:

A linear model with feature discretization results in:

a non-linear decision boundary.

better test accuracy.

So, in a way, we get to use a simple linear model but still get to learn non-linear patterns.

Isn’t that simple yet effective?
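The comparison described above is for a classifier, but a regression version is easier to show in a few lines. This sketch uses synthetic data, NumPy least squares, and 10 equal-width bins, all of which are arbitrary choices. It fits the same linear model twice: once on the raw feature and once on its one-hot encoded bins. The binned model can follow a sine curve piece by piece, which a straight line on the raw feature cannot.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n):
    """Synthetic non-linear data: y = sin(x) + small Gaussian noise (illustrative only)."""
    x = rng.uniform(0, 2 * np.pi, n)
    return x, np.sin(x) + rng.normal(0, 0.1, n)

def fit_predict(X_train, y_train, X_test):
    """Ordinary least squares via np.linalg.lstsq; returns predictions for X_test."""
    coef, *_ = np.linalg.lstsq(X_train, y_train, rcond=None)
    return X_test @ coef

x_tr, y_tr = make_data(500)
x_te, y_te = make_data(500)

# (a) Linear model on the raw feature: columns [1, x]
raw_tr = np.column_stack([np.ones_like(x_tr), x_tr])
raw_te = np.column_stack([np.ones_like(x_te), x_te])
mse_raw = np.mean((fit_predict(raw_tr, y_tr, raw_te) - y_te) ** 2)

# (b) The same linear model on 10 equal-width bins, one-hot encoded.
# Edges come from the training data only; test values outside the training range
# fall into the first or last bin because only the interior edges are used.
n_bins = 10
edges = np.linspace(x_tr.min(), x_tr.max(), n_bins + 1)[1:-1]
onehot = np.eye(n_bins)
bin_tr = onehot[np.digitize(x_tr, edges)]
bin_te = onehot[np.digitize(x_te, edges)]
mse_binned = np.mean((fit_predict(bin_tr, y_tr, bin_te) - y_te) ** 2)

print(f"test MSE, raw feature:    {mse_raw:.3f}")
print(f"test MSE, binned feature: {mse_binned:.3f}")
```

The one-hot columns in (b) always sum to 1, so they already act as an intercept. Adding a constant column would make the design matrix rank-deficient.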

Another advantage of discretizing continuous features is that it helps us improve the signal-to-noise ratio.

Simply put, “signal” refers to the meaningful or valuable information in the data.

Binning a feature helps us mitigate the influence of minor fluctuations, which are often mere noise.

Each bin acts as a means of “smoothing” out the noise within specific data segments.
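One way to see this smoothing effect is to add a small jitter to a feature and count how often its bin changes. The ages, the noise level (about 0.5 years), and the six equal-width bins below are hypothetical choices. Only values close to a bin edge can switch bins, so most of the jitter is absorbed.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical feature: 1,000 ages, plus a noisy re-measurement (about 0.5 years of jitter)
ages = rng.uniform(18, 80, 1000)
jittered = ages + rng.normal(0, 0.5, ages.size)

# Six equal-width bins over [18, 80]; only the five interior edges are needed
edges = np.linspace(18, 80, 7)[1:-1]

raw_changed = np.mean(ages != jittered)  # share of raw values that moved at all
changed = np.mean(np.digitize(ages, edges) != np.digitize(jittered, edges))  # share that switched bins
print(f"raw values changed: {raw_changed:.0%}, bin assignments changed: {changed:.1%}")
```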

Before I conclude, do remember that feature discretization with one-hot encoding increases the number of features → thereby increasing the data dimensionality.

And typically, as we move to higher dimensions, data become more easily linearly separable, which makes it easier for a model to fit the noise in the training data. Thus, feature discretization can lead to overfitting.

To avoid this, don’t discretize every feature, and don’t use more bins than you need.

Instead, use it when it makes intuitive sense, as we saw earlier.
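This sketch shows how over-discretizing can backfire. It uses synthetic y = sin(x) data, and all of the numbers are made up. With only 100 training samples, 200 bins let the linear model nearly memorize the training set. Meanwhile, many test points fall into bins that were empty during training. Because `np.linalg.lstsq` returns the minimum-norm solution, those empty bins get a coefficient of 0, and their test points are predicted as 0.

```python
import numpy as np

rng = np.random.default_rng(1)

def make_data(n):
    """Synthetic y = sin(x) + Gaussian noise, purely for illustration."""
    x = rng.uniform(0, 2 * np.pi, n)
    return x, np.sin(x) + rng.normal(0, 0.1, n)

def binned_mse(x_tr, y_tr, x_te, y_te, n_bins):
    """Least squares on one-hot equal-width bins; returns (train MSE, test MSE)."""
    edges = np.linspace(x_tr.min(), x_tr.max(), n_bins + 1)[1:-1]
    onehot = np.eye(n_bins)
    X_tr = onehot[np.digitize(x_tr, edges)]
    X_te = onehot[np.digitize(x_te, edges)]
    # lstsq returns the minimum-norm solution, so bins with no training samples get coefficient 0
    coef, *_ = np.linalg.lstsq(X_tr, y_tr, rcond=None)
    return np.mean((X_tr @ coef - y_tr) ** 2), np.mean((X_te @ coef - y_te) ** 2)

x_tr, y_tr = make_data(100)    # deliberately small training set
x_te, y_te = make_data(1000)

results = {n_bins: binned_mse(x_tr, y_tr, x_te, y_te, n_bins) for n_bins in (10, 200)}
for n_bins, (train_mse, test_mse) in results.items():
    print(f"{n_bins:>3} one-hot columns: train MSE {train_mse:.3f}, test MSE {test_mse:.3f}")
```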

Of course, its utility can vastly vary from one application to another, but at times, I have found that:

Discretizing geospatial data like latitude and longitude can be useful.

Discretizing age/weight-related data can be useful.

Discretizing features that are typically constrained to a range, like savings/income (practically speaking), can also make sense.
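For latitude and longitude, one common form of discretization is a fixed grid, where each point maps to the cell it falls in. The minimal sketch below assumes 1-degree cells (a hypothetical choice) and uses a hypothetical helper, `grid_cell`.

```python
import numpy as np

def grid_cell(lat, lon, cell_deg=1.0):
    """Map latitude/longitude (in degrees) to an integer grid-cell id.

    cell_deg is a hypothetical cell size; smaller cells mean more (and sparser) categories.
    """
    lat, lon = np.asarray(lat, dtype=float), np.asarray(lon, dtype=float)
    n_rows, n_cols = int(np.ceil(180 / cell_deg)), int(np.ceil(360 / cell_deg))
    # Shift to non-negative ranges, then clip so lat = 90 and lon = 180 stay in the last cell
    row = np.clip(np.floor((lat + 90) / cell_deg).astype(int), 0, n_rows - 1)
    col = np.clip(np.floor((lon + 180) / cell_deg).astype(int), 0, n_cols - 1)
    return row * n_cols + col

# Two points in central Paris share a cell; a point in London does not
print(grid_cell([48.85, 48.86, 51.51], [2.35, 2.29, -0.13]))
```

The resulting cell ids are categories, so they would be one-hot encoded like any other bin index. Keep in mind that two nearby points on opposite sides of a cell border still land in different cells, so the choice of cell size matters.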

👉 Over to you: What are some other things to take care of when using feature discretization?

Are you overwhelmed with the amount of information in ML/DS?

Every week, I publish no-fluff deep dives on topics that truly matter to your skills for ML/DS roles.

For instance:

A Beginner-friendly Introduction to Kolmogorov-Arnold Networks (KANs).

5 Must-Know Ways to Test ML Models in Production (Implementation Included).

Understanding LoRA-derived Techniques for Optimal LLM Fine-tuning

8 Fatal (Yet Non-obvious) Pitfalls and Cautionary Measures in Data Science

Implementing Parallelized CUDA Programs From Scratch Using CUDA Programming

You Are Probably Building Inconsistent Classification Models Without Even Realizing.

How To (Immensely) Optimize Your Machine Learning Development and Operations with MLflow.

And many many more.

SPONSOR US

Get your product in front of more than 76,000 data scientists and other tech professionals.

Our newsletter puts your products and services directly in front of an audience that matters — thousands of leaders, senior data scientists, machine learning engineers, data analysts, etc., who have influence over significant tech decisions and big purchases.

To ensure your product reaches this influential audience, reserve your space here or reply to this email.