Function calling & MCP for LLMs

...explained visually.



Before MCP became mainstream (or as popular as it is right now), most AI workflows relied on traditional Function Calling.

Now, MCP (Model Context Protocol) is introducing a shift in how developers structure tool access and orchestration for Agents.

Here’s a visual that explains Function calling & MCP:

Let’s dive in to learn more!

What is Function Calling?

Function Calling is a mechanism that allows an LLM to recognize, based on the user's input, which tool it needs and when to invoke it.

Here’s how it typically works:

The LLM receives a prompt from the user.

The LLM decides which tool it needs (and with what arguments).

The programmer implements procedures to accept the tool-call request from the LLM and prepare a function call.

The function call (with parameters) is passed to a backend service that handles the actual execution.

Let’s understand this in action real quick!

First, let’s define a tool function, get_stock_price. It uses the yfinance library to fetch the latest closing price for a specified stock ticker:
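A minimal sketch of such a tool, assuming yfinance's Ticker(...).history(period=...) API, which returns a pandas DataFrame with a Close column; the exact code in the original may differ. The yfinance import sits inside the function only so the snippet can be loaded without yfinance installed.

```python
def get_stock_price(ticker: str) -> float:
    """Return the latest closing price for a stock ticker such as 'AAPL'."""
    # Deferred import: the module loads even where yfinance is not installed.
    import yfinance as yf

    # history(period="1d") returns a DataFrame covering the most recent trading day.
    history = yf.Ticker(ticker).history(period="1d")
    # The last value in the Close column is the latest closing price.
    # An unknown ticker yields an empty DataFrame, so iloc[-1] raises IndexError.
    return float(history["Close"].iloc[-1])
```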

Next, we prompt an LLM (served with Ollama) and pass the tools the model can access for external information (if needed):
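A hedged sketch of this call with the ollama Python package. The tool is described in the JSON-Schema-style {"type": "function", "function": {...}} format that Ollama's chat API accepts; the model name llama3.2 is an assumption (any locally pulled model with tool-calling support should work), and STOCK_TOOL and ask_with_tools are illustrative names, not library functions.

```python
# JSON-Schema description of get_stock_price, in the format Ollama's chat API accepts.
STOCK_TOOL = {
    "type": "function",
    "function": {
        "name": "get_stock_price",
        "description": "Get the latest closing price for a stock ticker.",
        "parameters": {
            "type": "object",
            "properties": {
                "ticker": {
                    "type": "string",
                    "description": "Stock ticker symbol, e.g. AAPL",
                },
            },
            "required": ["ticker"],
        },
    },
}


def ask_with_tools(prompt: str, model: str = "llama3.2"):
    """Send the user's prompt plus the available tools to a locally served model.

    Assumes an Ollama server is running and the (assumed) model has been pulled.
    """
    import ollama  # deferred so STOCK_TOOL can be inspected without ollama installed

    return ollama.chat(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        tools=[STOCK_TOOL],
    )
```

Calling ask_with_tools("What is the current stock price of Microsoft?") needs a running Ollama server. If the model decides it needs external information, the reply's message carries a tool_calls entry naming get_stock_price and its arguments.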

Printing the response, we get:

Notice that the message key in the above response object has tool_calls, which includes relevant details, such as:

tool.function.name: The name of the tool to be called.

tool.function.arguments: The arguments required by the tool.

Thus, we can utilize this info to produce a response as follows:
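A minimal sketch of that step. It assumes the response shape described above (response.message.tool_calls, each entry with function.name and function.arguments as a dict), which is what recent versions of the ollama Python package return; run_tool_calls and available_functions are illustrative names.

```python
from typing import Any, Callable


def run_tool_calls(response: Any, available_functions: dict[str, Callable[..., Any]]) -> list[Any]:
    """Execute every tool call the model requested and collect the outputs."""
    outputs = []
    # tool_calls is None or empty when the model answered directly.
    for tool in response.message.tool_calls or []:
        function_to_call = available_functions.get(tool.function.name)
        if function_to_call is None:
            # The model asked for a tool we never registered; report it instead of crashing.
            outputs.append(f"Unknown tool: {tool.function.name}")
            continue
        # arguments is already a dict, e.g. {"ticker": "MSFT"}.
        outputs.append(function_to_call(**tool.function.arguments))
    return outputs
```

Passing {"get_stock_price": get_stock_price} as the registry maps the tool name in the response to the function defined earlier; the returned values can be printed directly or sent back to the model so it can phrase a final answer.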

This produces the following output:

Notice that the entire process happened within our application context. We were responsible for:

Hosting and maintaining the tools/APIs.

Implementing the logic to determine which tool(s) to invoke and with what parameters.

Handling the tool execution and scaling it if needed.

Managing authentication and error handling.

In short, Function Calling is about enabling dynamic tool use within your own stack—but it still requires you to wire everything manually.

What is MCP?

MCP, or Model Context Protocol, attempts to standardize this process.

While Function Calling focuses on what the model wants to do, MCP focuses on how tools are made discoverable and consumable—especially across multiple agents, models, or platforms.

Instead of hard-wiring tools inside every app or agent, MCP:

Standardizes how tools are defined, hosted, and exposed to LLMs.

Makes it easy for an LLM to discover available tools, understand their schemas, and use them.

Provides approval and audit workflows before tools are invoked.

Separates the concern of tool implementation from consumption.
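Under the hood, MCP messages are JSON-RPC 2.0: a client discovers tools with a tools/list request (each tool advertises a name, a description, and a JSON Schema inputSchema) and invokes one with tools/call. The toy handler below illustrates only that shape. It is an in-process sketch with a stubbed tool, not a real MCP server (no transport, no initialize handshake), and TOOLS, handle_request, and fake_scrape are hypothetical names; the official MCP SDKs take care of the full protocol.

```python
import json
from typing import Any


def fake_scrape(url: str) -> str:
    """Hypothetical stand-in for a real scraping tool."""
    return f"<contents of {url}>"


# What this toy server advertises (name, description, JSON Schema input),
# plus the Python function that actually runs each tool.
TOOLS: dict[str, dict[str, Any]] = {
    "scrape": {
        "description": "Scrape a web page and return its text.",
        "inputSchema": {
            "type": "object",
            "properties": {"url": {"type": "string"}},
            "required": ["url"],
        },
        "handler": fake_scrape,
    },
}


def _reply(request_id: Any, **body: Any) -> str:
    """Wrap a result or an error in a JSON-RPC 2.0 response envelope."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, **body})


def handle_request(raw: str) -> str:
    """Answer one JSON-RPC request for tools/list or tools/call."""
    request = json.loads(raw)
    request_id = request.get("id")
    params = request.get("params", {})

    if request["method"] == "tools/list":
        # Discovery: expose each tool's name, description and schema, never its code.
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return _reply(request_id, result={"tools": tools})

    if request["method"] == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _reply(request_id, error={"code": -32602, "message": "Unknown tool"})
        # Invocation: run the tool and return its output as text content.
        text = tool["handler"](**params.get("arguments", {}))
        return _reply(request_id, result={"content": [{"type": "text", "text": text}], "isError": False})

    return _reply(request_id, error={"code": -32601, "message": "Method not found"})
```

The consumer never imports fake_scrape; it only sees the advertised schema and the text content that comes back, which is the separation of implementation from consumption described above.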

Let’s understand this in action real quick by integrating Firecrawl's MCP server to use its scraping tools within the Cursor IDE.

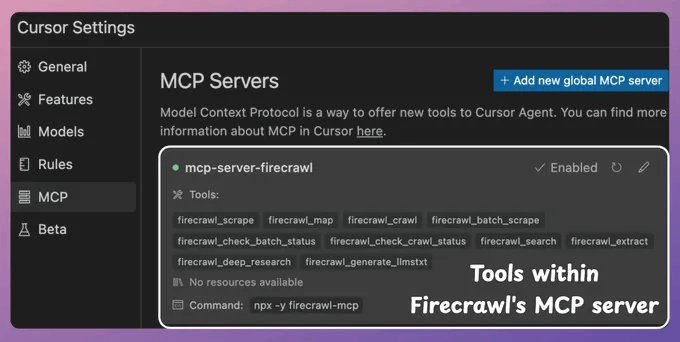

To do this, go to Settings → MCP → Add new global MCP server.

In the JSON file, add what's shown below👇
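A hedged sketch of that entry, based on the configuration documented in Firecrawl's MCP server README: a server registered under an mcpServers key, launched with npx -y firecrawl-mcp, and given its API key through the FIRECRAWL_API_KEY environment variable. The server label and key value are placeholders, and the original screenshot may differ. The snippet builds the JSON with Python's json module and prints it for pasting into Cursor's MCP JSON file.

```python
import json

# Placeholder: substitute your own Firecrawl API key.
FIRECRAWL_API_KEY = "YOUR-API-KEY"

cursor_mcp_config = {
    "mcpServers": {
        # The label is just a display name for this server entry.
        "firecrawl-mcp": {
            # Cursor starts the server as a local process via npx.
            "command": "npx",
            "args": ["-y", "firecrawl-mcp"],
            # The server reads its credentials from the environment.
            "env": {"FIRECRAWL_API_KEY": FIRECRAWL_API_KEY},
        }
    }
}

print(json.dumps(cursor_mcp_config, indent=2))
```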

Once done, you will find all the tools exposed by Firecrawl's MCP server, which your Agents can now use!

Notice that we didn't write a line of Python code to integrate Firecrawl's tools. Instead, we just integrated the MCP server.

Next, let's interact with this MCP server.

As shown in the video, when asked to list the imports of the CrewAI tools mentioned in my blog, the Agent:

Identified the MCP tool (scraper).

Prepared the input argument.

Invoked the scraping tool.

Used the tool’s output to generate a response.
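Roughly, a host like Cursor turns those four steps into MCP messages. The sketch below is a simplified, hypothetical client: send stands in for whatever transport carries JSON-RPC to the server (for a server launched with npx, that is typically stdio), the model is assumed to have already picked tool_name, and rpc and call_mcp_tool are illustrative names rather than an SDK API.

```python
import json
from itertools import count
from typing import Any, Callable, Optional

# A transport function: sends one JSON-RPC message and returns the raw reply.
Send = Callable[[str], str]
_request_ids = count(1)


def rpc(send: Send, method: str, params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Send one JSON-RPC 2.0 request and return its result, raising on errors."""
    request: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        request["params"] = params
    reply = json.loads(send(json.dumps(request)))
    if "error" in reply:
        raise RuntimeError(reply["error"]["message"])
    return reply["result"]


def call_mcp_tool(send: Send, tool_name: str, arguments: dict[str, Any]) -> str:
    """Mirror the four steps: identify, prepare, invoke, use the output."""
    # 1. Identify the tool: the model proposes tool_name; confirm the server lists it.
    tools = {tool["name"]: tool for tool in rpc(send, "tools/list")["tools"]}
    if tool_name not in tools:
        raise ValueError(f"server does not expose {tool_name!r}")

    # 2. Prepare the arguments: check them against the tool's required fields.
    required = tools[tool_name]["inputSchema"].get("required", [])
    missing = [field for field in required if field not in arguments]
    if missing:
        raise ValueError(f"missing arguments: {missing}")

    # 3. Invoke the tool on the server.
    result = rpc(send, "tools/call", {"name": tool_name, "arguments": arguments})

    # 4. Collect the text content, which the host feeds back to the model.
    text = "\n".join(item["text"] for item in result["content"] if item["type"] == "text")
    if result.get("isError"):
        raise RuntimeError(text)
    return text
```

For the blog example, the call would look like call_mcp_tool(send, "firecrawl_scrape", {"url": ...}); the tool name firecrawl_scrape and its url argument are assumptions based on Firecrawl's README, and the blog URL is left out.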

So, to put it another way, think of MCP as infrastructure.

It creates a shared ecosystem where tools are treated like standardized services—similar to how REST APIs or gRPC endpoints work in traditional software engineering.

Here’s the key point: MCP and Function Calling are not in conflict. They’re two sides of the same workflow.

Function Calling helps an LLM decide what it wants to do.

MCP ensures that tools are reliably available, discoverable, and executable—without you needing to custom-integrate everything.

For example, an agent might say, “I need to search the web,” using function calling.

That request can be routed through MCP to select from available web search tools, invoke the correct one, and return the result in a standard format.
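The two halves can meet in a small adapter: an MCP tool's inputSchema is a JSON Schema object, which is also what the parameters field of a function-calling tool definition takes, and the model's resulting tool call maps directly onto an MCP tools/call request. A minimal sketch, assuming the {"type": "function", "function": {...}} tool format used by Ollama and OpenAI-style chat APIs; mcp_tools_to_function_schemas and tool_call_to_mcp_request are hypothetical helper names, and the web_search tool in the example is made up.

```python
from typing import Any


def mcp_tools_to_function_schemas(mcp_tools: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Convert a tools/list result into function-calling tool definitions."""
    return [
        {
            "type": "function",
            "function": {
                "name": tool["name"],
                "description": tool.get("description", ""),
                # Both sides use a JSON Schema object, so it can usually pass through as-is.
                "parameters": tool["inputSchema"],
            },
        }
        for tool in mcp_tools
    ]


def tool_call_to_mcp_request(name: str, arguments: dict[str, Any], request_id: int) -> dict[str, Any]:
    """Route the model's function call to the MCP server as a tools/call request."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
```

Function calling decides that web_search should run with {"query": ...}; the adapter hands that decision to whichever MCP server advertised web_search, and the server's result comes back in the standard content format.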

Here’s the visual again for your reference:

If you don't know about MCP servers, we covered them recently in the newsletter here:

Over to you: What is your perspective on Function Calling and MCPs?

Thanks for reading!

