[Hands-on] RAG Over GitHub Repos

...using GitIngest and Llama-3.2 (100% local).

Ragie Connect: Build RAG Apps Over Users' Data in No Time

Setting up a RAG infra from scratch can be an absolute nightmare. Here’s what you must build:

OAuth for third-party data integrations.

Designing UIs for ingesting user data.

Continuous sync for data updates.

User data partitioning.

Ragie Connect provides the complete infrastructure to handle authentication, authorization, and syncing for your users’ data from several sources:

Google Drive

Salesforce

Notion

Jira

etc.

If you’re looking to integrate RAG into your platform in no time, start with Ragie.

Thanks to Ragie for partnering today.

RAG over GitHub repos

Continuing the discussion from Ragie...

Today, we'll show you how we built a RAG app over GitHub repos using:

GitIngest to parse the entire repo into markdown.

Llama-3.2 as the LLM.

Let's build it now.

Step 1) Parse GitHub

GitIngest turns any Git repository into a simple text digest of its codebase. It returns a summary, the directory structure, and the contents of all files parsed to markdown.

We use this as follows:
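A minimal sketch of this step, assuming the `gitingest` Python package and its `ingest` function, which returns a `(summary, tree, content)` tuple. The helper names `fetch_digest` and `digest_to_markdown`, and the section headings they write, are hypothetical and only for illustration:

```python
def fetch_digest(repo_url: str) -> tuple[str, str, str]:
    """Return (summary, tree, content) for a repository via GitIngest."""
    # Imported lazily so the formatting helper below works even
    # when gitingest is not installed.
    from gitingest import ingest

    summary, tree, content = ingest(repo_url)
    return summary, tree, content


def digest_to_markdown(summary: str, tree: str, content: str) -> str:
    """Join the three GitIngest outputs into one markdown document.

    The heading names are an illustrative choice, not something
    GitIngest prescribes.
    """
    return (
        f"# Summary\n\n{summary.strip()}\n\n"
        f"# Directory structure\n\n{tree.strip()}\n\n"
        f"# Files\n\n{content.strip()}\n"
    )


# Example usage (needs network access and `pip install gitingest`):
# summary, tree, content = fetch_digest("https://github.com/<owner>/<repo>")
# markdown_text = digest_to_markdown(summary, tree, content)
```

Keeping the network call and the formatting in separate functions makes it easy to inspect the digest before anything is indexed.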

We also save the above content to a local file, which is then loaded with LlamaIndex's SimpleDirectoryReader as follows:
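One way this could look, as a sketch: the directory name `repo_digest`, the file name `digest.md`, and the helper names are assumptions, while `SimpleDirectoryReader` and its `load_data()` method come from `llama_index.core`:

```python
from pathlib import Path


def save_digest(markdown_text: str, data_dir: str = "repo_digest") -> Path:
    """Write the repo digest to <data_dir>/digest.md and return its path."""
    out_dir = Path(data_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    out_path = out_dir / "digest.md"
    out_path.write_text(markdown_text, encoding="utf-8")
    return out_path


def load_documents(data_dir: str = "repo_digest"):
    """Load every markdown file under data_dir as LlamaIndex documents."""
    # Imported lazily so save_digest stays usable without llama-index.
    from llama_index.core import SimpleDirectoryReader

    loader = SimpleDirectoryReader(
        input_dir=data_dir,
        required_exts=[".md"],  # only pick up the saved digest files
        recursive=True,
    )
    return loader.load_data()


# Example usage:
# save_digest(markdown_text)
# docs = load_documents()
```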

Step 2) Set up LLM and embedding model

Now that our knowledge base is ready, we set up the LLM and embedding model.

We use Ollama to run the LLM locally and an embedding model from HuggingFace.
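A hedged sketch of this setup, assuming the `llama-index-llms-ollama` and `llama-index-embeddings-huggingface` integration packages are installed and that the model has been pulled with `ollama pull llama3.2`. The embedding model name below is an assumption (the text does not say which one is used), as are the `ModelConfig` and `configure_models` names:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConfig:
    """Model choices for the pipeline (all values are illustrative defaults)."""
    llm_model: str = "llama3.2"                    # Ollama model tag
    embed_model: str = "BAAI/bge-small-en-v1.5"    # assumed HuggingFace model
    request_timeout: float = 120.0                 # seconds; local models can be slow


def configure_models(config: ModelConfig = ModelConfig()) -> None:
    """Register the LLM and embedding model as LlamaIndex global defaults."""
    # Imported lazily so ModelConfig can be used without these packages.
    from llama_index.core import Settings
    from llama_index.embeddings.huggingface import HuggingFaceEmbedding
    from llama_index.llms.ollama import Ollama

    Settings.llm = Ollama(
        model=config.llm_model,
        request_timeout=config.request_timeout,
    )
    Settings.embed_model = HuggingFaceEmbedding(model_name=config.embed_model)


# Example usage (requires a running Ollama server):
# configure_models()
```

Setting the models on `Settings` means later index and query calls pick them up without passing them explicitly.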

Step 3) Embed data, create an index and chat

We pass the loaded documents (docs = loader.load_data()) to a vector store index, and then chat with the repo as follows:
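A minimal sketch of indexing and querying, assuming `Settings.llm` and `Settings.embed_model` were configured as described in Step 2. `VectorStoreIndex.from_documents` and `as_query_engine` are LlamaIndex calls; the helper names and the `top_k` default are hypothetical:

```python
def build_query_engine(docs, top_k: int = 4):
    """Embed the documents into an in-memory vector index and return a query engine."""
    # Imported lazily so `ask` below can be used with any engine-like object.
    from llama_index.core import VectorStoreIndex

    index = VectorStoreIndex.from_documents(docs, show_progress=True)
    # Retrieve the top_k most similar chunks for each question.
    return index.as_query_engine(similarity_top_k=top_k)


def ask(query_engine, question: str) -> str:
    """Send one question to the query engine and return the answer as text."""
    response = query_engine.query(question)
    return str(response)


# Example usage:
# query_engine = build_query_engine(docs)
# print(ask(query_engine, "What does this repository do?"))
```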

Done!

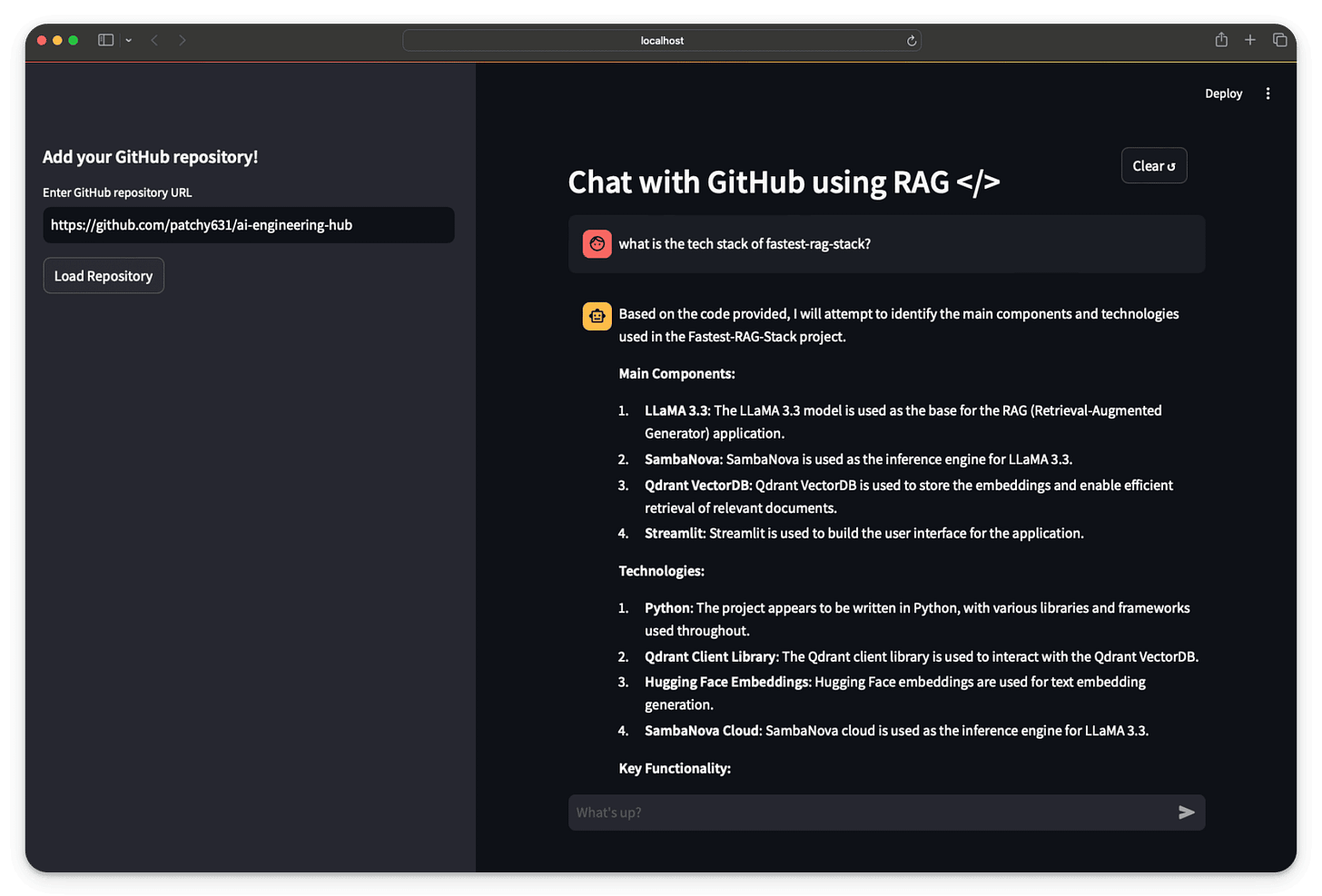

There’s some streamlit part (full code here) we haven't shown here, but after building it, we get this clear and neat interface:

We hope this was a good place to start with RAG over complex docs!

We'll cover more advanced techniques soon in our RAG crash course, specifically around Agentic RAG, etc.

In the meantime, don't forget to check out Ragie to easily integrate RAG into your apps in no time.

Thanks for reading!