[Hands-on] Tool calling in LLMs

Integrate specialized tools with LLMs.

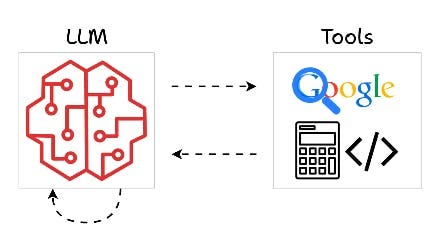

When generating text, for example in a RAG system, an LLM may need to invoke external tools or APIs to perform tasks beyond its built-in capabilities.

This is known as tool calling. It turns the AI into a coordinator that delegates tasks it cannot handle internally to specialized tools.

The process is:

1. Recognize when a task requires external assistance.

2. Invoke the appropriate tool or API for that task.

3. Process the tool's output and integrate it into its response.

Today, let us show you how to implement tool calling.

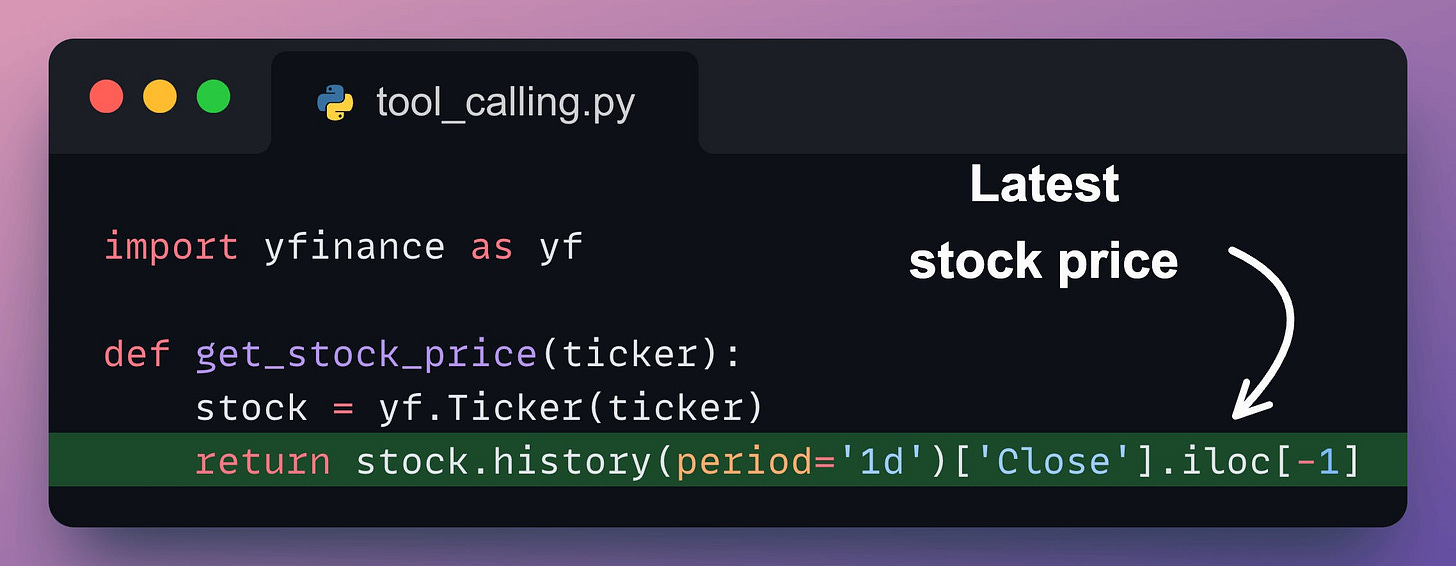

We’ll build a stock price retrieval assistant that combines the natural language capabilities of an LLM with the real-time data retrieval capabilities of the yfinance library.

Demo

First, we define a tool function, get_stock_price. It uses the yfinance library to fetch the latest closing price for a specified stock ticker:
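A minimal sketch of such a function, assuming yfinance is installed and that the last row of a one-day history holds the latest close. The type hints and docstring are there on purpose: recent versions of the Ollama Python library can read them to describe the tool to the model. The empty-data check is our own addition, not something the demo requires.

```python
def get_stock_price(ticker: str) -> float:
    """Get the latest closing price for a stock ticker.

    Args:
        ticker: Stock ticker symbol, for example "AAPL".

    Returns:
        The most recent closing price reported by yfinance.
    """
    # Imported inside the function so this module still loads
    # on machines where yfinance is not installed.
    import yfinance as yf

    history = yf.Ticker(ticker).history(period="1d")
    if history.empty:
        # Assumption: an empty frame means no data exists for this symbol.
        raise ValueError(f"No price data returned for ticker {ticker!r}")
    # The last row of the "Close" column is the latest closing price.
    return float(history["Close"].iloc[-1])
```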

Next, we prompt the LLM using Ollama and pass the tools the model can access for external information (if needed):
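One way this call could look, assuming the ollama Python package is installed, an Ollama server is running locally, and a tool-capable model has been pulled. The helper name ask_with_tools, the model name "llama3.2", and the example prompt are our own placeholders.

```python
from typing import Any, Callable, Sequence


def ask_with_tools(
    prompt: str,
    tools: Sequence[Callable[..., Any]],
    model: str = "llama3.2",  # assumption: any locally pulled model with tool support
) -> Any:
    """Send one user prompt to a local Ollama model and expose the given tools."""
    # Imported lazily; requires `pip install ollama` and a running Ollama server.
    import ollama

    return ollama.chat(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        # Plain Python functions; the model decides whether to request them.
        tools=list(tools),
    )


# Example call (hypothetical prompt):
# response = ask_with_tools("What is the stock price of Apple?", [get_stock_price])
# print(response)
```

Note that the model does not run the function itself. It only returns a request naming the tool and its arguments.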

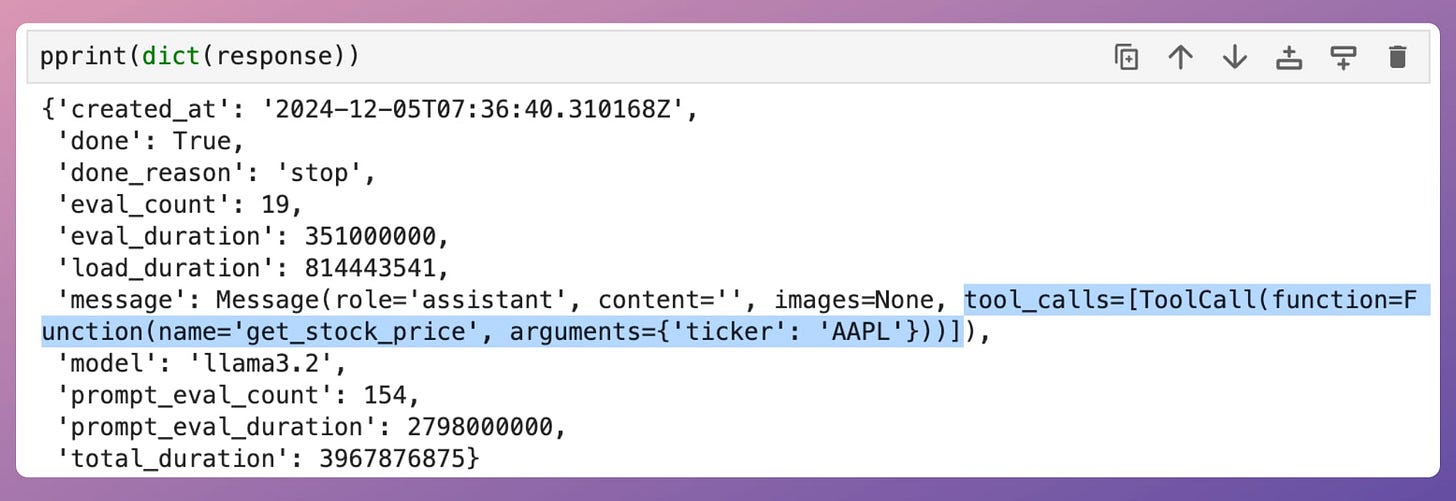

Printing the response, we get:

Notice that the message key in the above response object has tool_calls, which includes relevant details, such as:

- tool.function.name: The name of the tool to be called.

- tool.function.arguments: The arguments required by the tool.
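To inspect these fields programmatically, a small helper like the one below could be used. It is a sketch that assumes the response exposes message.tool_calls as described above; the helper name list_tool_calls is hypothetical.

```python
from typing import Any


def list_tool_calls(response: Any) -> list[tuple[str, dict]]:
    """Return (tool name, arguments) pairs requested by the model, if any."""
    # tool_calls may be None when the model answers directly without tools.
    tool_calls = response.message.tool_calls or []
    return [
        (call.function.name, dict(call.function.arguments))
        for call in tool_calls
    ]
```

An empty list means the model chose to answer without calling any tool.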

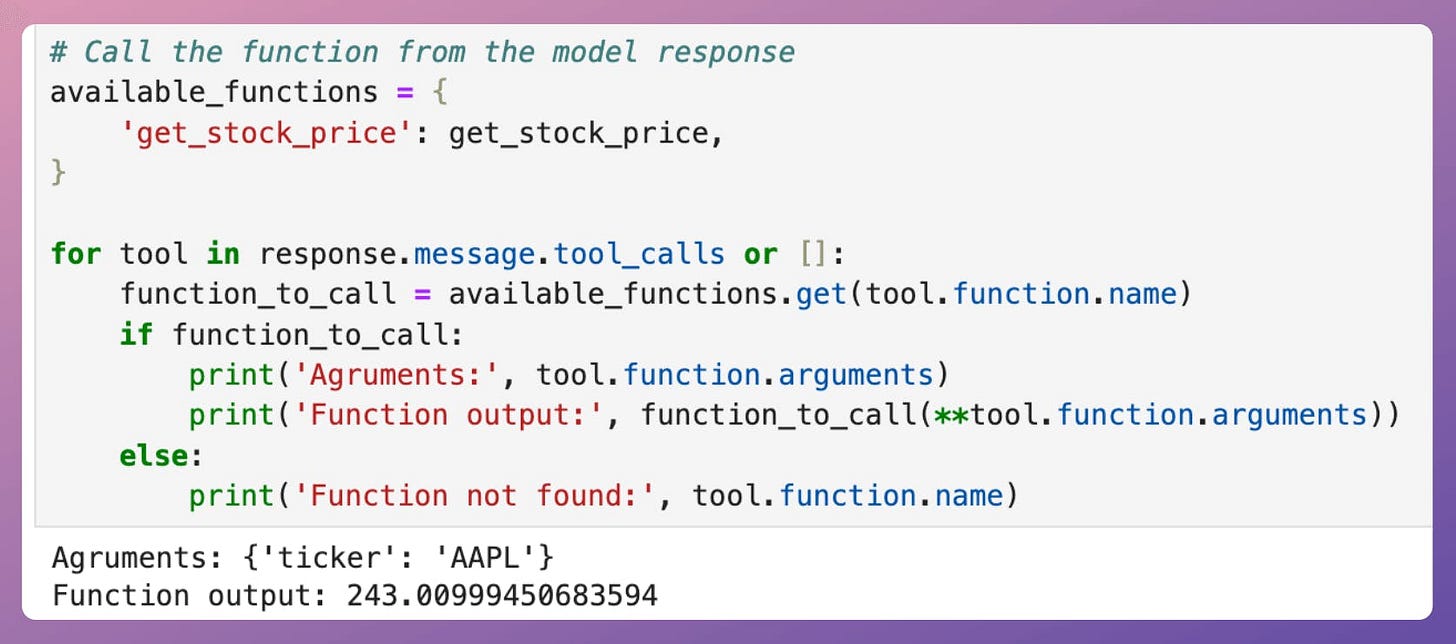

Thus, we can utilize this info to produce a response as follows:
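A sketch of that dispatch step, assuming the tools are registered in a dictionary keyed by function name. The helper name run_tool_calls is our own; it looks up each requested tool, unpacks the arguments as keyword arguments, and prints the result.

```python
from typing import Any, Callable, Mapping


def run_tool_calls(
    response: Any,
    available_functions: Mapping[str, Callable[..., Any]],
) -> list[Any]:
    """Execute each tool call requested in the response and collect the outputs."""
    outputs = []
    for call in response.message.tool_calls or []:
        function_to_call = available_functions.get(call.function.name)
        if function_to_call is None:
            # The model asked for a tool that was never registered; skip it.
            print("Function not found:", call.function.name)
            continue
        # Arguments arrive as a mapping, so they unpack into keyword arguments.
        output = function_to_call(**call.function.arguments)
        print("Function output:", output)
        outputs.append(output)
    return outputs


# Example: run_tool_calls(response, {"get_stock_price": get_stock_price})
```

Looking tools up in an explicit dictionary means the model can only trigger functions that were deliberately registered.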

This produces the expected output: the latest closing price for the requested ticker.

Of course, the above output can also be passed back to the AI to generate a more vivid response, which we haven't shown here.
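For the curious, that follow-up step might look roughly like the sketch below. It assumes the same Ollama setup as before and that a message with the role "tool" is how the result is handed back; depending on the library version, an extra field naming the tool may also be accepted. The helper name answer_with_tool_result is hypothetical.

```python
from typing import Any


def answer_with_tool_result(
    messages: list,
    response: Any,
    tool_output: Any,
    model: str = "llama3.2",  # assumption: same model as the first call
) -> str:
    """Ask the model for a final reply after giving it the tool's result."""
    import ollama  # lazy import; needs the ollama package and a running server

    follow_up = list(messages)  # copy so the caller's history is not mutated
    follow_up.append(response.message)  # the assistant turn that requested the tool
    follow_up.append({"role": "tool", "content": str(tool_output)})
    final = ollama.chat(model=model, messages=follow_up)
    return final.message.content
```

Here messages is the original conversation, response is the first reply containing tool_calls, and tool_output is whatever the tool returned.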

But this simple demo shows that with tool calling, the assistant can be made more flexible and powerful to handle diverse user needs.

👉 Over to you: What else would you like to learn about in LLMs?