JSON prompting for LLMs

...explained visually.

Speech-to-text at unmatched accuracy with AssemblyAI

There are two techniques to identify and generate speaker-labeled transcripts from speech files:

Multichannel transcription: Each speaker has a dedicated channel (conference calls, Zoom meetings, etc.), leading to better accuracy.

Speaker diarization: All speakers belong to the same channel, which requires sophisticated techniques.

AssemblyAI’s SOTA transcription models can tackle both of them seamlessly in just six lines of code:

For multichannel transcription, set `multichannel=True`.

For speaker diarization, set `speaker_labels=True` and `speakers_expected`.
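As a rough sketch of how the two settings might be wired up with the `assemblyai` Python SDK: the helper names `config_kwargs` and `transcribe` are hypothetical, the audio path and API key are placeholders, and the SDK import is deferred so the first helper can be inspected without the package installed. Treat this as an illustration under those assumptions, not the official snippet.

```python
from typing import Optional


def config_kwargs(mode: str, speakers_expected: Optional[int] = None) -> dict:
    """Return keyword arguments for the chosen speaker-labeling mode."""
    if mode == "multichannel":
        # One dedicated channel per speaker.
        return {"multichannel": True}
    if mode == "diarization":
        # All speakers share one channel; optionally hint at how many there are.
        kwargs = {"speaker_labels": True}
        if speakers_expected is not None:
            kwargs["speakers_expected"] = speakers_expected
        return kwargs
    raise ValueError(f"unknown mode: {mode}")


def transcribe(audio_path: str, api_key: str, **kwargs):
    """Hypothetical wrapper: needs the assemblyai package and a valid API key."""
    import assemblyai as aai  # deferred import (assumption: SDK is installed)

    aai.settings.api_key = api_key
    config = aai.TranscriptionConfig(**kwargs)
    transcript = aai.Transcriber(config=config).transcribe(audio_path)
    # Each utterance carries a speaker label and the spoken text.
    return [(u.speaker, u.text) for u in transcript.utterances]


print(config_kwargs("multichannel"))
print(config_kwargs("diarization", speakers_expected=2))
```

Calling `transcribe("meeting.mp3", "YOUR_API_KEY", **config_kwargs("diarization", 2))` would then return speaker-labeled lines, assuming the file and key are valid.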

AssemblyAI’s speech-to-text models rank top across all major industry benchmarks. You can transcribe 1 hour of audio in ~35 seconds at an industry-leading accuracy of >93%.

Get your API key and get up to 330 free hours of speech-to-text →

Thanks to AssemblyAI for partnering with us on today’s issue.

JSON prompting for LLMs

Today, let us show you exactly what JSON prompting is and how it can drastically improve your AI outputs!

Natural language is powerful yet vague!

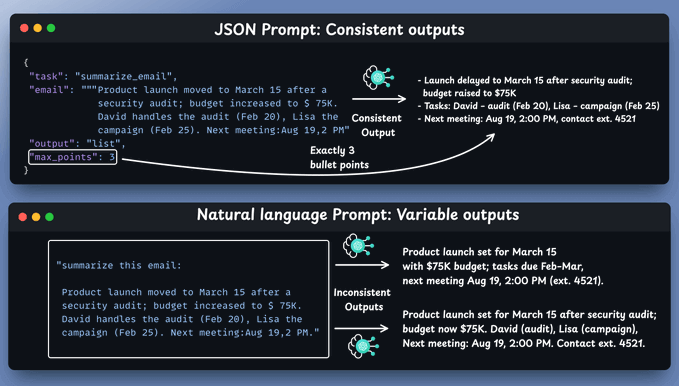

When you give instructions like "summarize this email" or "give me key takeaways," you leave room for interpretation, which can lead to hallucinations.

With JSON prompts, the outputs become far more consistent.

The reason JSON is so effective is that AI models are trained on massive amounts of structured data from APIs and web applications.

When you speak their "native language," they respond with laser precision!
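To make the contrast concrete, here is a minimal sketch that turns the vague "summarize this email" request into a JSON prompt. The field names (`task`, `audience`, `max_sentences`, and so on) and the sample email are illustrative assumptions, not a standard schema.

```python
import json

# A vague natural-language prompt: length, tone, and format are left to the model.
vague_prompt = "Summarize this email."

# The same request as a JSON prompt. Every field name is an illustrative assumption.
json_prompt = {
    "task": "summarize_email",
    "audience": "busy executive",
    "max_sentences": 3,
    "tone": "neutral",
    "output_format": {"summary": "string", "action_items": ["string"]},
}

email_text = "Hi team, the launch moves to Friday. Please update the release notes."

# Serialize the spec and append the input to form the text sent to the model.
prompt_text = json.dumps(json_prompt, indent=2) + "\n\nEMAIL:\n" + email_text
print(prompt_text)
```

Every decision the vague prompt leaves open (audience, length, tone, output shape) is now an explicit field.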

Let's understand this a bit more...

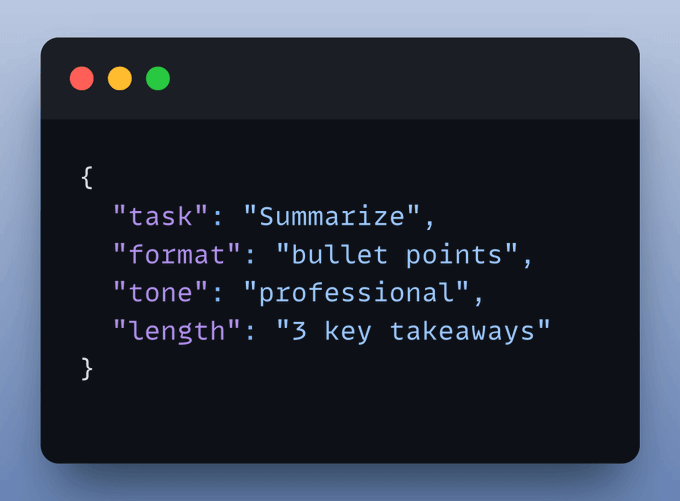

1️⃣ Structure means certainty

JSON forces you to think in terms of fields and values, which is a gift.

It eliminates gray areas and guesswork.

Here's a simple example:

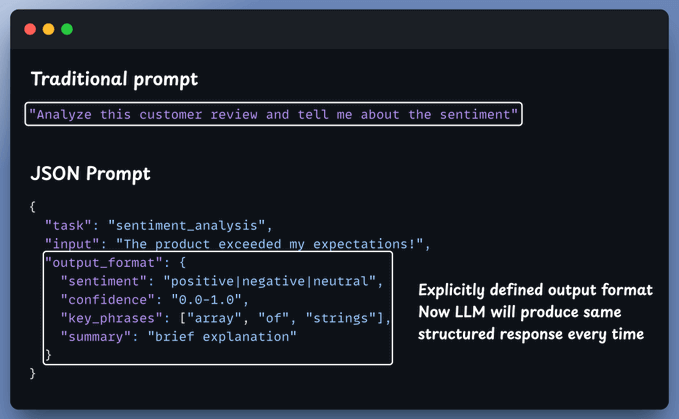

2️⃣ You control the outputs

Prompting isn't just about what you ask; it's about what you expect back.

This holds whether you are generating content, reports, or insights: JSON prompts help ensure a consistent structure every time.

No more surprises, just predictable results!
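One way to enforce what you expect back is to check the model's reply against the fields you asked for. The sketch below assumes a reply with `summary` and `action_items` fields; both the field names and the sample reply are made up for illustration.

```python
import json

# Fields we asked the model to return, with their expected Python types.
REQUIRED_FIELDS = {"summary": str, "action_items": list}


def parse_response(raw: str) -> dict:
    """Parse a model reply and verify it has the requested structure."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("reply is not a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in data:
            raise ValueError(f"missing field: {field}")
        if not isinstance(data[field], expected_type):
            raise ValueError(f"wrong type for field: {field}")
    return data


# A hypothetical reply from the model.
sample_reply = '{"summary": "Launch moved to Friday.", "action_items": ["Update release notes"]}'
parsed = parse_response(sample_reply)
print(parsed["summary"])
```

If a reply fails the check, you can retry the request or surface the error instead of passing malformed data downstream.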

3️⃣ Reusable templates → Scalability, Speed & Clean handoffs

You can turn JSON prompts into shareable templates for consistent outputs.

Teams can plug results directly into APIs, databases, and apps; no manual formatting, so work stays reliable and moves much faster.

We did something similar when we built a full-fledged MCP workflow using tools, resources, and prompts.
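The reusable-template idea can be sketched as a shared base prompt that each teammate fills in. `REPORT_TEMPLATE` and `fill_template` are hypothetical names, and the fields are assumptions chosen for illustration.

```python
import copy
import json

# A shared template; None marks a field the caller must fill in.
REPORT_TEMPLATE = {
    "task": "write_report",
    "topic": None,
    "sections": ["overview", "findings", "next_steps"],
    "max_words": 300,
    "output_format": "json",
}


def fill_template(template: dict, **overrides) -> str:
    """Return a JSON prompt built from the template plus per-use overrides."""
    unknown = set(overrides) - set(template)
    if unknown:
        raise KeyError(f"fields not in template: {sorted(unknown)}")
    prompt = copy.deepcopy(template)  # leave the shared template untouched
    prompt.update(overrides)
    return json.dumps(prompt, indent=2)


sales_prompt = fill_template(REPORT_TEMPLATE, topic="Q3 sales")
churn_prompt = fill_template(REPORT_TEMPLATE, topic="customer churn", max_words=150)
print(sales_prompt)
```

Rejecting unknown fields keeps every prompt built from the template in the same shape, which is what makes the results easy to hand off.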

So, are JSON prompts the best option?

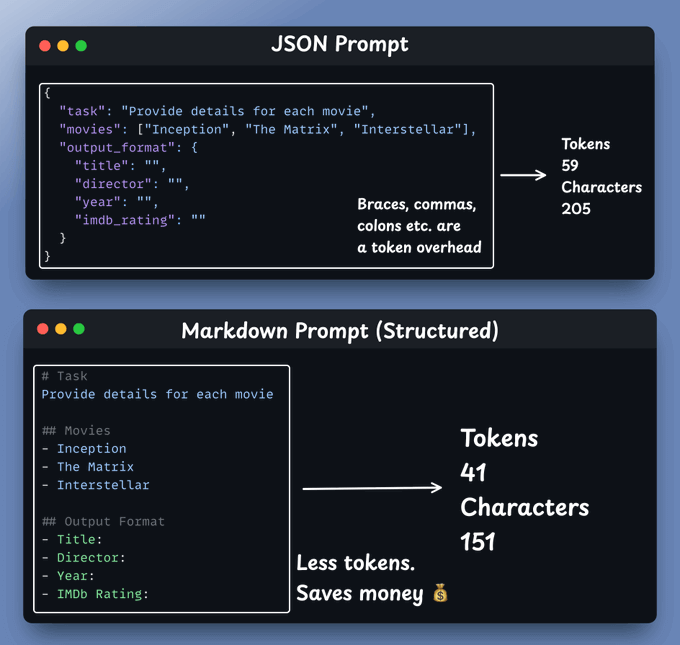

Well, good alternatives exist!

Many models excel at other formats:

Claude handles XML exceptionally well

Markdown provides structure without overhead

So it's mainly about structure rather than syntax.
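A small sketch of that point: the same prompt spec rendered as JSON, XML, and Markdown. The spec fields and the `<prompt>` wrapper tag are assumptions for illustration; the structure is identical in all three renderings.

```python
import json
from xml.sax.saxutils import escape

# One structured spec; only the surface syntax changes below.
spec = {"task": "summarize_email", "tone": "neutral", "max_sentences": 3}


def to_json(spec: dict) -> str:
    return json.dumps(spec, indent=2)


def to_xml(spec: dict) -> str:
    lines = ["<prompt>"]
    lines += [f"  <{key}>{escape(str(value))}</{key}>" for key, value in spec.items()]
    lines.append("</prompt>")
    return "\n".join(lines)


def to_markdown(spec: dict) -> str:
    return "\n".join(f"- **{key}**: {value}" for key, value in spec.items())


for render in (to_json, to_xml, to_markdown):
    print(render(spec), end="\n\n")
```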

To summarise:

Structured JSON prompting for LLMs is like writing modular code; it brings clarity of thought, makes adding new requirements easier, and creates better communication with AI.

It's not just a technique but a habit worth developing for cleaner AI interactions.

Thanks for reading!

P.S. For those wanting to develop “Industry ML” expertise:

At the end of the day, all businesses care about impact. That’s it!

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have covered several other topics (with implementations) that address these questions.

Here are some of them:

Learn everything about MCPs in this crash course with 9 parts →

Learn how to build Agentic systems in a crash course with 14 parts.

Learn how to build real-world RAG apps and evaluate and scale them in this crash course.

Learn sophisticated graph architectures and how to train them on graph data.

So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

Learn how to run large models on small devices using Quantization techniques.

Learn how to generate prediction intervals or sets with strong statistical guarantees for increasing trust using Conformal Predictions.

Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

Learn how to scale and implement ML model training in this practical guide.

Learn techniques to reliably test new models in production.

Learn how to build privacy-first ML systems using Federated Learning.

Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate key skills that businesses and companies care about the most.
