Label Smoothing for Regularization

Making models less overconfident.

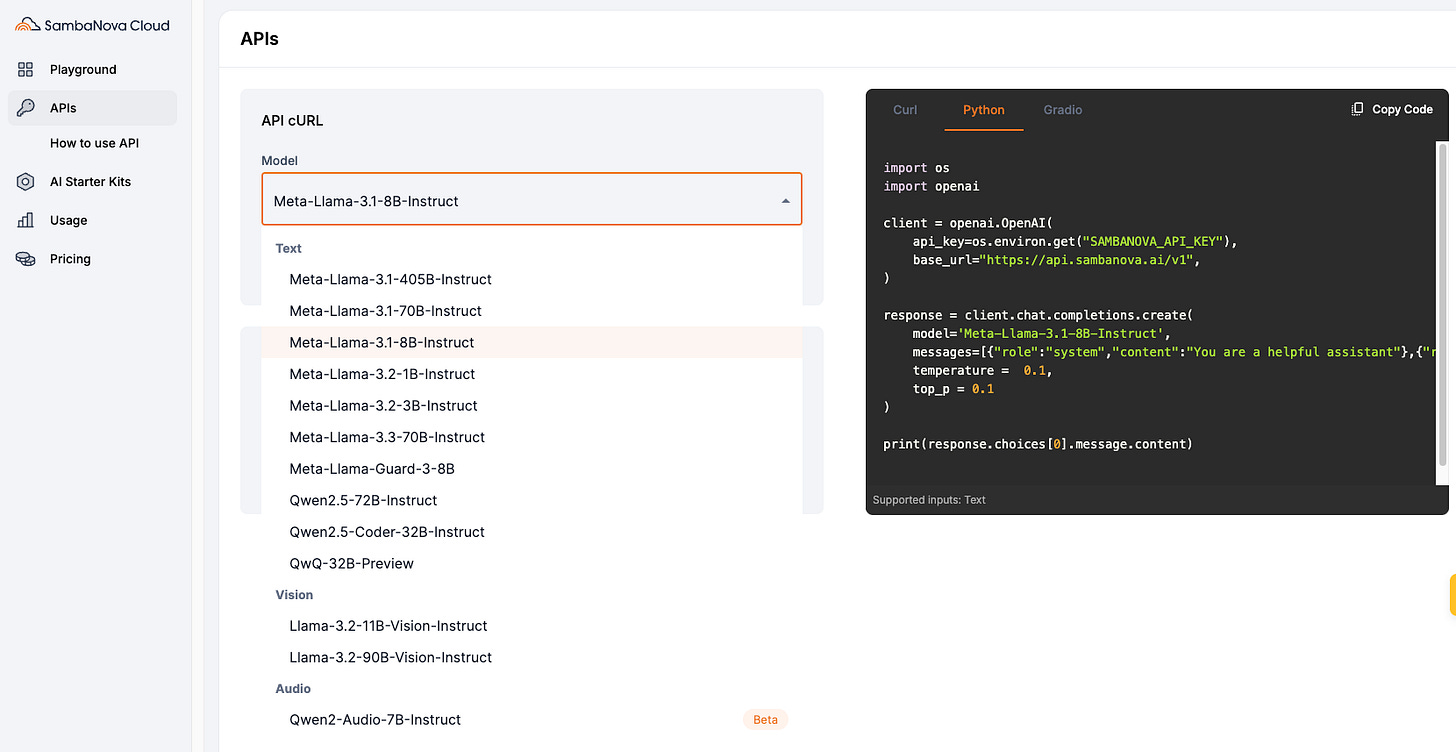

SambaNova Cloud: 10x faster alternative to GPUs for LLM Inference →

GPUs are not fully efficient for AI workloads.

In fact, GPUs were not even originally designed for AI/ML workloads.

SambaNova Systems built the world’s fastest AI inference using its specialized hardware stack (RDUs)—a 10x faster alternative to GPU.

In fact, their specialized SN40L chip can load models as big as trillions of parameters.

Check it out here: SambaNova Systems.

With SambaNova:

Llama 3.1-8B generates 1k+ tokens/s

Llama 3.1-70B generates 700+ tokens/s

Llama 3.1-405B generates 200+ tokens/s

This inference speed matters when:

you need real-time inference.

you need production readiness.

you need cost efficiency at scale.

Start using SambaNova’s fastest inference engine here →

Thanks to SambaNova for partnering with us on today's issue.

Label Smoothing for Regularization

The entire probability mass belongs to just one class in typical classification problems, and the rest are zero:

This can sometimes impact its generalization capabilities since it can excessively motivate the model to learn the true class for every sample.

Regularising with Label smoothing addresses this issue by reducing the probability mass of the true class and distributing it to other classes:

In the experiment below, I trained two neural networks on the Fashion MNIST dataset with the same weight initialization.

One without label smoothing.

Another with label smoothing.

The model with label smoothing (right) resulted in better test accuracy, i.e., better generalization.

When not to use label smoothing?

Label smoothing is recommended only if you only care about getting the final prediction correct.

But don't use it if you also care about the model’s confidence since label smoothing directs the model to become “less overconfident” in its predictions—resulting in a drop in the confidence values for every prediction:

That said, L2 regularization is another common way to regularize models. Here’s a guide that explains its probabilistic origin: The Probabilistic Origin of Regularization.

Also, we discussed 11 techniques to supercharge ML models here: 11 Powerful Techniques To Supercharge Your ML Models.

👉 Over to you: What other things need to be taken care of when using label smoothing?

Thanks for reading!

P.S. For those wanting to develop “Industry ML” expertise:

At the end of the day, all businesses care about impact. That’s it!

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have discussed several other topics (with implementations) in the past that align with such topics.

Here are some of them:

Learn sophisticated graph architectures and how to train them on graph data: A Crash Course on Graph Neural Networks – Part 1.

So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here: Bi-encoders and Cross-encoders for Sentence Pair Similarity Scoring – Part 1.

Learn techniques to run large models on small devices: Quantization: Optimize ML Models to Run Them on Tiny Hardware.

Learn how to generate prediction intervals or sets with strong statistical guarantees for increasing trust: Conformal Predictions: Build Confidence in Your ML Model’s Predictions.

Learn how to identify causal relationships and answer business questions: A Crash Course on Causality – Part 1

Learn how to scale ML model training: A Practical Guide to Scaling ML Model Training.

Learn techniques to reliably roll out new models in production: 5 Must-Know Ways to Test ML Models in Production (Implementation Included)

Learn how to build privacy-first ML systems: Federated Learning: A Critical Step Towards Privacy-Preserving Machine Learning.

Learn how to compress ML models and reduce costs: Model Compression: A Critical Step Towards Efficient Machine Learning.

All these resources will help you cultivate key skills that businesses and companies care about the most.