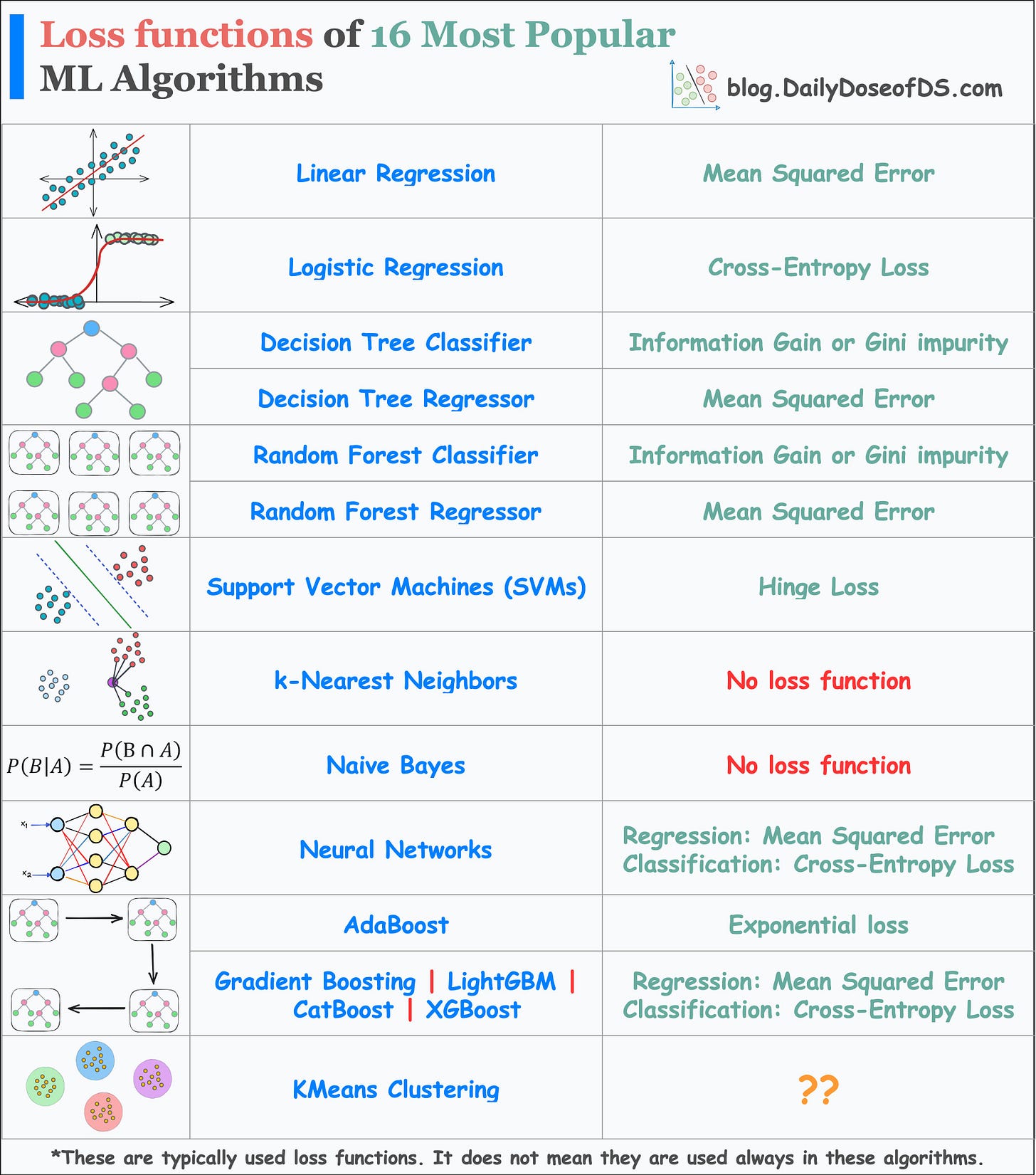

Loss Function of 16 ML Algos

An Algorithm-wise summary of loss functions.

Loss functions are a vital component of ML algorithms.

They specify the objective an algorithm should aim to optimize during its training.

In other words, loss functions explicitly tell the algorithm what it should minimize to improve its performance.

Therefore, knowing which loss functions are (typically) best suited for specific ML algorithms is extremely crucial.

The below visual depicts the most commonly used loss functions by various ML algorithms.

Linear Regression: Mean Squared Error (MSE). This can be used with and without regularization, depending on the situation.

Why MSE? We covered it here: MSE post.

Logistic regression: Cross-entropy loss or Log Loss, with and without regularization.

Why log loss? We covered its origin here: Why Do We Use log-loss to Train Logistic Regression?

Also, do you know Logistic regression can be trained without specifying a learning rate? We covered it here: Why Sklearn’s Logistic Regression Has no Learning Rate Hyperparameter?

Decision Tree and Random Forest:

Classification: Gini impurity or information gain.

Regressor: Mean Squared Error (MSE)

Further reading on Random Forest: Why Bagging is So Ridiculously Effective At Variance Reduction?

Support Vector Machines (SVMs): Hinge loss. It penalizes both wrong and right (but less confident) predictions. Best suited for creating max-margin classifiers, like in SVMs.

k-Nearest Neighbors (kNN): No loss function. kNN is a non-parametric lazy learning algorithm. It works by retrieving instances from the training data, and making predictions based on the k nearest neighbors to the test data instance.

Naive Bayes: No loss function. Can you answer why?

Neural Networks: They can use a variety of loss functions depending on the type of problem. The most common ones are:

Regression: Mean Squared Error (MSE).

Classification: Cross-Entropy Loss.

AdaBoost: Exponential loss function. AdaBoost is an ensemble learning algorithm. It combines multiple weak classifiers to form a strong classifier. In each iteration of the algorithm, AdaBoost assigns weights to the misclassified instances from the previous iteration. Next, it trains a new weak classifier and minimizes the weighted exponential loss. We covered it here in detail: A Visual and Overly Simplified Guide to The AdaBoost Algorithm.

Other Boosting Algorithms:

Regression: Mean Squared Error (MSE).

Classification: Cross-Entropy Loss.

👉 Over to you: Can you tell which loss function is used in KMeans?

Thanks for reading!

Whenever you are ready, here’s one more way I can help you:

Every week, I publish 1-2 in-depth deep dives (typically 20+ mins long). Here are some of the latest ones that you will surely like:

[FREE] A Beginner-friendly and Comprehensive Deep Dive on Vector Databases.

A Detailed and Beginner-Friendly Introduction to PyTorch Lightning: The Supercharged PyTorch

You Are Probably Building Inconsistent Classification Models Without Even Realizing

PyTorch Models Are Not Deployment-Friendly! Supercharge Them With TorchScript.

Federated Learning: A Critical Step Towards Privacy-Preserving Machine Learning.

You Cannot Build Large Data Projects Until You Learn Data Version Control!

To receive all full articles and support the Daily Dose of Data Science, consider subscribing:

👉 If you love reading this newsletter, feel free to share it with friends!

👉 Tell the world what makes this newsletter special for you by leaving a review here :)