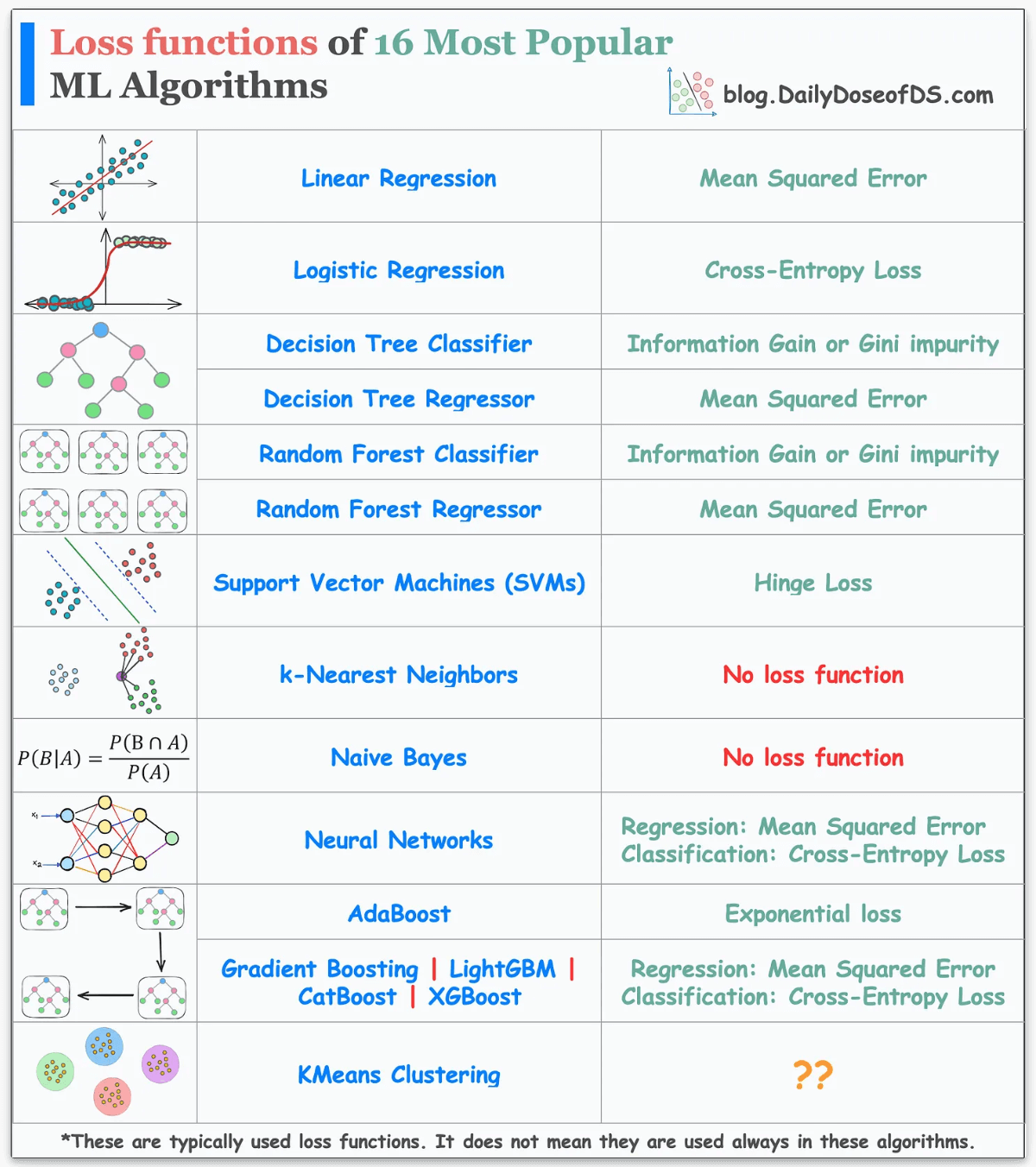

Loss Functions of 16 ML Algos

A single-frame summary.

I prepared this visual, which depicts the loss functions most commonly used by various ML algorithms.

Since loss functions are a vital component of ML algorithms, it is crucial to know which loss functions are (typically) best suited for specific ML algorithms.

1) Linear Regression: Mean Squared Error (MSE). This can be used with and without regularization, depending on the situation.
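As a rough illustration of the MSE objective with and without regularization, here is a minimal sketch in plain Python. The function name `mse_loss`, the `l2` strength parameter, and the toy numbers are assumptions made for this example, and the L2 (ridge) penalty is just one common choice of regularizer.

```python
def mse_loss(y_true, y_pred, weights=None, l2=0.0):
    """Mean squared error plus an optional L2 (ridge) penalty on the weights."""
    n = len(y_true)
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n
    # The penalty is only added when weights are supplied (assumed convention).
    penalty = l2 * sum(w ** 2 for w in weights) if weights else 0.0
    return mse + penalty


# Hypothetical toy values, only for illustration.
y_true = [3.0, 5.0, 7.0]
y_pred = [2.0, 5.0, 9.0]

print(mse_loss(y_true, y_pred))                              # without regularization
print(mse_loss(y_true, y_pred, weights=[2.0, 1.0], l2=0.1))  # with an L2 penalty
```

The regularized value is always at least as large as the plain MSE, since the penalty term is non-negative.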

2) Logistic regression: Cross-entropy loss or Log Loss, with and without regularization.

Why log loss? We covered its origin here: Why Do We Use log-loss to Train Logistic Regression?

Also, did you know that logistic regression can be trained without specifying a learning rate? We covered it here: Why Sklearn’s Logistic Regression Has no Learning Rate Hyperparameter?
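The log loss mentioned for logistic regression can be sketched as follows. This is a minimal, unregularized binary version in plain Python. The name `log_loss`, the clipping constant `eps`, and the sample probabilities are assumptions for this example.

```python
import math


def log_loss(y_true, p_pred, eps=1e-12):
    """Binary cross-entropy averaged over samples; labels are 0 or 1."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


# Hypothetical predictions: confident-and-right vs. confident-and-wrong.
print(log_loss([1, 0], [0.9, 0.1]))  # small loss
print(log_loss([1, 0], [0.1, 0.9]))  # much larger loss
```

Confident but wrong probabilities are penalized far more heavily than uncertain ones, which is the behavior one usually wants from a probabilistic classifier.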

3) Decision Tree and Random Forest:

Classification: Gini impurity or entropy (splits are chosen to minimize impurity, i.e., to maximize information gain).

Regression: Mean Squared Error (MSE).

Further reading on Random Forest: Why Bagging is So Ridiculously Effective At Variance Reduction?
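To make the tree-splitting criteria above concrete, here is a small sketch of Gini impurity, entropy, and the information gain of one candidate split. The helper names and the toy labels are assumptions for this example. Real tree implementations evaluate many candidate splits and pick the best one.

```python
import math
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def entropy(labels):
    """Shannon entropy (in bits) of the class distribution."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(parent, left, right):
    """Entropy of the parent minus the size-weighted entropy of the children."""
    n = len(parent)
    weighted = len(left) / n * entropy(left) + len(right) / n * entropy(right)
    return entropy(parent) - weighted


# Hypothetical node with two balanced classes, split perfectly.
parent = ["a", "a", "b", "b"]
print(gini(parent), entropy(parent))
print(information_gain(parent, ["a", "a"], ["b", "b"]))
```

A split that separates the classes perfectly recovers all of the parent's entropy as information gain.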

4) Support Vector Machines (SVMs): Hinge loss. It penalizes wrong predictions as well as right ones that are less confident (i.e., that fall inside the margin). This makes it well suited for creating max-margin classifiers, like SVMs.
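A quick sketch of the hinge loss shows this behavior. It assumes labels in {-1, +1} and raw decision scores (not probabilities). The function name and the sample scores are made up for this example.

```python
def hinge_loss(y_true, scores):
    """Per-sample hinge loss max(0, 1 - y * score) for labels in {-1, +1}."""
    return [max(0.0, 1.0 - y * s) for y, s in zip(y_true, scores)]


# All three samples have true label +1 (hypothetical scores):
#   2.0  -> right and confident (outside the margin)
#   0.5  -> right but less confident (inside the margin)
#  -1.0  -> wrong
print(hinge_loss([1, 1, 1], [2.0, 0.5, -1.0]))  # [0.0, 0.5, 2.0]
```

Only the confident, correct prediction gets zero loss. The correct but low-margin prediction is still penalized, just less than the wrong one.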

5) k-Nearest Neighbors (kNN): No loss function. kNN is a non-parametric lazy learning algorithm. It works by retrieving instances from the training data, and making predictions based on the k nearest neighbors to the test data instance.
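The "no training, no loss" nature of kNN is easy to see in code. The sketch below is a minimal classifier using Euclidean distance and majority voting. The name `knn_predict` and the toy points are assumptions for this example, and other distance metrics or voting schemes are equally valid.

```python
import math
from collections import Counter


def knn_predict(X_train, y_train, x, k=3):
    """Predict the majority label among the k training points closest to x."""
    # No fitting step: the training data is simply stored and searched.
    neighbors = sorted(zip(X_train, y_train), key=lambda pair: math.dist(pair[0], x))
    top_k_labels = [label for _, label in neighbors[:k]]
    return Counter(top_k_labels).most_common(1)[0][0]


# Hypothetical 2-D data with two well-separated clusters.
X_train = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
y_train = ["a", "a", "a", "b", "b", "b"]

print(knn_predict(X_train, y_train, (0.5, 0.5)))
print(knn_predict(X_train, y_train, (5.5, 5.5)))
```

Nothing is optimized here: all the work happens at prediction time, which is why kNN is called a lazy learner.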

6) Naive Bayes: No loss function. Can you answer why?

7) Neural Networks: They can use a variety of loss functions depending on the type of problem. The most common ones are:

Regression: Mean Squared Error (MSE).

Classification: Cross-Entropy Loss.
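For multi-class classification, the cross-entropy loss is usually applied to softmax outputs. Here is a minimal sketch for a single sample. The function names and the example logits are assumptions, and deep learning frameworks provide their own batched, numerically fused versions.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target_index):
    """Negative log-probability assigned to the true class."""
    return -math.log(softmax(logits)[target_index])


# Hypothetical logits for a 3-class problem.
logits = [2.0, 0.5, -1.0]
print(cross_entropy(logits, 0))  # true class has the highest score: low loss
print(cross_entropy(logits, 2))  # true class has the lowest score: high loss
```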

8) AdaBoost: Exponential loss function. AdaBoost is an ensemble learning algorithm. It combines multiple weak classifiers to form a strong classifier. In each iteration of the algorithm, AdaBoost increases the weights of the instances misclassified in the previous iteration. Next, it trains a new weak classifier that minimizes the weighted exponential loss.
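The reweighting step described above can be sketched for one round of the standard discrete AdaBoost formulation with labels in {-1, +1}. The function name `adaboost_round` and the toy predictions are assumptions for this example, and the sketch assumes the weighted error is strictly between 0 and 1.

```python
import math


def adaboost_round(y_true, y_pred, weights):
    """One AdaBoost round: return the classifier weight and updated sample weights."""
    # Weighted error of the current weak classifier (assumed to be in (0, 1)).
    err = sum(w for w, y, p in zip(weights, y_true, y_pred) if y != p) / sum(weights)
    alpha = 0.5 * math.log((1.0 - err) / err)
    # exp(-alpha * y * p) is the exponential loss factor: it grows the weight
    # of misclassified samples (y * p = -1) and shrinks the rest (y * p = +1).
    new_weights = [w * math.exp(-alpha * y * p) for w, y, p in zip(weights, y_true, y_pred)]
    total = sum(new_weights)
    return alpha, [w / total for w in new_weights]


# Hypothetical round: the weak classifier gets the second sample wrong.
y_true = [1, 1, -1, -1]
y_pred = [1, -1, -1, -1]
alpha, weights = adaboost_round(y_true, y_pred, [0.25] * 4)
print(alpha, weights)
```

After the update, the single misclassified sample carries half of the total weight, so the next weak classifier is pushed to get it right.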

9) Other Boosting Algorithms:

Regression: Mean Squared Error (MSE).

Classification: Cross-Entropy Loss.
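In standard gradient boosting, the chosen loss matters because each new tree is fit to the negative gradient of that loss with respect to the current predictions. The sketch below computes these targets for the two losses listed above. The function names are assumptions for this example, the MSE is written with a conventional factor of 1/2, and libraries such as XGBoost additionally use second-order information that is not shown here.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def negative_gradient_mse(y_true, raw_pred):
    """For loss 0.5 * (y - f)^2, the negative gradient is the residual y - f."""
    return [y - f for y, f in zip(y_true, raw_pred)]


def negative_gradient_log_loss(y_true, raw_pred):
    """For log loss with labels in {0, 1} and f as log-odds: y - sigmoid(f)."""
    return [y - sigmoid(f) for y, f in zip(y_true, raw_pred)]


# Hypothetical current predictions of the ensemble.
print(negative_gradient_mse([3.0, 1.0], [2.5, 2.0]))   # [0.5, -1.0]
print(negative_gradient_log_loss([1, 0], [0.0, 0.0]))  # [0.5, -0.5]
```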

More about XGBoost below 👇

Formulating and Implementing XGBoost From Scratch

If you consider the last decade (or 12-13 years) in ML, neural networks have dominated the narrative in most discussions.

In contrast, tree-based methods tend to be perceived as more straightforward, and as a result, they don't always receive the same level of admiration.

However, in practice, tree-based methods frequently outperform neural networks, particularly in structured data tasks.

This is well known among Kaggle competitors, for whom XGBoost has become a tool of choice in top-performing submissions.

With XGBoost, one typically spends a fraction of the time they would otherwise need with models like linear/logistic regression, SVMs, etc., to reach the same performance.

Learn about its internal details by formulating and implementing it from scratch here →

P.S. For those wanting to develop “Industry ML” expertise:

At the end of the day, all businesses care about impact. That’s it!

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have discussed several other topics (with implementations) in the past that align with these goals.

Here are some of them:

Learn sophisticated graph architectures and how to train them on graph data: A Crash Course on Graph Neural Networks – Part 1.

So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here: Bi-encoders and Cross-encoders for Sentence Pair Similarity Scoring – Part 1.

Learn techniques to run large models on small devices: Quantization: Optimize ML Models to Run Them on Tiny Hardware.

Learn how to generate prediction intervals or sets with strong statistical guarantees for increasing trust: Conformal Predictions: Build Confidence in Your ML Model’s Predictions.

Learn how to identify causal relationships and answer business questions: A Crash Course on Causality – Part 1.

Learn how to scale ML model training: A Practical Guide to Scaling ML Model Training.

Learn techniques to reliably roll out new models in production: 5 Must-Know Ways to Test ML Models in Production (Implementation Included).

Learn how to build privacy-first ML systems: Federated Learning: A Critical Step Towards Privacy-Preserving Machine Learning.

Learn how to compress ML models and reduce costs: Model Compression: A Critical Step Towards Efficient Machine Learning.

All these resources will help you cultivate key skills that businesses and companies care about the most.