MCP Moment for Reinforcement Learning

...shared with hands-on code.

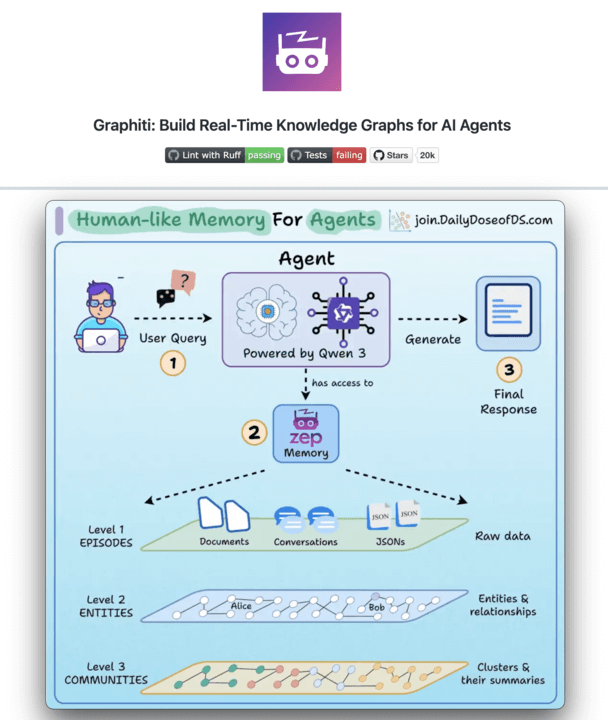

Graphiti MCP v1.0: Local Knowledge Graph Memory for Agents

Graphiti MCP is an open-source (20k+ stars) temporal knowledge graph that gives AI agents persistent, relationship-aware memory.

Unlike naive RAG, it tracks how information evolves over time, building a living memory across all your conversations and tools.
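To make "tracks how information evolves over time" concrete, here is a toy sketch of the general idea behind temporal memory. Each fact carries a validity window, and a contradicting fact closes the old window instead of overwriting it, so you can still ask what was true at an earlier date. The `Fact` and `TemporalMemory` names are hypothetical. This is not Graphiti's API or its implementation, only an in-memory illustration of the concept.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Fact:
    """A relationship edge with a validity window (hypothetical toy schema)."""
    subject: str
    relation: str
    obj: str
    valid_at: datetime
    invalid_at: Optional[datetime] = None  # None means "still true"


class TemporalMemory:
    """Toy temporal memory: new facts supersede old ones instead of deleting them."""

    def __init__(self) -> None:
        self.facts: list[Fact] = []

    def add(self, subject: str, relation: str, obj: str, at: datetime) -> None:
        for f in self.facts:
            if f.subject == subject and f.relation == relation and f.invalid_at is None:
                if f.obj == obj:
                    return  # identical fact is already current; nothing to do
                f.invalid_at = at  # close the validity window of the contradicted fact
        self.facts.append(Fact(subject, relation, obj, valid_at=at))

    def as_of(self, subject: str, relation: str, when: datetime) -> Optional[str]:
        # Return the object that was true at time `when`, if any.
        for f in self.facts:
            if (f.subject == subject and f.relation == relation
                    and f.valid_at <= when
                    and (f.invalid_at is None or when < f.invalid_at)):
                return f.obj
        return None


mem = TemporalMemory()
mem.add("alice", "prefers_editor", "vim", datetime(2024, 1, 1))
mem.add("alice", "prefers_editor", "vscode", datetime(2024, 6, 1))
print(mem.as_of("alice", "prefers_editor", datetime(2024, 3, 1)))
print(mem.as_of("alice", "prefers_editor", datetime(2024, 9, 1)))
```

A naive vector store would simply hold both "vim" and "vscode" chunks with no notion of which one is current; the validity window is what lets a temporal memory answer both "now" and "back then" questions.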

What’s new in v1.0:

Multi-provider support: LLMs from OpenAI, Anthropic, Gemini, Groq, and Azure; FalkorDB or Neo4j as the graph database

Default entity ontology: 9 pre-configured entity types (Preference, Requirement, Procedure, Location, Event, Organization, Document, Topic, Object)

One-command deployment: All-in-one Docker image or connect to existing databases

MCP Moment for Reinforcement Learning

The hardest part of reinforcement learning isn’t training.

It’s managing the environment: the virtual world in which your agent learns by trial and error and from which it receives rewards.

With no standard way to build these worlds, each project starts from scratch with new APIs, new rules, and new feedback loops.

The result: agents that can’t transfer across tasks, and researchers who spend more time wiring up environments than improving agent behavior.

This is exactly the problem that PyTorch OpenEnv, a recently trending framework, solves.

Think of it as MCP, but for RL training.

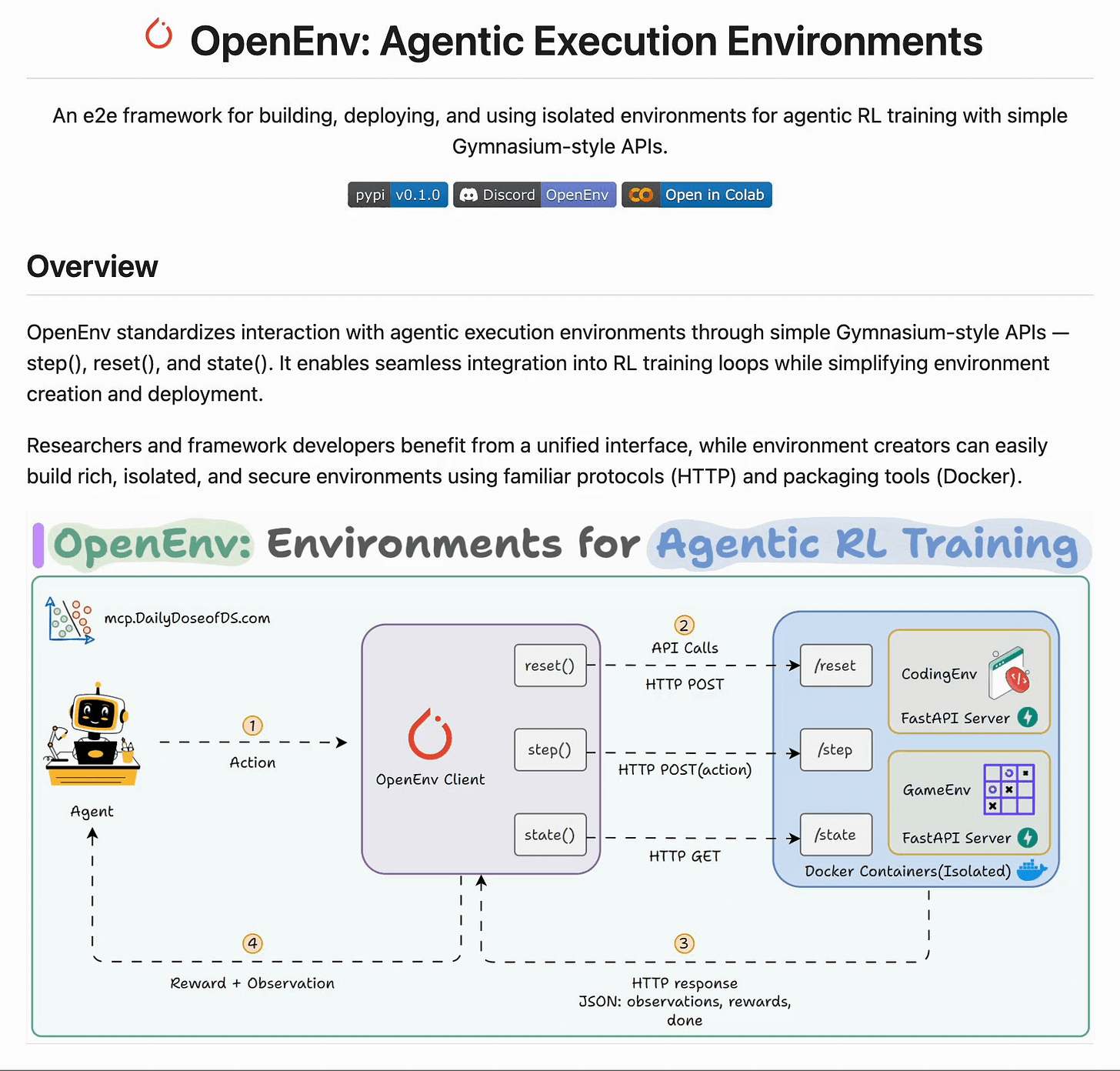

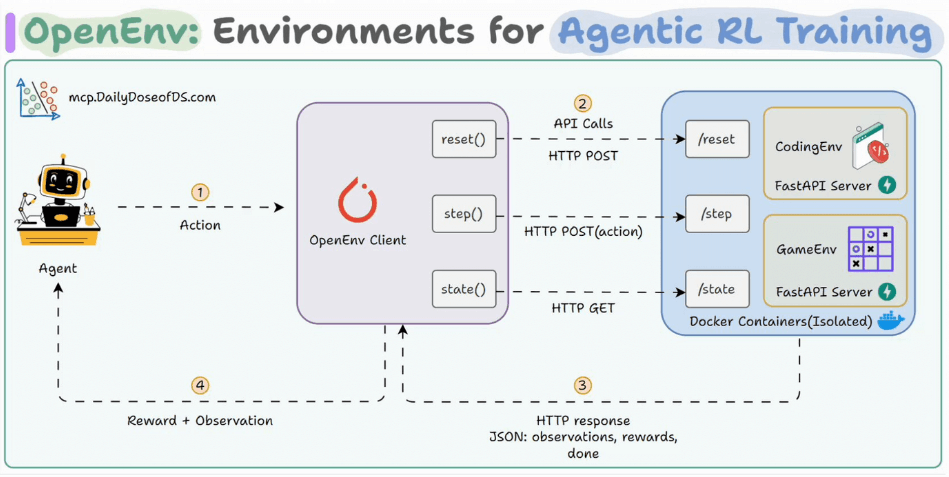

OpenEnv standardizes how agents train with reinforcement learning.

It gives every RL system a shared, modular world in the form of a containerized environment built on Gymnasium-inspired APIs that speak a common language:

reset() → start a new episode

step(action) → take an action and get feedback

state() → observe progress
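Here is a minimal sketch of what an environment exposing these three calls could look like, using typed dataclasses for actions, observations, and state. The class names (`GuessAction`, `GuessObservation`, `GuessState`, `GuessNumberEnv`) and the number-guessing game are hypothetical; OpenEnv ships its own base classes and type names, which may differ from this shape (for example, in whether `state` is a method or a property).

```python
import random
from dataclasses import dataclass
from typing import Optional


# Hypothetical typed messages exchanged between agent and environment.
@dataclass
class GuessAction:
    guess: int


@dataclass
class GuessObservation:
    hint: str  # "start", "higher", "lower", or "correct"
    reward: float
    done: bool


@dataclass
class GuessState:
    episode_id: int = 0
    step_count: int = 0


class GuessNumberEnv:
    """Toy environment exposing reset(), step(action), and state()."""

    def __init__(self, low: int = 1, high: int = 10, seed: Optional[int] = None) -> None:
        self.low, self.high = low, high
        self.rng = random.Random(seed)
        self._state = GuessState()
        self._target = low

    def reset(self) -> GuessObservation:
        # Start a new episode: pick a fresh secret number and clear the step counter.
        self._target = self.rng.randint(self.low, self.high)
        self._state = GuessState(episode_id=self._state.episode_id + 1, step_count=0)
        return GuessObservation(hint="start", reward=0.0, done=False)

    def step(self, action: GuessAction) -> GuessObservation:
        # Apply the action, update internal state, and return feedback.
        self._state.step_count += 1
        if action.guess == self._target:
            return GuessObservation(hint="correct", reward=1.0, done=True)
        hint = "higher" if action.guess < self._target else "lower"
        return GuessObservation(hint=hint, reward=-0.1, done=False)

    def state(self) -> GuessState:
        # Expose episode metadata without leaking the secret target.
        return self._state


# A binary-search "agent" driving the environment through the three calls.
env = GuessNumberEnv(seed=0)
obs = env.reset()
lo, hi = env.low, env.high
while not obs.done:
    guess = (lo + hi) // 2
    obs = env.step(GuessAction(guess))
    if obs.hint == "higher":
        lo = guess + 1
    elif obs.hint == "lower":
        hi = guess - 1
print(env.state())
```

Keeping the secret target out of `state()` is a deliberate choice: the agent should only learn about the world through the observations and rewards that `step()` returns.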

Each environment runs in isolation and communicates over HTTP, which keeps the setup simple, type-safe, and reproducible.

Here’s the flow in practice:

An agent connects through the OpenEnv client.

The client routes actions to a FastAPI environment running in Docker.

The environment processes, updates state, and returns feedback.

The loop continues until the episode ends.
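The four-step flow above can be sketched with only the Python standard library. This is a stand-in, not OpenEnv itself: the real framework serves environments with FastAPI inside Docker, whereas here an `http.server` thread plays the environment server and a small `EnvClient` plays the client. The endpoint paths (`/reset`, `/step`, `/state`), the JSON payload shapes, and the `CounterEnv` game are hypothetical choices made for illustration.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class CounterEnv:
    """Hypothetical toy environment: reach a total of 3 by sending +1 actions."""

    def __init__(self) -> None:
        self.total, self.steps = 0, 0

    def reset(self) -> dict:
        self.total, self.steps = 0, 0
        return {"observation": self.total, "reward": 0.0, "done": False}

    def step(self, action: dict) -> dict:
        self.steps += 1
        self.total += int(action["delta"])
        done = self.total >= 3
        return {"observation": self.total, "reward": 1.0 if done else 0.0, "done": done}

    def state(self) -> dict:
        return {"step_count": self.steps}


ENV = CounterEnv()


class EnvHandler(BaseHTTPRequestHandler):
    """Server side: routes HTTP requests to the environment and returns JSON."""

    def _reply(self, payload: dict) -> None:
        body = json.dumps(payload).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        data = json.loads(self.rfile.read(length) or b"{}")
        if self.path == "/reset":
            self._reply(ENV.reset())
        elif self.path == "/step":
            self._reply(ENV.step(data["action"]))
        else:
            self.send_error(404)

    def do_GET(self) -> None:
        if self.path == "/state":
            self._reply(ENV.state())
        else:
            self.send_error(404)

    def log_message(self, *args) -> None:  # silence per-request logging
        pass


class EnvClient:
    """Client side: turns reset/step/state calls into HTTP requests."""

    def __init__(self, base_url: str) -> None:
        self.base_url = base_url

    def _post(self, path: str, payload: dict) -> dict:
        req = urllib.request.Request(
            self.base_url + path,
            data=json.dumps(payload).encode(),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(req) as resp:
            return json.loads(resp.read())

    def reset(self) -> dict:
        return self._post("/reset", {})

    def step(self, action: dict) -> dict:
        return self._post("/step", {"action": action})

    def state(self) -> dict:
        with urllib.request.urlopen(self.base_url + "/state") as resp:
            return json.loads(resp.read())


server = HTTPServer(("127.0.0.1", 0), EnvHandler)  # port 0 lets the OS pick a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
client = EnvClient(f"http://127.0.0.1:{server.server_port}")

result = client.reset()
while not result["done"]:
    result = client.step({"delta": 1})
final_state = client.state()
print(result, final_state)

server.shutdown()
server.server_close()
```

Because the agent only ever talks to `EnvClient`, the environment could be moved into a separate process or container without changing the agent loop; that isolation is what the HTTP boundary buys.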

The same pattern can be replicated across problems, whether it’s a toy game, a coding environment, or any custom world you want your agents to interact with.
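To illustrate why a shared interface makes the pattern reusable, here is a sketch of a single rollout loop that runs unchanged against two very different toy environments: a one-step game and a small text task. The `Env` protocol, `StepResult`, `run_episode`, and both environment classes are hypothetical names chosen for illustration, not OpenEnv classes.

```python
from dataclasses import dataclass
from typing import Any, Callable, Protocol


@dataclass
class StepResult:
    observation: Any
    reward: float
    done: bool


class Env(Protocol):
    """The shared contract every environment satisfies (hypothetical)."""

    def reset(self) -> StepResult: ...
    def step(self, action: Any) -> StepResult: ...
    def state(self) -> dict: ...


class CoinFlipEnv:
    """One-step game: reward 1.0 for calling 'heads'."""

    def __init__(self) -> None:
        self.steps = 0

    def reset(self) -> StepResult:
        self.steps = 0
        return StepResult(observation="flip", reward=0.0, done=False)

    def step(self, action: str) -> StepResult:
        self.steps += 1
        return StepResult(observation=action, reward=1.0 if action == "heads" else 0.0, done=True)

    def state(self) -> dict:
        return {"steps": self.steps}


class ReverseStringEnv:
    """Toy text task: reward 1.0 when the action is the reversed prompt."""

    def __init__(self, prompt: str = "openenv") -> None:
        self.prompt = prompt
        self.steps = 0

    def reset(self) -> StepResult:
        self.steps = 0
        return StepResult(observation=self.prompt, reward=0.0, done=False)

    def step(self, action: str) -> StepResult:
        self.steps += 1
        solved = action == self.prompt[::-1]
        # End the episode on success or after three attempts.
        return StepResult(observation=self.prompt, reward=1.0 if solved else 0.0,
                          done=solved or self.steps >= 3)

    def state(self) -> dict:
        return {"steps": self.steps}


def run_episode(env: Env, policy: Callable[[Any], Any]) -> float:
    """Environment-agnostic rollout: works for any object matching Env."""
    result = env.reset()
    total = 0.0
    while not result.done:
        result = env.step(policy(result.observation))
        total += result.reward
    return total


print(run_episode(CoinFlipEnv(), lambda obs: "heads"))
print(run_episode(ReverseStringEnv(), lambda obs: obs[::-1]))
```

`run_episode` never inspects which environment it received; it relies only on `reset`, `step`, and the `done` flag, which is the property a common environment standard is meant to guarantee.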

Just as MCP standardized tool calling for agents, OpenEnv standardizes how agents interact with RL training environments.

And it’s 100% open-source!

Refer to the OpenEnv_Basic_to_Advanced.ipynb notebook above.

Also, here’s the link to Meta’s open-source OpenEnv GitHub repo →

Thanks for reading!

P.S. For those wanting to develop “Industry ML” expertise:

At the end of the day, all businesses care about impact. That’s it!

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have covered several other topics (with implementations) that align with these goals.

Here are some of them:

Learn everything about MCPs in this crash course with 9 parts →

Learn how to build agentic systems in a crash course with 14 parts.

Learn how to build, evaluate, and scale real-world RAG apps in this crash course.

Learn sophisticated graph architectures and how to train them on graph data.

Many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

Learn how to run large models on small devices using quantization techniques.

Learn how to use Conformal Prediction to generate prediction intervals or sets with strong statistical guarantees, increasing trust in your models.

Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

Learn how to scale and implement ML model training in this practical guide.

Learn techniques to reliably test new models in production.

Learn how to build privacy-first ML systems using Federated Learning.

Learn 6 techniques, with implementations, to compress ML models.

All these resources will help you cultivate key skills that businesses and companies care about the most.