MLE vs. EM — How Do They Differ?

A popular interview question.


MLE vs. EM

Maximum likelihood estimation (MLE) and expectation maximization (EM) are two popular techniques to determine the parameters of statistical models.

Because both apply to MANY statistical models, I have seen them come up in plenty of data science interviews as well, especially the distinction between the two.

So today, let’s understand both in detail: how they work and how they differ.

Let’s begin!

Maximum likelihood estimation (MLE)

MLE starts with a labeled dataset and aims to determine the parameters of the statistical model we are trying to fit.

The process is pretty simple and straightforward.

In MLE, we:

Start by assuming a data generation process. Simply put, this data generation process reflects our belief about the distribution of the output label (y), given the input (X).

Next, we define the likelihood of observing the data. Assuming the observations are independent, the likelihood of observing the entire dataset is the product of the likelihoods of the individual observations:

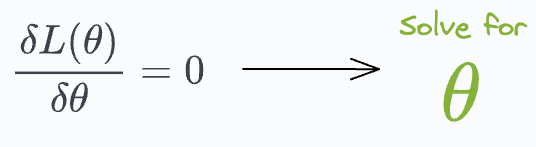

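The likelihood function above depends on the parameter values (θ). Our objective is to find the specific parameter values that maximize it. We do this as follows (in practice, we maximize the log-likelihood, which turns the product into a sum and has the same maximizer):

$$\hat{\theta} = \arg\max_{\theta} \prod_{i=1}^{n} P(y_i \mid x_i; \theta) = \arg\max_{\theta} \sum_{i=1}^{n} \log P(y_i \mid x_i; \theta)$$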

This gives us the parameter estimates under which the observed data is most likely.
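The three steps above can be made concrete with a minimal NumPy sketch. It assumes one specific data generation process (an illustrative choice, not something the post prescribes): y = Xw + ε with Gaussian noise ε ~ N(0, σ²). Under that assumption, maximizing the log-likelihood has a closed form: least squares for w, and the mean squared residual for σ². The helper names `gaussian_log_likelihood` and `fit_mle` are hypothetical.

```python
import numpy as np

def gaussian_log_likelihood(X, y, w, sigma2):
    """Log of prod_i N(y_i | x_i @ w, sigma2), computed as a sum of logs."""
    residuals = y - X @ w
    n = len(y)
    return -0.5 * n * np.log(2 * np.pi * sigma2) - 0.5 * np.sum(residuals ** 2) / sigma2

def fit_mle(X, y):
    """Closed-form MLE for the assumed model y = X @ w + Gaussian noise."""
    # Maximizing the log-likelihood over w reduces to ordinary least squares.
    w_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    # The MLE of the noise variance divides by n (not n - p).
    sigma2_hat = np.mean((y - X @ w_hat) ** 2)
    return w_hat, sigma2_hat

# Simulated data from the assumed process (made-up parameter values).
rng = np.random.default_rng(0)
n = 500
X = np.column_stack([np.ones(n), rng.normal(size=n)])  # intercept + one feature
true_w = np.array([1.0, 2.0])
y = X @ true_w + rng.normal(scale=0.5, size=n)         # noise variance 0.25

w_hat, sigma2_hat = fit_mle(X, y)
print(w_hat, sigma2_hat)
```

Working with the sum of logs rather than the raw product is the usual design choice: multiplying hundreds of small probabilities quickly underflows to zero in floating point, while the log-likelihood stays well-scaled and peaks at the same θ.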

That was pretty simple, wasn’t it?

But what do we do if we don’t have true labels?

We still want to estimate the parameters, don’t we?

MLE, as you may have guessed, will not be directly applicable. Since the true label (y) is unobserved, we cannot define a likelihood function like we did earlier.

In such cases, advanced techniques like expectation maximization are pretty helpful.
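To see why, suppose (as an illustrative assumption) the data comes from a mixture of K Gaussians with mixing weights π_k, means μ_k, and variances σ_k². Without the label, the likelihood of each point has to sum over every value the hidden label could take:

```latex
p(x_i;\theta) = \sum_{k=1}^{K} \pi_k\, \mathcal{N}(x_i \mid \mu_k, \sigma_k^2),
\qquad
\log L(\theta) = \sum_{i=1}^{n} \log \sum_{k=1}^{K} \pi_k\, \mathcal{N}(x_i \mid \mu_k, \sigma_k^2)
```

The log of a sum does not split into separate per-component terms, so setting the gradient to zero gives no closed-form solution, unlike the labeled case. EM works around this by alternating between estimating posterior probabilities for the hidden labels and maximizing a simpler expected log-likelihood.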

Expectation maximization (EM)

EM is an iterative optimization technique to estimate the parameters of statistical models. It is particularly useful when we have an unobserved (or hidden) label.

We covered a practical explanation of expectation maximization and also implemented it from scratch in Python (using only NumPy) here: Gaussian Mixture Models: The Flexible Twin of KMeans.

One example situation could be as follows:

As depicted above, we assume that the data was generated from multiple distributions (a mixture). However, the observed (incomplete) data does not contain that information.

In other words, the observed dataset does not have information about whether a specific row was generated from distribution 1 or distribution 2.

Had it contained the label (y), we could have simply used MLE.
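A minimal sketch of this setting, using made-up parameters for two 1-D Gaussian components (an illustrative assumption, not data from the post): when the component label `z` is known, MLE has a closed form and reduces to per-group sample statistics. The catch is that in the unlabeled setting only `x` is observed.

```python
import numpy as np

rng = np.random.default_rng(42)

# Illustrative generating process (made-up values): two 1-D Gaussians mixed 40/60.
n = 1000
true_weights = np.array([0.4, 0.6])
true_means = np.array([-2.0, 3.0])
true_stds = np.array([1.0, 1.5])

z = rng.choice(2, size=n, p=true_weights)              # which distribution produced each row
x = rng.normal(loc=true_means[z], scale=true_stds[z])  # the feature we actually observe

# If z were observed, the MLE would just be per-group sample statistics.
weights_mle = np.bincount(z, minlength=2) / n
means_mle = np.array([x[z == k].mean() for k in range(2)])
stds_mle = np.array([x[z == k].std() for k in range(2)])  # ddof=0, i.e. the MLE

print(weights_mle, means_mle, stds_mle)

# In the unlabeled setting only x is available: z is thrown away,
# and these per-group formulas can no longer be evaluated.
```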

EM helps us estimate the parameters for such datasets.

The core idea behind EM is as follows:

Make a guess about the initial parameters (θ).

Expectation (E) step: Compute the posterior probabilities of the unobserved label (let’s call it ‘z’) using the above parameters. Here, ‘z’ is also called a latent variable, which means hidden or unobserved. Relating it to our case, we know that the true label exists in nature, but we don’t know what it is.

Thus, we replace it with a latent variable ‘z’ and estimate its posterior probabilities using the guessed parameters (by Bayes’ rule):

$$P(z_i = k \mid x_i; \theta) = \frac{P(x_i, z_i = k; \theta)}{\sum_{j} P(x_i, z_i = j; \theta)}$$

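Given that we now have a proxy (not precise, though) for the true label, we can define an “expected likelihood” function (more precisely, the expected log-likelihood of the complete data, i.e., x and z together). We use the above posterior probabilities to do so:

$$L(\theta' \mid \theta) = \sum_{i=1}^{n} \sum_{k} P(z_i = k \mid x_i; \theta) \, \log P(x_i, z_i = k; \theta')$$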

Maximization (M) step: So now we have a likelihood function to work with. Maximizing it with respect to the parameters will give us a new estimate for the parameters (θ').

Next, we use the updated parameters (θ') to recompute the posterior probabilities we defined in the expectation step.

We will update the likelihood function (L) using the new posterior probabilities. Again, maximizing it will give us a new estimate for the parameters (θ).

And this process goes on and on until convergence.

The point is that in expectation maximization, we repeatedly iterate between the E and the M steps until the parameters converge.

At times, however, it might converge to a local maximum rather than the global one, so the result can depend on the initial guess.
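The loop described above can be sketched for a 1-D Gaussian mixture, the setting of the mixture example. This is a minimal NumPy sketch under stated assumptions, not the implementation from the linked deep dive: the model (two Gaussian components), the initialization (random data points as means, the pooled standard deviation for every component), the stopping rule, and all function names (`normal_pdf`, `log_likelihood`, `em_gmm_1d`, `em_with_restarts`) are illustrative choices. Because EM can stop at a local maximum, `em_with_restarts` runs it from several initial guesses and keeps the fit with the highest log-likelihood, a common mitigation.

```python
import numpy as np

def normal_pdf(x, mean, std):
    """Gaussian density, broadcast over data points and components."""
    return np.exp(-0.5 * ((x - mean) / std) ** 2) / (std * np.sqrt(2.0 * np.pi))

def log_likelihood(x, weights, means, stds):
    """Marginal log-likelihood: sum_i log sum_k pi_k * N(x_i | mu_k, sigma_k^2)."""
    dens = weights * normal_pdf(x[:, None], means, stds)  # shape (n, K)
    return float(np.sum(np.log(dens.sum(axis=1))))

def em_gmm_1d(x, K=2, n_iter=500, tol=1e-10, seed=0):
    """Fit a 1-D Gaussian mixture with EM from one random initial guess."""
    rng = np.random.default_rng(seed)
    # Initial guess for theta: equal weights, K distinct data points as means, pooled std.
    weights = np.full(K, 1.0 / K)
    means = rng.choice(x, size=K, replace=False)
    stds = np.full(K, x.std())
    history = [log_likelihood(x, weights, means, stds)]
    for _ in range(n_iter):
        # E step: posterior P(z_i = k | x_i; theta), a.k.a. responsibilities.
        dens = weights * normal_pdf(x[:, None], means, stds)
        resp = dens / dens.sum(axis=1, keepdims=True)
        # M step: closed-form maximizer of the expected log-likelihood for Gaussians.
        nk = resp.sum(axis=0)
        weights = nk / len(x)
        means = (resp * x[:, None]).sum(axis=0) / nk
        stds = np.sqrt((resp * (x[:, None] - means) ** 2).sum(axis=0) / nk)
        history.append(log_likelihood(x, weights, means, stds))
        # EM never decreases the likelihood; stop once the gain is negligible.
        if history[-1] - history[-2] < tol:
            break
    return weights, means, stds, history

def em_with_restarts(x, K=2, n_restarts=5):
    """Run EM from several initial guesses and keep the highest final log-likelihood."""
    fits = [em_gmm_1d(x, K=K, seed=s) for s in range(n_restarts)]
    return max(fits, key=lambda fit: fit[3][-1])

# Illustrative data: two 1-D Gaussians mixed 40/60; the labels z are thrown away.
rng = np.random.default_rng(42)
z = rng.choice(2, size=1000, p=[0.4, 0.6])
x = rng.normal(loc=np.array([-2.0, 3.0])[z], scale=np.array([1.0, 1.5])[z])

weights, means, stds, history = em_with_restarts(x)
order = np.argsort(means)  # component labels are arbitrary, so sort by mean
print(weights[order], means[order], stds[order])
```

Sorting by the fitted means is needed because EM has no notion of which component is "first". With well-separated components like these, the recovered weights, means, and standard deviations should land near the made-up generating values (0.4/0.6, -2/3, 1/1.5), and `history` should be non-decreasing from one iteration to the next.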

I prepared the following visual, which neatly summarizes the differences between MLE and EM:

MLE vs. EM is a popular question asked in many data science interviews.

While MLE is relatively easy to understand, EM is a bit more complicated.

If you are interested in practically learning about expectation maximization and programming it from scratch in Python (using only NumPy)...

...then we covered it in detail in this deep dive: Gaussian Mixture Models: The Flexible Twin of KMeans.

👉 Over to you: In the above visual summary, can you add more example models for each of MLE and EM?

For those wanting to develop “Industry ML” expertise:

All businesses care about impact.

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have discussed several other topics (with implementations) that align with “Industry ML.” Here are some of them:

Learn sophisticated graph architectures and how to train them on graph data: A Crash Course on Graph Neural Networks – Part 1

Learn techniques to run large models on small devices: Quantization: Optimize ML Models to Run Them on Tiny Hardware

Learn how to generate prediction intervals or sets with strong statistical guarantees for increasing trust: Conformal Predictions: Build Confidence in Your ML Model’s Predictions.

Learn how to identify causal relationships and answer business questions: A Crash Course on Causality – Part 1

Learn how to scale ML model training: A Practical Guide to Scaling ML Model Training.

Learn techniques to reliably roll out new models in production: 5 Must-Know Ways to Test ML Models in Production (Implementation Included)

Learn how to build privacy-first ML systems: Federated Learning: A Critical Step Towards Privacy-Preserving Machine Learning.

Learn how to compress ML models and reduce costs: Model Compression: A Critical Step Towards Efficient Machine Learning.

Being able to code is becoming a less distinctive skill day by day.

Thus, the ability to make decisions, guide strategy, and build solutions that solve real business problems and have a business impact will separate practitioners from experts.
