Model Development and Optimization for Production (With Implementation)

...covered from a system design perspective.

Part 10 of the MLOps and LLMOps crash course is now available. It continues the discussion of model compression techniques that we started in Part 9.

Read here: MLOps and LLMOps crash course Part 10 →

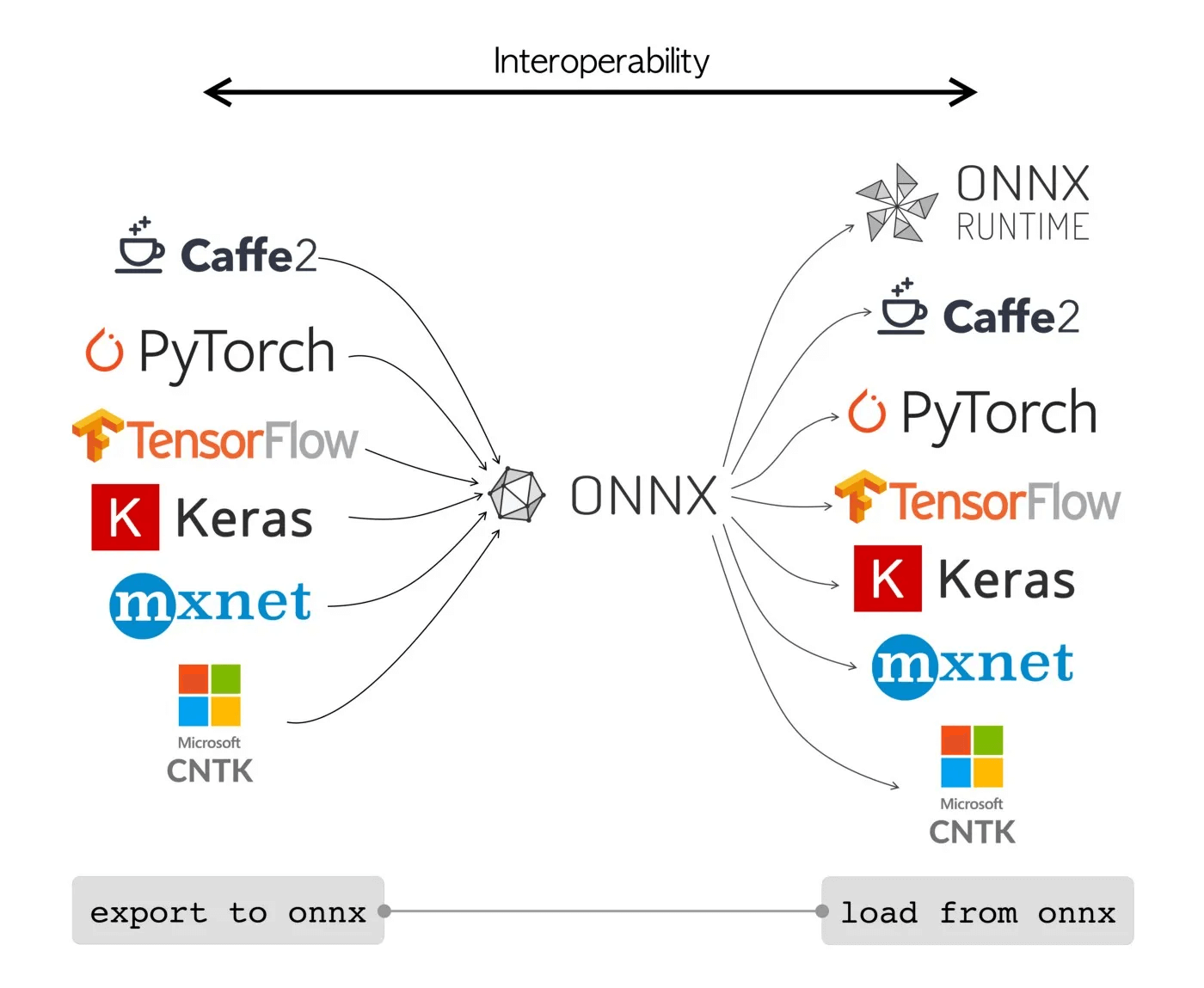

One more important thing we cover (with implementation) is ONNX (Open Neural Network Exchange), which provides a framework-agnostic intermediate representation (IR) for neural networks so that models trained in one framework (say PyTorch or TensorFlow) can be consumed, optimized, and executed in another environment or runtime.
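To make the idea of a framework-agnostic IR concrete, here is a minimal toy sketch in plain Python. It is not the ONNX file format or the ONNX API: the dictionary layout and the `export_model` and `run_model` helpers are hypothetical names invented for illustration. Only the operator names (`MatMul`, `Add`, `Relu`) mirror real ONNX operators. The point is that the "exporter" writes out a graph of operators plus weights, and a separate "runtime" executes it without knowing which framework produced it.

```python
import json

# Hypothetical "exporter" side: describe a trained model as a graph of named
# operators plus its weights. This dict layout is invented for illustration;
# it is NOT the real ONNX serialization format.
def export_model(weights, bias):
    graph = {
        "inputs": ["x"],
        "outputs": ["y"],
        "initializers": {"W": weights, "b": bias},
        "nodes": [
            {"op": "MatMul", "inputs": ["x", "W"], "output": "h"},
            {"op": "Add", "inputs": ["h", "b"], "output": "z"},
            {"op": "Relu", "inputs": ["z"], "output": "y"},
        ],
    }
    return json.dumps(graph)

# Hypothetical "runtime" side: it knows nothing about the training framework,
# only how to execute each operator type that appears in the graph.
def matmul(x, w):
    # x is a vector of length n, w is an n x m matrix; returns a length-m vector.
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]

OPS = {
    "MatMul": matmul,
    "Add": lambda a, b: [ai + bi for ai, bi in zip(a, b)],
    "Relu": lambda a: [max(0.0, ai) for ai in a],
}

def run_model(serialized, feeds):
    graph = json.loads(serialized)
    values = dict(graph["initializers"])  # start with the stored weights
    values.update(feeds)                  # add the caller's input tensors
    for node in graph["nodes"]:           # nodes are stored in execution order
        args = [values[name] for name in node["inputs"]]
        values[node["output"]] = OPS[node["op"]](*args)
    return [values[name] for name in graph["outputs"]]

serialized = export_model(weights=[[1.0, -1.0], [0.5, 2.0]], bias=[0.0, -4.0])
result = run_model(serialized, {"x": [2.0, 1.0]})
print(result)  # [[2.5, 0.0]]
```

In this toy setup, swapping the runtime for a faster one only requires that it understands the same operator set, which is roughly the contract ONNX standardizes between training frameworks and inference runtimes.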

We'll cover:

- Knowledge distillation
- Low-rank factorization
- Quantization
- ONNX

Just like all our past series on MCP, RAG, and AI Agents, this series is both foundational and implementation-heavy, walking you through everything that a real-world ML system entails:

- Part 3 covered reproducibility and versioning for ML systems →
- Part 4 also covered reproducibility and versioning for ML systems →
- Part 7 covered Spark, and orchestration + workflow management →
- Part 8 covered the modeling phase of the MLOps lifecycle from a system perspective →
- Part 9 covered fine-tuning and model compression/optimization →

This MLOps and LLMOps crash course provides thorough explanations and the systems-level thinking needed to build AI models for production settings.

Just like the MCP crash course, each chapter clearly explains the necessary concepts and provides examples, diagrams, and implementations.

Thanks for reading!