Prompting vs. RAG vs. Finetuning

Which one is best for you?

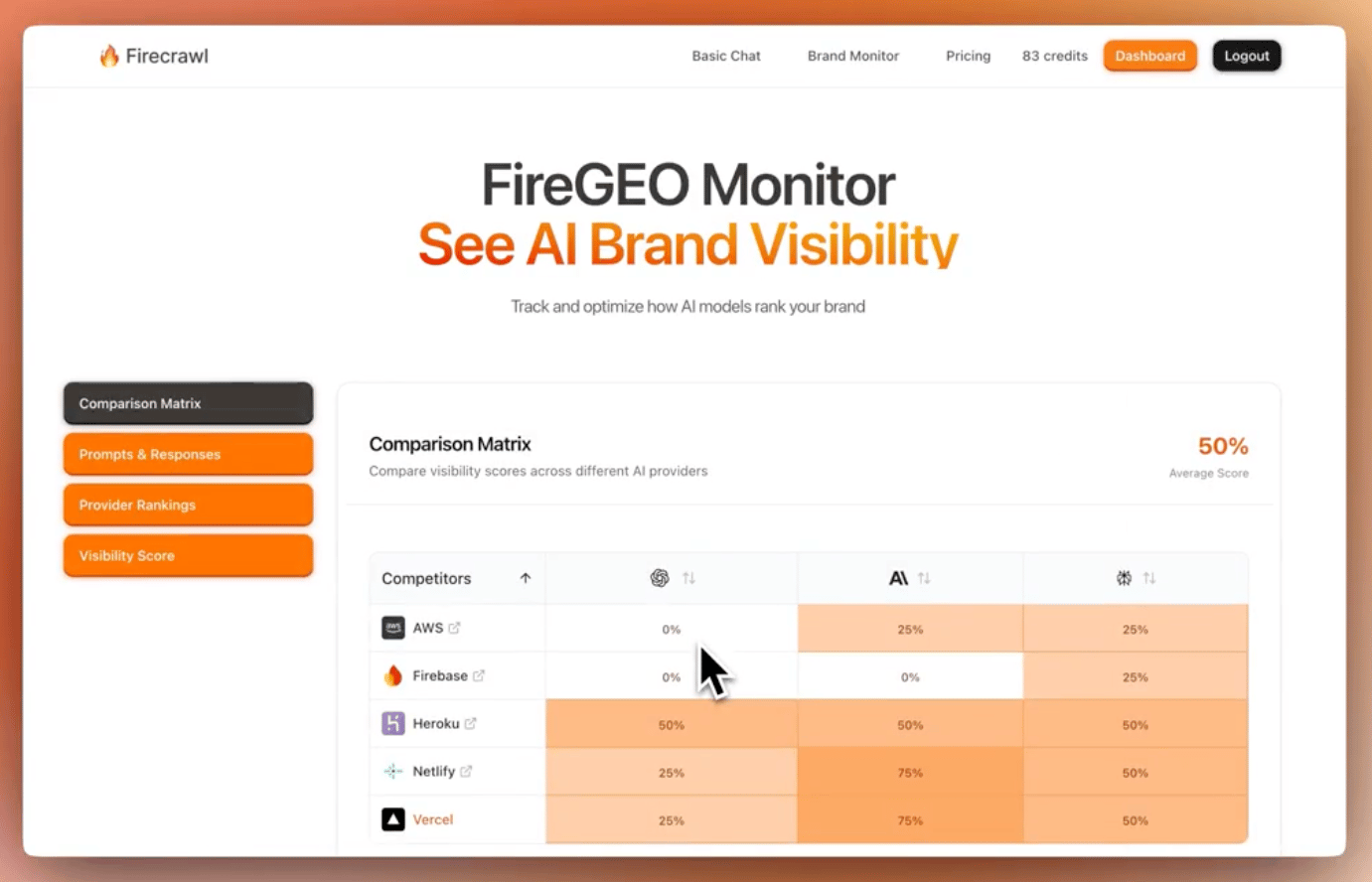

FireGEO: An open-source Semrush for AI

Firecrawl open-sourced FireGEO, an app that lets you monitor your website's presence on the leading AI search platforms and compare it to all your competitors.

Thanks to Firecrawl for partnering today!

Prompting vs. RAG vs. Finetuning?

If you are building real-world LLM-based apps, you are unlikely to get good results from the model out of the box. To keep it useful for your task, you need one of the following:

Prompt engineering

Fine-tuning

RAG

Or a hybrid approach (RAG + fine-tuning)

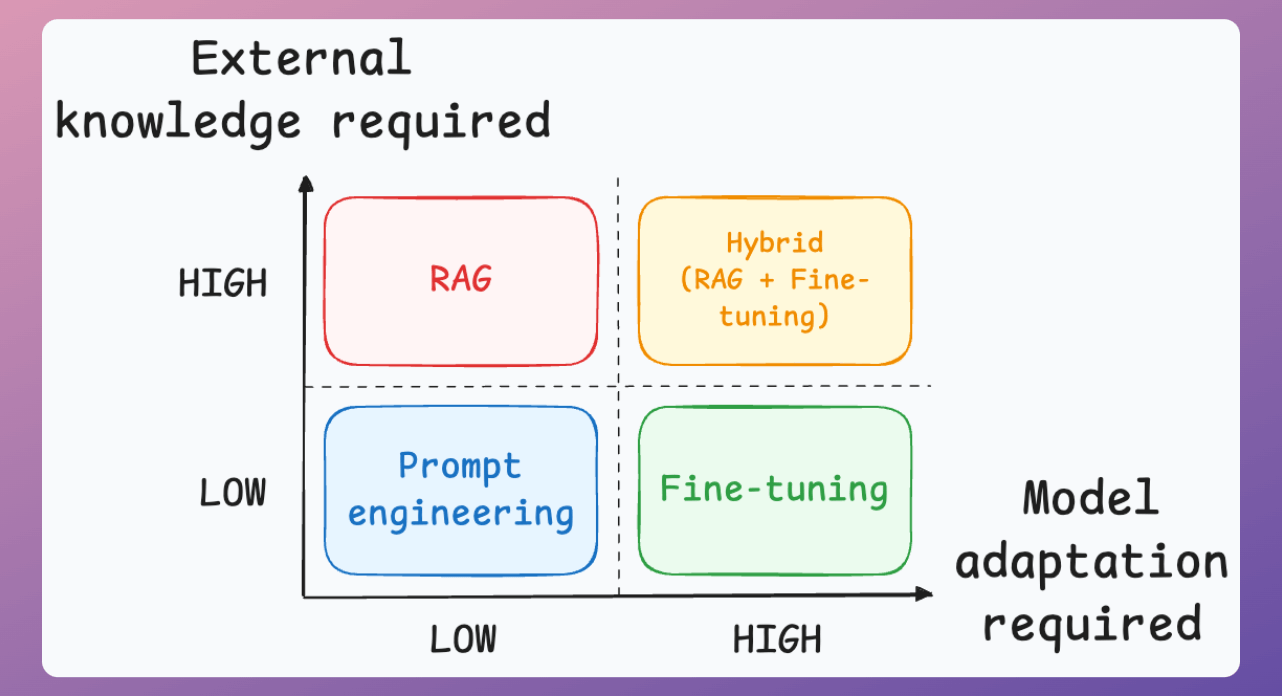

The following visual will help you decide which one is best for you:

Two important parameters guide this decision:

The amount of external knowledge required for your task.

The amount of adaptation you need. Adaptation, in this case, means changing the behavior of the model, its vocabulary, writing style, etc.

For instance, an LLM might find it challenging to summarize the transcripts of company meetings because speakers might be using some internal vocabulary in their discussions.

So here's the simple takeaway:

Use RAG to generate outputs based on a custom knowledge base when the LLM's vocabulary and writing style can stay the same.

Use fine-tuning when you need to change the model's behavior (its vocabulary, writing style, etc.) rather than its knowledge.

Prompt engineering is sufficient if you don't have a custom knowledge base and don't want to change the behavior.

And finally, if your application demands both a custom knowledge base and a change in the model's behavior, use a hybrid (RAG + fine-tuning) approach.
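The takeaway above can be read as a two-question decision rule. Here is a minimal sketch in Python, assuming both parameters are reduced to a simple yes/no. The names `Approach` and `choose_approach` are hypothetical and only for illustration; in practice both parameters are matters of degree, so treat this as a starting point rather than a definitive rule.

```python
from enum import Enum


class Approach(Enum):
    """The four options discussed in this post (hypothetical naming)."""
    PROMPT_ENGINEERING = "prompt engineering"
    RAG = "RAG"
    FINE_TUNING = "fine-tuning"
    HYBRID = "hybrid (RAG + fine-tuning)"


def choose_approach(needs_external_knowledge: bool, needs_adaptation: bool) -> Approach:
    """Map the two parameters from the post to a suggested approach.

    Assumption: each parameter is simplified to a boolean.
    """
    if needs_external_knowledge and needs_adaptation:
        # Custom knowledge base AND a change in behavior.
        return Approach.HYBRID
    if needs_external_knowledge:
        # Custom knowledge base, behavior stays the same.
        return Approach.RAG
    if needs_adaptation:
        # Change behavior (vocabulary, style), no custom knowledge base.
        return Approach.FINE_TUNING
    # Neither is needed.
    return Approach.PROMPT_ENGINEERING


if __name__ == "__main__":
    # Example: adapting to internal vocabulary, with no knowledge base.
    print(choose_approach(needs_external_knowledge=False, needs_adaptation=True).value)
```

For the meeting-transcript example above, where the main gap is the internal vocabulary rather than missing facts, this sketch would suggest fine-tuning.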

That's it!

If you want to dive into building LLM apps, our full RAG crash course covers RAG from the basics to advanced topics.

👉 Over to you: How do you decide between prompting, RAG, and fine-tuning?

Thanks for reading!

P.S. For those wanting to develop “Industry ML” expertise:

At the end of the day, all businesses care about impact. That’s it!

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have covered several other topics (with implementations) that align with these goals.

Here are some of them:

Learn how to build agentic systems in this 14-part crash course.

Learn how to build real-world RAG apps and evaluate and scale them in this crash course.

Learn sophisticated graph architectures and how to train them on graph data.

So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

Learn how to run large models on small devices using Quantization techniques.

Learn how to generate prediction intervals or sets with strong statistical guarantees, and so increase trust in your models, using conformal prediction.

Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

Learn how to scale and implement ML model training in this practical guide.

Learn techniques to reliably test new models in production.

Learn how to build privacy-first ML systems using Federated Learning.

Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate the key skills that businesses care about the most.