RAG vs. CAG, Explained Visually!

...with must-know design considerations.

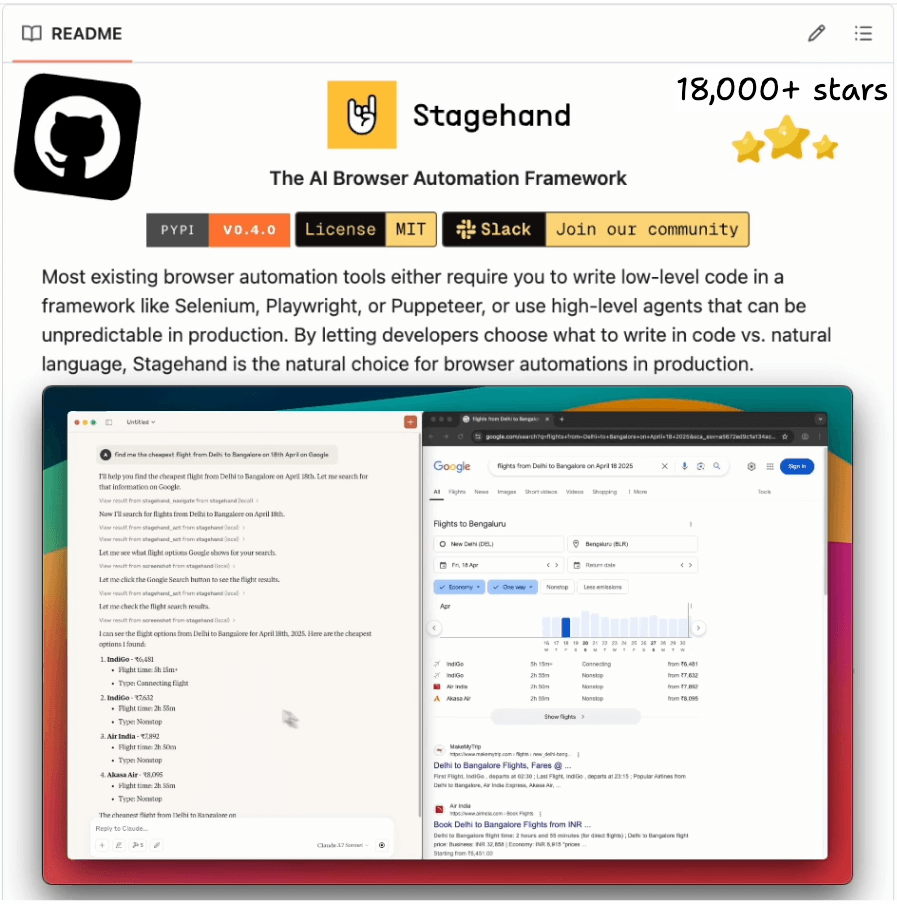

A production-grade browser automation framework for Agents (open-source)!

Typical browser automation tools like Selenium, Playwright, or Puppeteer require you to hard-code your automations.

This makes them brittle since one change in the website can disrupt the entire automation.

On the other hand, high-level Agents like OpenAI Operator can be unpredictable in production.

Stagehand is an open-source framework that bridges the gap between:

brittle traditional automation like Playwright, Selenium, etc., and

unpredictable full-agent solutions like OpenAI Operator.

Key features:

Use AI when you want to navigate unfamiliar pages, and use code (Playwright) when you know exactly what you want to do.

Preview AI actions before running them, and cache repeatable actions to save tokens.

Compatible with SOTA computer use models with just one line of code.

Available as both Python and TypeScript SDKs.

Stagehand also has an open-source MCP server.

You can find the GitHub repo here →

RAG vs. CAG, Explained Visually!

RAG changed how we build knowledge-grounded systems, but it still has a weakness.

Every time a query comes in, the system often re-fetches the same context from the vector DB and the model re-processes it, which can be expensive, redundant, and slow.

Cache-Augmented Generation (CAG) fixes this.

It lets the model “remember” stable information by caching it directly in the model’s key-value (KV) cache.

And you can take this one step further by fusing RAG and CAG as depicted below:

Here’s how it works in simple terms:

In a regular RAG setup, your query goes to the vector database, retrieves relevant chunks, and feeds them to the LLM.

But in RAG + CAG, you divide your knowledge into two layers.

The static, rarely changing data, like company policies or reference guides, gets cached once inside the model’s KV cache.

The dynamic, frequently updated data, like recent customer interactions or live documents, continues to be fetched via retrieval.

This way, the model doesn’t have to reprocess the same static information every time.

It reads that information straight from the cache and supplements it with whatever’s new via retrieval, which speeds up inference.
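To make the two layers concrete, here is a minimal sketch in plain Python. It is not a real KV cache: the `HybridPipeline` class, its toy keyword retriever, and the `prefix_builds` counter are all hypothetical stand-ins, used only to show that the static layer is prepared once while the dynamic layer is looked up on every query.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class HybridPipeline:
    static_docs: List[str]    # "cold" knowledge, prepared once
    dynamic_docs: List[str]   # "hot" knowledge, retrieved per query
    prefix_builds: int = 0    # how many times the static layer was processed
    _cached_prefix: Optional[str] = field(default=None, repr=False)

    def _static_prefix(self) -> str:
        # Stand-in for filling the KV cache: build once, reuse afterwards.
        if self._cached_prefix is None:
            self._cached_prefix = "\n".join(self.static_docs)
            self.prefix_builds += 1
        return self._cached_prefix

    def _retrieve(self, query: str, k: int = 2) -> List[str]:
        # Toy keyword-overlap retriever standing in for a vector DB lookup.
        terms = set(query.lower().split())
        scored = [
            (len(terms & set(doc.lower().split())), doc)
            for doc in self.dynamic_docs
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [doc for score, doc in scored[:k] if score > 0]

    def build_prompt(self, query: str) -> str:
        # Cached static layer first, freshly retrieved chunks second.
        retrieved = "\n".join(self._retrieve(query))
        return f"{self._static_prefix()}\n---\n{retrieved}\n---\nQuestion: {query}"


pipeline = HybridPipeline(
    static_docs=["Refund policy: 30 days."],
    dynamic_docs=[
        "Ticket 1: customer asked about refund status",
        "Ticket 2: login issue on mobile",
    ],
)
first = pipeline.build_prompt("refund status")
second = pipeline.build_prompt("login issue")
```

After two queries, `pipeline.prefix_builds` is still 1, while each prompt carries a different retrieved ticket. In a real deployment, the reuse would come from the serving stack or the provider's prompt cache rather than from a Python attribute.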

The key here is to be selective about what you cache.

You should only include stable, high-value knowledge that doesn’t change often.

If you cache everything, you’ll hit context limits, so separating “cold” (cacheable) and “hot” (retrievable) data is what keeps this system reliable.
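One way to think about this split is as a small budgeting problem. The sketch below is an assumption-heavy illustration, not a prescribed method: the `Doc` fields, the `value` score, the update-frequency threshold, and the greedy value-per-token ordering are all hypothetical choices you would tune for your own data.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Doc:
    name: str
    tokens: int               # size of the document in tokens (must be > 0)
    updates_per_month: float  # how often the content changes
    value: float              # hypothetical usefulness score


def partition(
    docs: List[Doc],
    cache_budget_tokens: int,
    max_updates_per_month: float = 1.0,
) -> Tuple[List[Doc], List[Doc]]:
    # Only stable documents are candidates for the cache.
    stable = [d for d in docs if d.updates_per_month <= max_updates_per_month]
    # Greedy choice: prefer the most value per cached token.
    stable.sort(key=lambda d: d.value / d.tokens, reverse=True)

    cold: List[Doc] = []
    used = 0
    for doc in stable:
        if used + doc.tokens <= cache_budget_tokens:
            cold.append(doc)
            used += doc.tokens

    # Everything that was not cached stays behind retrieval.
    cold_names = {d.name for d in cold}
    hot = [d for d in docs if d.name not in cold_names]
    return cold, hot


docs = [
    Doc("policies", 4000, 0.1, 9.0),
    Doc("reference_guide", 6000, 0.5, 8.0),
    Doc("support_tickets", 3000, 200.0, 7.0),
    Doc("archive", 9000, 0.0, 1.0),
]
cold, hot = partition(docs, cache_budget_tokens=10_000)
```

Here the policies and the reference guide fill the 10,000-token budget, so they become the cold layer. The support tickets change too often to cache, and the archive is stable but does not fit, so both remain retrievable.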

You can also see this in practice above.

Many LLM APIs, such as those from OpenAI and Anthropic, already support prompt caching, so you can start experimenting right away.
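As a starting point, here is a rough sketch of how the static layer could be marked as cacheable in a request to Anthropic's Messages API, which accepts a `cache_control` marker on system content blocks. The helper `build_cached_request` and its parameters are hypothetical, the model name is left to the caller, and details such as minimum cacheable prompt length and cache lifetime should be checked against the provider's current documentation.

```python
from typing import Any, Dict, List


def build_cached_request(
    model: str,
    static_context: str,
    retrieved_chunks: List[str],
    question: str,
    max_tokens: int = 512,
) -> Dict[str, Any]:
    # Dynamic ("hot") context changes per query, so it goes in the user turn.
    dynamic_context = "\n\n".join(retrieved_chunks)
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": [
            {
                "type": "text",
                "text": static_context,
                # Marks the static ("cold") prefix as cacheable.
                "cache_control": {"type": "ephemeral"},
            }
        ],
        "messages": [
            {
                "role": "user",
                "content": f"Context:\n{dynamic_context}\n\nQuestion: {question}",
            }
        ],
    }


request = build_cached_request(
    model="your-model-name",  # placeholder, supply a real model ID
    static_context="Company policy handbook text goes here.",
    retrieved_chunks=["Ticket 1: customer asked about refund status"],
    question="Is this customer eligible for a refund?",
)

# Sending it would look roughly like this (requires the `anthropic` package
# and an API key, so it is left commented out):
# import anthropic
# response = anthropic.Anthropic().messages.create(**request)
```

Keeping the static text at the very start of the prompt and byte-for-byte identical across calls matters here, since prefix caches generally only match on an unchanged prefix.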

If you want to dive into building LLM apps, our full RAG crash course discusses RAG from basics to beyond:

👉 Over to you: Have you ever used CAG?

Thanks for reading!

P.S. For those wanting to develop “Industry ML” expertise:

At the end of the day, all businesses care about impact. That’s it!

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have discussed several other topics (with implementations) that align with these goals.

Here are some of them:

Learn everything about MCPs in this crash course with 9 parts →

Learn how to build Agentic systems in a crash course with 14 parts.

Learn how to build real-world RAG apps and evaluate and scale them in this crash course.

Learn sophisticated graph architectures and how to train them on graph data.

So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

Learn how to run large models on small devices using Quantization techniques.

Learn how to generate prediction intervals or sets with strong statistical guarantees, and so increase trust in your models, using Conformal Prediction.

Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

Learn how to scale and implement ML model training in this practical guide.

Learn techniques to reliably test new models in production.

Learn how to build privacy-first ML systems using Federated Learning.

Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate key skills that businesses and companies care about the most.