Regularize Neural Networks Using Label Smoothing

Make your model less overconfident.

For every instance in single-label classification datasets, the entire probability mass belongs to a single class, and the rest are zero.

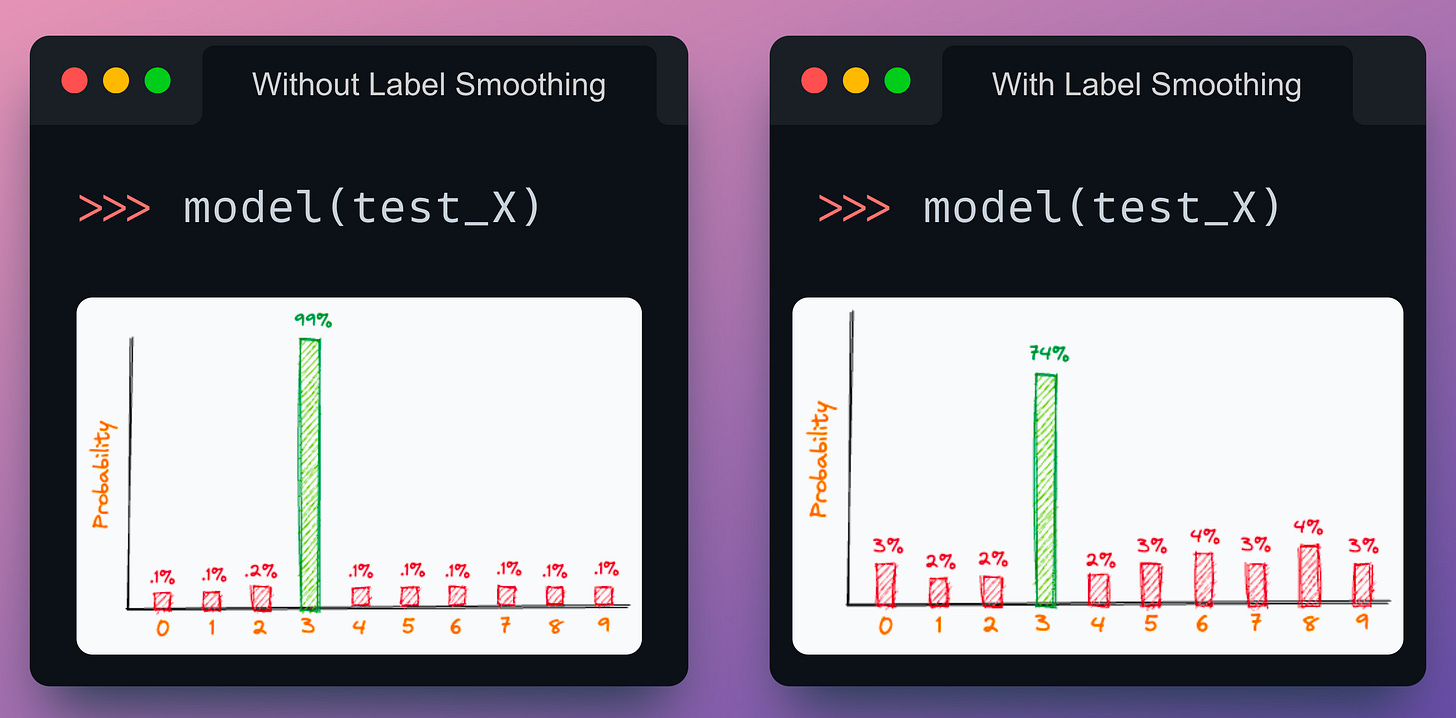

This is depicted below:

The issue is that such label distributions can push the model to predict the true class for every sample with very high confidence.

This can hurt its ability to generalize.

Label smoothing is a lesser-known regularization technique that elegantly addresses this issue.

As depicted above, with label smoothing:

We intentionally reduce the probability mass of the true class slightly.

The reduced probability mass is uniformly distributed to all other classes.
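The two steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not the notebook's code: the helper name `smooth_labels` and the smoothing factor `epsilon` are assumptions introduced here. The removed mass is spread over the other classes, as described above. Some libraries instead spread it over all classes, including the true one.

```python
def smooth_labels(true_class, num_classes, epsilon=0.1):
    """Return a label-smoothed target distribution as a list of floats.

    Hypothetical helper for illustration: the true class keeps
    1 - epsilon, and epsilon is split evenly among the other classes.
    """
    if num_classes < 2:
        raise ValueError("need at least two classes")
    if not 0 <= true_class < num_classes:
        raise ValueError("true_class is out of range")
    if not 0.0 <= epsilon < 1.0:
        raise ValueError("epsilon must be in [0, 1)")

    off_value = epsilon / (num_classes - 1)  # share given to each other class
    target = [off_value] * num_classes
    target[true_class] = 1.0 - epsilon       # reduced mass on the true class
    return target


# epsilon=0.0 recovers the usual one-hot label.
hard = smooth_labels(true_class=2, num_classes=4, epsilon=0.0)
soft = smooth_labels(true_class=2, num_classes=4, epsilon=0.1)
print(hard)
print(soft)
```

Both lists still sum to 1, so the smoothed label remains a valid probability distribution.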

Simply put, this can be thought of as asking the model to be “less overconfident” during training and prediction while still attempting to make accurate predictions.

This makes intuitive sense as well.
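One way to check this intuition is to compare the cross-entropy loss of a moderately confident prediction against an extremely confident one. The sketch below assumes 10 classes and a smoothing factor of 0.1. Both are illustrative choices rather than values from the experiment, and the helper names are hypothetical.

```python
import math


def cross_entropy(target, predicted):
    """Cross-entropy between a target distribution and predicted probabilities."""
    return -sum(t * math.log(p) for t, p in zip(target, predicted) if t > 0)


def peaked_distribution(peak_class, num_classes, peak_prob):
    """Put peak_prob on one class and share the remainder evenly among the rest."""
    rest = (1.0 - peak_prob) / (num_classes - 1)
    dist = [rest] * num_classes
    dist[peak_class] = peak_prob
    return dist


NUM_CLASSES = 10  # assumed for illustration
EPSILON = 0.1     # assumed smoothing factor
TRUE_CLASS = 3

hard_target = peaked_distribution(TRUE_CLASS, NUM_CLASSES, 1.0)
smooth_target = peaked_distribution(TRUE_CLASS, NUM_CLASSES, 1.0 - EPSILON)

# Two hypothetical predictions that both pick the true class.
moderate = peaked_distribution(TRUE_CLASS, NUM_CLASSES, 0.90)
extreme = peaked_distribution(TRUE_CLASS, NUM_CLASSES, 0.999)

for name, target in [("hard", hard_target), ("smoothed", smooth_target)]:
    print(name,
          "moderate:", round(cross_entropy(target, moderate), 4),
          "extreme:", round(cross_entropy(target, extreme), 4))
```

With hard targets, the extreme prediction has the lower loss, so training keeps pushing confidence toward 100%. With smoothed targets, the moderate prediction scores better, because cross-entropy is minimized when the predicted distribution matches the smoothed target.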

The efficacy of this technique is evident from the image below:

In this experiment, I trained two neural networks on the Fashion MNIST dataset with the exact same weight initialization.

One without label smoothing.

Another with label smoothing.

The model with label smoothing achieved better test accuracy, i.e., better generalization.

Pretty handy, isn’t it?

When not to use label smoothing?

After using label smoothing in many of my projects, I have also realized that it is not well suited for all use cases.

So it’s important to know when you should not use it.

See, if you only care about getting the final prediction correct and improving generalization, label smoothing will be a pretty handy technique.

However, I wouldn’t recommend using it if you care about both:

Getting the prediction correct.

And understanding the model’s confidence in that prediction.

This is because, as discussed above, label smoothing guides the model to become “less overconfident” about its predictions.

Thus, we typically notice a drop in the confidence values for every prediction, as depicted below:

On a specific test instance:

The model without label smoothing outputs 99% probability for class 3.

With label smoothing, although the prediction is still correct, the confidence drops to 74%.
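The “confidence” in this comparison is the largest softmax probability. The sketch below uses made-up logits, not the outputs of the two trained models, to show how the predicted class can stay the same while the confidence differs. The helper names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_with_confidence(logits):
    """Return (predicted class index, its softmax probability)."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs[best]


# Hypothetical logits for one test instance (not taken from the experiment).
sharp_logits = [0.0, 0.5, 1.0, 8.0, 0.2]  # large gap to the runner-up
soft_logits = [0.0, 0.5, 1.0, 3.0, 0.2]   # same ranking, smaller gap

for name, logits in [("sharp", sharp_logits), ("soft", soft_logits)]:
    cls, conf = predict_with_confidence(logits)
    print(f"{name}: class={cls}, confidence={conf:.2f}")
```

Both sets of logits select class 3, but the smaller gap between the top logit and the rest gives a noticeably lower top probability.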

This is something to keep in mind when using label smoothing.

Nonetheless, the technique is indeed pretty promising for regularizing deep learning models.

That said, L2 regularization is another common way to regularize models. Here’s a guide that explains its probabilistic origin: The Probabilistic Origin of Regularization.

Also, we discussed 11 techniques to supercharge ML models here: 11 Powerful Techniques To Supercharge Your ML Models.

You can download the code notebook for today’s issue here: Label Smoothing Notebook.

👉 Over to you: What other things need to be taken care of when using label smoothing?

Thanks for reading!
