Robustify Linear Regression

...to outliers with thresholding.

Today, we are discussing a technique to make linear regression models robust to outliers. We have already covered 11 actionable techniques to supercharge ML models in an earlier article (11 Powerful Techniques To Supercharge Your ML Models).

Let's begin!

Even a few outliers can significantly impact Linear Regression's performance. The squared error grows quadratically with the residual, so a handful of extreme points can dominate the fit.

Huber loss (used by Huber Regression) addresses this as follows:

If the absolute residual is smaller than a specified threshold (δ), use the squared error, as in MSE (no change here).

Otherwise, use a loss that grows more slowly than the squared error, such as a linear one.
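To make the two cases concrete, here is a minimal sketch of the per-point loss in plain Python. The function names `huber_loss` and `squared_loss` are illustrative, and the factor of 1/2 follows the conventional textbook form of Huber loss, which is an assumption about the variant intended here.

```python
def squared_loss(residual: float) -> float:
    """Squared-error loss for one residual (true - predicted)."""
    return 0.5 * residual ** 2


def huber_loss(residual: float, delta: float) -> float:
    """Huber loss for one residual (true - predicted)."""
    abs_r = abs(residual)
    if abs_r <= delta:
        # Small residual: identical to the squared-error branch.
        return 0.5 * residual ** 2
    # Large residual: linear growth, offset so both branches meet at |r| = delta.
    return delta * (abs_r - 0.5 * delta)


# Compare the two losses for a small, a borderline, and a large residual.
for r in (0.5, 1.0, 5.0):
    print(r, squared_loss(r), huber_loss(r, delta=1.0))
```

With δ = 1, the two losses agree for residuals up to 1, while a residual of 5 costs 12.5 under squared error but only 4.5 under Huber loss, so a single extreme point pulls on the fit far less.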

Mathematically, Huber loss is defined as follows:
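In its standard textbook form (assumed here to be the variant intended), with residual $r = y - \hat{y}$ and threshold $\delta > 0$:

$$
L_\delta(r) =
\begin{cases}
\dfrac{1}{2}\, r^2 & \text{if } |r| \le \delta \\[6pt]
\delta \left( |r| - \dfrac{1}{2}\,\delta \right) & \text{otherwise}
\end{cases}
$$

The offset $-\tfrac{1}{2}\delta^2$ in the linear branch makes the two pieces meet at $|r| = \delta$, where both equal $\tfrac{1}{2}\delta^2$.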

Its effectiveness is evident from the image below:

Linear Regression is affected by outliers.

Huber Regression is more robust.

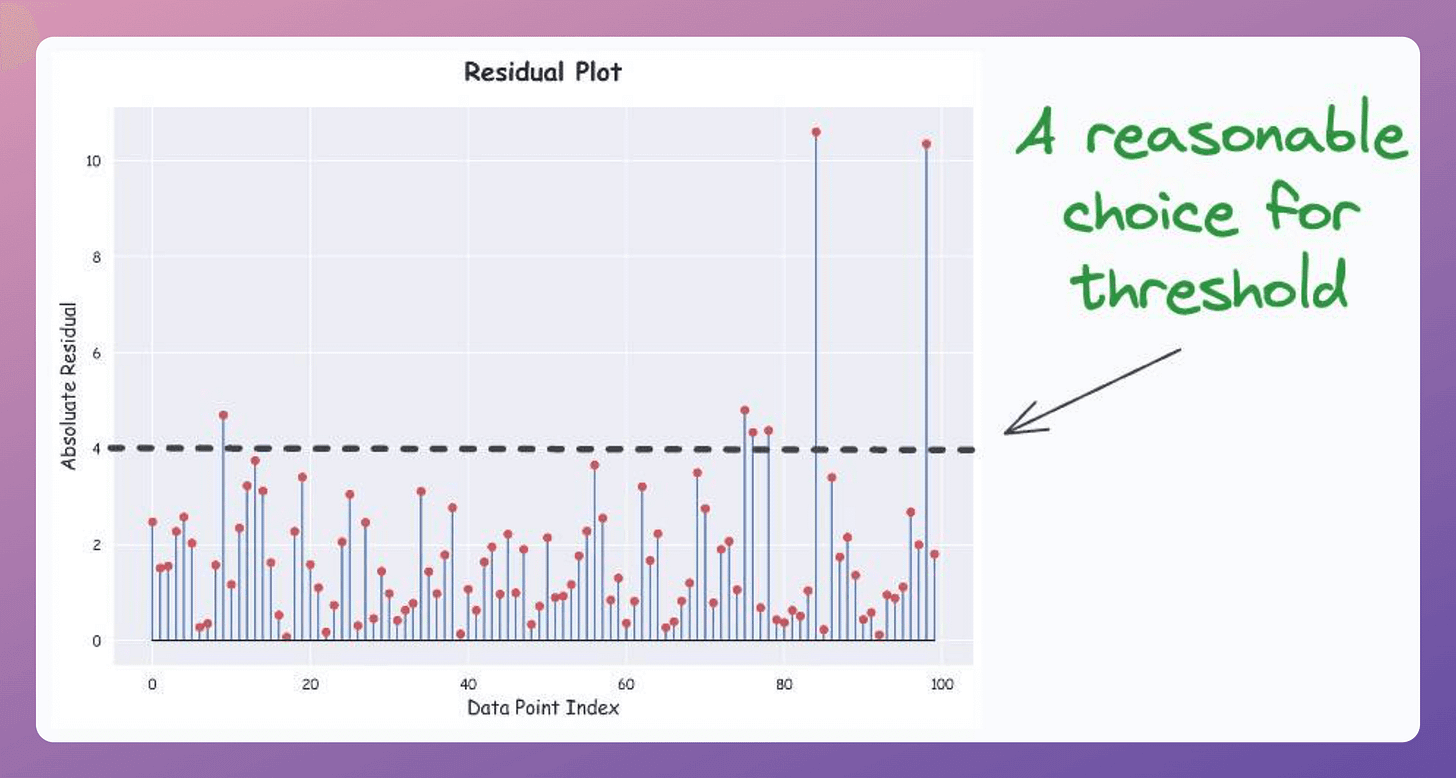
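A comparison like this can be sketched with scikit-learn's `LinearRegression` and `HuberRegressor`. The synthetic data, the injected outliers, and the choice `epsilon=1.35` (scikit-learn's default) are all assumptions for illustration. Note that scikit-learn applies `epsilon` to residuals scaled by an estimated noise level, so it plays the role of δ but is not the raw δ itself.

```python
import numpy as np
from sklearn.linear_model import HuberRegressor, LinearRegression

rng = np.random.default_rng(0)

# Synthetic data (assumed for illustration): y = 2x + 1 plus small noise.
X = np.linspace(0, 10, 50).reshape(-1, 1)
y = 2.0 * X.ravel() + 1.0 + rng.normal(scale=0.5, size=50)

# Corrupt the last five targets so they act as outliers.
y[-5:] += 40.0

# Ordinary least squares: every point contributes a squared error.
ols = LinearRegression().fit(X, y)

# Huber regression: large (scaled) residuals contribute only linearly.
huber = HuberRegressor(epsilon=1.35).fit(X, y)

ols_slope = ols.coef_[0]
huber_slope = huber.coef_[0]
print(f"true slope: 2.00, OLS: {ols_slope:.2f}, Huber: {huber_slope:.2f}")
```

On data like this, the least-squares slope is dragged toward the corrupted points, while the Huber slope should stay much closer to the true value of 2.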

To determine the threshold (δ):

Train a linear regression model as you usually would.

Compute the residuals (true - predicted) on the training data.

Plot the absolute residuals for every data point.

This is depicted below:

Now you can pick a threshold δ that separates the bulk of the residuals from the few unusually large ones.

One good thing is that we can create this plot for a dataset of any dimensionality. The objective is just to plot the (true - predicted) values, which are always 1D.
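The steps above can be sketched as follows, using NumPy's least-squares solver in place of a full modeling library. The three-feature dataset, the injected outliers, and the helper name `plot_abs_residuals` are hypothetical and only there to show that the residuals stay one-dimensional regardless of the number of features.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical training data: 3 features, with a handful of injected outliers.
n_samples, n_features = 200, 3
X = rng.normal(size=(n_samples, n_features))
true_w = np.array([1.5, -2.0, 0.5])
y = X @ true_w + rng.normal(scale=0.3, size=n_samples)
y[:8] += 15.0  # the first eight targets become outliers

# Step 1: fit ordinary least squares (a column of ones provides the intercept).
X_design = np.column_stack([np.ones(n_samples), X])
coef, *_ = np.linalg.lstsq(X_design, y, rcond=None)

# Step 2: residuals = true - predicted; always 1D, whatever n_features is.
residuals = y - X_design @ coef
abs_residuals = np.abs(residuals)


# Step 3: plot the absolute residuals for every data point.
def plot_abs_residuals(values):
    import matplotlib.pyplot as plt  # imported lazily; only needed for the plot

    plt.scatter(np.arange(len(values)), values, s=10)
    plt.xlabel("data point index")
    plt.ylabel("|true - predicted|")
    plt.show()


print("shape:", abs_residuals.shape)
print("largest absolute residuals:", np.sort(abs_residuals)[-10:].round(2))
# plot_abs_residuals(abs_residuals)  # uncomment to view the plot
```

In the resulting plot, the injected outliers sit well above the rest of the points, and a value of δ in the gap between the two groups is a reasonable starting choice.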

Many ML engineers quickly pivot to building a different model when they don't get satisfying results with one kind of model.

They do not fully exploit the possibilities of existing models and continue to move towards complex ones.

But after building so many ML models, I have learned various techniques that uncover nuances and optimizations we could apply to significantly enhance model performance without necessarily increasing the model complexity.

I have put together 11 such high-utility and actionable techniques in a recent article here: 11 Powerful Techniques To Supercharge Your ML Models.

P.S. For those wanting to develop “Industry ML” expertise:

At the end of the day, all businesses care about impact. That’s it!

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have discussed several other topics (with implementations) in the past that align with these goals.

Here are some of them:

Learn sophisticated graph architectures and how to train them on graph data: A Crash Course on Graph Neural Networks – Part 1.

So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here: Bi-encoders and Cross-encoders for Sentence Pair Similarity Scoring – Part 1.

Learn techniques to run large models on small devices: Quantization: Optimize ML Models to Run Them on Tiny Hardware.

Learn how to generate prediction intervals or sets with strong statistical guarantees for increasing trust: Conformal Predictions: Build Confidence in Your ML Model’s Predictions.

Learn how to identify causal relationships and answer business questions: A Crash Course on Causality – Part 1.

Learn how to scale ML model training: A Practical Guide to Scaling ML Model Training.

Learn techniques to reliably roll out new models in production: 5 Must-Know Ways to Test ML Models in Production (Implementation Included).

Learn how to build privacy-first ML systems: Federated Learning: A Critical Step Towards Privacy-Preserving Machine Learning.

Learn how to compress ML models and reduce costs: Model Compression: A Critical Step Towards Efficient Machine Learning.

All these resources will help you cultivate key skills that businesses and companies care about the most.
