Shuffle Feature Importance

An intuitive and reliable technique to measure feature importance.

There are many techniques for measuring feature importance.

I often find “Shuffle Feature Importance” to be a handy and intuitive technique to measure feature importance.

Let’s understand this today!

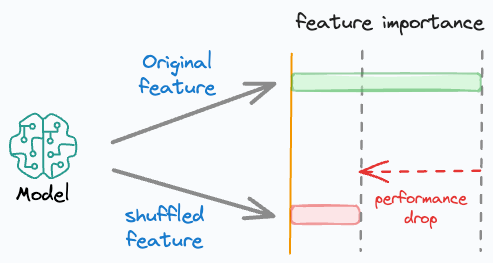

As the name suggests, it observes how shuffling a feature influences the model’s performance.

The visual illustrates this technique in four simple steps. Here’s how it works:

1. Train the model and measure its performance → P1.
2. Shuffle one feature randomly and measure performance again → P2 (the model is NOT trained again).
3. Measure feature importance using the performance drop = (P1 - P2).
4. Repeat for all features.
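The four steps map directly onto a short loop. Below is a minimal, model-agnostic sketch in pure Python. It assumes the trained model is exposed as a `predict(rows)` function and that performance is measured by a higher-is-better `score(y_true, y_pred)` function. `shuffle_importance`, `neg_mse`, and `toy_model` are hypothetical names for illustration, not a library API.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def shuffle_importance(
    predict: Callable[[Sequence[Row]], List[float]],
    X: Sequence[Row],
    y: Sequence[float],
    score: Callable[[Sequence[float], Sequence[float]], float],
    seed: int = 0,
) -> List[float]:
    """Return the performance drop P1 - P2 for every feature (column) of X.

    `predict` must be an already-trained model; it is never retrained here.
    `score` must be higher-is-better (e.g. accuracy, R^2, negative MSE).
    """
    rng = random.Random(seed)
    p1 = score(y, predict(X))  # Step 1: baseline performance P1
    importances = []
    for j in range(len(X[0])):  # Step 4: repeat for every feature
        column = [row[j] for row in X]
        rng.shuffle(column)  # Step 2: shuffle only feature j...
        X_shuffled = [list(row) for row in X]
        for row, value in zip(X_shuffled, column):
            row[j] = value
        p2 = score(y, predict(X_shuffled))  # ...and re-score without retraining
        importances.append(p1 - p2)  # Step 3: performance drop
    return importances


def neg_mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Negative mean squared error, so that higher is better."""
    return -sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


# Toy data: the target depends only on feature 0; feature 1 is irrelevant.
X = [[float(i), float(i % 3)] for i in range(20)]
y = [2 * row[0] for row in X]


def toy_model(rows: Sequence[Row]) -> List[float]:
    """Stands in for a trained model that learned y = 2 * x0."""
    return [2 * row[0] for row in rows]


print(shuffle_importance(toy_model, X, y, neg_mse))
```

Because the toy model ignores feature 1, shuffling it leaves the predictions unchanged, so its importance is exactly 0.0, while feature 0 gets a positive drop. Using a higher-is-better score keeps the sign convention P1 - P2 from the steps above.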

This makes intuitive sense as well, doesn’t it?

Simply put, if we randomly shuffle just one feature and everything else stays the same, then the performance drop will indicate how important that feature is.

If the performance drop is low → the feature has little influence on the model’s predictions.

If the performance drop is high → the feature has a strong influence on the model’s predictions.

Do note that shuffling is random, so a single shuffle gives a noisy estimate. To reduce the effect of this randomness, it is recommended to:

Shuffle the same feature multiple times.

Measure the average performance drop.
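To follow this advice, the single shuffle can be repeated and the drops averaged. Here is a minimal pure-Python sketch under the same assumptions as before (a trained `predict(rows)` function and a higher-is-better `score`). `repeated_shuffle_importance` and `n_repeats` are hypothetical names chosen for illustration. The sketch also reports the standard deviation of the drops, which gives a rough sense of how noisy each estimate is.

```python
import random
import statistics
from typing import Callable, List, Sequence, Tuple

Row = List[float]


def repeated_shuffle_importance(
    predict: Callable[[Sequence[Row]], List[float]],
    X: Sequence[Row],
    y: Sequence[float],
    score: Callable[[Sequence[float], Sequence[float]], float],
    n_repeats: int = 10,
    seed: int = 0,
) -> List[Tuple[float, float]]:
    """Return (mean drop, std of drops) per feature over n_repeats shuffles."""
    if n_repeats < 2:
        raise ValueError("n_repeats must be at least 2 to compute a std")
    rng = random.Random(seed)
    p1 = score(y, predict(X))  # baseline computed once; model is never retrained
    results = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):  # shuffle the same feature several times
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_shuffled = [list(row) for row in X]
            for row, value in zip(X_shuffled, column):
                row[j] = value
            drops.append(p1 - score(y, predict(X_shuffled)))
        results.append((statistics.mean(drops), statistics.stdev(drops)))
    return results


def neg_mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Negative mean squared error, so that higher is better."""
    return -sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


X = [[float(i), float(i % 3)] for i in range(20)]
y = [2 * row[0] for row in X]


def toy_model(rows: Sequence[Row]) -> List[float]:
    """Stands in for a trained model that uses feature 0 only."""
    return [2 * row[0] for row in rows]


results = repeated_shuffle_importance(toy_model, X, y, neg_mse)
for j, (mean_drop, std_drop) in enumerate(results):
    print(f"feature {j}: mean drop = {mean_drop:.2f} +/- {std_drop:.2f}")
```

A large standard deviation relative to the mean is a hint that more repeats (or more evaluation data) are needed before trusting the ranking.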

A few things that I love about this technique are:

It requires no repeated model training. Just train the model once and measure the feature importance.

It is pretty simple to use and quite intuitive to interpret.

It is model-agnostic: it works with any ML model whose performance can be evaluated.

Of course, there is one caveat as well.

Say two features are highly correlated, and one of them is permuted/shuffled.

In this case, the model can still access the shuffled feature’s information through its correlated counterpart, so the performance barely drops.

As a result, both features receive lower importance values than they deserve.
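A small experiment makes this caveat concrete. The pure-Python sketch below (hypothetical names, toy data) compares a model that sees a feature once with a model that sees it twice, as an exact duplicate, with hand-set weights that split the signal evenly across the two copies. Shuffling one copy leaves the other intact, so in this setup each copy’s drop is only a quarter of the single feature’s drop.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def neg_mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Negative mean squared error (higher is better)."""
    return -sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)


def drop_for_feature(predict: Callable[[Sequence[Row]], List[float]],
                     X: Sequence[Row], y: Sequence[float], j: int,
                     seed: int = 0) -> float:
    """Performance drop P1 - P2 after shuffling column j once (no retraining)."""
    column = [row[j] for row in X]
    # Same seed and same length -> same permutation, so the runs are comparable.
    random.Random(seed).shuffle(column)
    X_shuffled = [list(row) for row in X]
    for row, value in zip(X_shuffled, column):
        row[j] = value
    return neg_mse(y, predict(X)) - neg_mse(y, predict(X_shuffled))


x = [float(i) for i in range(20)]
y = [2 * v for v in x]

# Model A sees x once and puts all of its weight on it.
X_single = [[v] for v in x]


def model_single(rows: Sequence[Row]) -> List[float]:
    return [2 * row[0] for row in rows]


# Model B sees x twice (a perfectly correlated copy). Its weights are
# hand-set to split the signal evenly between the two copies.
X_dup = [[v, v] for v in x]


def model_dup(rows: Sequence[Row]) -> List[float]:
    return [row[0] + row[1] for row in rows]


imp_single = drop_for_feature(model_single, X_single, y, j=0)
imp_dup = [drop_for_feature(model_dup, X_dup, y, j=j) for j in (0, 1)]
print("single copy:", imp_single)
print("duplicated copies:", imp_dup)  # each is 1/4 of imp_single here
```

Both copies look only moderately important even though, together, they carry all the signal. The exact 1/4 factor is specific to this toy setup (exact duplicates, squared error, an even weight split).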

One way to handle this is to cluster highly correlated features and only keep one feature from each cluster.
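One simple way to sketch the clustering step is to group features whose absolute Pearson correlation with a cluster’s first member reaches a threshold, then keep that first member. This greedy grouping, the 0.9 threshold, and the function names are assumptions for illustration; a more common choice is hierarchical clustering on (rank) correlations. After dropping the redundant features, the model would be retrained on the reduced set before computing shuffle importance.

```python
import math
from typing import List, Sequence


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 if either column is constant."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def cluster_correlated(columns: List[List[float]],
                       threshold: float = 0.9) -> List[List[int]]:
    """Greedily group feature indices whose |correlation| with a cluster's
    first member is at least `threshold`."""
    clusters: List[List[int]] = []
    for j, col in enumerate(columns):
        for cluster in clusters:
            if abs(pearson(col, columns[cluster[0]])) >= threshold:
                cluster.append(j)
                break
        else:  # no existing cluster matched: start a new one
            clusters.append([j])
    return clusters


a = [float(i) for i in range(10)]
columns = [
    a,                                  # feature 0
    [2 * v + 1 for v in a],             # feature 1: perfectly correlated with 0
    [float(i % 2) for i in range(10)],  # feature 2: weakly correlated with 0
    [-v for v in a],                    # feature 3: perfectly anti-correlated with 0
]
clusters = cluster_correlated(columns)
keep = [cluster[0] for cluster in clusters]  # one representative per cluster
print(clusters, keep)  # [[0, 1, 3], [2]] [0, 2]
```

Taking the absolute value matters: a perfectly anti-correlated feature (feature 3) is just as redundant as a positively correlated one.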

👉 Over to you: What other reliable feature importance techniques do you use frequently?


What is the difference between Recursive Feature Elimination (RFE) and Shuffle Feature Importance?

I know that with RFE we have to specify the number of features to select. Is there any other difference in how the two work?

If we shuffle one feature, then for a particular example the shuffled feature’s value is wrong while the other features’ values are correct. Does this wrong value affect the result?