Simplify Python Imports with Explicit Packaging

A step towards better Python project development.

When developing in Python, it is always recommended to package your project.

Simply put, if a project is packaged, you can import stuff from it.

Some terminology before proceeding ahead:

Module: A Python file.

Package: A collection of Python files in a directory.

Library: A collection of Packages.

We can package a project by adding an __init__.py file inside a directory.

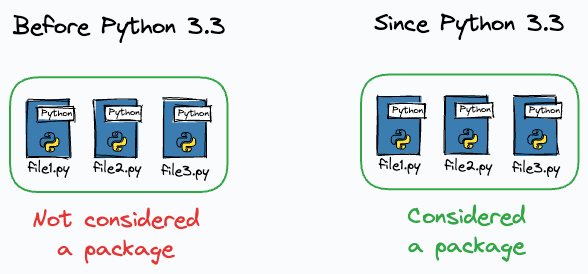

While Python 3.3+ provides Implicit Namespace Packages — a directory with modules is considered a package by default, it is still advised to create an explicit __init__.py file.

A couple of major benefits of doing this are that it helps in:

Explicitly specifying which classes/functions can be imported from the package.

Avoiding redundant imports.

Let’s understand!

Consider this is our directory structure (and we are using Python 3.3+):

train.pyhas aTrainingclass.test.pyhas aTestingclass.

As we are using Python 3.3+, we can directly import the Training and Testing class in pipeline.py as follows:

While this will work as expected, the problem is that we have to explicitly import the specific class from each of the modules.

This creates redundant imports.

Defining the __init__.py file can simplify this.

Let’s see how.

As depicted above:

We first explicitly package the directory by creating an

__init__.pyfile.Next, we specify the imports directly in this file.

Now, instead of writing redundant imports, you can directly import the intended classes from the “model” package, as shown below:

In other words, specifying the __init__.py file lets you treat your package like a module.

This simplifies your imports.

Also, as discussed earlier, an __init__.py file lets you explicitly specify which classes/functions can be imported from the package, which, otherwise, will not be evident.

This simplifies things for other users of your project.

Isn’t that cool?

👉 Over to you: What are some other cool Python project development tips you are aware of?

Thanks for reading!

Whenever you are ready, here’s one more way I can help you:

Every week, I publish 1-2 in-depth deep dives (typically 20+ mins long). Here are some of the latest ones that you will surely like:

[FREE] A Beginner-friendly and Comprehensive Deep Dive on Vector Databases.

You Are Probably Building Inconsistent Classification Models Without Even Realizing

Why Sklearn’s Logistic Regression Has no Learning Rate Hyperparameter?

PyTorch Models Are Not Deployment-Friendly! Supercharge Them With TorchScript.

DBSCAN++: The Faster and Scalable Alternative to DBSCAN Clustering.

Federated Learning: A Critical Step Towards Privacy-Preserving Machine Learning.

You Cannot Build Large Data Projects Until You Learn Data Version Control!

To receive all full articles and support the Daily Dose of Data Science, consider subscribing:

👉 If you love reading this newsletter, feel free to share it with friends!

👉 Tell the world what makes this newsletter special for you by leaving a review here :)

Great explanation! Thank you!