Sync vs. Async in Python

...explained with code and misconceptions.

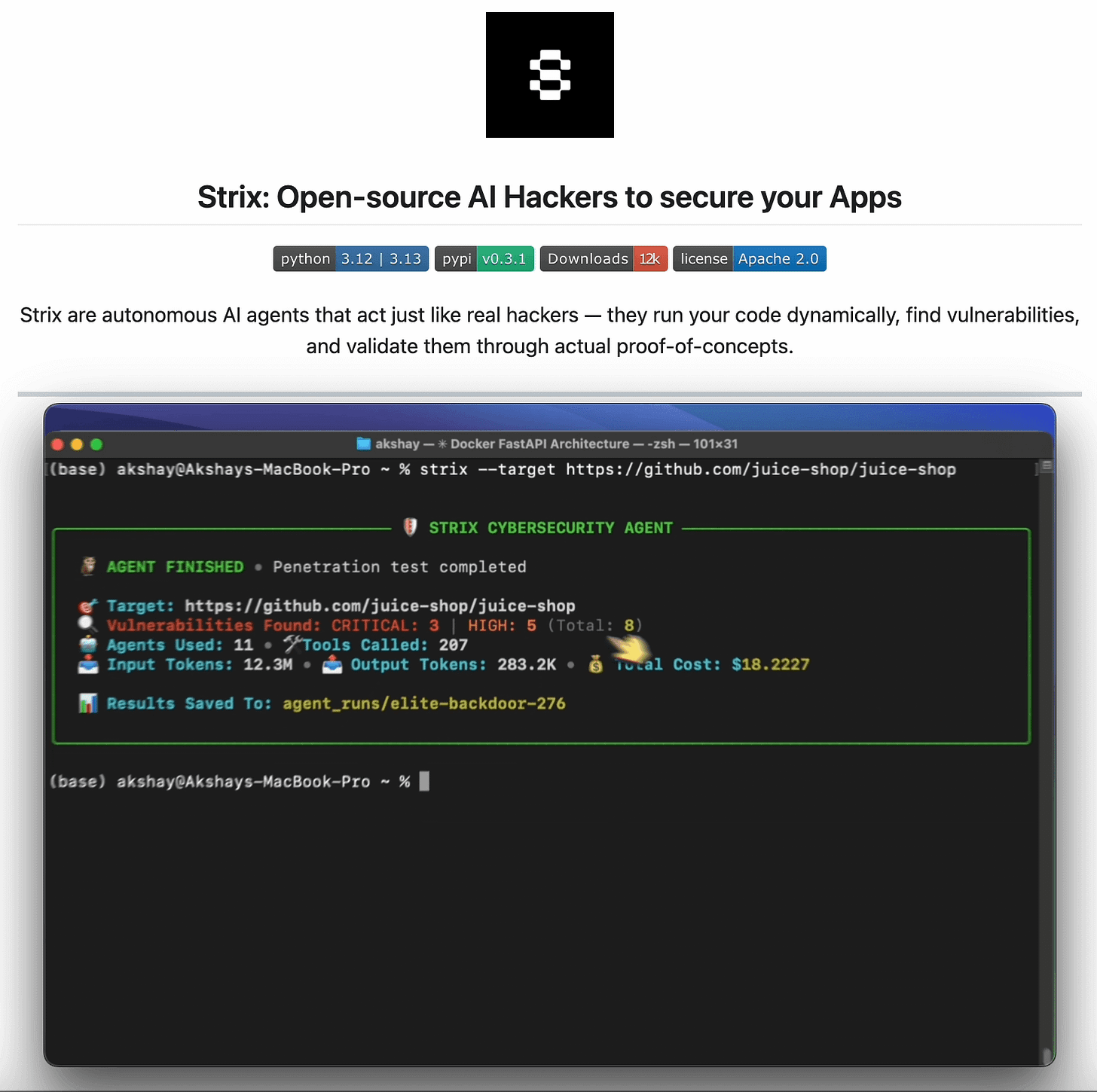

Sync vs. Async in Python

Imagine you are fetching data from an API, and it takes a few seconds to respond.

While it waits, the CPU sits idle, even though it could have been working on another task.

Python’s asyncio framework is built for concurrent execution, which lets you pause a task that’s waiting and start another task immediately to maximize resource utilization.

Sync and Async are explained in this visual:

Let’s dive in to learn more about how asyncio works in practice!

Code demo

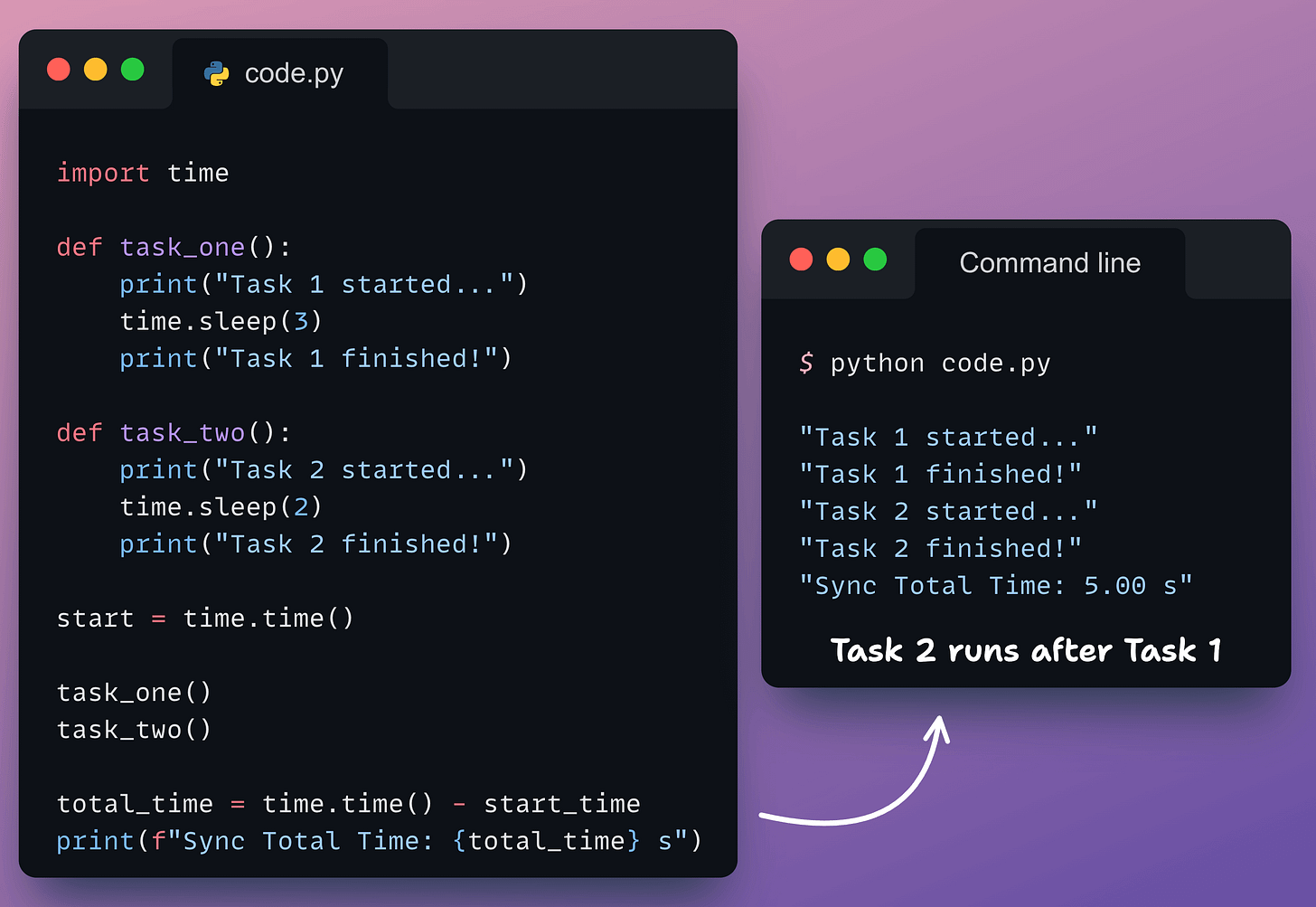

By default, Python code runs synchronously (one step after the other). So one task must completely finish before the next one can begin, and any time spent waiting on I/O is wasted.

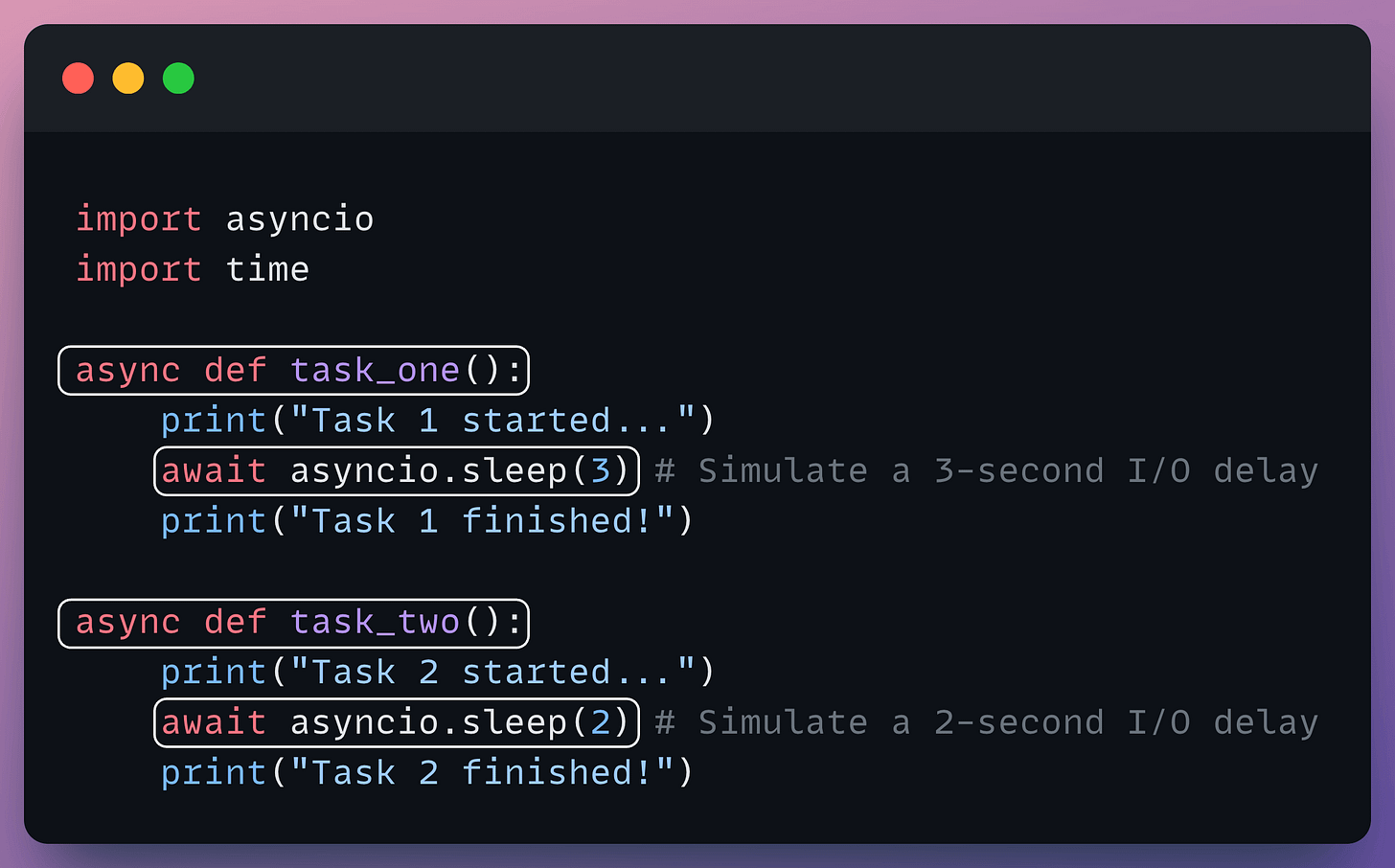
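As a minimal sketch of the synchronous case, the snippet below runs two simulated API calls back to back. The `fetch` and `run_sync` names are hypothetical, and `time.sleep` stands in for a real network wait; the 2s and 3s durations are the ones used in the rest of this post.

```python
import time


def fetch(name: str, seconds: float) -> str:
    """Stand-in for a blocking API call; time.sleep simulates the wait."""
    time.sleep(seconds)  # the thread is blocked here and can do nothing else
    return f"{name} done"


def run_sync(first: float = 2, second: float = 3) -> tuple[list[str], float]:
    """Run both calls one after the other; return (results, elapsed seconds)."""
    start = time.perf_counter()
    results = [fetch("task-1", first), fetch("task-2", second)]
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = run_sync()
    print(results, f"{elapsed:.1f}s")  # elapsed is roughly first + second
```

Because the second call cannot start until the first returns, the elapsed time is roughly the sum of the two waits.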

Python's asyncio builds on two keywords, async and await, to solve this.

Let’s look at an example to understand them:

async def: This defines a coroutine function. A coroutine is a special type of function that can be paused and resumed. Calling it doesn’t run its body immediately; it returns a coroutine object that runs only once it is awaited or scheduled. Coroutines are the fundamental building blocks of asynchronous code.

await: This keyword can only be used inside an async function. It tells the Python interpreter: “I’m about to wait for this result. Instead of blocking, hand control back to the Event Loop so it can run other pending tasks.”
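Here is a small sketch of both keywords in action. The `fetch` coroutine and its helper functions are hypothetical names, and `asyncio.sleep` stands in for a real I/O wait.

```python
import asyncio
import inspect


async def fetch(name: str, seconds: float) -> str:
    """Coroutine that simulates an I/O wait without blocking the event loop."""
    await asyncio.sleep(seconds)  # pause here; the loop is free to run other tasks
    return f"{name} done"


def call_without_await() -> bool:
    """Calling a coroutine function only builds a coroutine object."""
    coro = fetch("task-1", 0.1)  # nothing has run yet
    is_coroutine = inspect.iscoroutine(coro)
    coro.close()  # discard it cleanly to avoid a "never awaited" warning
    return is_coroutine


async def main() -> str:
    # await actually runs the coroutine and hands back its return value
    return await fetch("task-1", 0.1)


if __name__ == "__main__":
    print(call_without_await())  # True
    print(asyncio.run(main()))   # task-1 done
```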

Next, we have an Event Loop, which acts as a scheduler that manages all the coroutines, deciding which task to run next, which to pause, and which to resume.

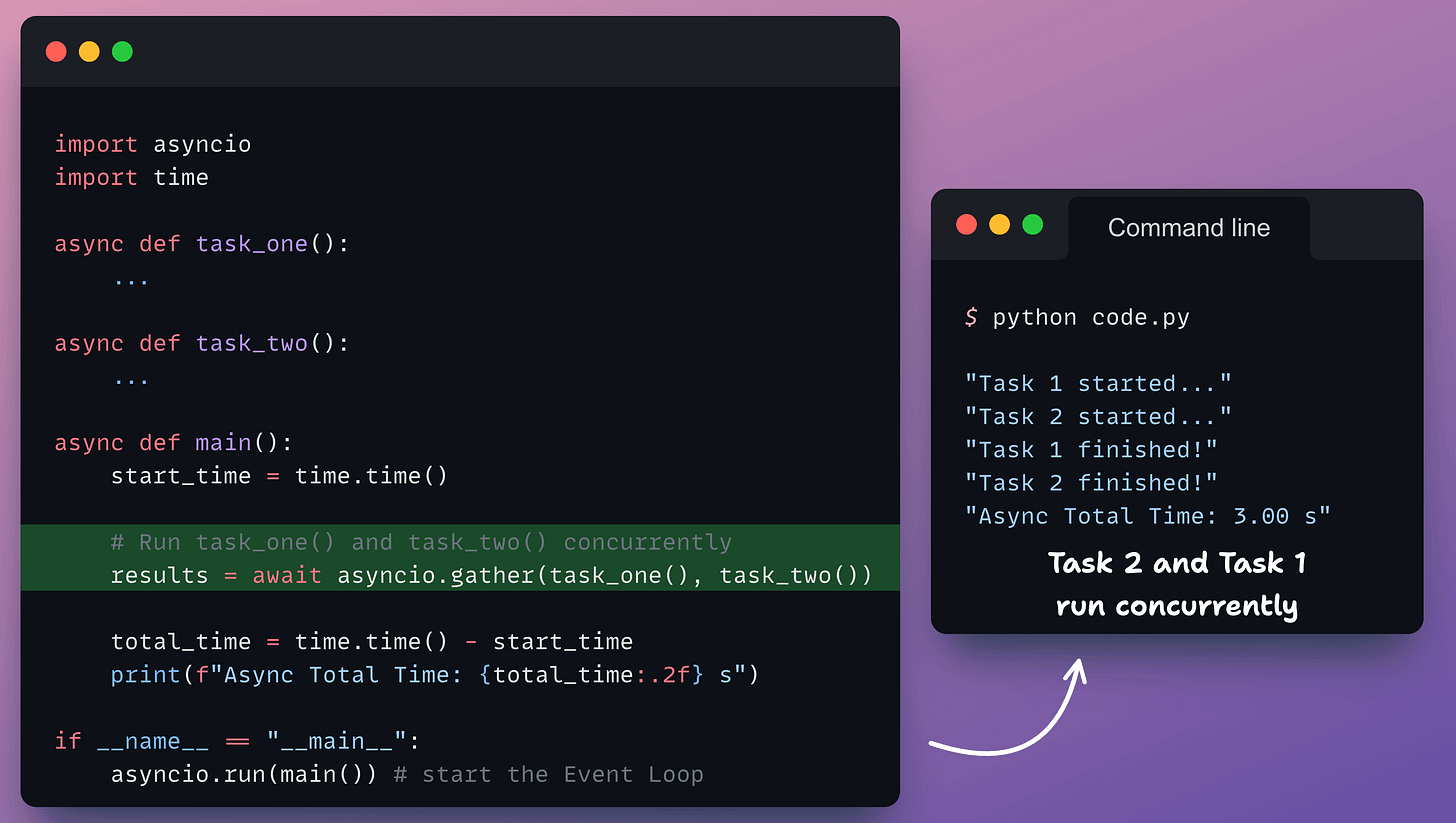

To actually run coroutines concurrently, we schedule them on the Event Loop together, for example with asyncio.gather(), as demonstrated below:
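A minimal sketch of this, assuming the same two simulated tasks (2s and 3s) as in the sync case. The `fetch` and `main` names are hypothetical, and `asyncio.sleep` stands in for the real API call.

```python
import asyncio
import time


async def fetch(name: str, seconds: float) -> str:
    """Hypothetical stand-in for an API call; asyncio.sleep simulates the wait."""
    await asyncio.sleep(seconds)
    return f"{name} done"


async def main(first: float = 2, second: float = 3) -> tuple[list[str], float]:
    start = time.perf_counter()
    # gather schedules both coroutines on the event loop and waits for both;
    # results come back in the order the coroutines were passed in
    results = await asyncio.gather(fetch("task-1", first), fetch("task-2", second))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = asyncio.run(main())
    print(results, f"{elapsed:.1f}s")  # elapsed is roughly max(first, second)
```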

asyncio.run(main()) is the entry point for our asynchronous program. It sets up and manages the event loop, runs the main coroutine, and closes the loop when finished.

The output above shows the total time to be roughly the time of the longer task (3s), unlike the sync case, where the total was the sum of both tasks (5s).

This is how async works in Python.

A common misconception

It is tempting to think that async makes all code faster. It only helps when your code is waiting, not when it is calculating.

So when your task is CPU-bound, i.e., busy doing heavy computation like matrix multiplication, sorting large datasets, or image processing, async won’t help, because there’s nothing to “wait” for.

The CPU is actively working, not idle.
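To see why, consider this sketch with two hypothetical CPU-bound coroutines. Neither contains an await on real waiting, so the event loop never gets a chance to switch between them, and they simply run back to back.

```python
import asyncio


def busy_sum(n: int) -> int:
    """CPU-bound work: pure computation with no point at which to wait."""
    return sum(i * i for i in range(n))


async def cpu_task(name: str, n: int, log: list[str]) -> int:
    log.append(f"{name} start")
    result = busy_sum(n)  # no await here, so the event loop cannot switch tasks
    log.append(f"{name} end")
    return result


async def main(n: int = 200_000) -> list[str]:
    log: list[str] = []
    await asyncio.gather(cpu_task("A", n, log), cpu_task("B", n, log))
    return log


if __name__ == "__main__":
    print(asyncio.run(main()))
    # ['A start', 'A end', 'B start', 'B end']
```

Task B does not even start until task A has finished, so the total time is the sum of both computations, just like the sync case.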

That’s why asyncio shines in I/O-bound scenarios where the program spends most of its time waiting for an external device or network, like:

Network Requests: Making multiple concurrent API calls (e.g., using httpx or aiohttp).

Database Queries: Running several independent queries concurrently.

Message Queues: Waiting for new messages on a queue.
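As one illustration of the queue case, here is a sketch that uses the in-process `asyncio.Queue` as a stand-in for a real message broker. The `producer` and `consumer` names and the `None` sentinel are assumptions made for this example.

```python
import asyncio


async def producer(queue: asyncio.Queue, messages: list[str]) -> None:
    for msg in messages:
        await asyncio.sleep(0.01)  # simulate messages arriving over time
        await queue.put(msg)
    await queue.put(None)  # sentinel: no more messages


async def consumer(queue: asyncio.Queue) -> list[str]:
    received = []
    while True:
        msg = await queue.get()  # waits here without blocking the event loop
        if msg is None:
            break
        received.append(msg.upper())
    return received


async def main() -> list[str]:
    queue: asyncio.Queue = asyncio.Queue()
    _, received = await asyncio.gather(
        producer(queue, ["a", "b", "c"]), consumer(queue)
    )
    return received


if __name__ == "__main__":
    print(asyncio.run(main()))  # ['A', 'B', 'C']
```

While the consumer is parked on `queue.get()`, the event loop is free to run the producer or any other pending task.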

For CPU-bound work, you need multiprocessing to leverage multiple CPU cores (or a free-threaded build with the GIL disabled, which Python 3.14 officially supports as an optional build).
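One way to combine the two worlds, sketched below under the assumption that the work splits into independent chunks, is to hand CPU-bound functions to a `ProcessPoolExecutor` through `loop.run_in_executor`. The `busy_sum` and `run_cpu_tasks` names are hypothetical.

```python
import asyncio
from concurrent.futures import Executor, ProcessPoolExecutor


def busy_sum(n: int) -> int:
    """CPU-bound work; defined at module level so it can be sent to worker processes."""
    return sum(i * i for i in range(n))


async def run_cpu_tasks(inputs: list[int], executor: Executor) -> list[int]:
    loop = asyncio.get_running_loop()
    # run_in_executor returns awaitable futures; the event loop stays responsive
    futures = [loop.run_in_executor(executor, busy_sum, n) for n in inputs]
    return await asyncio.gather(*futures)


if __name__ == "__main__":
    # Each input is computed in a worker process, so the work can spread
    # across CPU cores instead of sharing one thread.
    with ProcessPoolExecutor() as pool:
        print(asyncio.run(run_cpu_tasks([10, 100, 1000], pool)))
```

Starting processes and passing data between them has a cost, so this tends to pay off only when each chunk of work is reasonably heavy.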

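Also, asyncio runs all of its coroutines on a single thread, and therefore on one CPU core at a time. It achieves concurrency (interleaving tasks) but not true parallelism (running tasks simultaneously on different cores). In standard CPython, the Global Interpreter Lock (GIL) additionally prevents multiple threads from executing Python bytecode in parallel.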

That said, the takeaway is simple.

Anytime your CPU is sitting idle while waiting on an external resource, async gives it a job. It lets you write code that makes fuller use of your system.

👉 Over to you: How do you use asyncio in Python?

Thanks for reading!
