The Most Common Mistake That PyTorch Users Make When Creating Tensors on GPUs

A hidden reason for training failure.

PyTorch is the most popular library for training deep learning models, not just on CPUs but also on GPUs.

Despite its popularity, I have noticed that many PyTorch users don’t create tensors optimally, specifically when using GPUs for model training.

As a result, it drastically increases memory usage, which, in turn, kills the training process.

Essentially, most users create PyTorch tensors on a GPU this way:

The problem is that it first creates a tensor on the CPU, and then PyTorch transfers it to the GPU.

This is slow because we unnecessarily created a tensor on the CPU, which was unnecessary.

In fact, this also increases memory usage.

Instead, the best way is to create a tensor directly on a GPU as follows:

This way, we avoid creating an unwanted tensor on the CPU.

The effectiveness is evident from the following run-time comparison:

Of course, you may argue that the run-time for the CPU→GPU approach is still tiny (in milliseconds), which is not pretty significant.

But a model may need to create millions of tensors (specifically data-related) on GPU during training.

So this difference in run-time scales pretty quickly.

In fact, this is not just about run-time.

The CPU→GPU approach also introduces memory overheads, which could have been totally avoided.

I vividly remember when I was mentoring a student, they committed the exact same mistake.

This resulted in training failures, and they could not identify the mistake.

At that time, even I was not aware of this.

Finally, some memory profiling revealed the cause of training failure.

The point is that this could be one of those hidden reasons for model training failure.

You may never catch this mistake if you are not aware of it.

And when you are training a memory-intensive model, it is totally worth it to fight for even a few MBs of memory.

So is this the only way to optimize neural networks?

Of course not!

We discussed a couple of more techniques in this newsletter before:

Gradient Accumulation: Increase Batch Size Without Explicitly Increasing Batch Size

Under memory constraints, it is always recommended to train the neural network with a small batch size. Despite that, there’s a technique called gradient accumulation, which lets us (logically) increase batch size without explicitly increasing the batch size.

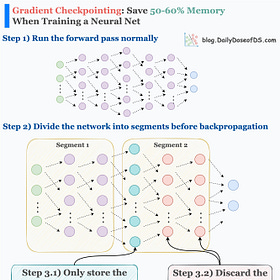

Gradient Checkpointing: Save 50-60% Memory When Training a Neural Network

Neural networks primarily use memory in two ways: Storing model weights During training: Forward pass to compute and store activations of all layers Backward pass to compute gradients at each layer This restricts us from training larger models and also limits the max batch size that can potentially fit into memory.

There’s also a full deep dive, which discusses four more actively used techniques in the industry to optimize deep learning models: Model Compression: A Critical Step Towards Efficient Machine Learning

👉 Over to you: What are some other ways to optimize neural network training?

👉 If you liked this post, don’t forget to leave a like ❤️. It helps more people discover this newsletter on Substack and tells me that you appreciate reading these daily insights.

The button is located towards the bottom of this email.

Thanks for reading!

Latest full articles

If you’re not a full subscriber, here’s what you missed last month:

You Cannot Build Large Data Projects Until You Learn Data Version Control!

Why Bagging is So Ridiculously Effective At Variance Reduction?

Sklearn Models are Not Deployment Friendly! Supercharge Them With Tensor Computations.

Deploy, Version Control, and Manage ML Models Right From Your Jupyter Notebook with Modelbit

Gaussian Mixture Models (GMMs): The Flexible Twin of KMeans.

To receive all full articles and support the Daily Dose of Data Science, consider subscribing:

👉 Tell the world what makes this newsletter special for you by leaving a review here :)

👉 If you love reading this newsletter, feel free to share it with friends!

Wow, that's an awesome hint. So many stuff you write an deliberatly share which one would not find in other sources. Thanks!