The Most Overlooked Problem With Imputing Missing Values Using Zero (or Mean)

...and here's what you should try instead.

Replacing (imputing) missing values with the mean, zero, or any other fixed value:

- alters summary statistics
- changes the distribution
- inflates the presence of a specific value

This can lead to:

- inaccurate modeling
- incorrect conclusions, and more.
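To see the distortion in numbers, here is a small sketch on synthetic data. The column, the 30% missing rate, and the random seed are all made up for illustration; only NumPy is assumed.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic column: 1,000 values drawn from N(50, 10^2) (illustrative only)
values = rng.normal(loc=50.0, scale=10.0, size=1_000)

# Hide roughly 30% of the entries completely at random
mask = rng.random(values.size) < 0.3
observed = values[~mask]

# Fixed-value imputation: every gap gets the same number
mean_filled = np.where(mask, observed.mean(), values)
zero_filled = np.where(mask, 0.0, values)

for name, col in [
    ("original", values),
    ("mean-filled", mean_filled),
    ("zero-filled", zero_filled),
]:
    print(f"{name:12s} mean={col.mean():7.2f}  std={col.std():6.2f}")

# How many entries now share one exact value after mean imputation
n_repeated = int((mean_filled == observed.mean()).sum())
print("entries equal to the imputed mean:", n_repeated)
```

Mean imputation keeps the mean of the observed values but shrinks the spread, zero imputation drags the mean down, and both pile hundreds of rows onto a single value.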

Instead, try to impute missing values more precisely, using the information available in the other features.

kNN imputer is often a great choice if your data is missing at random (MAR).

It imputes missing values using the k-Nearest Neighbors algorithm.

Missing features are imputed by running a kNN on non-missing feature values.
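A minimal sketch of what this looks like with scikit-learn's `KNNImputer`. The toy matrix is made up for illustration, and `n_neighbors=2` is an arbitrary choice, not a recommendation.

```python
import numpy as np
from sklearn.impute import KNNImputer

# Toy data: rows are samples, columns are features, np.nan marks a gap
X = [
    [1, 2, np.nan],
    [3, 4, 3],
    [np.nan, 6, 5],
    [8, 8, 7],
]

# Each gap is filled with the mean of that feature over the 2 nearest rows,
# where distance is computed only on features both rows have observed
imputer = KNNImputer(n_neighbors=2)
X_filled = imputer.fit_transform(X)

print(X_filled)
# [[1.  2.  4. ]
#  [3.  4.  3. ]
#  [5.5 6.  5. ]
#  [8.  8.  7. ]]
```

Because neighbors are found by distance, features on very different scales may need scaling first, otherwise the largest-scale feature dominates the neighbor search.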

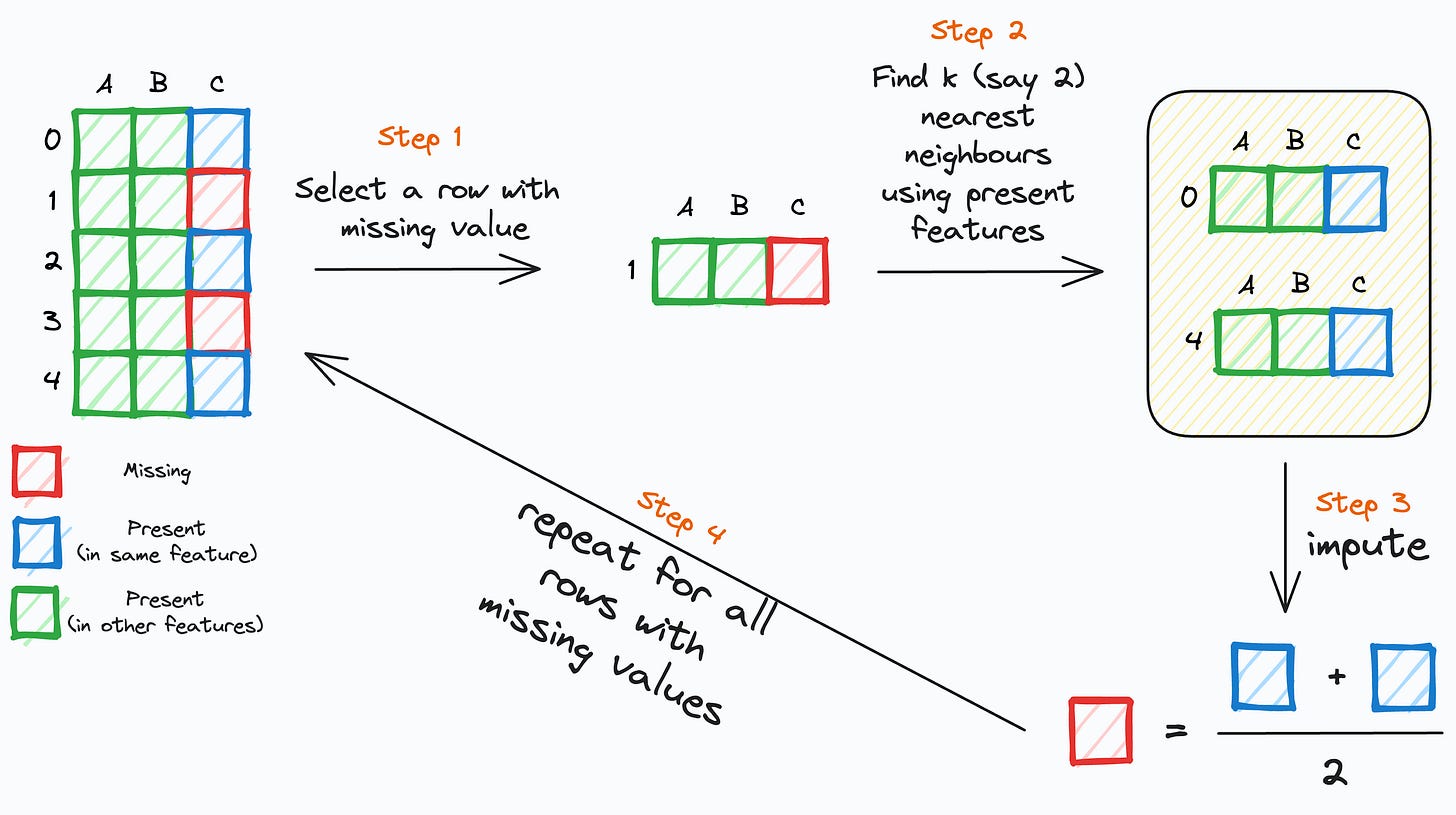

The following depicts how it works:

Step 1: Select a row (r) with a missing value.

Step 2: Find its k nearest neighbors using the non-missing feature values.

Step 3: Impute the missing feature of the row (r) using the corresponding non-missing values of k nearest neighbor rows.

Step 4: Repeat for all rows with missing values.
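The four steps can be sketched from scratch in NumPy. This is an illustrative helper (`knn_impute` is a hypothetical name, not a library function), and it makes two assumptions: distances use only the features both rows have observed, rescaled by the share of features used, and the imputed value is the plain mean over the k neighbors.

```python
import numpy as np

def knn_impute(X, k=2):
    """Illustrative kNN imputation; a sketch, not a library implementation."""
    X = np.asarray(X, dtype=float)
    filled = X.copy()
    missing = np.isnan(X)
    n_features = X.shape[1]

    # Steps 1 and 4: loop over every row r that has at least one gap
    for r in np.flatnonzero(missing.any(axis=1)):
        # Step 2: distance from r to every row, on features both have observed
        shared = ~missing[r] & ~missing
        n_shared = shared.sum(axis=1)
        sq_diff = np.where(shared, (X - X[r]) ** 2, 0.0)
        with np.errstate(divide="ignore", invalid="ignore"):
            dist = np.sqrt(sq_diff.sum(axis=1) * n_features / n_shared)
        dist[n_shared == 0] = np.inf  # nothing in common, cannot compare
        dist[r] = np.inf              # a row is not its own neighbor

        # Step 3: fill each gap from the k nearest rows that have that feature
        for j in np.flatnonzero(missing[r]):
            donors = np.flatnonzero(~missing[:, j] & np.isfinite(dist))
            if donors.size == 0:
                continue  # no usable neighbor, leave the gap as NaN
            nearest = donors[np.argsort(dist[donors])[:k]]
            filled[r, j] = X[nearest, j].mean()
    return filled

X = [
    [1, 2, np.nan],
    [3, 4, 3],
    [np.nan, 6, 5],
    [8, 8, 7],
]
result = knn_impute(X, k=2)
print(result)
```

Neighbors are always searched in the original data, so an imputed value is never used to impute another one.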

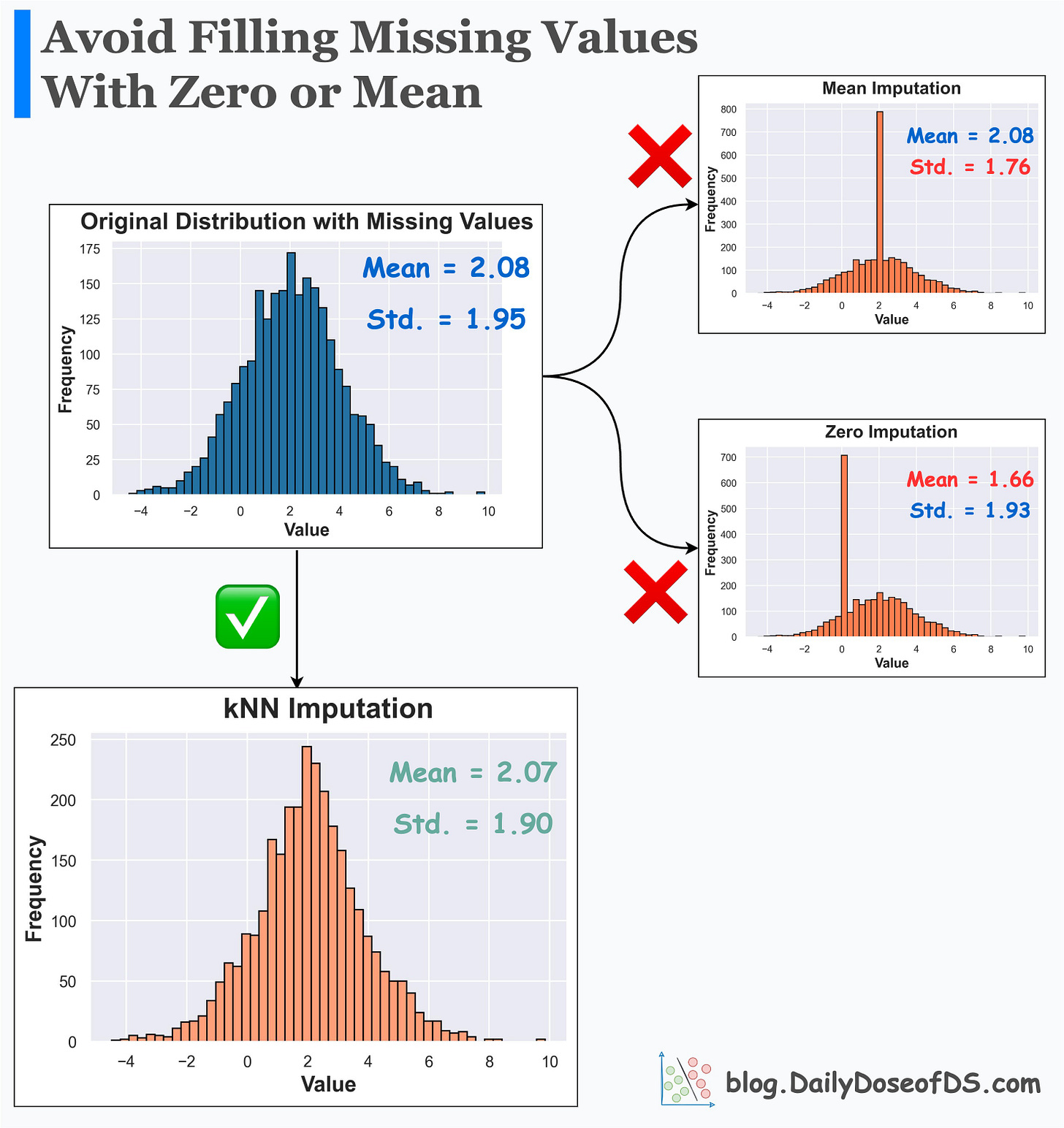

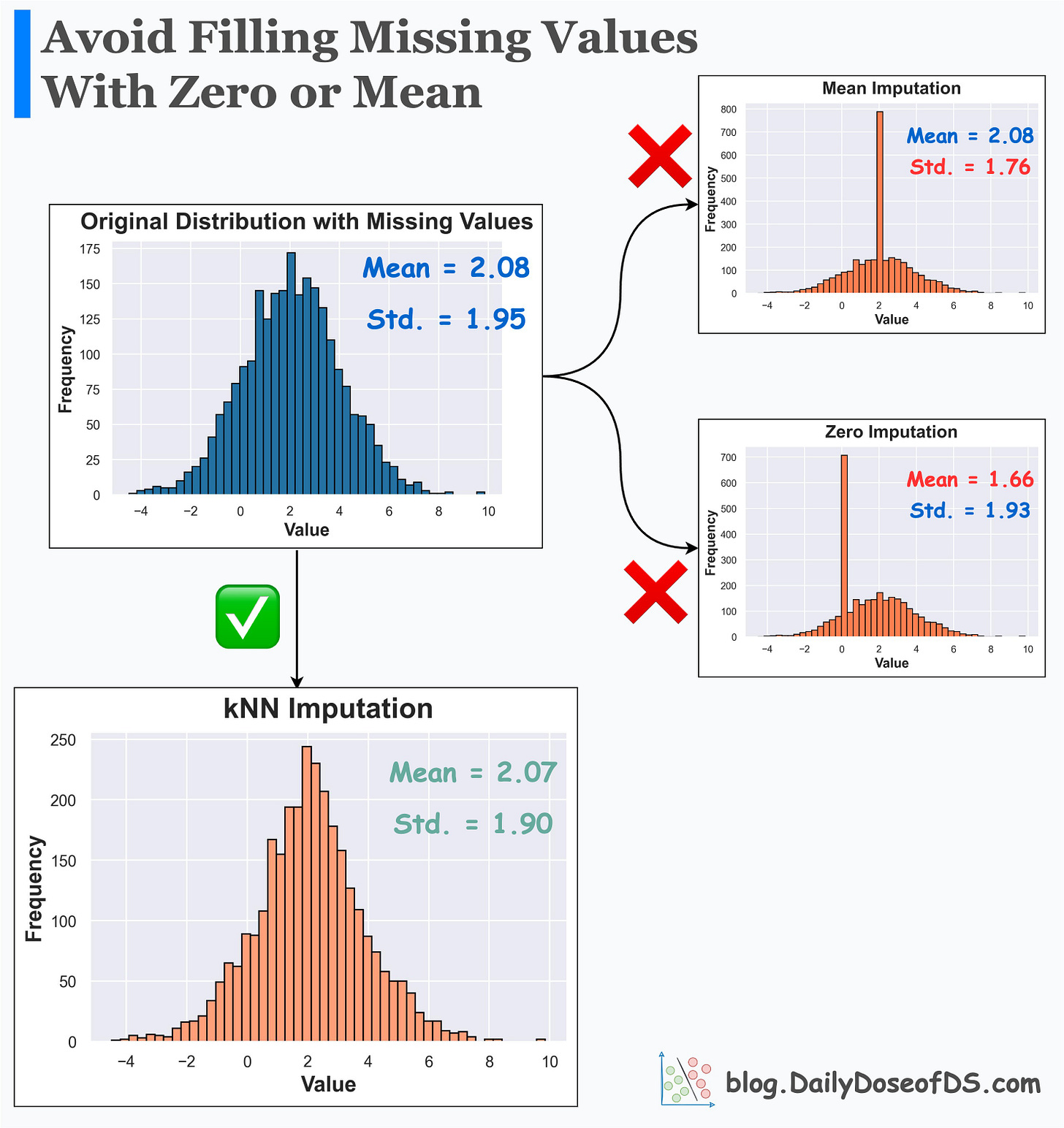

Its effectiveness over Mean/Zero imputation is evident from the image below.

Mean/zero imputation alters the summary statistics and the distribution.

The kNN imputer largely preserves them.
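The same comparison can be checked in code. The sketch below uses synthetic data where the second feature depends strongly on the first (an assumption that favors kNN), hides about 30% of that feature at random, and compares the standard deviation after each kind of imputation. The data, seed, and `n_neighbors=5` are illustrative choices.

```python
import numpy as np
from sklearn.impute import KNNImputer, SimpleImputer

rng = np.random.default_rng(42)
n = 1_000

# Two correlated features (synthetic, for illustration only)
x1 = rng.normal(0.0, 1.0, n)
x2 = 2.0 * x1 + rng.normal(0.0, 0.3, n)
X_true = np.column_stack([x1, x2])

# Hide roughly 30% of the second feature at random
X_missing = X_true.copy()
mask = rng.random(n) < 0.3
X_missing[mask, 1] = np.nan

X_mean = SimpleImputer(strategy="mean").fit_transform(X_missing)
X_knn = KNNImputer(n_neighbors=5).fit_transform(X_missing)

true_std = X_true[:, 1].std()
mean_std = X_mean[:, 1].std()
knn_std = X_knn[:, 1].std()

# Error on the hidden entries only, against the values we removed
rmse_mean = np.sqrt(np.mean((X_mean[mask, 1] - X_true[mask, 1]) ** 2))
rmse_knn = np.sqrt(np.mean((X_knn[mask, 1] - X_true[mask, 1]) ** 2))

print(f"std   true={true_std:.3f}  mean-imputed={mean_std:.3f}  kNN-imputed={knn_std:.3f}")
print(f"RMSE  mean-imputed={rmse_mean:.3f}  kNN-imputed={rmse_knn:.3f}")
```

How much kNN helps depends on how informative the other features are. If the features were unrelated, the neighbors would carry little signal and the gap between the two methods would narrow.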

Get started with kNN imputer: Sklearn Docs.

👉 Over to you: What are some other better ways to impute missing values?


Thanks for reading!
