Try This If Your Linear Regression Model is Underperforming

Change the model or alter the data.

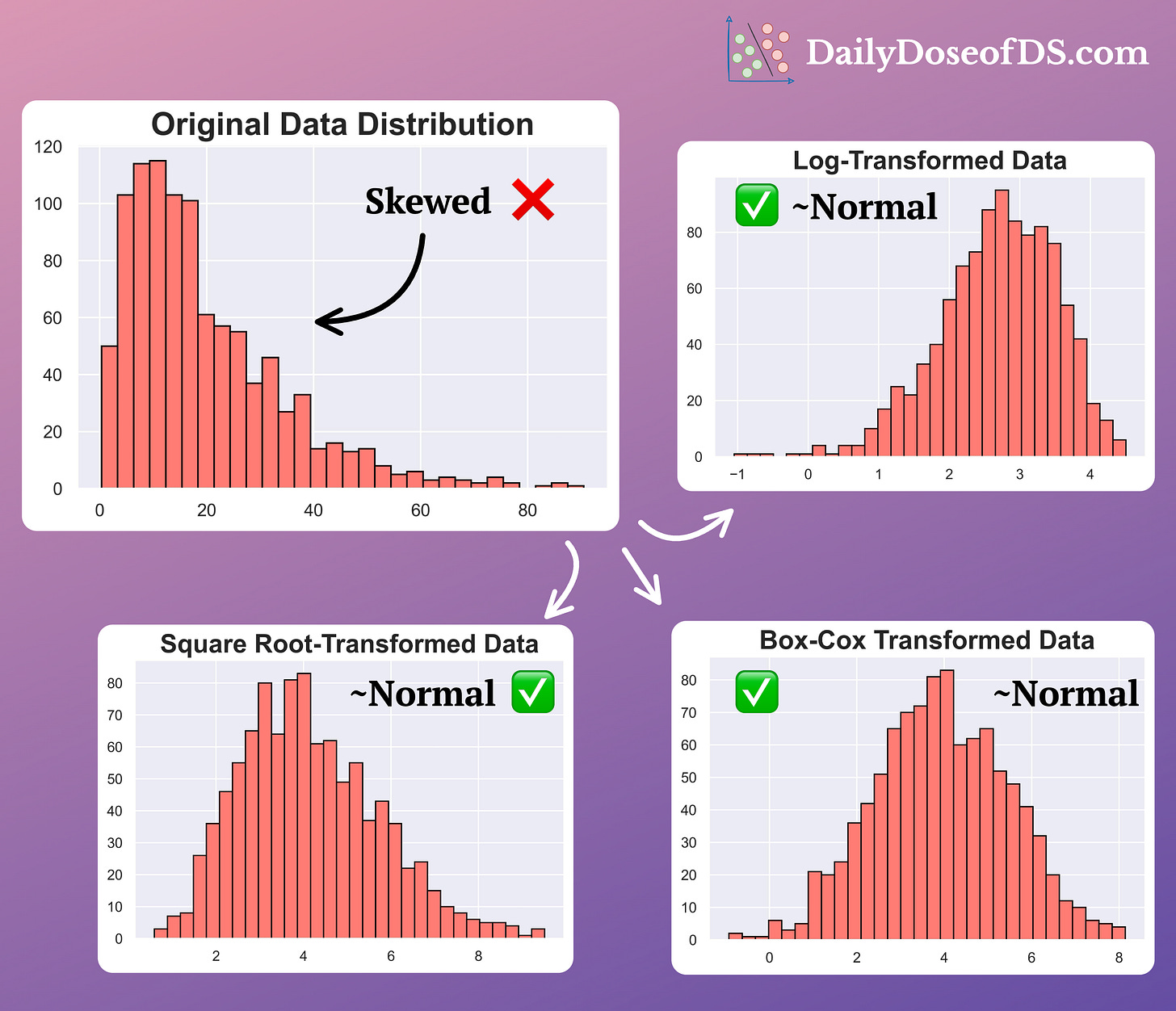

Skewness in your data can hurt the predictive power of a linear regression model. A long tail produces a handful of extreme values that pull the fitted line disproportionately.

So if the model is underperforming, skewness is one of the first things worth checking.

There are two broad ways to handle this: try a different model, or transform the skewed variable.

The most commonly used transformations are:

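Log transform: Apply the logarithm. It requires strictly positive values.

Sqrt transform: Apply the square root. It requires non-negative values and is milder than the log.

Box-Cox transform: A power transform whose parameter is estimated from the data. It requires strictly positive values and is available in SciPy as `scipy.stats.boxcox`.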
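Here is a minimal sketch of how the three transformations could be applied and compared. The log-normal sample is a hypothetical stand-in for a skewed variable (it is not the data behind the image), and the variable names are my own.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)

# Hypothetical right-skewed, strictly positive sample (log-normal).
x = rng.lognormal(mean=0.0, sigma=1.0, size=5_000)

x_log = np.log(x)    # needs x > 0 (np.log1p is a common choice when zeros are present)
x_sqrt = np.sqrt(x)  # needs x >= 0

# With no lambda supplied, boxcox estimates it by maximum likelihood
# and returns both the transformed data and the fitted lambda.
x_boxcox, lam = stats.boxcox(x)

# Sample skewness: values near 0 indicate a roughly symmetric distribution.
skewness = {
    "original": stats.skew(x),
    "log": stats.skew(x_log),
    "sqrt": stats.skew(x_sqrt),
    "box-cox": stats.skew(x_boxcox),
}

for name, value in skewness.items():
    print(f"{name:>8}: skewness = {value:.2f}")
print(f"Box-Cox lambda: {lam:.2f}")
```

On your own data, check the skewness (or a histogram) after each transform rather than assuming one of them will always win.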

The effectiveness of the three transformations is clear from the image above.

Applying them turns the skewed variable into one that is (somewhat) normally distributed.
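To tie this back to the regression itself, here is a small sketch on synthetic data. Note the assumption: the data is deliberately constructed so that the target depends linearly on the log of a skewed feature, which means the log transform is guaranteed to help here. Real data may behave differently. The helper `r_squared` is a hypothetical name of my own.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical data: a right-skewed feature whose log is linearly
# related to the target (an assumption made for illustration only).
x = rng.lognormal(mean=0.0, sigma=1.0, size=2_000)
y = 3.0 * np.log(x) + rng.normal(scale=0.5, size=x.size)


def r_squared(feature, target):
    """Fit target ~ slope * feature + intercept by least squares and return R^2."""
    slope, intercept = np.polyfit(feature, target, deg=1)
    residuals = target - (slope * feature + intercept)
    return 1.0 - residuals.var() / target.var()


r2_raw = r_squared(x, y)          # straight line fitted on the skewed feature
r2_log = r_squared(np.log(x), y)  # same model after a log transform

print(f"R^2 with raw feature: {r2_raw:.3f}")
print(f"R^2 with log feature: {r2_log:.3f}")
```

The model is the same in both fits. Only the feature changes, which is why a transformation is often the cheapest thing to try before switching models.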

👉 Over to you: What are some other methods to handle skewness that you are aware of?
