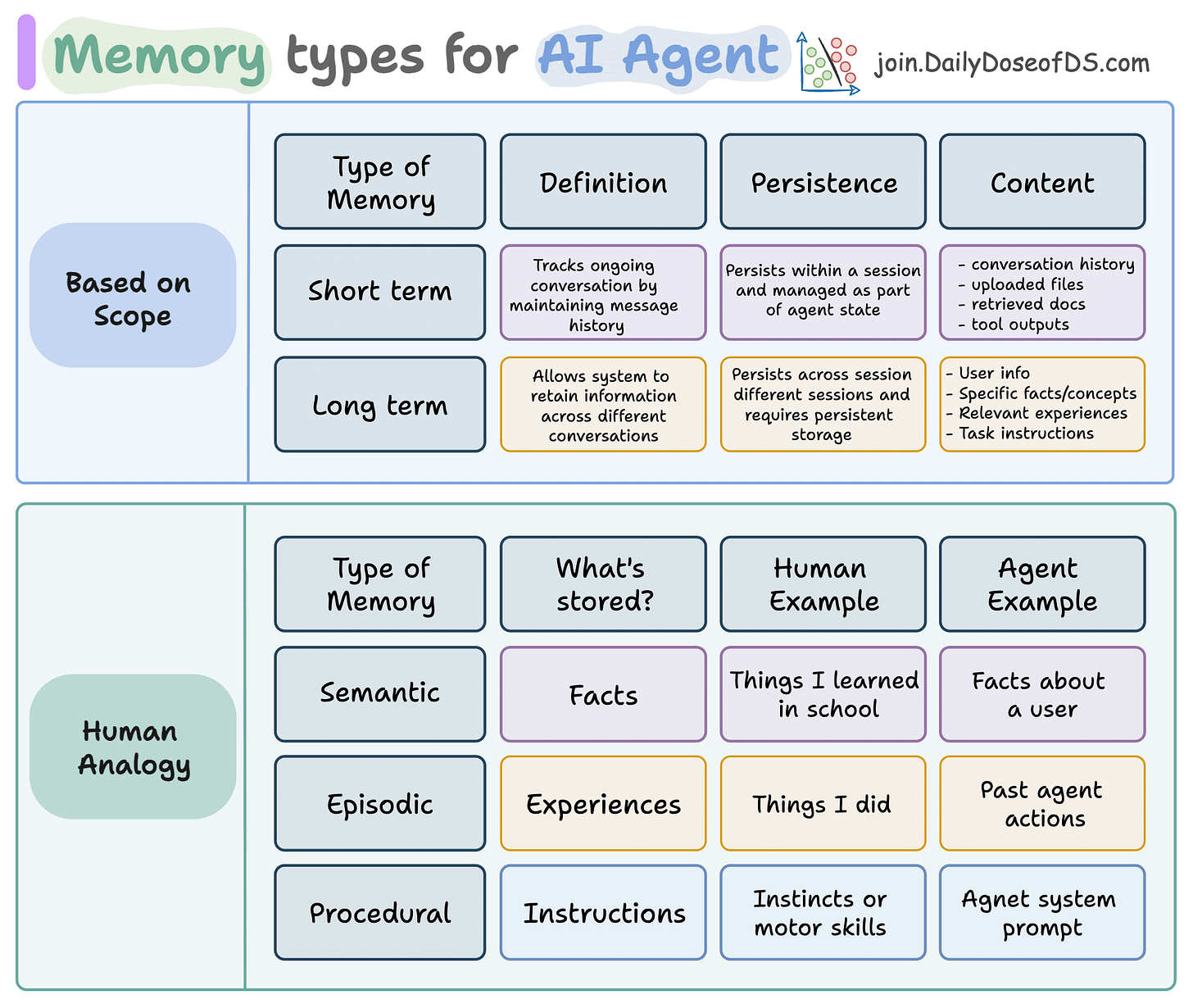

Types of Memory in AI Agents

...explained with examples.

Native RAG over Video in just 5 lines of code!

Most RAG systems stop at text.

But a lot of valuable context lives in spoken words and visuals (calls, interviews, demos, lectures), and it’s tough to build production-grade RAG solutions on them.

Here’s how you can do that in just 5 lines of code with Ragie:

Ingest .mp4, .wav, .mkv, or 10+ formats.

Run a natural language query like “Moments when Messi scored a goal?”

Get the exact timestamp + streamable clip that answers it.

Here’s one of our runs where we gave it a goal compilation video, and it retrieved the correct response:

Top image: ARG vs CRO. It determined there’s a penalty and retrieved the score before and after the penalty.

Bottom image: ARG vs MEX. It accurately fetched the score before the goal and how the goal was scored.

Start building RAG over audio and video here →

Types of Memory in AI Agents

Agents without memory aren’t agents at all.

We often assume LLMs remember things because they feel human in conversation. But the truth is that LLMs are stateless: each call sees only the input it is given.

If you want your agent to recall anything (past chats, preferences, behaviors), you have to build memory into it.
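To make the stateless point concrete, here is a minimal sketch. `stateless_llm` is a hypothetical stand-in for a real model call (not any library's API); the only thing it can use is the list of messages passed to it.

```python
from typing import Dict, List, Optional

Message = Dict[str, str]

def stateless_llm(messages: List[Message]) -> str:
    """Hypothetical stand-in for an LLM call: it sees ONLY `messages`."""
    # Look for a name the user stated anywhere in the provided messages.
    name: Optional[str] = None
    for m in messages:
        if m["role"] == "user" and m["content"].startswith("My name is "):
            name = m["content"][len("My name is "):].rstrip(".")
    if messages[-1]["content"] == "What is my name?":
        return f"Your name is {name}." if name else "I don't know your name."
    return "Nice to meet you!"

# Two independent calls: the second has no access to the first.
stateless_llm([{"role": "user", "content": "My name is Ada."}])
forgot = stateless_llm([{"role": "user", "content": "What is my name?"}])

# Same question, but the caller resends the history on every turn.
history: List[Message] = [{"role": "user", "content": "My name is Ada."}]
history.append({"role": "assistant", "content": stateless_llm(history)})
history.append({"role": "user", "content": "What is my name?"})
remembered = stateless_llm(history)

print(forgot)
print(remembered)
```

The model function is identical in both cases. The only difference is what the caller chose to pass in, and that is the part an agent's memory layer has to manage.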

But how do you do that?

Let’s understand this step-by-step!

Agent memory comes in two scopes:

Short-term: Handles current conversations. Maintains message history, context, and state across a session.

Long-term: Spans multiple sessions. Remembers preferences, past actions, and user-specific facts.
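The two scopes above can be sketched as follows. This is an illustrative toy, not how any particular framework does it: the `AgentMemory` class, its method names, and the in-memory dictionaries are all assumptions made for the example (a real system would persist long-term memory in a database).

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List

class AgentMemory:
    """Toy memory with a per-session buffer and a per-user persistent store."""

    def __init__(self, max_turns: int = 4) -> None:
        self.max_turns = max_turns
        # Short-term: recent messages, keyed by session, dropped at session end.
        self.short_term: Dict[str, Deque[str]] = {}
        # Long-term: user-specific facts, keyed by user, kept across sessions.
        self.long_term: Dict[str, Dict[str, str]] = defaultdict(dict)

    def start_session(self, session_id: str) -> None:
        self.short_term[session_id] = deque(maxlen=self.max_turns)

    def add_message(self, session_id: str, text: str) -> None:
        # deque(maxlen=...) silently evicts the oldest message when full.
        self.short_term[session_id].append(text)

    def end_session(self, session_id: str) -> None:
        self.short_term.pop(session_id, None)

    def remember(self, user_id: str, key: str, value: str) -> None:
        self.long_term[user_id][key] = value

    def context(self, user_id: str, session_id: str) -> List[str]:
        """What the agent would place in front of the LLM for this turn."""
        facts = [f"{k}: {v}" for k, v in self.long_term[user_id].items()]
        return facts + list(self.short_term.get(session_id, []))

memory = AgentMemory(max_turns=2)

# Session 1: the user states a preference, which is saved long-term.
memory.start_session("s1")
memory.add_message("s1", "user: I prefer metric units.")
memory.remember("u1", "units", "metric")
memory.end_session("s1")

# Session 2: the old messages are gone, but the preference survives.
memory.start_session("s2")
memory.add_message("s2", "user: How far is a marathon?")
ctx = memory.context("u1", "s2")
print(ctx)
```

The session buffer is bounded on purpose: short-term memory has to fit in the context window, while long-term memory only contributes the facts that were explicitly saved.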

But there’s more.

Just like humans, long-term memory in agents can be:

Semantic → Stores facts and knowledge

Episodic → Recalls past experiences or task completions

Procedural → Learns how to do things (think: internalized prompts/instructions)

This memory isn’t just nice-to-have; it enables agents to learn from past interactions without retraining the model.

This is especially powerful for continual learning: letting agents adapt to new tasks without touching LLM weights.
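Here is a rough sketch of the three long-term memory types and of how they let an agent adapt without touching model weights. The `Episode` and `LongTermMemory` classes and the prompt layout are assumptions made for illustration; they are not taken from Zep or any other framework.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Episode:
    task: str
    outcome: str

@dataclass
class LongTermMemory:
    semantic: Dict[str, str] = field(default_factory=dict)   # facts and knowledge
    episodic: List[Episode] = field(default_factory=list)    # past experiences
    procedural: List[str] = field(default_factory=list)      # how-to instructions

    def build_prompt(self, task: str) -> str:
        """Assemble the text an LLM would receive for `task`."""
        lines = ["Instructions:"] + [f"- {rule}" for rule in self.procedural]
        lines += ["Known facts:"] + [f"- {k}: {v}" for k, v in self.semantic.items()]
        # Naive recall: exact task match. Real systems typically use search.
        related = [e for e in self.episodic if e.task == task]
        lines += ["Past attempts:"] + [f"- {e.task} -> {e.outcome}" for e in related]
        lines.append(f"Task: {task}")
        return "\n".join(lines)

memory = LongTermMemory()
memory.semantic["user_timezone"] = "UTC+1"
memory.procedural.append("Confirm before sending emails.")
memory.episodic.append(Episode(task="schedule meeting", outcome="user rejected 8am slot"))

prompt = memory.build_prompt("schedule meeting")
print(prompt)
```

Every new fact, episode, or instruction changes the next prompt, so the agent's behavior shifts while the model itself stays frozen.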

All this is actually implemented in Zep, a popular open-source framework (18k+ stars), which provides a real-time, graph-based, human-like memory system for your agents.

You can see the full implementation and try it yourself.

Here’s the repo → (don’t forget to star it)

Also, in Part 8 and Part 9 of the Agents’ crash course, we covered 5 types of memory for AI agents, with implementations.

Thanks for reading!

P.S. For those wanting to develop “Industry ML” expertise:

At the end of the day, all businesses care about impact. That’s it!

Can you reduce costs?

Drive revenue?

Can you scale ML models?

Predict trends before they happen?

We have discussed several other topics (with implementations) that align with these goals.

Here are some of them:

Learn everything about MCPs in this crash course with 9 parts →

Learn how to build Agentic systems in a crash course with 14 parts.

Learn how to build real-world RAG apps and evaluate and scale them in this crash course.

Learn sophisticated graph architectures and how to train them on graph data.

So many real-world NLP systems rely on pairwise context scoring. Learn scalable approaches here.

Learn how to run large models on small devices using Quantization techniques.

Learn how to generate prediction intervals or sets with strong statistical guarantees, and so increase trust in your models, using Conformal Prediction.

Learn how to identify causal relationships and answer business questions using causal inference in this crash course.

Learn how to scale and implement ML model training in this practical guide.

Learn techniques to reliably test new models in production.

Learn how to build privacy-first ML systems using Federated Learning.

Learn 6 techniques with implementation to compress ML models.

All these resources will help you cultivate key skills that businesses and companies care about the most.