Updates to the AG-UI Protocol

Next big thing after MCP and A2A.

We covered the Agent-User Interaction Protocol (AG-UI) last month. It serves as the missing link in the protocol stack:

MCP connects Agents to Tools.

A2A connects Agents to other Agents.

AG-UI connects Agents to Users.

Despite being new, it quickly became the interaction backbone for many agentic apps, gaining ~4,000 stars on GitHub in a couple of weeks.

Yesterday, several key updates were made to AG-UI.

Let’s understand them!

A quick note

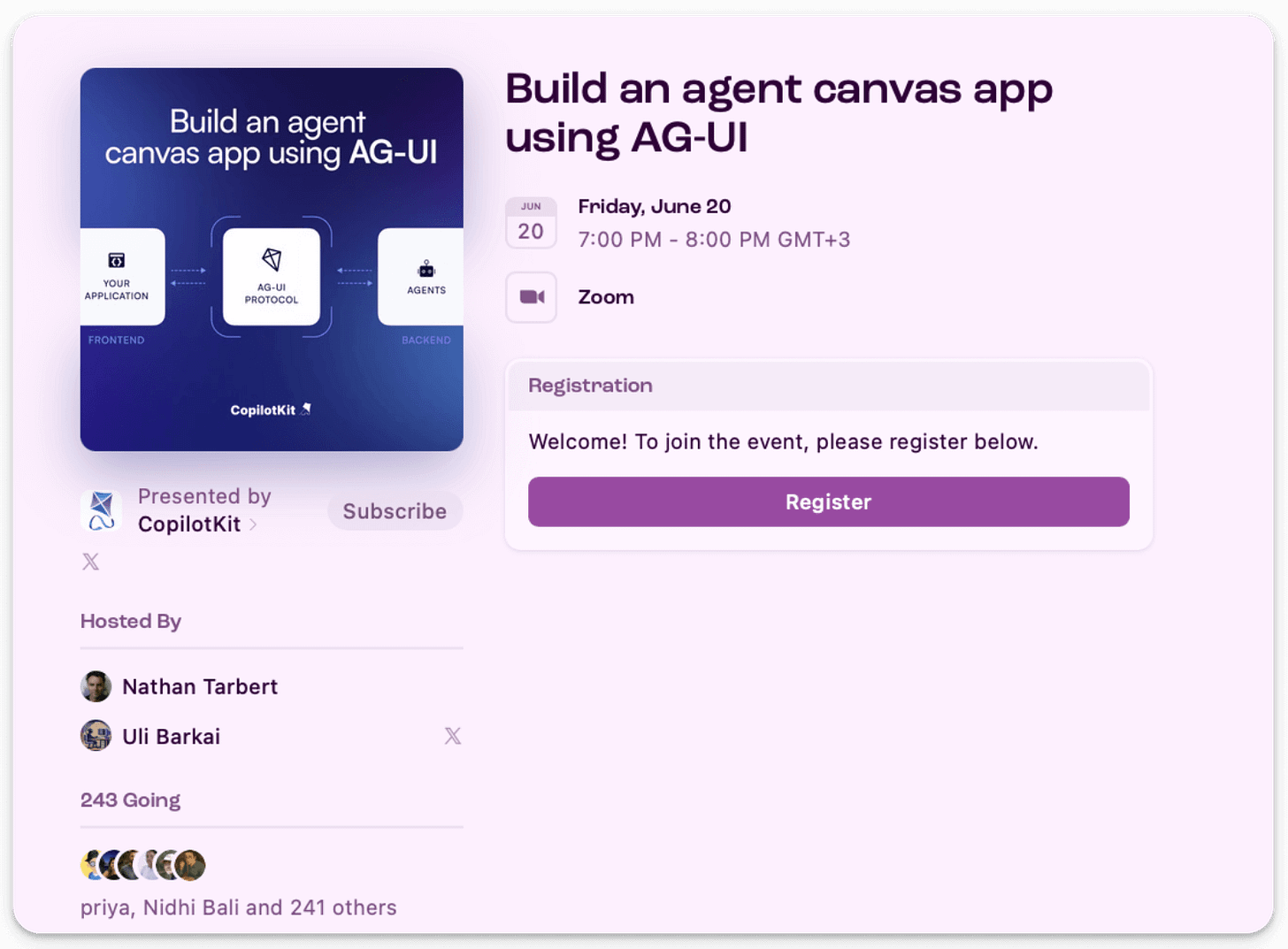

The AG-UI team is organizing a free hands-on online workshop where you can learn how to build an agent app using AG-UI:

You can register for the session for free here →

The problem (and a quick recap)

Frameworks like LangGraph and CrewAI let you build powerful multi-step agentic workflows.

But when you bring that Agent into a real-world app, you want to:

Stream LLM responses token by token, without building a custom WebSocket layer.

Display tool execution progress in real time and pause for human feedback, without blocking or losing context.

Sync large, changing objects (like tables) without re-sending everything to the UI.

Let users interrupt, cancel, or reply in the middle of an agent run, without losing context.
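To make the third requirement concrete, here is a minimal sketch of syncing a large table by sending only what changed. The `apply_delta` helper and its operation format are hypothetical and simplified for illustration; they are not the AG-UI wire format.

```python
import copy
import json


def apply_delta(state, ops):
    """Apply simplified patch operations to a copy of `state`.

    Each op is a dict like:
      {"op": "replace" | "add" | "remove", "path": [key, ...], "value": ...}
    This format is an assumption made for this example.
    """
    new_state = copy.deepcopy(state)
    for op in ops:
        *parents, last = op["path"]
        target = new_state
        for key in parents:  # walk down to the container that holds the target
            target = target[key]
        if op["op"] == "remove":
            del target[last]
        elif op["op"] == "add" and isinstance(target, list):
            target.insert(last, op["value"])
        else:  # "replace", or "add" on a dict
            target[last] = op["value"]
    return new_state


# A 1,000-row table where a single cell changes.
table = {"rows": [{"id": i, "status": "pending"} for i in range(1000)]}
delta = [{"op": "replace", "path": ["rows", 42, "status"], "value": "done"}]
updated = apply_delta(table, delta)

full_size = len(json.dumps(updated))
delta_size = len(json.dumps(delta))
print(f"full snapshot: {full_size} bytes, delta: {delta_size} bytes")
```

The delta is a small fraction of the full snapshot, which is the whole point: the UI keeps its own copy of the state and only receives the changes.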

Moreover, if you use LangGraph, the front-end ends up implementing custom WebSocket logic, messy JSON formats, and UI adapters that are specific to LangGraph.

If you later migrate to CrewAI, all of that has to be rewritten.

AG-UI (Agent-User Interaction Protocol) is an open-source protocol that standardizes the interaction layer between backend agents and frontend UIs.

This allows you to write your backend logic once, hook it into AG-UI, and everything just works:

LangGraph, CrewAI, Mastra, etc. can all emit AG-UI events.

UIs can be built using CopilotKit components or your own React stack.

What’s New in AG-UI?

From 4 to 16 Event Types

AG-UI now defines 16 standardized event types (up from 4), covering everything an agent might do during its lifecycle. These include:

TEXT_MESSAGE_CONTENT for token streaming

TOOL_CALL_START and TOOL_CALL_END for real-time tool tracking

STATE_DELTA for shared state updates

AGENT_HANDOFF to transfer control between agents

This makes AG-UI expressive enough for complex, interruptible, real-time apps, well beyond simple chat UIs.
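Here is a rough sketch of how a frontend might consume a typed event stream like this. The event type names come from the list above; the field names (`message_id`, `tool_call_id`, `tool_name`, `delta`), the `run_agent` generator, and the shallow-merge handling of STATE_DELTA are assumptions for illustration, not the official AG-UI schema.

```python
def run_agent(prompt):
    """Hypothetical agent run that yields events as plain dicts.

    `prompt` is unused here; a real agent would act on it.
    """
    yield {"type": "TOOL_CALL_START", "tool_call_id": "t1", "tool_name": "search"}
    yield {"type": "TOOL_CALL_END", "tool_call_id": "t1"}
    for token in ["The ", "answer ", "is ", "42."]:
        yield {"type": "TEXT_MESSAGE_CONTENT", "message_id": "m1", "delta": token}
    yield {"type": "STATE_DELTA", "delta": {"answered": True}}


def reduce_events(events):
    """Fold a stream of events into the state a UI would render."""
    ui = {"messages": {}, "running_tools": {}, "finished_tools": [], "state": {}}
    for event in events:
        kind = event["type"]
        if kind == "TEXT_MESSAGE_CONTENT":
            # Append each token to the message it belongs to.
            mid = event["message_id"]
            ui["messages"][mid] = ui["messages"].get(mid, "") + event["delta"]
        elif kind == "TOOL_CALL_START":
            ui["running_tools"][event["tool_call_id"]] = event["tool_name"]
        elif kind == "TOOL_CALL_END":
            name = ui["running_tools"].pop(event["tool_call_id"])
            ui["finished_tools"].append(name)
        elif kind == "STATE_DELTA":
            # Simplification: treat the delta as a shallow dict merge.
            ui["state"].update(event["delta"])
    return ui


ui = reduce_events(run_agent("What is the answer?"))
print(ui["messages"]["m1"])  # The answer is 42.
```

Because every event carries a type, the UI can dispatch on it and update only the relevant part of the screen: the message bubble, the tool progress indicator, or the shared state.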

Ecosystem growth

LangGraph, CrewAI, Mastra, AG2, Agno, and LlamaIndex all now support AG-UI.

With the adapter architecture, even legacy agents can emit AG-UI-compatible events without deep rewrites.
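The adapter idea can be sketched as a thin wrapper around an existing agent. Everything below is hypothetical: `legacy_agent` stands in for an agent that only returns a final string, and `as_event_stream` is an illustrative helper, not part of any AG-UI SDK. The event field names are likewise assumptions.

```python
import re


def legacy_agent(question):
    """Stand-in for an existing agent that returns one final string."""
    return f"Echo: {question}"


def as_event_stream(agent_fn, question, message_id="m1"):
    """Wrap a blocking agent call so it yields protocol-style events.

    The agent itself is untouched; only its output is re-expressed
    as a sequence of TEXT_MESSAGE_CONTENT events.
    """
    reply = agent_fn(question)
    # Split into word-sized chunks (keeping whitespace) to mimic streaming.
    for chunk in re.findall(r"\S+\s*", reply):
        yield {
            "type": "TEXT_MESSAGE_CONTENT",
            "message_id": message_id,
            "delta": chunk,
        }


events = list(as_event_stream(legacy_agent, "hello world"))
rebuilt = "".join(event["delta"] for event in events)
print(rebuilt)  # Echo: hello world
```

The wrapper does not make the legacy agent truly incremental, since it still waits for the full reply, but the frontend sees the same event shape it would get from a natively streaming agent.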

Once the frontend speaks AG-UI, it’s automatically compatible with every supported agent framework.

AG-UI is becoming the standard interaction layer for the agent ecosystem.

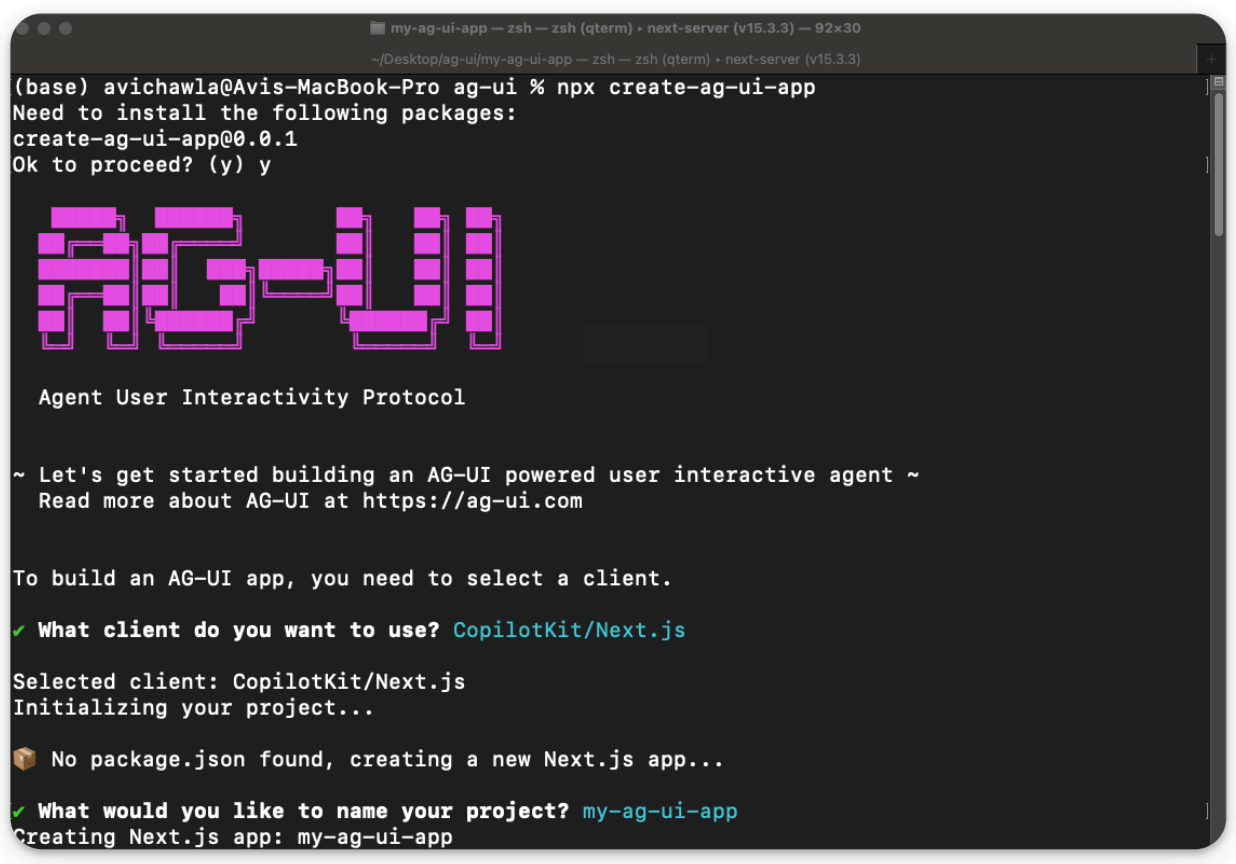

You can try this out with a single command:

npx create-ag-ui-app

It spins up a fully functional starter with:

Agent backend

Streaming frontend UI

Built-in support for shared state, tool calling, and event-based updates

AG-UI lets Agents do more than just reply with text, and makes them feel like part of the app.

The team is also organizing a free hands-on online workshop where you can learn how to build an agent canvas app using AG-UI:

You can register for the session for free here →

Here are some more resources to learn more about AG-UI:

Thanks for reading!
