Visual Guide to Model Context Protocol (MCP)

...and understanding API vs. MCP.

Augment Code: AI coding assistant for real developer work

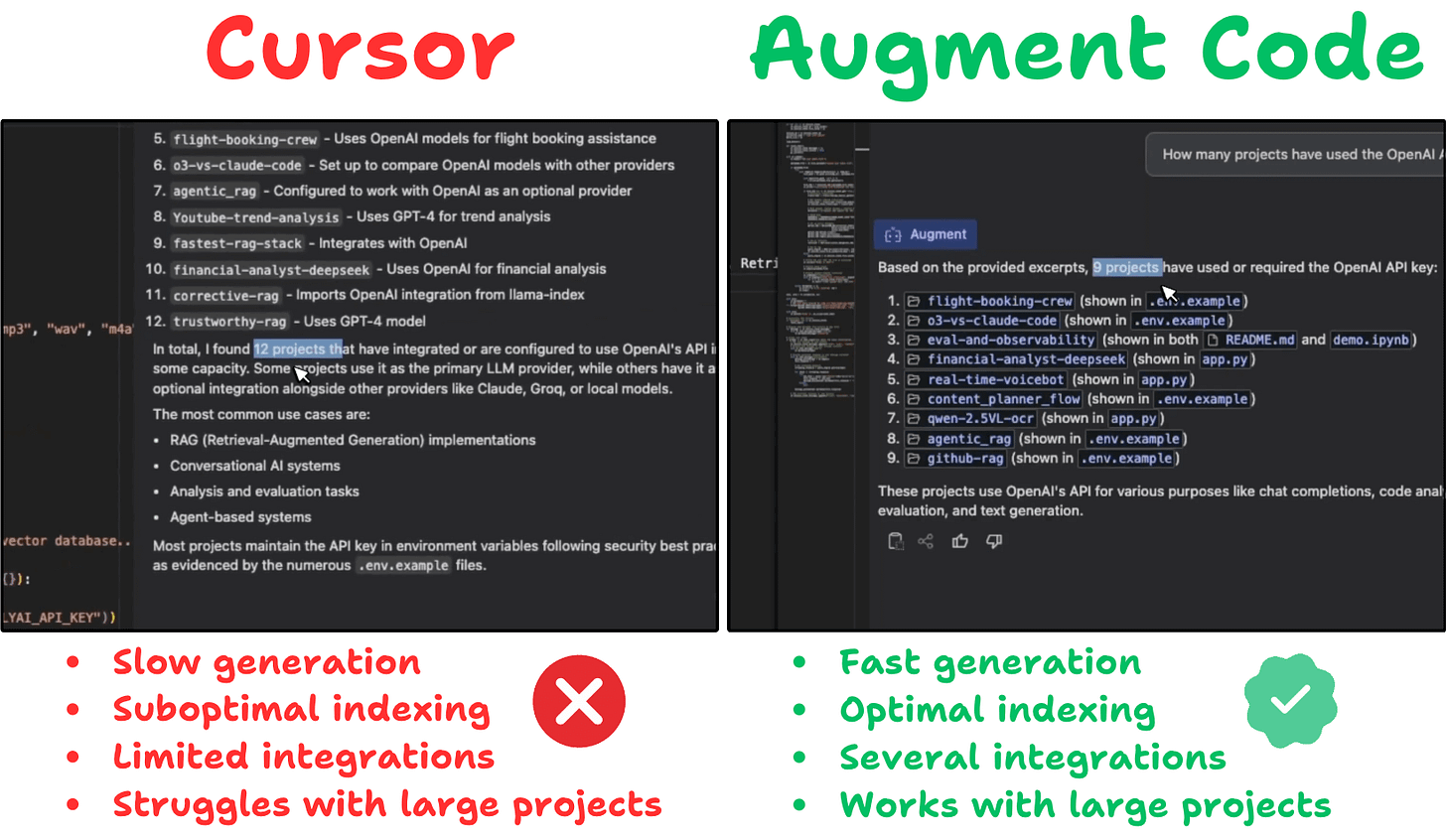

Being capped at 10-12k tokens, Cursor struggles to answer questions requiring full codebase context. And it’s extremely verbose.

Augment Code is a powerful AI Assistant built for developers working with large, evolving codebases—needing an assistant that understands the full context of their projects.

Its effectiveness over Cursor is evident from the test below where I asked the AI to list all my projects built with OpenAI:

Augment Code indexes your entire repo upfront, so it answers questions instantly, no matter how large your project is—and with fewer tokens.

Lastly, Augment Code is fully compatible with VSCode, JetBrains, Vim, Slack, and more.

Thanks to Augment Code for partnering today.

Model Context Protocol (MCP)

Lately, there has been a lot of buzz around Model Context Protocol (MCP). You must have heard about it.

Today, let’s understand what it is.

Intuitively speaking, MCP is like a USB-C port for your AI applications.

Just as USB-C offers a standardized way to connect devices to various accessories, MCP standardizes how your AI apps connect to different data sources and tools.

Let’s dive in a bit more technically.

At its core, MCP follows a client-server architecture where a host application can connect to multiple servers.

It has three key components:

Host

Client

Server

Here’s an overview before we dig deep👇

The Host is any AI app (e.g., Claude Desktop, Cursor) that provides an environment for AI interactions, accesses tools and data, and runs the MCP Client.

MCP Client operates within the host to enable communication with MCP servers.
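To make the relationship between the three components concrete, here is a minimal sketch in plain Python. It is not the MCP SDK. The class names, the `connect` method, and the "one client per server" layout are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """Stand-in for an MCP server: a name plus the capability types it exposes."""
    name: str
    capabilities: list[str]


@dataclass
class Client:
    """Lives inside the host and talks to one server (assumed layout)."""
    server: Server

    def describe(self) -> str:
        return f"{self.server.name}: {', '.join(self.server.capabilities)}"


@dataclass
class Host:
    """The AI app. It keeps a client for every server it connects to."""
    name: str
    clients: list[Client] = field(default_factory=list)

    def connect(self, server: Server) -> Client:
        client = Client(server)
        self.clients.append(client)
        return client


# Hypothetical servers, used only to show one host talking to several of them.
host = Host("example-ai-app")
host.connect(Server("weather", ["tools"]))
host.connect(Server("files", ["resources", "prompts"]))

summary = [client.describe() for client in host.clients]
print(summary)
```

The point of the sketch is the shape: the host never talks to a server directly, it always goes through a client.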

Finally, the MCP Server exposes specific capabilities and provides access to data like:

Tools: Enable LLMs to perform actions through your server.

Resources: Expose data and content from your servers to LLMs.

Prompts: Create reusable prompt templates and workflows.
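The three capability types can be pictured as three registries on the server. The sketch below is a toy in plain Python, not a real MCP server. `TinyServer`, `get_forecast`, the `weather://stations` URI, and the `daily_summary` template are all hypothetical names.

```python
class TinyServer:
    """Toy server holding the three kinds of capabilities."""

    def __init__(self):
        self.tools = {}      # name -> function the model may call to act
        self.resources = {}  # URI -> data or content exposed to the model
        self.prompts = {}    # name -> reusable prompt template

    def tool(self, fn):
        """Decorator that registers a function as a tool under its own name."""
        self.tools[fn.__name__] = fn
        return fn


server = TinyServer()


@server.tool
def get_forecast(location: str, date: str) -> str:
    # A real tool would query a weather backend; this one returns fixed text.
    return f"Forecast for {location} on {date}: sunny"


server.resources["weather://stations"] = "station-1, station-2"
server.prompts["daily_summary"] = "Summarize the weather in {location} for {date}."

# Tool: perform an action. Prompt: fill in a reusable template.
result = server.tools["get_forecast"]("Paris", "2025-01-01")
prompt = server.prompts["daily_summary"].format(location="Paris", date="2025-01-01")
print(result)
print(prompt)
```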

Understanding client-server communication is essential for building your own MCP client and server.

So let’s understand how the client and the server communicate.

Here’s an illustration before we break it down step by step...

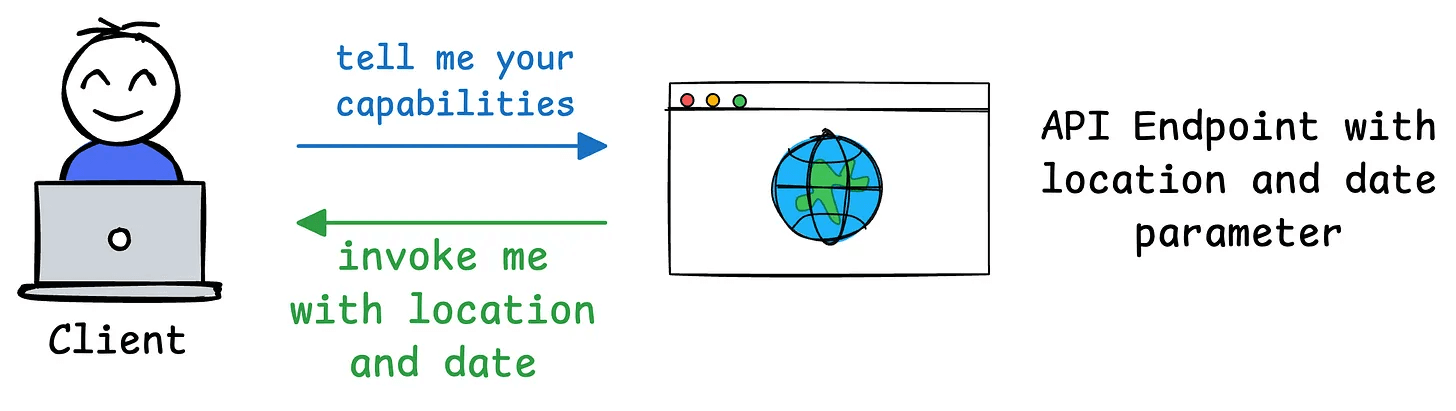

First, we have capability exchange, where:

The client sends an initial request to learn server capabilities.

The server then responds with its capability details.

For instance, a Weather API server, when invoked, can reply with its available “tools”, “prompt templates”, and any other resources for the client to use.

Once this exchange is done, the client acknowledges the successful connection, and further message exchange continues.
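The three steps above can be sketched as an in-memory exchange of JSON-RPC style messages. This is a simplified illustration, not a full implementation. The method names `initialize` and `notifications/initialized` follow my reading of the MCP specification, the payloads are trimmed, and `server_handle`, `weather-demo`, and `demo-client` are hypothetical.

```python
import json
from typing import Optional

# What this toy server is willing to offer (contents left empty for brevity).
SERVER_CAPABILITIES = {"tools": {}, "resources": {}, "prompts": {}}


def server_handle(message: dict) -> Optional[dict]:
    """Toy server: answers `initialize`, stays silent on notifications."""
    if message.get("method") == "initialize":
        return {
            "jsonrpc": "2.0",
            "id": message["id"],
            "result": {
                "protocolVersion": message["params"]["protocolVersion"],
                "capabilities": SERVER_CAPABILITIES,
                "serverInfo": {"name": "weather-demo", "version": "0.1.0"},
            },
        }
    return None  # a notification has no id and expects no reply


# Step 1: the client sends an initial request to learn the server's capabilities.
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2024-11-05",
        "capabilities": {},
        "clientInfo": {"name": "demo-client", "version": "0.1.0"},
    },
}

# Step 2: the server responds with its capability details.
response = server_handle(request)

# Step 3: the client acknowledges, after which normal messages can flow.
ack = {"jsonrpc": "2.0", "method": "notifications/initialized"}
ack_reply = server_handle(ack)

print(json.dumps(response["result"]["capabilities"]))
```

In a real setup these messages travel over a transport such as stdio or HTTP rather than a direct function call.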

Here’s one of the reasons this setup can be so powerful:

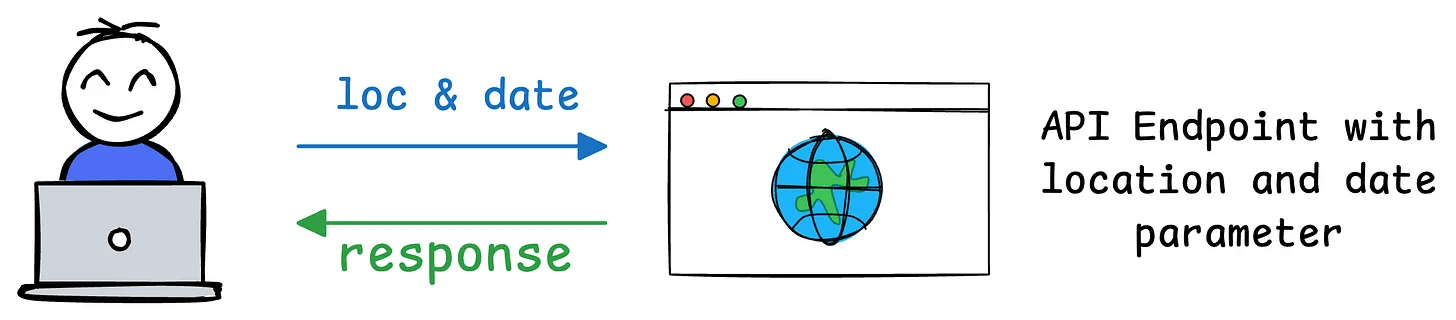

In a traditional API setup:

If your API initially requires two parameters (e.g., `location` and `date` for a weather service), users integrate their applications to send requests with those exact parameters.

Later, if you decide to add a third required parameter (e.g., `unit` for temperature units like Celsius or Fahrenheit), the API’s contract changes.

This means all users of your API must update their code to include the new parameter. If they don’t update, their requests might fail, return errors, or provide incomplete results.
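Here is a small sketch of that breakage, using plain Python functions in place of a real HTTP API. The function names and the returned strings are made up for illustration.

```python
def weather_api_v1(location, date):
    """Original contract: two required parameters."""
    return f"{location} on {date}: 21 degrees"


def weather_api_v2(location, date, unit):
    """Updated contract: a third required parameter was added."""
    return f"{location} on {date}: 21 degrees {unit}"


def client_call(api):
    """Client code written against v1, with the two parameters hardcoded."""
    return api("Paris", "2025-01-01")


ok = client_call(weather_api_v1)

try:
    client_call(weather_api_v2)
    broke = False
except TypeError:
    # The old client never sends `unit`, so the call fails until it is updated.
    broke = True

print(ok)
print("old client broke on v2:", broke)
```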

MCP’s design solves this as follows:

MCP introduces a dynamic and flexible approach that contrasts sharply with traditional APIs.

For instance, when a client (e.g., an AI application like Claude Desktop) connects to an MCP server (e.g., your weather service), it sends an initial request to learn the server’s capabilities.

The server responds with details about its available tools, resources, prompts, and parameters. For example, if your weather API initially supports `location` and `date`, the server communicates these as part of its capabilities.

If you later add a `unit` parameter, the MCP server can dynamically update its capability description during the next exchange. The client doesn’t need to hardcode or predefine the parameters. It simply queries the server’s current capabilities and adapts accordingly.

This way, the client can adjust its behavior on the fly, using the updated capabilities (e.g., including `unit` in its requests) without needing to rewrite or redeploy code.
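A minimal sketch of this idea is shown below. The client reads the parameter list the server currently advertises and builds its request from that, instead of hardcoding it. The `inputSchema` field mirrors how I understand MCP tool descriptions, while `list_tools`, `build_arguments`, and `known_values` are hypothetical helpers. In a real host, the LLM would typically be the one filling in the argument values.

```python
def list_tools(version: int) -> list[dict]:
    """Toy stand-in for the server's tool listing, before and after the change."""
    properties = {"location": {"type": "string"}, "date": {"type": "string"}}
    required = ["location", "date"]
    if version >= 2:
        # The server later adds a third required parameter.
        properties["unit"] = {"type": "string", "enum": ["celsius", "fahrenheit"]}
        required.append("unit")
    return [{
        "name": "get_forecast",
        "inputSchema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }]


def build_arguments(tool: dict, known_values: dict) -> dict:
    """Supply whatever the advertised schema currently requires."""
    required = tool["inputSchema"]["required"]
    missing = [name for name in required if name not in known_values]
    if missing:
        raise ValueError(f"cannot supply: {missing}")
    return {name: known_values[name] for name in required}


# Values the client side has available (assumed for the example).
known = {"location": "Paris", "date": "2025-01-01", "unit": "celsius"}

args_before = build_arguments(list_tools(1)[0], known)
args_after = build_arguments(list_tools(2)[0], known)

print(args_before)
print(args_after)
```

The same client code produces both requests. Only the server's advertised schema changed between the two calls.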

We hope this clarifies what MCP does.

In the future, we shall explore creating custom MCP servers and building hands-on demos around them. Stay tuned!

👉 Over to you: Do you think MCP is more powerful than a traditional API setup?

Thanks for reading!

