What is (was?) GIL in Python?

+ memory for OpenClaw Agents and a new learning paradigm for Agents.

In today’s newsletter:

Give your OpenClaw Agents a knowledge graph-based memory.

A new learning paradigm for AI agents.

What is (was?) GIL in Python?

Give your OpenClaw agents a knowledge graph-based memory

Cognee is an open-source framework that turns your data into a knowledge graph, making it easy to store, retrieve, and reason over information. Their new OpenClaw plugin brings this directly into your agent workflow.

Here’s what makes it worth trying:

Auto-indexes memory files on startup and after every run

Recalls relevant context before each prompt automatically

Natural language search across sessions via graph traversal

Hash-based change detection only syncs what’s changed

Runs entirely local via Docker

Check it out on GitHub (don’t forget to star ⭐ ) →

Read about the OpenClaw plugin here →

A new learning paradigm for AI agents

Make agents learn the way humans do.

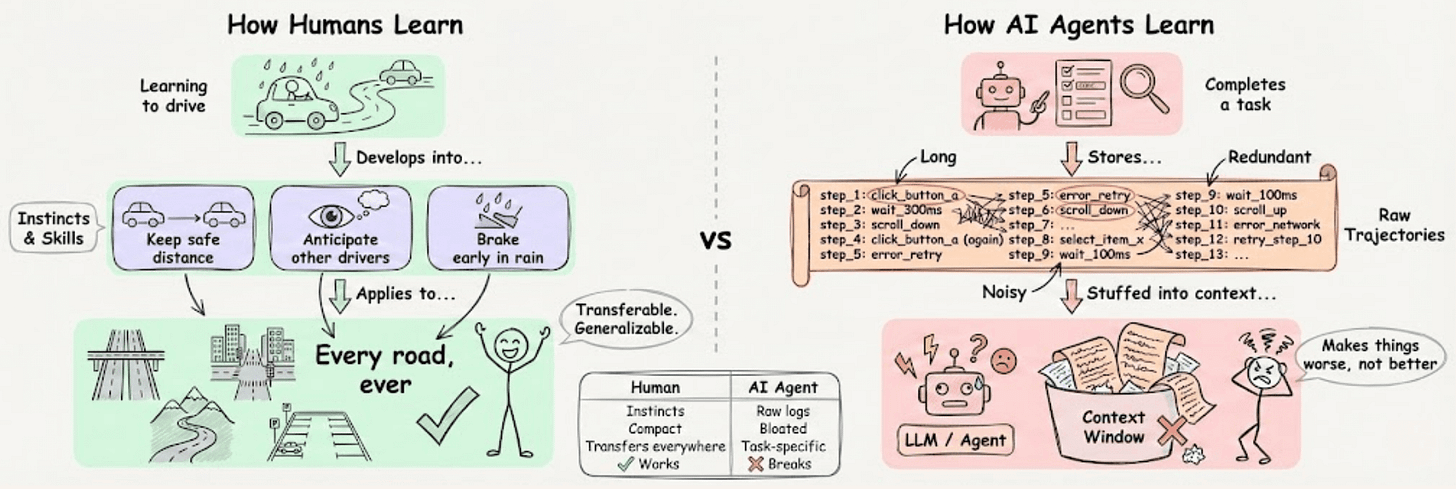

Think about how you learned to drive. Nobody memorizes every route turn by turn. You develop instincts such as maintaining a safe distance, anticipating what other drivers will do, and braking early in the rain.

Those instincts become skills you carry to every road you ever drive on.

AI agents today do the opposite.

Most memory systems store raw trajectories, which are full logs of every action the agent took during a task.

These logs are long, noisy, and full of redundant steps. Stuffing them into context often makes things worse, not better.

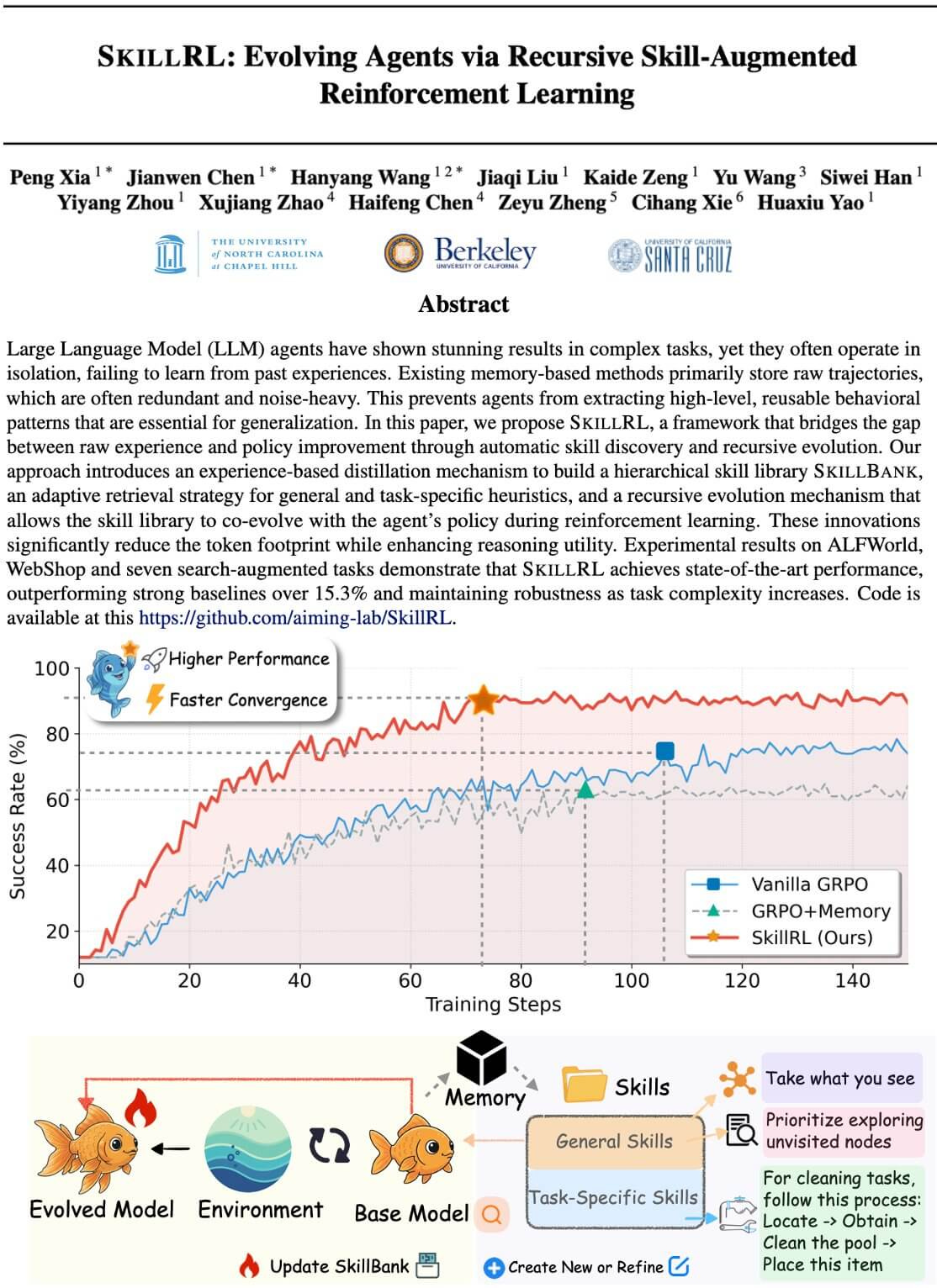

A new paper called SKILLRL rethinks this entirely.

Instead of memorizing raw experiences, it distills them into compact, reusable skills that the agent can retrieve and apply to future tasks just like humans do.

Here’s how it works:

1. Experience-based distillation: The agent collects both successful and failed trajectories. Successes become strategic patterns. Failures become concise lessons covering what went wrong, why, and what to do instead.

2. Hierarchical skill library: General skills apply everywhere while task-specific skills apply to particular problem types. The agent retrieves only what’s relevant at inference time.

3. Recursive skill evolution: The skill library is not static. It co-evolves with the agent during RL training, and new failures automatically generate new skills to fill the gaps.

The skill library starts with 55 skills and grows to 100 by the end of training. The agent keeps discovering what it doesn’t know and builds new skills to address those gaps automatically.
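To make the idea of a hierarchical skill library concrete, here is a minimal sketch. It is not the paper’s implementation: the `Skill` and `SkillLibrary` names, the tag-based retrieval, and the `learn_from_failure` helper are all assumptions made for illustration, and the distillation step that turns trajectories into skills is left out entirely.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Skill:
    """Hypothetical record for one distilled skill."""
    name: str
    guidance: str                    # short, reusable instruction for the agent
    task_type: Optional[str] = None  # None marks a general skill


@dataclass
class SkillLibrary:
    """Hypothetical two-level library: general skills plus task-specific ones."""
    skills: List[Skill] = field(default_factory=list)

    def add(self, skill: Skill) -> None:
        self.skills.append(skill)

    def retrieve(self, task_type: str) -> List[Skill]:
        # General skills always apply; task-specific ones only when the type matches.
        return [s for s in self.skills if s.task_type in (None, task_type)]

    def learn_from_failure(self, task_type: str, lesson: str) -> Skill:
        # Assumed stand-in for skill evolution: a failure adds a new task-specific skill.
        skill = Skill(name=f"lesson-{len(self.skills) + 1}", guidance=lesson, task_type=task_type)
        self.add(skill)
        return skill


if __name__ == "__main__":
    library = SkillLibrary()
    library.add(Skill("verify-before-submit", "Check the result before finishing."))
    library.add(Skill("use-filters", "Apply filters before browsing.", task_type="shopping"))
    for skill in library.retrieve("shopping"):
        print(skill.name, "->", skill.guidance)
```

The point of the structure is that only a handful of short skills reach the prompt, instead of full trajectory logs.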

The results are impressive.

A 7B model beat GPT-4o by 41.9% with 10-20x less context. The biggest gains came on the hardest multi-step tasks.

The takeaway for anyone building agents today is simple. Raw experience is not knowledge. The agents that learn to abstract reusable skills from experience will always outperform the ones hoarding raw logs.

What is (was?) GIL in Python?

For years, the GIL (global interpreter lock) has been the single biggest bottleneck for multi-threaded Python code.

Python 3.13 introduced an experimental free-threaded build that can run without the GIL, and with Python 3.14 that build is officially supported. Here’s everything you need to know about the GIL in Python.

Let’s dive in to learn more today!

Some fundamentals

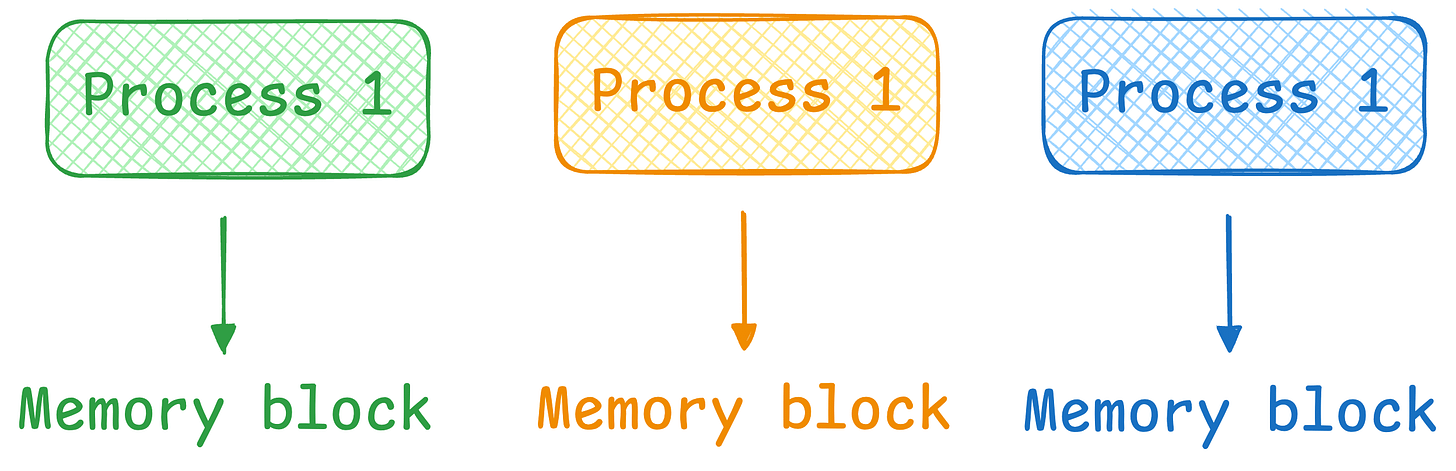

A process is isolated from other processes and operates in its own memory space. This isolation means that if one process crashes, it typically does not affect other processes.

Multi-threading occurs when a single process has multiple threads. These threads share the same resources, like memory.

What is GIL?

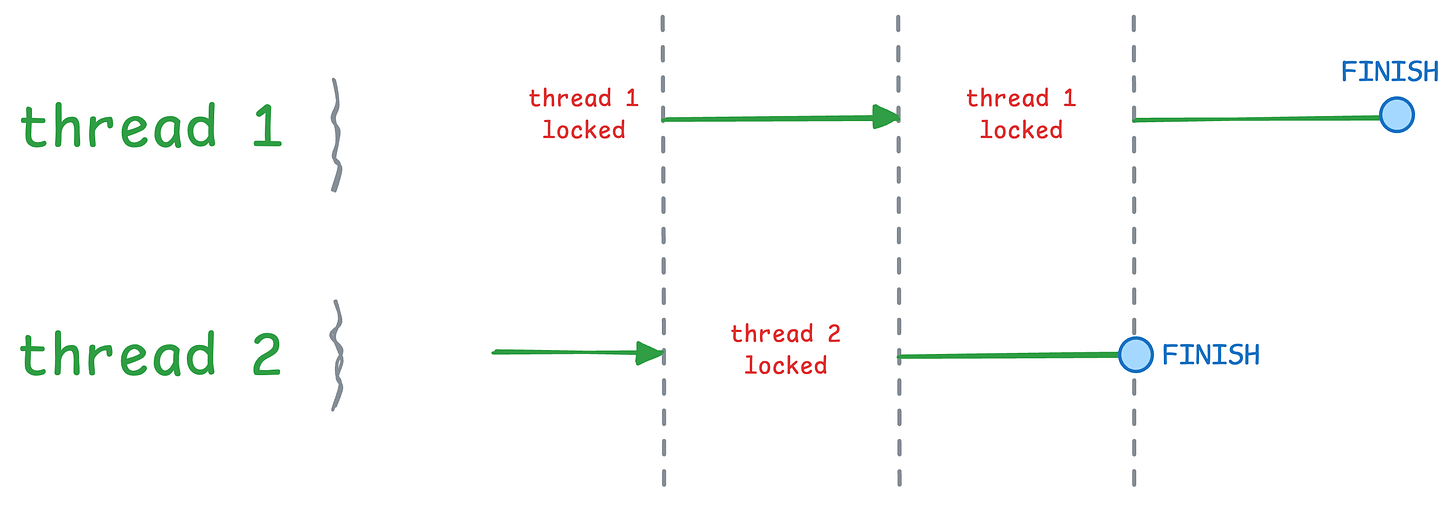

Simply put, the GIL (global interpreter lock) allows only ONE thread in a process to execute Python bytecode at a time.

So essentially, a process can have multiple threads, but ONLY ONE can run at a given time.

This means CPU-bound Python code cannot spread its threads across multiple CPU cores, so multi-threading performs about the same as single-threading.

Let’s understand with a code demo!

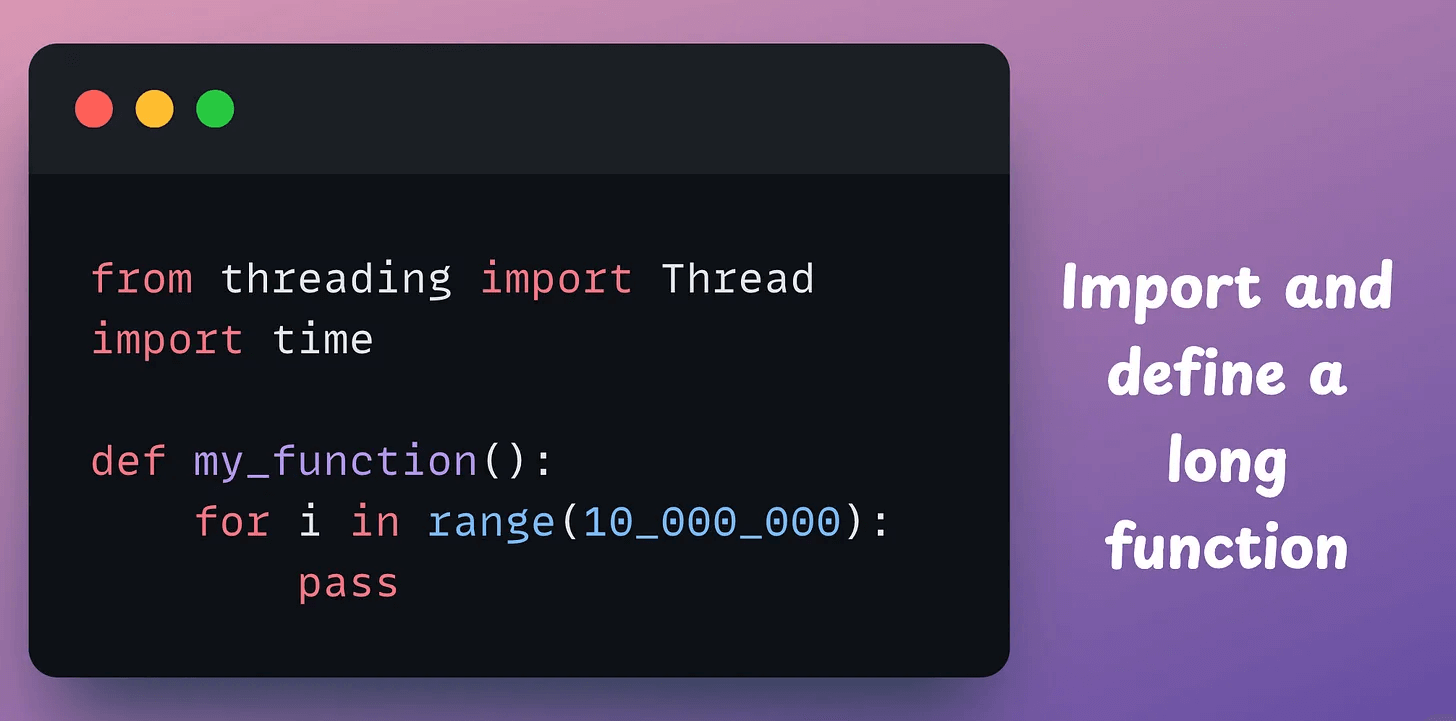

First, we start with some imports and define a long function:

Single threading, wherein we invoke the same function twice, takes 0.432 seconds.

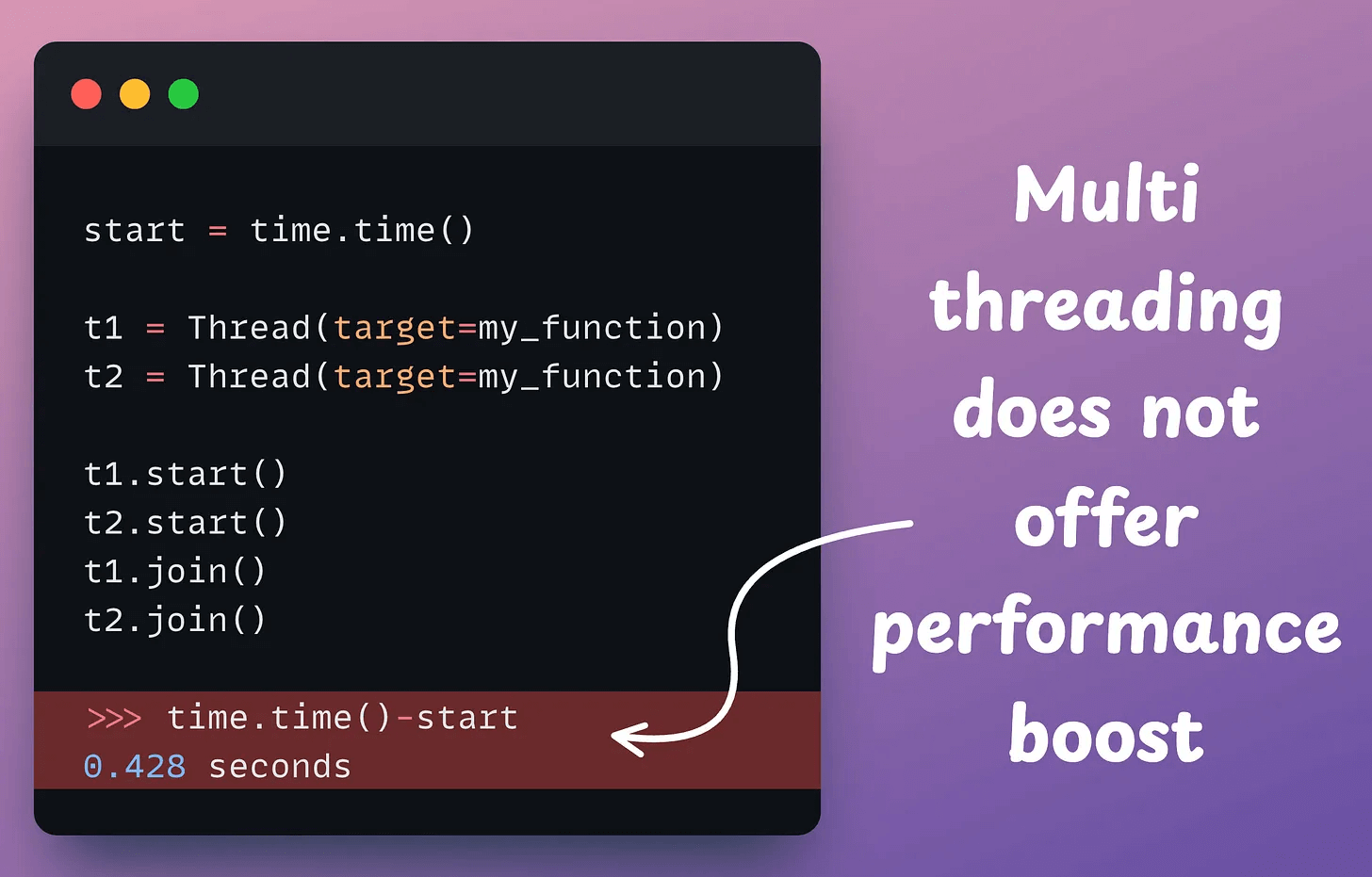

With multi-threading, we create two threads, one for each function, and this takes 0.428 seconds:

The reason for similar run-time, despite multi-threading, is…

GIL.
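If you want to reproduce the comparison yourself, here is a minimal sketch. The body of `long_function` and the loop size are assumptions (any pure-Python, CPU-bound loop will do), and the exact timings will differ from the numbers quoted above depending on your machine and Python build.

```python
import threading
import time


def long_function(n: int = 5_000_000) -> int:
    """CPU-bound busy loop: pure-Python work that holds the GIL while it runs."""
    total = 0
    for i in range(n):
        total += i
    return total


def run_single_threaded(n: int) -> float:
    """Call the function twice, one after the other; return elapsed seconds."""
    start = time.perf_counter()
    long_function(n)
    long_function(n)
    return time.perf_counter() - start


def run_multi_threaded(n: int) -> float:
    """Run the function once in each of two threads; return elapsed seconds."""
    threads = [threading.Thread(target=long_function, args=(n,)) for _ in range(2)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    n = 5_000_000
    print(f"single-threaded: {run_single_threaded(n):.3f} s")
    print(f"multi-threaded:  {run_multi_threaded(n):.3f} s")
```

On a standard (GIL-enabled) build, the two numbers should come out close to each other. On a free-threaded build, the multi-threaded run can be noticeably faster.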

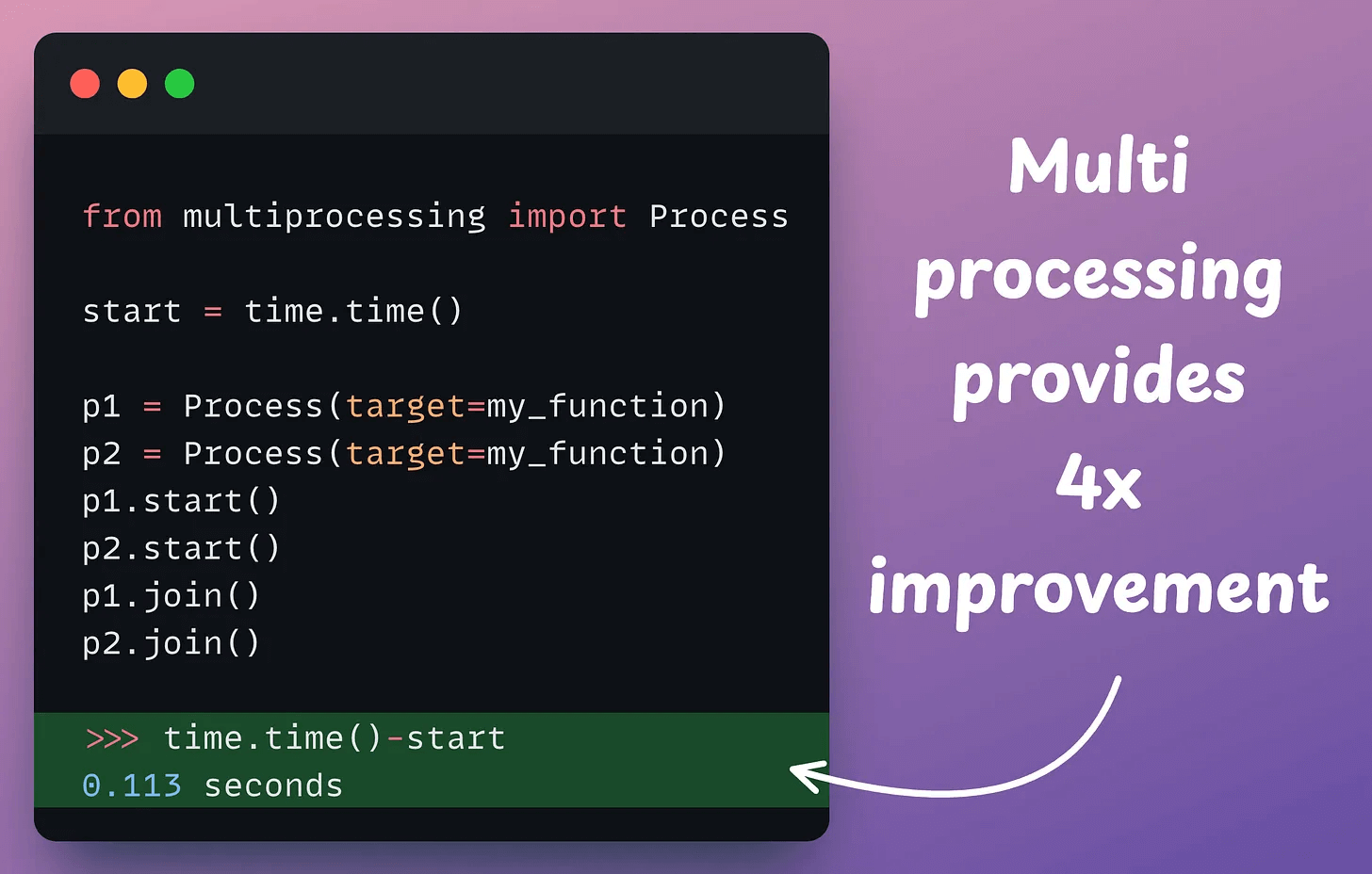

On a side note, we do experience a run-time boost with multi-processing:

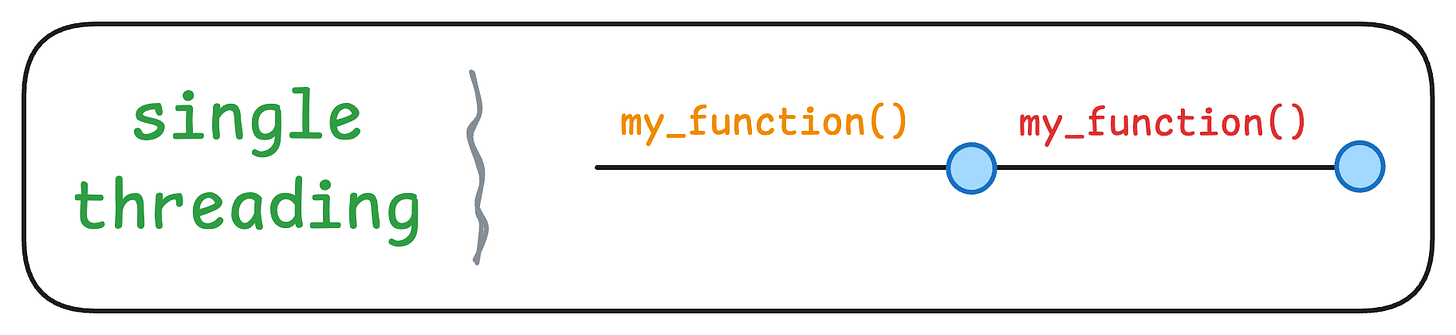

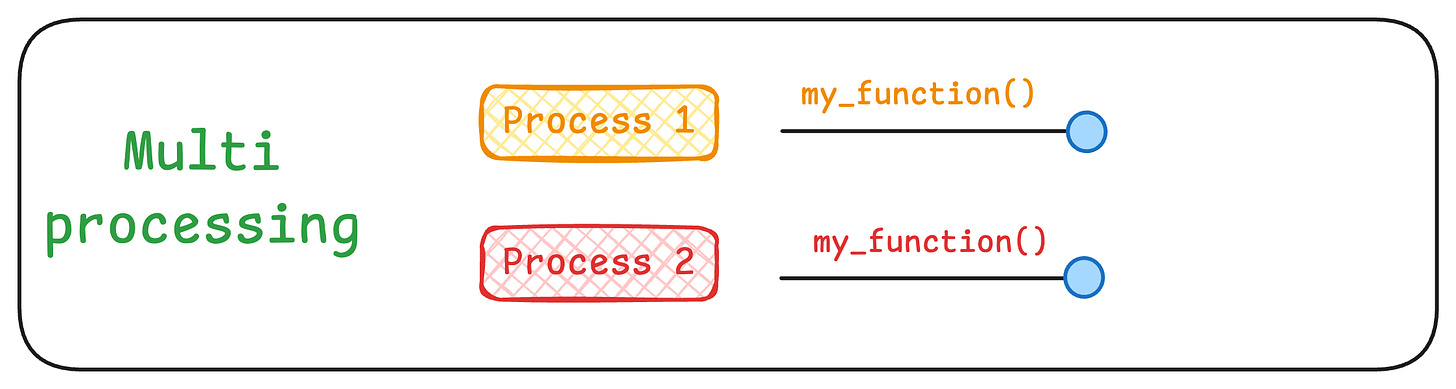
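The multi-processing variant can be sketched the same way. As before, `long_function` and the loop size are assumptions. The `if __name__ == "__main__":` guard matters here because some start methods re-import the main module in each child process.

```python
import multiprocessing as mp
import time


def long_function(n: int) -> int:
    """CPU-bound busy loop (same assumed workload as in the threading demo)."""
    total = 0
    for i in range(n):
        total += i
    return total


def run_multi_process(n: int) -> float:
    """Run the function once in each of two processes; return elapsed seconds."""
    processes = [mp.Process(target=long_function, args=(n,)) for _ in range(2)]
    start = time.perf_counter()
    for p in processes:
        p.start()
    for p in processes:
        p.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"multi-processing: {run_multi_process(5_000_000):.3f} s")
```

Each process has its own interpreter and its own GIL, so the two calls can run on separate cores. Starting a process is not free, though, so the gain only shows up when the work is large enough to outweigh the startup cost.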

The above three scenarios (single-threading, multi-threading, and multi-processing) can be explained visually as follows:

Single-threading: A single thread executes the same function twice in order:

Multi-threading: Each thread is assigned the job to execute the function once. But due to GIL, only one thread can run at a time:

Multi-processing: Each function is executed under a different process:

If this is clear, let’s answer two questions now:

1) Why has Python been using GIL even when it is suboptimal?

Thread safety.

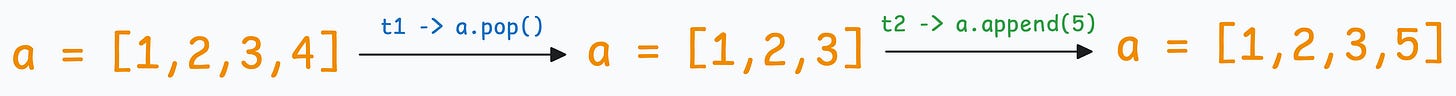

When multiple threads run in a process and share the same resources (such as memory), problems can arise when they try to access and modify the same data.

For instance, say we want to run two operations with two threads on a Python list:

If t1 runs before t2, we get the following output:

If t2 runs before t1, we get the following output:

We get different outputs!

This can lead to race conditions, where the outcome depends on the timing of the threads’ execution.
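Here is a minimal sketch of that scenario. The two operations (`append_item` for t1 and `double_items` for t2) are hypothetical stand-ins, and the order is forced by joining each thread before starting the next, so both outcomes can be seen deterministically.

```python
import threading


def append_item(data: list) -> None:
    """Hypothetical operation for thread t1: add one element."""
    data.append(4)


def double_items(data: list) -> None:
    """Hypothetical operation for thread t2: double every element in place."""
    for i in range(len(data)):
        data[i] *= 2


def run_in_order(first, second) -> list:
    """Run `first` to completion in one thread, then `second` in another."""
    data = [1, 2, 3]
    for operation in (first, second):
        t = threading.Thread(target=operation, args=(data,))
        t.start()
        t.join()  # joining before starting the next thread forces the order
    return data


if __name__ == "__main__":
    print(run_in_order(append_item, double_items))  # t1 then t2: [2, 4, 6, 8]
    print(run_in_order(double_items, append_item))  # t2 then t1: [2, 4, 6, 4]
```

Without the forced ordering, which of the two results you get depends on how the threads happen to be scheduled.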

This, and a few more reasons, made it convenient to execute just one thread at a time.

On a side note, the GIL mostly hurts CPU-bound tasks. For I/O-bound tasks, a thread releases the GIL while it waits (on a network call or a file read, for example), so multi-threading can still be useful there.
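A quick way to see the I/O-bound case is to simulate the waiting with `time.sleep`, which releases the GIL just like a blocking network or disk call would. The function names and delays below are assumptions chosen for illustration.

```python
import threading
import time


def fake_io_task(delay: float) -> None:
    """Stand-in for an I/O wait; sleeping releases the GIL."""
    time.sleep(delay)


def run_sequential(delay: float, count: int) -> float:
    """Run `count` simulated I/O tasks back to back; return elapsed seconds."""
    start = time.perf_counter()
    for _ in range(count):
        fake_io_task(delay)
    return time.perf_counter() - start


def run_threaded(delay: float, count: int) -> float:
    """Run `count` simulated I/O tasks in parallel threads; return elapsed seconds."""
    threads = [threading.Thread(target=fake_io_task, args=(delay,)) for _ in range(count)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"sequential: {run_sequential(0.2, 4):.2f} s")
    print(f"threaded:   {run_threaded(0.2, 4):.2f} s")
```

The threaded version finishes in roughly the time of one wait instead of four, even with the GIL enabled, because the threads spend their time waiting rather than executing bytecode.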

2) If multi-processing works, why not use that as a workaround?

This is easier said than done.

Unlike threads, which share the same memory space, processes are isolated.

As a result, they cannot directly share data as threads do.

While there are inter-process communication (IPC) mechanisms like pipes, queues, or shared memory to exchange information between processes, they add a ton of complexity.
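To give a feel for that extra plumbing, here is a minimal sketch that uses a `multiprocessing.Queue` to send results back to the parent. The `sum_of_squares` workload and the helper names are assumptions for illustration.

```python
import multiprocessing as mp


def sum_of_squares(numbers) -> int:
    """The actual work each process performs."""
    return sum(x * x for x in numbers)


def worker(numbers, results) -> None:
    """Runs in a child process; the queue is the only way to report back."""
    results.put(sum_of_squares(numbers))


def parallel_sum_of_squares(chunks) -> int:
    """Give each chunk to its own process and combine the partial results."""
    results = mp.Queue()
    processes = [mp.Process(target=worker, args=(chunk, results)) for chunk in chunks]
    for p in processes:
        p.start()
    # Read the results before joining so no child blocks on a full queue.
    partials = [results.get() for _ in processes]
    for p in processes:
        p.join()
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares([[1, 2, 3], [4, 5, 6]]))  # 14 + 77 = 91
```

With threads, the workers could simply write into a shared list. With processes, every value has to be serialized, sent through the queue, and collected on the other side.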

Thankfully, Python 3.14 officially supports a free-threaded build in which the GIL is disabled, which means the threads of a single process can run in parallel across all CPU cores.
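To check which mode your interpreter is running in, you can use a small helper like the sketch below. The `gil_status` function is our own name. It relies on `sys._is_gil_enabled()` (available from Python 3.13) and the `Py_GIL_DISABLED` build flag, and falls back gracefully on older versions.

```python
import sys
import sysconfig


def gil_status() -> str:
    """Describe whether this interpreter is currently running with the GIL."""
    # Py_GIL_DISABLED is set to 1 when Python was compiled as a free-threaded build.
    free_threaded_build = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))
    # sys._is_gil_enabled() only exists on Python 3.13 and later.
    is_gil_enabled = getattr(sys, "_is_gil_enabled", None)
    if is_gil_enabled is None:
        return "GIL enabled (this Python version has no free-threaded build)"
    if is_gil_enabled():
        if free_threaded_build:
            return "GIL enabled at runtime on a free-threaded build"
        return "GIL enabled (standard build)"
    return "GIL disabled (free-threaded build)"


if __name__ == "__main__":
    print(sys.version)
    print(gil_status())
```

The free-threaded interpreter is a separate build, typically installed as `python3.14t`. On that build, the GIL can be switched back on with the `PYTHON_GIL=1` environment variable or the `-X gil=1` command-line option.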

This video depicts the run-time difference:

We have been testing Python 3.14 lately, and we’ll share these updates in a detailed newsletter issue soon.

That said, if you want to get hands-on with actual GPU programming using CUDA and learn how CUDA organizes a GPU’s threads, blocks, and grids (with visuals), we covered it here: Implementing (Massively) Parallelized CUDA Programs From Scratch Using CUDA Programming.

👉 Over to you: What are some other reasons for enforcing GIL in Python?

Thanks for reading!