What is (was?) GIL in Python?

...explained with visuals and code.

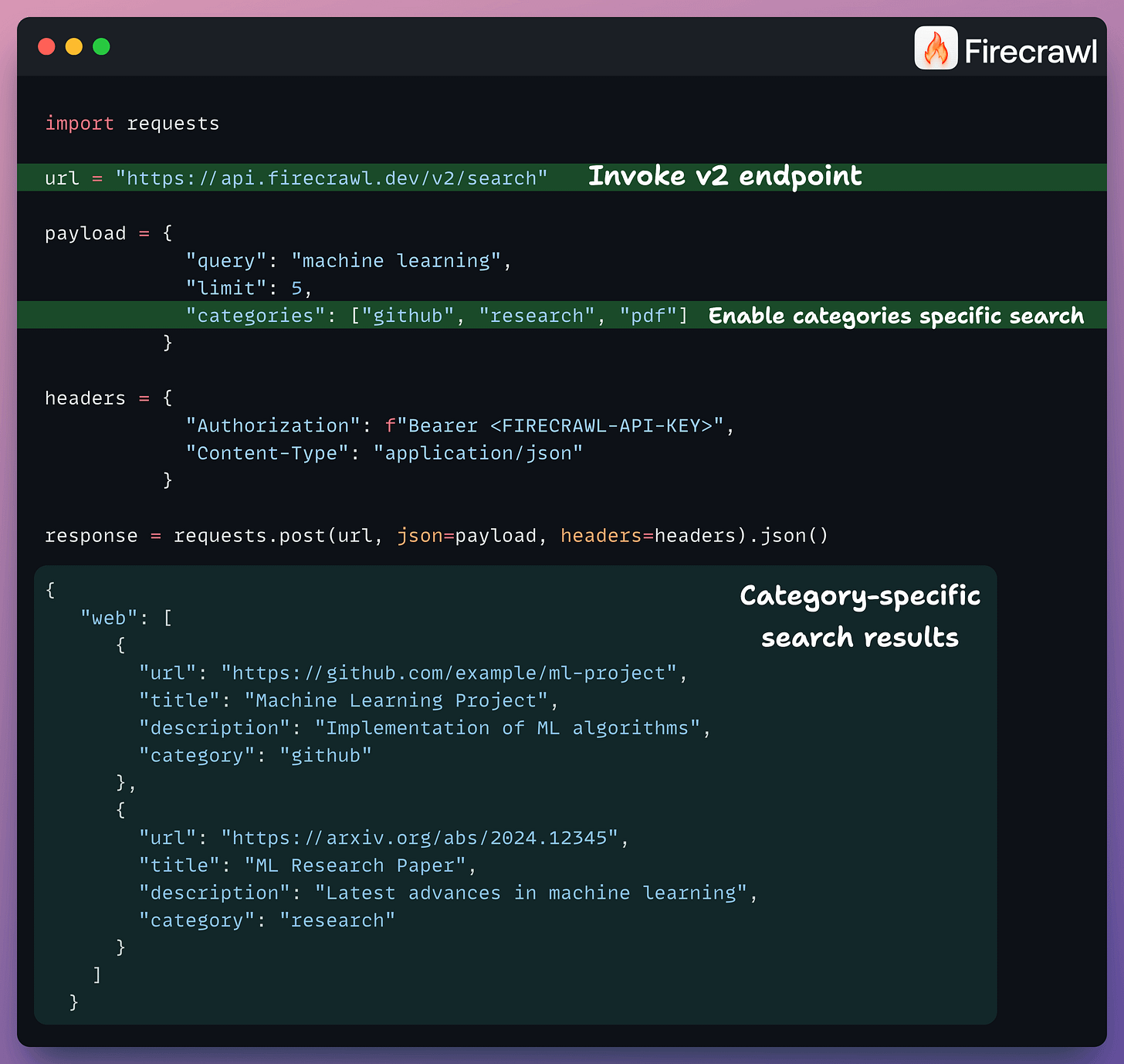


Python 3.14 was released recently.

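Of the many interesting updates (which we shall cover soon), the ability to disable the GIL (global interpreter lock) is getting the most attention. Strictly speaking, this requires the free-threaded build of CPython, which was experimental in Python 3.13 and is officially supported as of 3.14.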

Let’s dive in to learn more today!

Some fundamentals

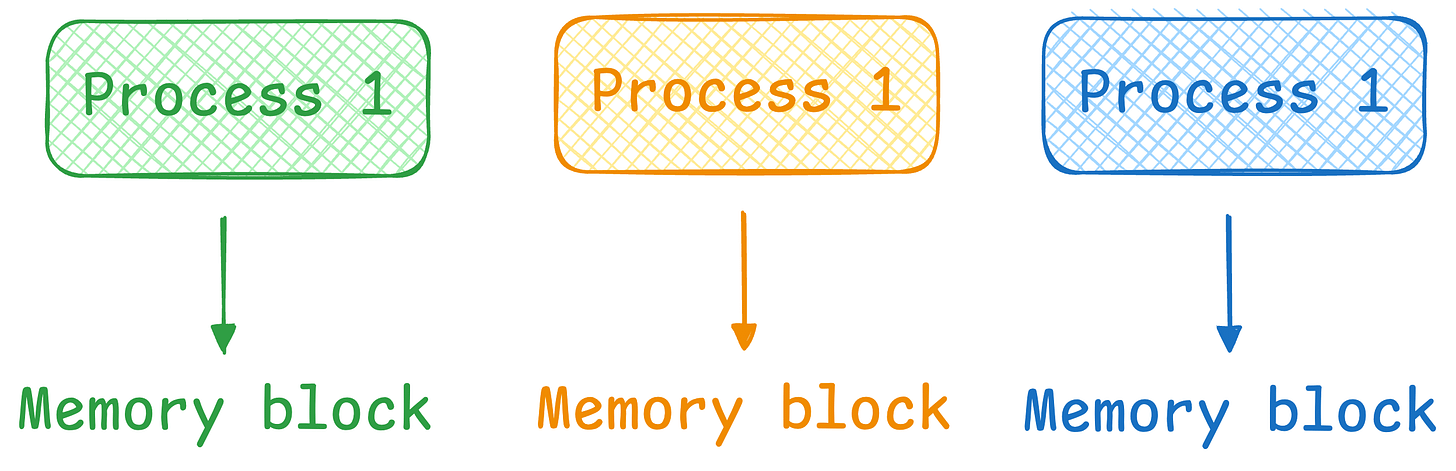

A process is isolated from other processes and operates in its own memory space. This isolation means that if one process crashes, it typically does not affect other processes.

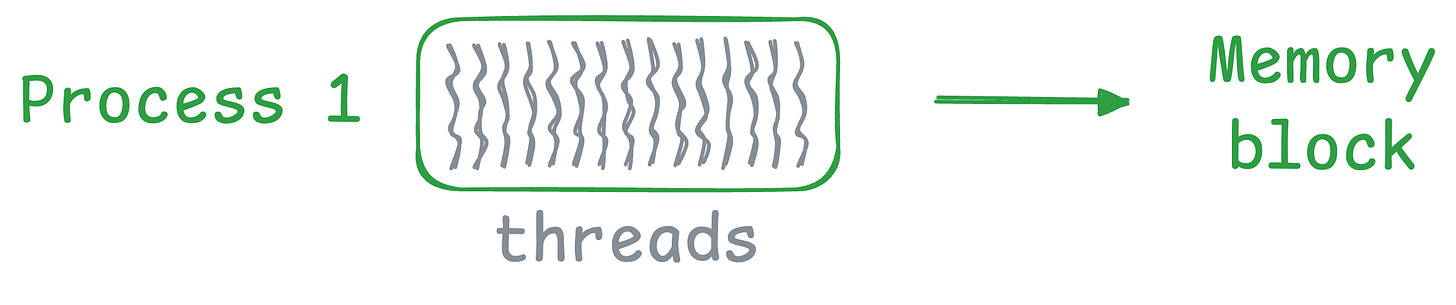

Multi-threading occurs when a single process has multiple threads. These threads share the same resources, like memory.

What is GIL?

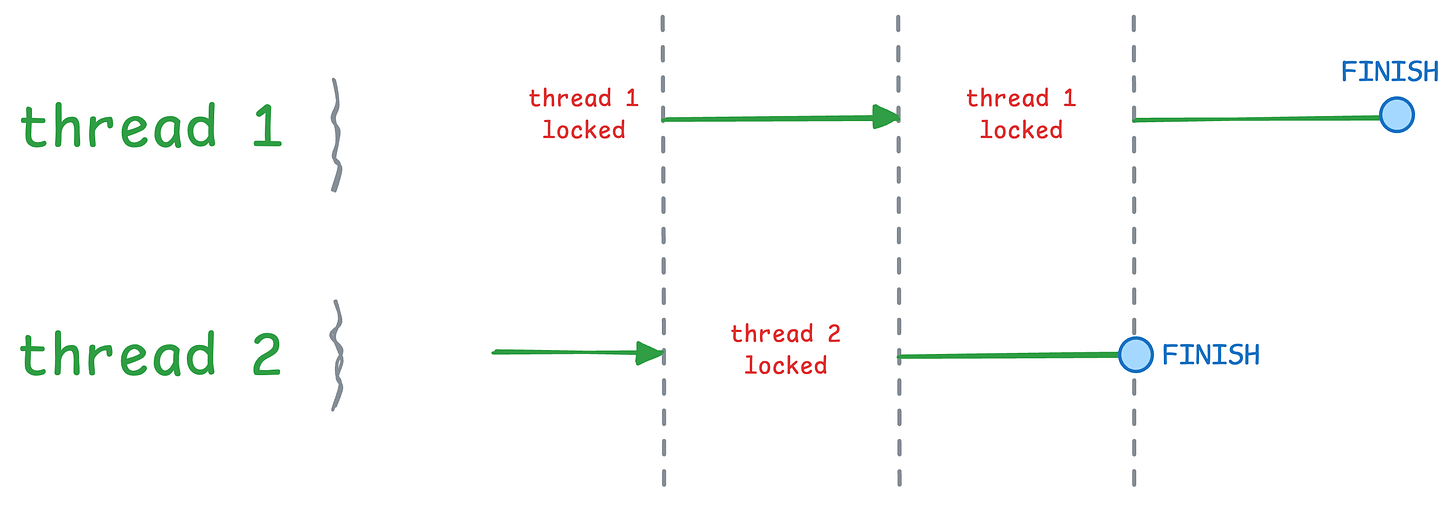

Simply put, the GIL (global interpreter lock) prevents a process from executing Python bytecode in more than ONE thread at a time.

So essentially, a process can have multiple threads, but ONLY ONE can run Python code at a given time.

This means a process cannot spread pure-Python, CPU-bound work across multiple CPU cores, so multi-threading performs about the same as single-threading for such work.

Let’s understand with a code demo!

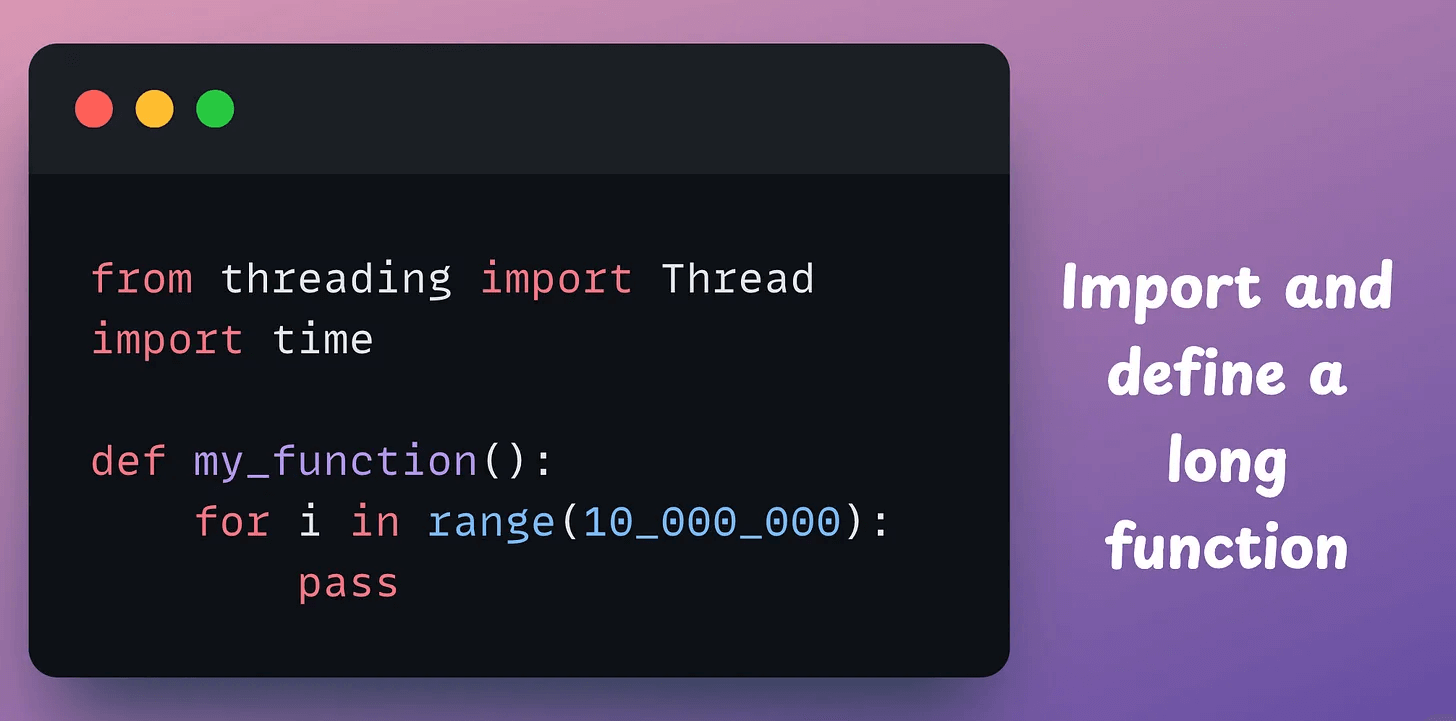

First, we start with some imports and define a long function:

Single threading, wherein we invoke the same function twice, takes 0.432 seconds.
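A minimal sketch of what such a benchmark could look like. The name `long_function`, its loop body, and the iteration count are assumptions made for illustration (not the original code), so the timings on your machine will differ from the numbers quoted here.

```python
import time


def long_function(n_iters=3_000_000):
    """Hypothetical CPU-bound stand-in: a pure-Python busy loop."""
    total = 0
    for i in range(n_iters):
        total += i
    return total


def time_single_thread(n_iters=3_000_000):
    """Call long_function twice, back to back, and return elapsed seconds."""
    start = time.perf_counter()
    long_function(n_iters)
    long_function(n_iters)
    return time.perf_counter() - start


print(f"single-threaded: {time_single_thread():.3f} s")
```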

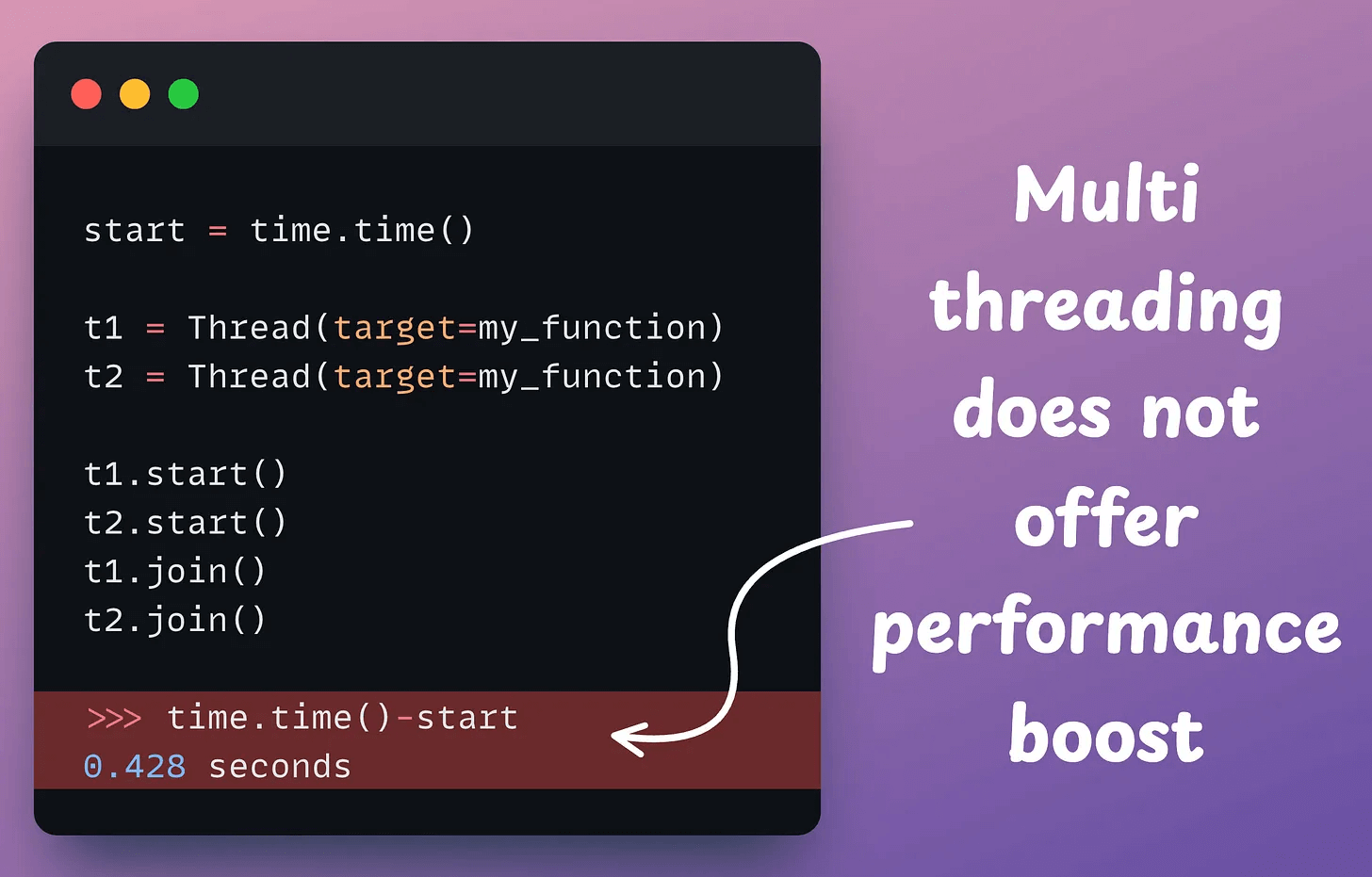

With multi-threading, we create two threads, one for each function, and this takes 0.428 seconds:
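Here is a rough sketch of the two-thread version, using the standard `threading` module. As before, `long_function` and the iteration count are illustrative assumptions rather than the original code.

```python
import threading
import time


def long_function(n_iters=3_000_000):
    """Hypothetical CPU-bound stand-in: a pure-Python busy loop."""
    total = 0
    for i in range(n_iters):
        total += i
    return total


def time_two_threads(n_iters=3_000_000):
    """Run long_function once in each of two threads; return elapsed seconds."""
    threads = [
        threading.Thread(target=long_function, args=(n_iters,))
        for _ in range(2)
    ]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for both threads to finish
    return time.perf_counter() - start


print(f"two threads: {time_two_threads():.3f} s")
```

On a regular (GIL-enabled) build, expect this to land close to the single-threaded time.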

The reason for similar run-time, despite multi-threading, is…

GIL.

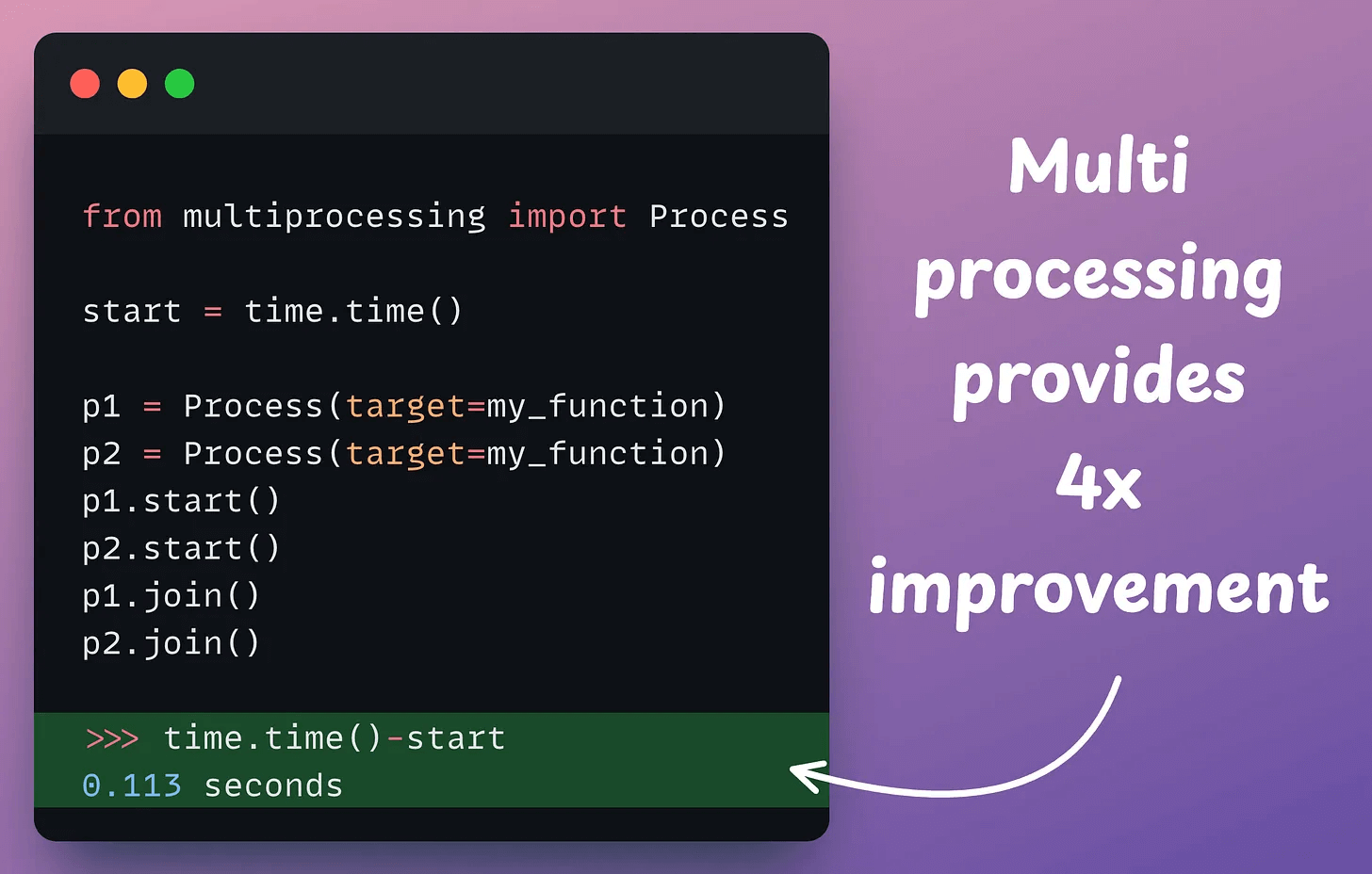

On a side note, we do experience a run-time boost with multi-processing:
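A sketch of the multi-processing variant, assuming the same hypothetical `long_function`. Each `multiprocessing.Process` gets its own interpreter (and its own GIL), so the two calls can run on separate cores. The `if __name__ == "__main__":` guard is needed on platforms that start child processes by re-importing the script.

```python
import multiprocessing as mp
import time


def long_function(n_iters=3_000_000):
    """Hypothetical CPU-bound stand-in: a pure-Python busy loop."""
    total = 0
    for i in range(n_iters):
        total += i
    return total


def time_two_processes(n_iters=3_000_000):
    """Run long_function once in each of two processes; return elapsed seconds."""
    procs = [
        mp.Process(target=long_function, args=(n_iters,))
        for _ in range(2)
    ]
    start = time.perf_counter()
    for p in procs:
        p.start()
    for p in procs:
        p.join()  # wait for both processes to exit
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"two processes: {time_two_processes():.3f} s")
```

Note that process start-up has its own cost, so the speed-up only shows when the work per process is large enough.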

The above three scenarios (single-threading, multi-threading, and multi-processing) can be explained visually as follows:

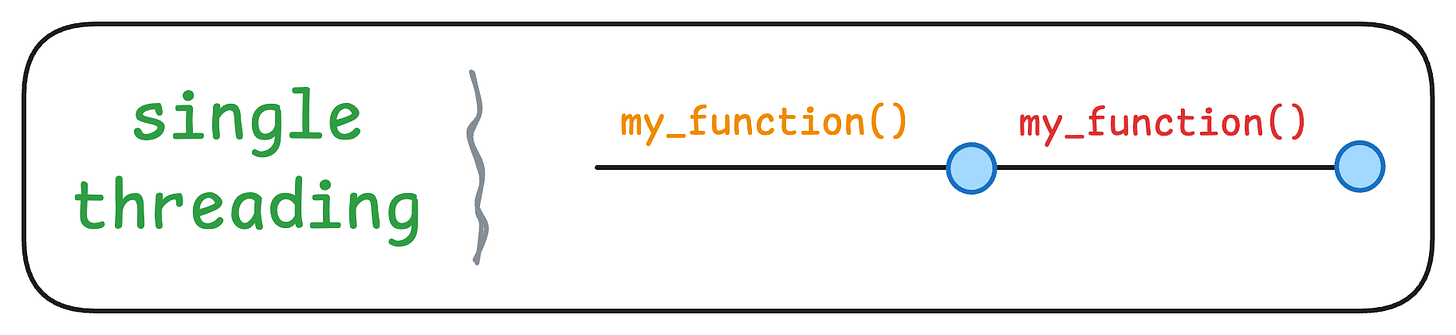

Single-threading: A single thread executes the same function twice in order:

Multi-threading: Each thread is assigned the job to execute the function once. But due to GIL, only one thread can run at a time:

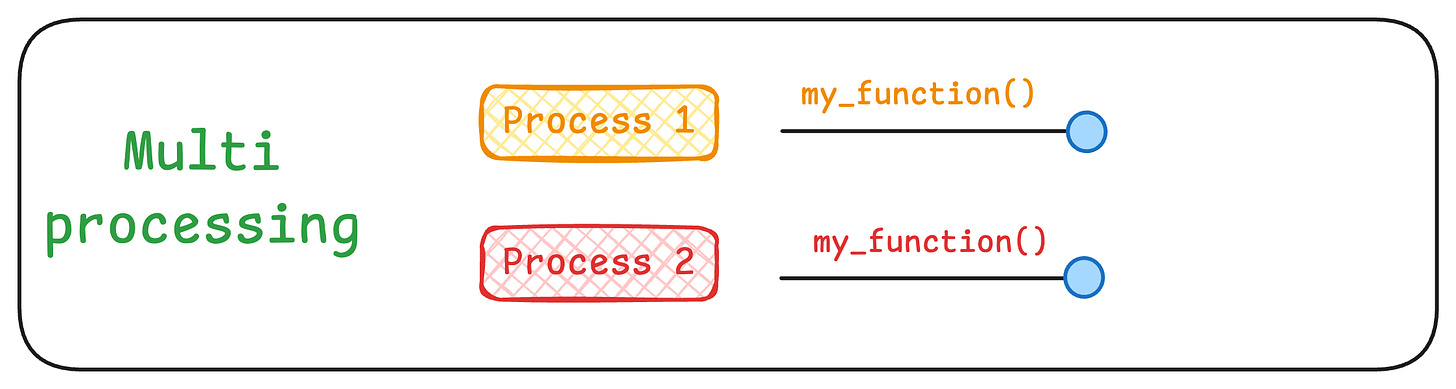

Multi-processing: Each function is executed under a different process:

If this is clear, let’s answer two questions now:

1) Why has Python been using GIL even when it is suboptimal?

Thread safety.

When multiple threads run in a process and share the same resources (such as memory), problems can arise when they try to access and modify the same data.

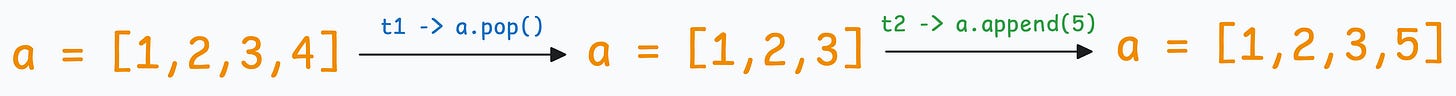

For instance, say we want to run two operations with two threads on a Python list:

If t1 runs before t2, we get the following output:

If t2 runs before t1, we get the following output:

We get different outputs!

This can lead to race conditions, where the outcome depends on the timing of the threads’ execution.
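To make this concrete, here is a small sketch. The two operations (`append_four` and `double_all`) are made up for illustration, since any pair of order-dependent operations shows the same effect. We force each ordering by joining the first thread before starting the second.

```python
import threading


def append_four(data):
    """Hypothetical operation for t1: add one element."""
    data.append(4)


def double_all(data):
    """Hypothetical operation for t2: double every element in place."""
    data[:] = [x * 2 for x in data]


def run_in_order(first, second):
    """Run two operations on a fresh list, each in its own thread, in a fixed order."""
    data = [1, 2, 3]
    for op in (first, second):
        t = threading.Thread(target=op, args=(data,))
        t.start()
        t.join()  # finish this thread before starting the next one
    return data


print(run_in_order(append_four, double_all))  # [2, 4, 6, 8]
print(run_in_order(double_all, append_four))  # [2, 4, 6, 4]
```

Without the forced ordering, which of the two results you get is up to the thread scheduler.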

This, and a few more reasons, made it convenient to execute just one thread at a time.

On a side note, the GIL mainly hurts CPU-bound tasks. For I/O-bound tasks, a thread releases the GIL while it waits on I/O, so multi-threading can still be useful there.
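A quick sketch of the I/O-bound case. Here `time.sleep` stands in for a blocking network or disk call (an assumption for illustration). Like real blocking I/O, it releases the GIL while waiting, so the threads overlap.

```python
import threading
import time


def fake_io(delay):
    """Hypothetical I/O call: sleeping releases the GIL, like blocking I/O does."""
    time.sleep(delay)


def run_sequential(n_tasks=4, delay=0.2):
    """Run the fake I/O calls one after another; return elapsed seconds."""
    start = time.perf_counter()
    for _ in range(n_tasks):
        fake_io(delay)
    return time.perf_counter() - start


def run_threaded(n_tasks=4, delay=0.2):
    """Run each fake I/O call in its own thread; return elapsed seconds."""
    threads = [
        threading.Thread(target=fake_io, args=(delay,))
        for _ in range(n_tasks)
    ]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


print(f"sequential: {run_sequential():.2f} s")
print(f"threaded:   {run_threaded():.2f} s")
```

The threaded run should take roughly one `delay` instead of `n_tasks` of them, even with the GIL enabled.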

2) If multi-processing works, why not use that as a workaround?

This is easier said than done.

Unlike threads, which share the same memory space, processes are isolated.

As a result, they cannot directly share data as threads do.

While there are inter-process communication (IPC) mechanisms like pipes, queues, or shared memory to exchange information between processes, they add a ton of complexity.
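A small sketch of the difference, with hypothetical helper names. A child process that appends to a module-level list only changes its own copy, so the parent has to receive the result through something like a `multiprocessing.Queue`.

```python
import multiprocessing as mp

results = []  # module-level list; every process works on its own copy


def append_square(n):
    """Append to the list directly; this works across threads but not processes."""
    results.append(n * n)


def send_square(n, queue):
    """Send the result back to the parent through a queue instead."""
    queue.put(n * n)


if __name__ == "__main__":
    # Attempt 1: mutate the list inside a child process.
    p = mp.Process(target=append_square, args=(3,))
    p.start()
    p.join()
    print("parent's list after child append:", results)  # still empty

    # Attempt 2: pass the value back explicitly via IPC.
    queue = mp.Queue()
    p = mp.Process(target=send_square, args=(3, queue))
    p.start()
    print("value received via queue:", queue.get(timeout=10))
    p.join()
```

Even in this toy case, we had to create the queue, pass it around, and read from it explicitly. With threads, a plain shared list would have been enough.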

Thankfully, the free-threaded build of Python 3.14 lets us run with the GIL disabled, which means the threads of a single process can run in parallel on multiple CPU cores.
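If you are unsure which build you are running, here is a small sketch to check. It relies on `sysconfig.get_config_var("Py_GIL_DISABLED")` and on `sys._is_gil_enabled()`, which only exists on Python 3.13+; on older versions we simply assume the GIL is on. The helper name `gil_status` is made up for illustration.

```python
import sys
import sysconfig


def gil_status():
    """Return (is_free_threaded_build, is_gil_enabled_right_now)."""
    # Set to 1 on free-threaded builds; 0 or None on regular builds.
    free_threaded_build = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))

    # sys._is_gil_enabled() was added in Python 3.13.
    # Assumption: if it is missing, the interpreter always has the GIL.
    check = getattr(sys, "_is_gil_enabled", None)
    gil_enabled = bool(check()) if check is not None else True

    return free_threaded_build, gil_enabled


build, enabled = gil_status()
print(f"free-threaded build: {build}, GIL enabled: {enabled}")
```

On a free-threaded build, the GIL is off by default, and it can be toggled with the `PYTHON_GIL` environment variable or the `-X gil` command-line option. On a regular build, it cannot be turned off.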

This video depicts the run-time difference:

We have been testing Python 3.14 lately, and we’ll share these updates in a detailed newsletter issue soon.

That said, if you want to get hands-on with actual GPU programming using CUDA and learn how CUDA organizes a GPU's threads, blocks, and grids (with visuals), we covered it here: Implementing (Massively) Parallelized CUDA Programs From Scratch Using CUDA Programming.

👉 Over to you: What are some other reasons for enforcing GIL in Python?

Thanks for reading!